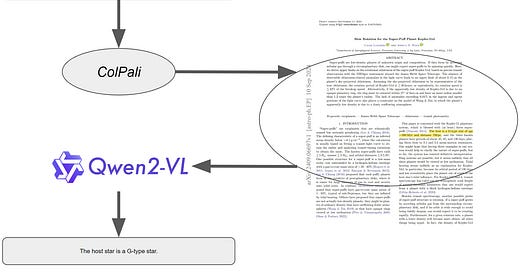

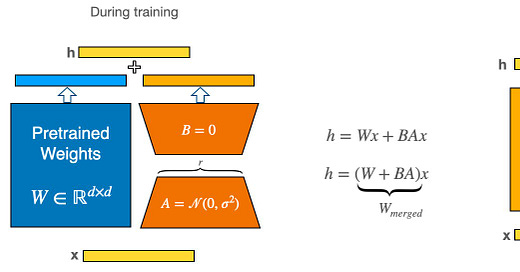

Gemma 3n: Fine-Tuning, Inference, and Submodel Extraction

Running Gemma 3n with vLLM and fine-tuning with TRL

The Kaitchup – AI on a Budget

Weekly tutorials, tips, and news on fine-tuning, running, and serving large language models on your computer. The Kaitchup also publishes two new AI notebooks every week.