Multimodal RAG with ColPali and Qwen2-VL on Your Computer

Retrieve and exploit information from PDFs without OCR

A standard Retrieval-Augmented Generation (RAG) system retrieves chunks of text from documents and incorporates them into the context of a user’s prompt. By leveraging in-context learning, a large language model (LLM) can use the retrieved information to provide more relevant and accurate answers.
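To make the retrieve-then-inject loop concrete, here is a minimal sketch of a text-only RAG step. It is a toy illustration, not the pipeline used in this article: the `embed`, `cosine`, `retrieve`, and `build_prompt` helpers are hypothetical names, the "embedding" is a simple bag-of-words count rather than a neural encoder, and the example chunks are made up.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy bag-of-words 'embedding' (assumption: a real system uses a neural encoder)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query, chunks, k=2):
    """Return the k chunks most similar to the query."""
    q = embed(query)
    ranked = sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)
    return ranked[:k]


def build_prompt(query, chunks, k=2):
    """Inject the retrieved chunks into the context of the user's prompt."""
    context = "\n".join(f"- {c}" for c in retrieve(query, chunks, k))
    return f"Use the context to answer.\n\nContext:\n{context}\n\nQuestion: {query}"


# Made-up document chunks standing in for an indexed corpus.
chunks = [
    "Mars has two small moons, Phobos and Deimos.",
    "The Great Red Spot is a storm on Jupiter.",
    "Saturn's rings are made mostly of water ice.",
]

prompt = build_prompt("How many moons does Mars have?", chunks, k=1)
print(prompt)  # this string is what would be sent to the LLM
```

The LLM never sees the whole corpus, only the chunks that the retriever ranked highest, which is why the quality of the indexed chunks matters so much.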

However, what if the documents contain a lot of tables, plots, and images?

A standard RAG system will often miss this additional information. To extract text from sources such as PDF files, traditional RAG systems rely on OCR tools. Unfortunately, OCR accuracy tends to be low for documents with complex formatting, tables, or images. As a result, irrelevant or misformatted text chunks may be indexed and, once retrieved and injected into the LLM’s context, degrade the quality of its answers.

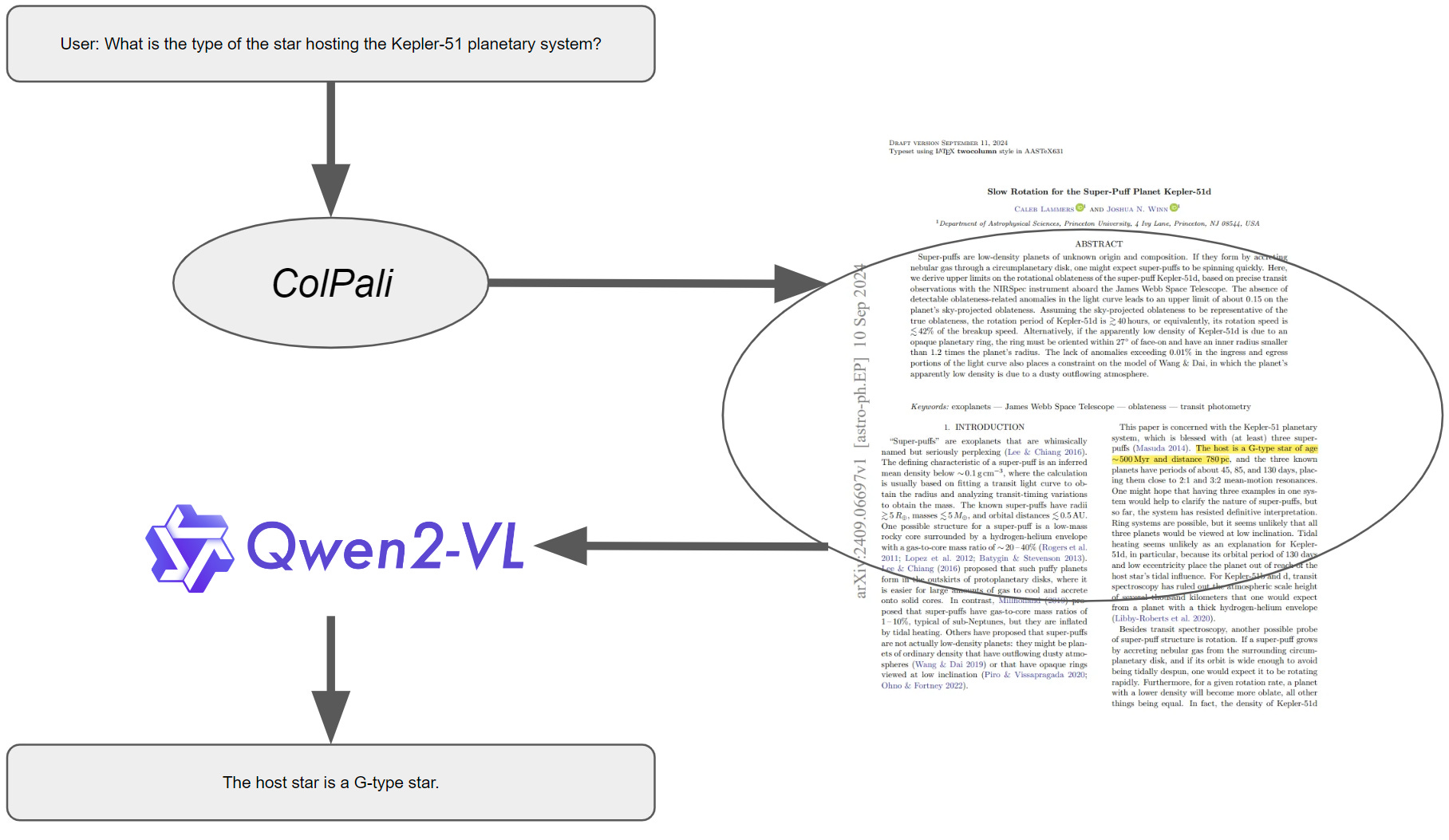

To avoid relying on OCR and to harness additional information from images, plots, and tables, we can implement a multimodal RAG system. By using a vision-language model (VLM) like Qwen2-VL or Pixtral, we can build a RAG system capable of reading both text and visual data.

In this article, I explain how a multimodal RAG system works. We’ll then see how to set one up, using ColPali to chunk and encode images and PDF documents, and Qwen2-VL to process and exploit the retrieved multimodal information to answer user prompts. As an example, I applied this approach to a complex scientific paper from arXiv’s Earth and Planetary Astrophysics section.

Here’s a notebook that demonstrates how to implement a multimodal RAG system using ColPali and Qwen2-VL:

The notebook consumes around 27 GB of GPU VRAM. I used RunPod’s A40 (referral link) to run it, but it also works with Google Colab’s A100 (which is much more expensive). If you want to run it on a consumer GPU with less than 24 GB of VRAM, you can use a quantized version of Qwen2-VL-7B-Instruct (GPTQ or AWQ) or Qwen2-VL-2B-Instruct. You will find them in the Qwen2-VL collection.
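To get a feel for why the quantized or smaller variants help, here is a rough back-of-the-envelope sketch of the memory taken by the model weights alone. It rests on several assumptions: parameter counts are taken nominally from the model names (7B and 2B; the real counts differ somewhat), the unquantized models are assumed to be loaded in 16-bit precision, and the GPTQ/AWQ checkpoints are assumed to be 4-bit. The `weight_memory_gb` helper is a hypothetical name.

```python
def weight_memory_gb(num_params, bits_per_param):
    """Approximate memory for the model weights only, in GB (10^9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


# Assumptions: nominal parameter counts from the model names,
# 16-bit weights by default, 4-bit weights for GPTQ/AWQ.
configs = [
    ("Qwen2-VL-7B-Instruct, 16-bit", 7e9, 16),
    ("Qwen2-VL-7B-Instruct, 4-bit (GPTQ/AWQ)", 7e9, 4),
    ("Qwen2-VL-2B-Instruct, 16-bit", 2e9, 16),
]

for name, params, bits in configs:
    print(f"{name}: ~{weight_memory_gb(params, bits):.1f} GB for weights")
```

These figures are lower bounds. The total reported above also covers the ColPali retriever, activations, and the KV cache for the image tokens, and quantized checkpoints carry a small overhead for scales and zero points.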