Combine Multiple LoRA Adapters for Llama 2

Add skills to your LLM without fine-tuning new adapters

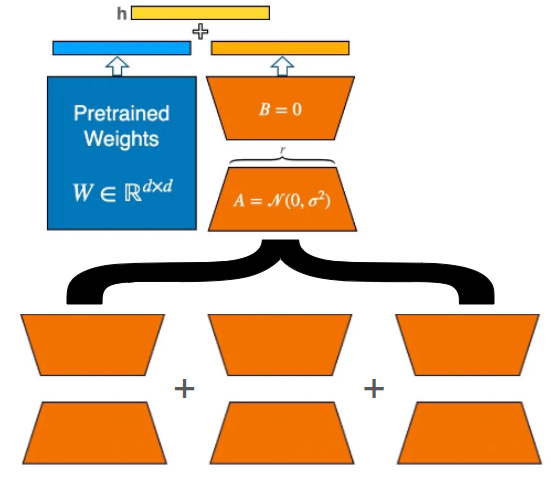

Fully fine-tuning a pre-trained large language model (LLM) for different tasks is very costly. Instead, we can freeze the parameters of the LLM while only fine-tuning a few million trainable parameters added through a LoRA adapter.
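To give a rough sense of what "a few million trainable parameters" means, here is a minimal back-of-the-envelope sketch. A LoRA adapter attaches two small matrices to a frozen weight matrix, so it adds `rank * (d_in + d_out)` parameters per targeted module. The hidden size (4096) and layer count (32) are those of Llama 2 7B. The rank of 16 and the choice of two targeted projections per layer are assumptions made purely for illustration, not necessarily the settings used in this article.

```python
def lora_trainable_params(d_in: int, d_out: int, rank: int) -> int:
    """Parameters added by one LoRA pair: A is (rank x d_in), B is (d_out x rank)."""
    return rank * (d_in + d_out)

hidden_size = 4096      # Llama 2 7B hidden size
num_layers = 32         # Llama 2 7B decoder layers
rank = 16               # assumption: illustrative LoRA rank
targets_per_layer = 2   # assumption: e.g. the query and value projections

# Each targeted projection in Llama 2 7B is a 4096 x 4096 matrix.
per_module = lora_trainable_params(hidden_size, hidden_size, rank)
total = per_module * targets_per_layer * num_layers

print(f"{per_module:,} trainable parameters per targeted module")
print(f"{total:,} trainable parameters in total")
```

Under these assumptions, the adapter holds about 8.4 million trainable parameters, while the roughly 7 billion parameters of the base model stay frozen.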

In other words, we only need to fine-tune an adapter to get the model to perform a target task. For instance, if we want to turn a pre-trained LLM into a translation model, we would fine-tune an adapter for translation. We can fine-tune one adapter for each task that we want the LLM to perform.

But can we combine several adapters to get one single multi-task adapter?

For instance, if we have one adapter for translation and one adapter for summarization, can we combine both of them so that the LLM can do translation and summarization?

In this article, I show how to combine multiple LoRA adapters into a single multi-task adapter. We will see that it is very simple and that the resulting adapter can be as good as the adapters used for the combination.

Using Llama 2 7B, we will see how to combine an adapter fine-tuned for translation with another adapter fine-tuned for chat. With the resulting adapter, we will obtain a Llama 2 model that can both translate and chat.
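To build some intuition for why combining adapters can be simple, here is a toy sketch in plain Python. It assumes one possible combination strategy, concatenation along the rank dimension, which may or may not be the one used in the rest of this article. Each LoRA adapter contributes a low-rank update `B @ A` to a frozen weight matrix. Stacking the `B` matrices side by side and the `A` matrices on top of each other yields a single adapter whose update equals the sum of the individual updates. The matrices and the helper names `matmul` and `add` are made up for illustration, and the LoRA scaling factor is ignored.

```python
def matmul(X, Y):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*Y)] for row in X]

def add(X, Y):
    """Element-wise sum of two matrices with the same shape."""
    return [[a + b for a, b in zip(rx, ry)] for rx, ry in zip(X, Y)]

# Toy adapter 1 (rank 1) for a 2 x 3 weight matrix: B1 is 2 x 1, A1 is 1 x 3.
B1 = [[1], [2]]
A1 = [[1, 0, 2]]

# Toy adapter 2 (rank 2) for the same weight matrix: B2 is 2 x 2, A2 is 2 x 3.
B2 = [[0, 1], [3, 1]]
A2 = [[1, 1, 0], [0, 2, 1]]

# Applying both adapters separately adds both low-rank updates to the weights.
sum_of_updates = add(matmul(B1, A1), matmul(B2, A2))

# Concatenation: B matrices side by side (2 x 3), A matrices stacked (3 x 3).
B_cat = [row1 + row2 for row1, row2 in zip(B1, B2)]
A_cat = A1 + A2
combined_update = matmul(B_cat, A_cat)

# The single rank-3 adapter reproduces the sum of the two original updates.
assert combined_update == sum_of_updates
```

The combined adapter has a rank equal to the sum of the original ranks, so it stays small compared with the base model.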

I have also prepared a notebook that runs all the code explained in this article. You can find it here: