Hi Everyone,

In this edition of The Weekly Kaitchup:

IFBench: A Good Alternative to IFEval?

ERNIE 4.5: Multimodal and Reasoning MoE Models

Unsloth’s Gemma 3n Notebook and Findings

IFBench: A Good Alternative to IFEval?

When testing a new language model, one of the first questions we ask is: How well does it follow instructions? A model might be highly knowledgeable or excel at reasoning, but that doesn’t matter much if it can’t understand your prompt or adhere to specific instructions.

Despite the importance of instruction-following, benchmarks that truly evaluate this ability are either rare or not very robust.

IFEval is a solid benchmark in this space. I use it frequently, especially when evaluating quantized models, as it often uncovers issues that more generic, classification-style benchmarks like MMLU miss. However, IFEval’s popularity has become a double-edged sword. Since many users rely on it, LLM developers increasingly optimize (or overfit) their models specifically for it. As a result, it’s possible to find models that score 80%+ on IFEval while still performing poorly on real-world instruction-following tasks.

To address this, AI2 has introduced a new benchmark: IFBench, designed to provide a more rigorous and diverse evaluation of a model’s ability to follow instructions accurately.

GitHub: allenai/IFBench

IFBench introduces 58 diverse and challenging constraints, including tasks like counting, formatting, and copying, that provide a more rigorous test of a model’s ability to generalize instruction-following behavior. Interestingly, even state-of-the-art models like GPT-4.1 and Claude 3.7 Sonnet score below 50% on IFBench, highlighting a surprising weakness: many leading LLMs still struggle to reliably follow instructions outside of familiar patterns.
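These constraints are useful because a program can check them, with no LLM judge involved. The sketch below shows what such checkers might look like for the three categories mentioned above. The function names and exact rules are my own illustrations, not IFBench’s actual constraint implementations.

```python
from typing import Callable, List

# Hypothetical checkers in the spirit of IFBench's constraint categories.
# They are illustrations only, not the benchmark's real implementations.

def check_word_count(response: str, n: int) -> bool:
    """Counting: the response must contain exactly n words (punctuation-only tokens ignored)."""
    words = [tok for tok in response.split() if any(ch.isalnum() for ch in tok)]
    return len(words) == n

def check_bullet_list(response: str, n_bullets: int) -> bool:
    """Formatting: the response must be exactly n_bullets lines, each starting with '- '."""
    lines = [line.strip() for line in response.strip().splitlines() if line.strip()]
    return len(lines) == n_bullets and all(line.startswith("- ") for line in lines)

def check_copy_prefix(response: str, instruction: str) -> bool:
    """Copying: the response must begin by repeating the instruction verbatim."""
    return response.startswith(instruction)

def constraint_accuracy(response: str, checks: List[Callable[[str], bool]]) -> float:
    """Fraction of constraints that the response satisfies."""
    return sum(check(response) for check in checks) / len(checks)

# Prompt: "List three fruits as a bulleted list."
checks = [
    lambda r: check_bullet_list(r, 3),
    lambda r: check_word_count(r, 3),
]
print(constraint_accuracy("- apple\n- pear\n- plum", checks))  # 1.0
print(constraint_accuracy("- apple\n- pear", checks))          # 0.0
```

Because every check is deterministic, the same functions can serve as evaluation metrics or as training rewards.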

To support training for better generalization, the authors also released IFTRAIN, a collection of 29 carefully designed constraints. These are intended for techniques that rely on verifiable rewards, such as RLVR (Reinforcement Learning with Verifiable Rewards) and GRPO (Group Relative Policy Optimization), enabling more targeted improvements in instruction following. The paper also shows how to use it for training.
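To make "verifiable rewards" more concrete, here is a minimal sketch of my own. It is not the authors’ training code. A constraint checker produces a binary reward for each sampled response, and GRPO then standardizes those rewards within the group of samples drawn for the same prompt. The prompt, the responses, and the 3-word rule are toy assumptions.

```python
import statistics
from typing import Callable, List

def verifiable_reward(response: str, checks: List[Callable[[str], bool]]) -> float:
    """Binary reward: 1.0 if the response satisfies every constraint, else 0.0."""
    return 1.0 if all(check(response) for check in checks) else 0.0

def group_relative_advantages(rewards: List[float], eps: float = 1e-6) -> List[float]:
    """GRPO-style advantages: standardize each reward within its group of samples.
    This uses the population std; implementations differ on this detail."""
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)
    return [(r - mean) / (std + eps) for r in rewards]

# A group of sampled responses to one prompt: "Answer in exactly three words."
checks = [lambda r: len(r.split()) == 3]
group = ["Paris is nice.", "Paris, France is lovely.", "It is Paris.", "Paris."]
rewards = [verifiable_reward(r, checks) for r in group]
advantages = group_relative_advantages(rewards)
print(rewards)  # [1.0, 0.0, 1.0, 0.0]
```

Responses that satisfy the constraint receive a positive advantage and the others a negative one. If every sample in a group earns the same reward, all advantages are zero and that prompt contributes no learning signal. The full GRPO objective also includes a clipped policy ratio and, often, a KL penalty, which are omitted here.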

ERNIE 4.5: Multimodal and Reasoning MoE Models

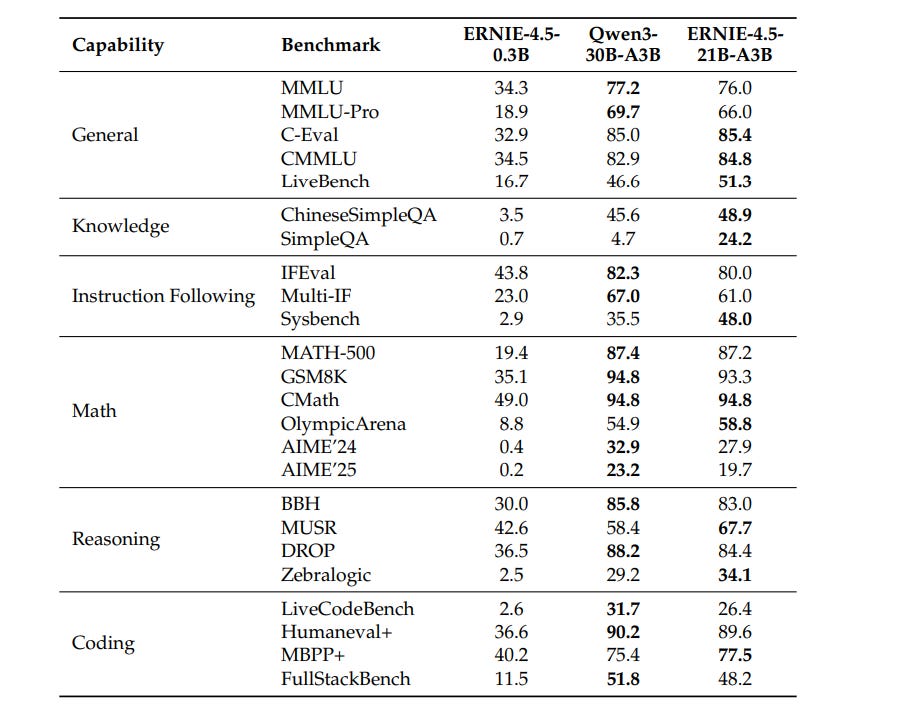

Baidu has released a series of LLMs that appear to perform on par with Qwen3 across most tasks.

Baidu's lineup includes both extremely large and very small models, ranging from 0.3B to 424B parameters. Most of them use a Mixture-of-Experts (MoE) architecture with lightweight experts, a design similar to what's seen in Qwen3 and DeepSeek.
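For readers less familiar with MoE, here is a minimal sketch of generic top-k routing in plain Python. A router scores every expert, only the k highest-scoring experts run on the token, and their outputs are mixed using renormalized gate weights. This is a simplified illustration under my own assumptions: toy experts, no shared experts, and no load-balancing loss. It is not the actual implementation used by ERNIE 4.5, Qwen3, or DeepSeek.

```python
import math
from typing import Callable, List, Sequence

Vector = List[float]

def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def moe_forward(x: Vector, router_weights: List[Vector],
                experts: List[Callable[[Vector], Vector]], k: int = 2) -> Vector:
    # Router: one logit per expert (dot product of x with that expert's routing vector).
    logits = [sum(w_i * x_i for w_i, x_i in zip(w, x)) for w in router_weights]
    probs = softmax(logits)
    # Keep only the top-k experts and renormalize their gate weights to sum to 1.
    top = sorted(range(len(experts)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    out = [0.0] * len(x)
    for i in top:
        y = experts[i](x)  # only the k selected experts are evaluated for this token
        gate = probs[i] / norm
        out = [o + gate * y_j for o, y_j in zip(out, y)]
    return out

# Toy setup: four "experts" that just scale their input by 1, 2, 3, and 4.
experts = [lambda v, s=s: [s * t for t in v] for s in (1.0, 2.0, 3.0, 4.0)]
router = [[0.0, 0.0], [0.0, 0.0], [1.0, 0.0], [2.0, 0.0]]
print(moe_forward([1.0, 0.0], router, experts, k=2))  # experts 3 and 2 are selected
```

With k much smaller than the number of experts, only a small fraction of the parameters is active for each token. That is why MoE models with many small experts can be large in total size yet relatively cheap to run.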

What stands out most to me, though, is their technical report:

It’s unusually detailed. Most LLM technical reports tend to focus heavily on evaluation results, primarily to showcase performance, while revealing very little about the actual training process. Baidu took the opposite approach: their 43-page report is packed with equations, architecture diagrams, training pipelines, and implementation details, with technical content running all the way to page 36!

If you're looking for something worthwhile to read this weekend, I highly recommend it.

The models are available here:

Hugging Face: ERNIE 4.5

The naming can be a bit misleading. Despite what the suffix might suggest, the models labeled “PT” are not pre-trained (base, as opposed to instruct) models, unlike Google’s use of “pt” for Gemma 3 base models. In this case, “PT” stands for PyTorch.

These are the versions you should use with vLLM (they’re compatible if you compile vLLM from source) or with Hugging Face Transformers.

Unsloth’s Gemma 3n Notebook and Findings

As usual, Unsloth uncovered some interesting insights while digging into Gemma 3n. Like the previous Gemma 3 models, these also suffer from activation values that frequently exceed the representable range of float16, leading to numerical instability. This is likely a side effect of training on TPUs, where bfloat16 is the preferred format and handles these values without issue.

The problem is most pronounced in the vision encoder. Google is investigating it more closely and appears to have confirmed other bugs (related to timm) that significantly hurt the model’s performance in multimodal use cases. You can find Unsloth’s analysis and notebook here.
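The float16 issue comes down to range, not precision. Float16 has 5 exponent bits and tops out at 65,504, while bfloat16 keeps float32’s 8 exponent bits (maximum of about 3.4 × 10^38) and gives up mantissa bits instead. Below is a small pure-Python sketch that emulates both formats using the standard struct module. The 300.0 activation and the squaring step are my own toy illustration, not values measured on Gemma 3n.

```python
import math
import struct

FP16_MAX = 65504.0  # largest finite IEEE 754 half-precision (float16) value

def to_float16(x: float) -> float:
    """Round x to float16. Values beyond the float16 range become +/-inf."""
    try:
        return struct.unpack("<e", struct.pack("<e", x))[0]
    except OverflowError:
        # struct refuses to pack out-of-range values; fp16 hardware would yield inf.
        return math.copysign(math.inf, x)

def to_bfloat16(x: float) -> float:
    """Round x to bfloat16: keep the top 16 bits of its float32 encoding,
    rounding to nearest-even on the 16 dropped bits (finite inputs only)."""
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    bits += 0x7FFF + ((bits >> 16) & 1)
    bits &= 0xFFFF0000
    return struct.unpack("<f", struct.pack("<I", bits))[0]

# An activation of 300 is harmless on its own, but squaring it (as a norm layer's
# sum of squares would) gives 90,000, which is above FP16_MAX.
activation = 300.0
print(to_float16(activation * activation))   # inf
print(to_bfloat16(activation * activation))  # 90112.0 (coarser, but finite)
```

A single inf like this spreads through the following layers as inf or NaN. That is why running these models in bfloat16 or float32, where the hardware supports it, avoids the instability.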

My guide on fine-tuning Gemma 3n for text-only applications with TRL/Transformers is here:

The Salt

The Salt is my other newsletter that takes a more scientific approach. In The Salt, I primarily feature short reviews of recent papers (for free), detailed analyses of noteworthy publications, and articles centered on LLM evaluation.

In The Weekly Salt, I reviewed:

⭐ Tower+: Bridging Generality and Translation Specialization in Multilingual LLMs

SparseLoRA: Accelerating LLM Fine-Tuning with Contextual Sparsity

FineWeb2: One Pipeline to Scale Them All -- Adapting Pre-Training Data Processing to Every Language

That’s all for this week.

If you like reading The Kaitchup, consider sharing it with friends and coworkers (there is a 20% discount for group subscriptions):

Have a nice weekend!