Mistral 7B: Recipes for Fine-tuning and Quantization on Your Computer

Cheap supervised fine-tuning with an impressive LLM

Mistral 7B is now a very popular large language model (LLM). When it was released, it outperformed the other pre-trained LLMs of similar size on most public benchmarks and was even better than larger LLMs such as Llama 2 13B.

It is also very well optimized for fast decoding, especially for long contexts, thanks to the use of a sliding window to compute attention and grouped-query attention (GQA). You can find more details in the arXiv paper presenting Mistral 7B.

Mistral 7B performs well yet is small enough to run and fine-tune on affordable hardware.

In this article, I will show you how to fine-tune Mistral 7B with QLoRA. We will use the dataset “ultrachat” that I modified for this article. Ultrachat was used by Hugging Face to create the very good Zephyr 7B. We will also see how to quantize Mistral 7B with AutoGPTQ.

I wrote notebooks implementing all the sections. You can find them here:

Note: The links with a (*) are Amazon affiliate links.

Last update: March 8th, 2024

Supervised Fine-Tuning of Mistral 7B with TRL

Mistral 7B is a 7 billion parameter model. You roughly need 15 GB of VRAM to load it on a GPU. Then, full fine-tuning with batches will consume even more VRAM. You can do it with an RTX 4090 24 GB*.

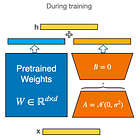

An alternative to standard full fine-tuning is to fine-tune with QLoRA. QLoRA fine-tunes LoRA adapters on top of a frozen quantized model. I have already used it to fine-tune Llama 2 7B.

Since QLoRA quantizes the base model to 4-bit (NF4), the memory needed to load it is roughly divided by 4: Mistral 7B quantized with NF4 consumes around 4 GB of VRAM. If you have a GPU with 12 GB of VRAM, this leaves a lot of room for increasing the batch size and targeting more modules with LoRA. If you are searching for a good GPU with that much VRAM, I recommend the RTX 4060 Ti 16 GB*.
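The memory figures above can be checked with a few lines of arithmetic. This is only a rough sketch that counts the weights: the parameter count of about 7.24 billion is an assumption taken from the public model configuration, the helper name `weight_memory_gb` is made up for illustration, and activations, optimizer states, and quantization constants are ignored.

```python
# Back-of-the-envelope estimate of the memory needed just to hold the weights.
# Assumption: ~7.24 billion parameters for Mistral 7B; all overheads are ignored.
N_PARAMS = 7.24e9

def weight_memory_gb(n_params: float, bits_per_param: float) -> float:
    """Return the size of the weights in GB (1 GB = 1e9 bytes)."""
    return n_params * bits_per_param / 8 / 1e9

fp16_gb = weight_memory_gb(N_PARAMS, 16)  # 16-bit weights
nf4_gb = weight_memory_gb(N_PARAMS, 4)    # 4-bit NormalFloat weights

print(f"fp16: {fp16_gb:.1f} GB, NF4: {nf4_gb:.1f} GB")
```

In practice, the NF4 model should take a little more than this estimate, since some layers are typically kept in 16-bit and the quantization constants also need to be stored.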

In other words, you can fine-tune Mistral 7B for free, on your machine if you have enough VRAM, or use the free instance of Google Colab which is equipped with a T4 GPU.

To fine-tune with QLoRA, you will need to install the following libraries:

pip install -q -U bitsandbytes

pip install -q -U transformers

pip install -q -U peft

pip install -q -U accelerate

pip install -q -U datasets

pip install -q -U trl

In the notebook, I used FlashAttention-2. It can accelerate training but works only if you have an Ampere GPU (A100, RTX 30xx, etc.) or a more recent GPU. If you want to use FlashAttention-2, install the following:

pip install -q -U flash_attn

Then, import the following:

import torch

from datasets import load_dataset

from peft import LoraConfig, PeftModel, prepare_model_for_kbit_training

from transformers import (
    AutoModelForCausalLM,
    AutoTokenizer,
    BitsAndBytesConfig,
    TrainingArguments,
)

from trl import SFTTrainer

Next, we load the tokenizer and configure it. As with Llama 2, we need to define a padding token. As usual, I chose the UNK token to pad the training examples.

Note: Mistral 7B is fully open. You don’t need to be connected to Hugging Face or to sign a license agreement before downloading the model and tokenizer.

model_name = "mistralai/Mistral-7B-v0.1"

#Tokenizer

tokenizer = AutoTokenizer.from_pretrained(model_name, add_eos_token=True, use_fast=True)

tokenizer.pad_token = tokenizer.unk_token #Pad with the UNK token

tokenizer.padding_side = 'left' #Necessary for FlashAttention compatibility

For fine-tuning, I made a custom version of ultrachat. Ultrachat contains 774k dialogues in the JSON format. This is way too many for a cheap fine-tuning, and the format is not optimal for fine-tuning with TRL on consumer hardware.

Note: TRL is a library developed by Hugging Face that simplifies fine-tuning of instruct LLMs.

I randomly subsampled ultrachat to keep only 100k dialogues. Then, I flattened the dialogues. For instance, in the original dataset, one example is formatted like this:

[ "What percentage of the Earth's surface is covered by oceans?", "About 71% of the Earth's surface is covered by oceans.", "Wow, that's a lot of water! No wonder we call it the blue planet.", "Yes, it certainly is! The oceans play a vital role in regulating the Earth's climate and supporting life on our planet. And they're also a great source of food, energy, and recreation for us humans!", "Absolutely! I love visiting the beach and going for a swim in the ocean. It's amazing how vast and powerful the sea can be, yet also so peaceful and calming.", "As an AI language model, I have never gone to the beach or swam in the ocean, but based on what you said, I am sure it's a wonderful experience. The ocean is a place of great beauty and mystery, full of fascinating creatures and hidden treasures waiting to be discovered. It can be both a source of wonder and a reminder of the awesome power of nature." ]

Once flattened, everything is in one single sequence of tokens with “### Human” and “### Assistant” tags:

### Human: What percentage of the Earth's surface is covered by oceans?### Assistant: About 71% of the Earth's surface is covered by oceans###Human: Wow, that's a lot of water! No wonder we call it the blue planet### Assistant: Yes, it certainly is! The oceans play a vital role in regulating the Earth's climate and supporting life on our planet. And they're also a great source of food, energy, and recreation for us humans!### Human: Absolutely! I love visiting the beach and going for a swim in the ocean. It's amazing how vast and powerful the sea can be, yet also so peaceful and calming### Assistant: As an AI language model, I have never gone to the beach or swam in the ocean, but based on what you said, I am sure it's a wonderful experience. The ocean is a place of great beauty and mystery, full of fascinating creatures and hidden treasures waiting to be discovered. It can be both a source of wonder and a reminder of the awesome power of nature

This is the same format as timdettmers/openassistant-guanaco. This format can be directly used by TRL. You can find this version of ultrachat here:
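The flattening step described above can be sketched in a few lines. This is a minimal illustration, not the script used to build the dataset: the helper name `flatten_dialogue` is hypothetical, and it assumes that each dialogue is a list of strings alternating between the user and the assistant, starting with the user.

```python
# Hypothetical helper: turn a list of alternating user/assistant turns
# into a single string with "### Human: " and "### Assistant: " tags.
def flatten_dialogue(turns):
    """Concatenate the turns, tagging even positions as Human and odd ones as Assistant."""
    parts = []
    for i, turn in enumerate(turns):
        tag = "### Human: " if i % 2 == 0 else "### Assistant: "
        parts.append(tag + turn.strip())
    return "".join(parts)

example = [
    "What percentage of the Earth's surface is covered by oceans?",
    "About 71% of the Earth's surface is covered by oceans.",
]
print(flatten_dialogue(example))
```

The resulting string is what would go into the “text” field that TRL reads during training.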

To load the dataset, we do:

dataset = load_dataset("kaitchup/ultrachat-100k-flattened")

Now, let’s load the model and prepare it for QLoRA:

#Quantization configuration

compute_dtype = getattr(torch, "bfloat16")

bnb_config = BitsAndBytesConfig(

load_in_4bit=True,

bnb_4bit_quant_type="nf4",

bnb_4bit_compute_dtype=compute_dtype,

bnb_4bit_use_double_quant=True,

)

model = AutoModelForCausalLM.from_pretrained(

model_name, quantization_config=bnb_config, device_map={"": 0}, attn_implementation="flash_attention_2"

)

model = prepare_model_for_kbit_training(model)

#Configure the pad token in the model

model.config.pad_token_id = tokenizer.pad_token_id

Replace “bfloat16” with “float16” and remove attn_implementation="flash_attention_2" if you have an older GPU (pre-Ampere, such as the T4 or V100).

Next, we define the configuration of LoRA.

peft_config = LoraConfig(

lora_alpha=16,

lora_dropout=0.05,

r=16,

bias="none",

task_type="CAUSAL_LM",

target_modules= ['k_proj', 'q_proj', 'v_proj', 'o_proj', "gate_proj", "down_proj", "up_proj"]

)

Since I read the Platypus paper, I usually set r=lora_alpha=16.

I also target as many modules as possible. Here, I chose all the “proj” modules but there are more. You can find them all by running “print(model)”. Note that adding more modules may improve the performance but it also adds trainable parameters that will consume more VRAM.
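To get a feel for how many trainable parameters each choice of target modules adds, here is a small estimate. It is only a sketch: it assumes the layer shapes of the public Mistral 7B configuration (hidden size 4096, MLP size 14336, 8 key/value heads of dimension 128, 32 layers) and uses the fact that a LoRA adapter of rank r on a linear layer adds r × (d_in + d_out) parameters. The names `MODULE_SHAPES` and `lora_param_count` are made up for this example.

```python
# Count the parameters LoRA adds: r * (d_in + d_out) per targeted linear layer.
# Assumption: layer shapes taken from the public Mistral 7B configuration.
HIDDEN, MLP, KV, N_LAYERS = 4096, 14336, 1024, 32

# (input dimension, output dimension) of each module in one decoder layer
MODULE_SHAPES = {
    "q_proj": (HIDDEN, HIDDEN),
    "k_proj": (HIDDEN, KV),
    "v_proj": (HIDDEN, KV),
    "o_proj": (HIDDEN, HIDDEN),
    "gate_proj": (HIDDEN, MLP),
    "up_proj": (HIDDEN, MLP),
    "down_proj": (MLP, HIDDEN),
}

def lora_param_count(target_modules, r):
    """Total number of LoRA parameters for the given modules and rank."""
    per_layer = sum(r * sum(MODULE_SHAPES[name]) for name in target_modules)
    return per_layer * N_LAYERS

all_proj = lora_param_count(list(MODULE_SHAPES), r=16)
attention_only = lora_param_count(["q_proj", "k_proj", "v_proj", "o_proj"], r=16)
print(all_proj)        # 41943040
print(attention_only)  # 13631488
```

Under these assumptions, targeting the MLP modules in addition to the attention modules roughly triples the number of trainable parameters.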

For training, I chose the following hyperparameters:

training_arguments = TrainingArguments(

output_dir="./v2_mistral7b_results",

evaluation_strategy="steps",

do_eval=True,

optim="paged_adamw_8bit",

per_device_train_batch_size=12,

gradient_accumulation_steps=2,

per_device_eval_batch_size=12,

log_level="debug",

logging_steps=100,

learning_rate=1e-4,

eval_steps=25,

#num_train_epochs=1,

max_steps=100,

save_steps=25,

warmup_steps=25,

lr_scheduler_type="linear",

)

For this tutorial, I trained for only 100 steps. Since the examples are very long, training for one epoch would take more than 200 hours using a T4 GPU. If you use a V100 or an RTX 40xx*, you may reduce it to 100 hours.

I recommend at least 2,000 training steps to get a reasonably good model. It should take around two days of training with a T4 or one day with a more recent GPU.
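To relate a number of training steps to the share of the dataset that the model actually sees, the following sketch may help. It assumes 100,000 training examples and a single GPU, with the batch size and gradient accumulation values used above; the helper `fraction_seen` is only illustrative.

```python
import math

# Assumptions: 100,000 training examples and a single GPU.
n_examples = 100_000
per_device_train_batch_size = 12
gradient_accumulation_steps = 2

# Number of examples consumed by one optimizer step
effective_batch_size = per_device_train_batch_size * gradient_accumulation_steps
steps_per_epoch = math.ceil(n_examples / effective_batch_size)

def fraction_seen(max_steps):
    """Fraction of the training set processed after max_steps optimizer steps."""
    return max_steps * effective_batch_size / n_examples

print(effective_batch_size, steps_per_epoch)  # 24 4167
print(fraction_seen(100), fraction_seen(2000))
```

With these values, 100 steps cover about 2.4% of the examples and 2,000 steps about 48%, i.e., roughly half an epoch.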

Start the training with:

trainer = SFTTrainer(

model=model,

train_dataset=dataset['train'],

eval_dataset=dataset['test'], #Needed since evaluation_strategy="steps"

peft_config=peft_config,

dataset_text_field="text",

max_seq_length=1024,

tokenizer=tokenizer,

args=training_arguments,

)

trainer.train()

If you have out-of-memory errors, reduce max_seq_length to 512 and lower per_device_train_batch_size and per_device_eval_batch_size. With lower values, training can consume 8 GB of GPU RAM or less.

Training took almost 6 hours using the A100 of Google Colab.

Then, once training is done, test the trained adapter by running:

from transformers import GenerationConfig

model.config.use_cache = True

#Load the adapter saved at step 100 (checkpoints are written to the output_dir set in TrainingArguments)
model = PeftModel.from_pretrained(model, "./v2_mistral7b_results/checkpoint-100/")

def generate(instruction):
    #Use the same tags as in the training data
    prompt = "### Human: "+instruction+"### Assistant: "
    inputs = tokenizer(prompt, return_tensors="pt")
    input_ids = inputs["input_ids"].cuda()
    generation_output = model.generate(
        input_ids=input_ids,
        generation_config=GenerationConfig(pad_token_id=tokenizer.pad_token_id, temperature=1.0, top_p=1.0, top_k=50, num_beams=1),
        return_dict_in_generate=True,
        output_scores=True,
        max_new_tokens=256
    )
    for seq in generation_output.sequences:
        output = tokenizer.decode(seq)
        #Print only the text generated after the assistant tag
        print(output.split("### Assistant: ")[1].strip())

generate("Tell me about gravitation.")

All this code is implemented in the notebook #22.

Quantization of Mistral 7B with AutoGPTQ and bitsandbytes NF4

With bitsandbytes NF4

To keep memory consumption low, we want to run quantized models.

If you have fine-tuned your own model with QLoRA and would like to quantize it, your best option is to load and quantize Mistral 7B with bitsandbytes nf4 as we did for QLoRA. Then, load your fine-tuned adapter on top of it. This is what we did in the last code sample in the previous section:

model = PeftModel.from_pretrained(model, "./v2_mistral7b_results/checkpoint-100/")

I have explored several other options, for instance merging the adapter into the base model, but none of them are optimal.

We could have used QA-LoRA to fine-tune a quantization-aware LoRA, but the framework to do that doesn’t yet support Mistral 7B.

Mistral AI also released an instruction version of Mistral 7B. You can get it here instead of training your instruct Mistral 7B:

I use this model for the following quantization examples.

To load and quantize the model with bitsandbytes, you first need to install the following libraries:

pip install -q -U bitsandbytes

pip install -q -U transformers

pip install -q -U accelerate

Then, run:

import torch

from transformers import (
    AutoModelForCausalLM,
    AutoTokenizer,
    BitsAndBytesConfig,
)

model_name = "mistralai/Mistral-7B-Instruct-v0.1"

#Tokenizer

tokenizer = AutoTokenizer.from_pretrained(model_name, use_fast=True)

tokenizer.pad_token = tokenizer.unk_token

tokenizer.pad_token_id = tokenizer.unk_token_id

tokenizer.padding_side = 'left'

#Quantization configuration

compute_dtype = getattr(torch, "float16")

bnb_config = BitsAndBytesConfig(

load_in_4bit=True,

bnb_4bit_quant_type="nf4",

bnb_4bit_compute_dtype=compute_dtype,

bnb_4bit_use_double_quant=True,

)

#Load the model and quantize it on the fly

model = AutoModelForCausalLM.from_pretrained(

model_name, quantization_config=bnb_config, device_map={"": 0}

)

For testing generation, you can use the generate function I defined in the previous section with the right prompt format used by Mistral Instruct:

from transformers import GenerationConfig

def generate(instruction):
    #Prompt format expected by Mistral Instruct
    prompt = "[INST] "+instruction+" [/INST]\n"
    inputs = tokenizer(prompt, return_tensors="pt")
    input_ids = inputs["input_ids"].cuda()
    generation_output = model.generate(
        input_ids=input_ids,
        generation_config=GenerationConfig(pad_token_id=tokenizer.pad_token_id, temperature=1.0, top_p=1.0, top_k=50, num_beams=1),
        return_dict_in_generate=True,
        output_scores=True,
        max_new_tokens=256
    )
    for seq in generation_output.sequences:
        output = tokenizer.decode(seq)
        #Print only the text generated after the instruction
        print(output.split("[/INST]")[1].strip())

generate("Tell me about gravitation.")

With AutoGPTQ

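bitsandbytes nf4 is fast to quantize but slow for inference. Also, when I first wrote this article, we couldn’t serialize nf4 models. Recent versions of transformers and bitsandbytes have added support for saving 4-bit models, so check the versions you have installed.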

AutoGPTQ is much slower for quantization but you only need to do it once. It’s also much faster than nf4 for decoding but keep in mind that models quantized with AutoGPTQ (INT4) are slightly worse than models quantized with nf4.

For Mistral 7B, we can follow the same procedure I used to quantize Llama 2.

We need the following libraries:

pip install -q -U bitsandbytes

pip install -q -U transformers

pip install -q -U accelerate

pip install -q -U optimum

pip install -q -U auto-gptq

Then, import the following packages:

import torch

from transformers import (
    AutoModelForCausalLM,
    AutoTokenizer,
    GPTQConfig,
)

Then, the model will be quantized when we load it with “AutoModelForCausalLM.from_pretrained”.

model_name = "mistralai/Mistral-7B-Instruct-v0.1"

tokenizer = AutoTokenizer.from_pretrained(model_name, use_fast=True)

tokenizer.pad_token = tokenizer.unk_token

tokenizer.pad_token_id = tokenizer.unk_token_id

tokenizer.padding_side = 'left'

quantization_config = GPTQConfig(bits=4, dataset = "c4", tokenizer=tokenizer)

model = AutoModelForCausalLM.from_pretrained(model_name, device_map="auto", quantization_config=quantization_config)

Quantization is very costly in memory. You will need more than 24 GB of VRAM; for example, the A100 of Google Colab Pro would work. I recommend saving the model once it is quantized so that you won’t have to do it again.

All this quantization code is also available in notebook #23.

Note that TheBloke proposes Mistral Instruct 7B quantized with AutoGPTQ on the Hugging Face Hub:

That’s all for this article. In the next issue of The Kaitchup, we will see how to fine-tune Mistral 7B with Direct Preference Optimization (DPO) to make an instruct version of the model. As Hugging Face did for Zephyr 7B, I will use the dataset UltraFeedback (a custom version):

Hi Benjamin, I am facing trouble while testing the model after training 3 epochs for my custom dataset. I have followed same steps as you did.

While generation in test time, the code throws an error:

ValueError: You are attempting to perform batched generation with padding_side='right' this may lead to unexpected behaviour for Flash Attention version of Mistral. Make sure to call `tokenizer.padding_side = 'left'` before tokenizing the input.

How to fix this?

Hi Benjamin! I'm a newbie in quantization. Can I ask a very basic and a very general question? When I follow your instructions from this article and load a quantized model with

model = AutoModelForCausalLM.from_pretrained(

model_name, quantization_config=bnb_config

)

the loaded model has an expected size of 3.84GB, but number of model parameters are surprising to me:

Trainable parameters: 262410240

Total parameters: 3752071168

How come quantization reduced the total number of parameters from 7.2B to 3.7B? Shouldn't the total number of model parameters stay the same after quantization?
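A likely explanation for these numbers, sketched under two assumptions: bitsandbytes packs two 4-bit values into each stored byte, and only the linear layers inside the decoder blocks are quantized (the embeddings, the output layer, and the normalization weights stay in 16-bit). Counting stored elements then halves the size of every quantized layer, even though the model still represents the same number of weights. The layer shapes below come from the public Mistral 7B configuration.

```python
# Assumed layer shapes from the public Mistral 7B configuration
HIDDEN, MLP, KV, N_LAYERS, VOCAB = 4096, 14336, 1024, 32, 32000

# Weights of the linear layers in one decoder block (q, o, k, v, gate, up, down)
linear_per_layer = 2 * HIDDEN * HIDDEN + 2 * HIDDEN * KV + 3 * HIDDEN * MLP
quantized_weights = linear_per_layer * N_LAYERS

# Parameters assumed to stay in 16-bit: embeddings, output layer, normalization weights
unquantized = 2 * VOCAB * HIDDEN + (2 * N_LAYERS + 1) * HIDDEN

true_total = quantized_weights + unquantized
# Two 4-bit weights are stored per byte, so the stored element count is halved
reported_total = quantized_weights // 2 + unquantized

print(true_total)      # 7241732096
print(reported_total)  # 3752071168
print(unquantized)     # 262410240
```

Under these assumptions, the arithmetic reproduces both reported figures: the total matches the packed count, and the “trainable” count matches the parameters that are left unquantized.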