Platypus: Dataset Curation and Adapters for Better Large Language Models

Achieve low-cost state-of-the-art on your target tasks

Note: This is a free article written for The Kaitchup and also published by Towards AI.

Meta’s Llama 2 was released one month ago, and many are working on fine-tuning it for specific tasks. Following this trend, Boston University proposes Platypus (Lee et al., 2023), Llama 2 fine-tuned with adapters and curated datasets.

As of August 16th, Platypus ranks first on the Open LLM Leaderboard.

The method proposed in this work is nothing really new: it relies on LoRA adapters and careful dataset curation. It’s nonetheless an impressive demonstration that a new state-of-the-art performance can be achieved for a given task, almost for free, using a well-curated in-domain dataset and specialized adapters.

In this article, I review and explain Platypus. I also show how you can achieve similar results using consumer hardware.

Note: When they worked on this project, QLoRA had not been released yet (as the authors mention in the paper). QLoRA can significantly lower the computational cost reported in their paper. I’ll show you how towards the end of this article.

Curate your dataset for better LLM fine-tuning

The main goal of this work is to make accurate LLMs for specific domains/tasks.

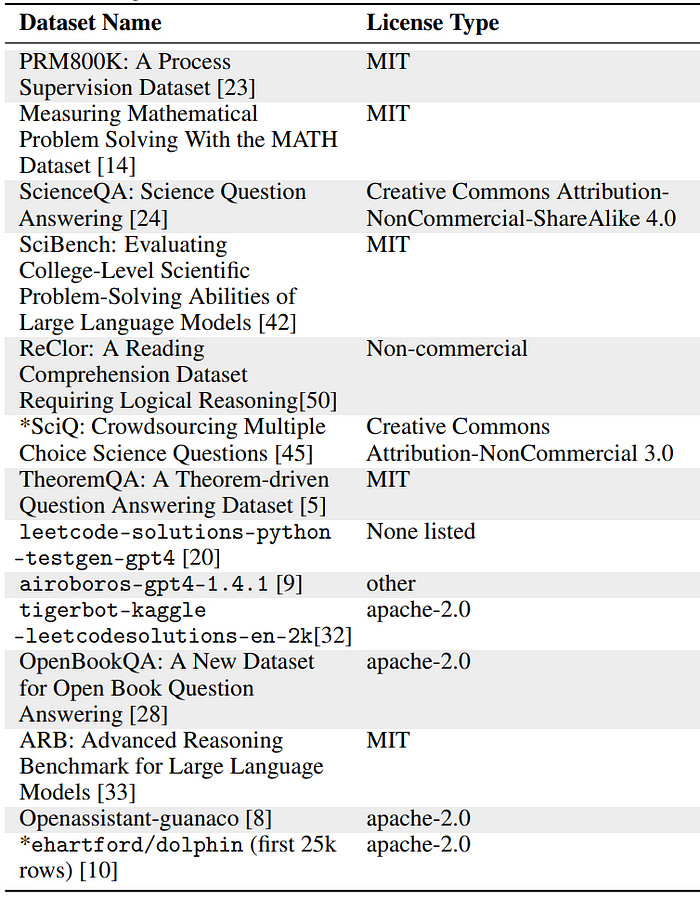

The authors’ first priority was to curate existing datasets to match their target domain, namely STEM. They hypothesize that removing training examples that are irrelevant to the target domain, as well as near-duplicate examples, would improve the accuracy of the model. It would also significantly reduce the size of the training data and, consequently, make fine-tuning more efficient.

Platypus is released as a family of chat models. The authors also gathered and filtered a collection of open-source instruction datasets.

To deduplicate the data and promote diversity among the training examples, they performed these steps:

- Remove exact duplicates, i.e., examples that appear more than once in the dataset.

- Remove examples whose SentenceTransformers embeddings have a cosine similarity higher than “80%” with another example. Note: I don’t understand what the authors mean by “80%”. Cosine similarity is a value between -1 and 1, not a percentage. I assume that they meant 0.8.

Among the examples considered near-duplicates according to cosine similarity, they kept the most verbose one.
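The deduplication steps above can be sketched as follows. This is a minimal, hypothetical implementation and not the authors’ code. Several parts are assumptions: the threshold of 0.8, the greedy “longest first” strategy for keeping the most verbose example, and the `embed` callable that stands in for a SentenceTransformers model. To keep it self-contained, the example uses toy 2-D vectors instead of real embeddings.

```python
import math
from typing import Callable, List, Sequence

def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine similarity, a value between -1 and 1 (0.0 if a vector is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0

def deduplicate(examples: List[str], embed: Callable[[str], Sequence[float]],
                threshold: float = 0.8) -> List[str]:
    # Step 1: drop exact duplicates, keeping the first occurrence of each example.
    unique = list(dict.fromkeys(examples))
    # Step 2: visit the most verbose examples first, so that among near-duplicates
    # the longest one is kept and the shorter ones are discarded.
    unique.sort(key=len, reverse=True)
    kept: List[str] = []
    kept_vecs: List[Sequence[float]] = []
    for text in unique:
        vec = embed(text)
        # Keep the example only if it is not too similar to any example already kept.
        if all(cosine(vec, other) <= threshold for other in kept_vecs):
            kept.append(text)
            kept_vecs.append(vec)
    return kept

# Toy 2-D "embeddings" standing in for SentenceTransformers vectors (hypothetical values).
toy_vectors = {
    "Explain gravity.": [1.0, 0.0],
    "Explain gravity in detail, with examples.": [0.9, 0.1],
    "How do plants make food?": [0.0, 1.0],
}
data = ["Explain gravity.", "How do plants make food?", "Explain gravity.",
        "Explain gravity in detail, with examples."]
kept = deduplicate(data, toy_vectors.__getitem__)
print(kept)
```

With real data, you could pass something like `SentenceTransformer("all-MiniLM-L6-v2").encode` as `embed`, although the choice of model here is my assumption. Note that this pairwise loop is quadratic in the number of examples, so large datasets would need a vector index instead.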

The authors were also very careful to avoid data contamination. Data contamination occurs when examples from the evaluation data are also included in the training data. When this happens, the LLM may perform unrealistically well on these examples, which makes the evaluation inaccurate.

Usually, for LLM evaluation, data contamination is handled after training by removing the contaminated examples from the evaluation data. I demonstrated how this hurts the scientific credibility of an evaluation in several of my previous articles. See, for instance, my review of the evaluation of GPT-4.

The Decontaminated Evaluation of GPT-4


What Boston University did is remarkable: they preserved the original evaluation data while filtering the training data. This keeps their results comparable with previous work and makes their evaluation easier to reproduce in future work.

Specifically, they removed from the training data the examples whose questions also appear in the evaluation data. This ensures that the LLM doesn’t see these questions during training.

They also removed all training examples containing a question with a high cosine similarity to a question from the evaluation data.
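Both decontamination filters, exact question overlap and high cosine similarity, can be sketched like this. It is a hypothetical sketch, not the authors’ code. Three things are assumptions: the 0.8 threshold (the article gives no value for this step), the `instruction` field name borrowed from Alpaca-style records, and the light normalization applied before exact matching. The toy vectors again stand in for real sentence embeddings.

```python
import math
from typing import Callable, Dict, List, Sequence

def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine similarity (0.0 if a vector is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0

def normalize(question: str) -> str:
    """Lowercase and collapse whitespace so trivial variants still match exactly."""
    return " ".join(question.lower().split())

def decontaminate(train: List[Dict[str, str]], eval_questions: List[str],
                  embed: Callable[[str], Sequence[float]],
                  threshold: float = 0.8) -> List[Dict[str, str]]:
    """Drop training examples whose question overlaps with the evaluation data."""
    eval_exact = {normalize(q) for q in eval_questions}
    eval_vecs = [embed(q) for q in eval_questions]
    clean = []
    for example in train:
        question = example["instruction"]  # hypothetical field name
        if normalize(question) in eval_exact:
            continue  # the question itself appears in the evaluation data
        vec = embed(question)
        if any(cosine(vec, e) > threshold for e in eval_vecs):
            continue  # too similar to an evaluation question
        clean.append(example)
    return clean

# Toy vectors standing in for real sentence embeddings (hypothetical values).
toy_vectors = {
    "What is the speed of light?": [1.0, 0.0],
    "How fast does light travel?": [0.95, 0.05],
    "Name a prime number.": [0.0, 1.0],
}
train = [
    {"instruction": "what is the  speed of light?", "output": "About 300,000 km/s."},
    {"instruction": "How fast does light travel?", "output": "About 300,000 km/s."},
    {"instruction": "Name a prime number.", "output": "7"},
]
clean = decontaminate(train, ["What is the speed of light?"], toy_vectors.__getitem__)
print([ex["instruction"] for ex in clean])
```

Only the training side is filtered, so the evaluation questions stay untouched. This is exactly what keeps the results comparable with previous work.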

Here are some examples of what they considered to be contamination.

Fine-tuning specialized adapters with LoRA

For fine-tuning, they simply used LoRA.

LoRA freezes the weights of the base LLM and adds trainable low-rank tensors to some of its modules. Only the parameters of these added tensors are fine-tuned; usually, they amount to only a few million parameters. This drastically reduces the computational cost of fine-tuning. With the right hyperparameters, LoRA performs as well as fine-tuning the entire base LLM.
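To make this concrete, here is a minimal pure-Python sketch of the LoRA forward pass for a single linear layer. It is not PEFT’s implementation, and the toy matrices are made up. The frozen weight `W` is left untouched, while the trainable path projects the input down to rank `r` with `A` and back up with `B`, scaled by `alpha / r`. Because `B` starts at zero, the model initially behaves exactly like the base model.

```python
from typing import List

Matrix = List[List[float]]
Vector = List[float]

def matvec(M: Matrix, x: Vector) -> Vector:
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * xi for m, xi in zip(row, x)) for row in M]

def lora_forward(W: Matrix, A: Matrix, B: Matrix, x: Vector,
                 alpha: float, r: int) -> Vector:
    """h = W x + (alpha / r) * B (A x), with W frozen and only A and B trained.

    Shapes: W is d_out x d_in, A is r x d_in, B is d_out x r.
    """
    base = matvec(W, x)               # frozen pretrained path
    update = matvec(B, matvec(A, x))  # low-rank path: down to r dims, then back up
    scale = alpha / r
    return [b + scale * u for b, u in zip(base, update)]

# Toy example with d_in = d_out = 2 and rank r = 1.
W = [[1.0, 0.0], [0.0, 1.0]]
A = [[1.0, 1.0]]
x = [1.0, 2.0]

# B is initialized to zeros, so training starts from the base model's output.
B_init = [[0.0], [0.0]]
print(lora_forward(W, A, B_init, x, alpha=2, r=1))  # [1.0, 2.0]

# After some (made-up) training, B is non-zero and shifts the output.
B_trained = [[1.0], [0.0]]
print(lora_forward(W, A, B_trained, x, alpha=2, r=1))  # [7.0, 2.0]
```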

Finding the right target modules for LoRA is not easy. Targeting all the LLM’s modules is a good rule of thumb that often yields reasonable results.

In my previous article on fine-tuning Llama 2, I found that targeting only q_proj and v_proj performs well.

The authors of Platypus instead target gate_proj, down_proj, and up_proj, following the work by He et al. (2022).
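The choice of target modules also changes how many parameters are trained. The rough count below assumes Llama 2 13B’s public configuration (hidden size 5120, intermediate size 13824, 40 decoder layers) and r = 16. It uses the fact that LoRA adds an r × in matrix and an out × r matrix to each targeted module. It is a back-of-the-envelope sketch, not output from PEFT.

```python
# Llama 2 13B dimensions (assumed from its public configuration).
HIDDEN = 5120
INTERMEDIATE = 13824
LAYERS = 40

# (in_features, out_features) of each linear module in one decoder layer.
MODULE_SHAPES = {
    "q_proj": (HIDDEN, HIDDEN),
    "k_proj": (HIDDEN, HIDDEN),
    "v_proj": (HIDDEN, HIDDEN),
    "o_proj": (HIDDEN, HIDDEN),
    "gate_proj": (HIDDEN, INTERMEDIATE),
    "up_proj": (HIDDEN, INTERMEDIATE),
    "down_proj": (INTERMEDIATE, HIDDEN),
}

def lora_params(target_modules, r):
    """Trainable LoRA parameters: each module gets A (r x in) and B (out x r)."""
    per_layer = sum(r * (MODULE_SHAPES[m][0] + MODULE_SHAPES[m][1]) for m in target_modules)
    return per_layer * LAYERS

qv = lora_params(["q_proj", "v_proj"], r=16)
mlp = lora_params(["gate_proj", "down_proj", "up_proj"], r=16)
print(f"q_proj + v_proj: {qv:,}")
print(f"gate_proj + down_proj + up_proj: {mlp:,}")
```

Under these assumptions, the Platypus choice trains roughly 2.8 times more parameters than q_proj and v_proj. Both remain a tiny fraction of the 13 billion parameters in the base model.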

They set both lora_alpha and the rank r to 16. Setting lora_alpha equal to the rank seems to be a deliberate choice. LoRA scales its update by lora_alpha/r, so with this setting the scaling factor is 1, and the update is added to the original weights without being scaled up or down. They don’t provide an ablation study showing the impact of this decision on their results.
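Written out, with W_0 the frozen weight and B A the low-rank update (the lora_alpha/r scaling is how PEFT’s LoRA applies the update), the Platypus setting gives:

```latex
h = W_0 x + \frac{\alpha}{r} B A x,
\qquad
\alpha = r = 16 \;\Rightarrow\; \frac{\alpha}{r} = \frac{16}{16} = 1
\;\Rightarrow\; h = W_0 x + B A x
```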

Fine-tuning Llama 2 13B with LoRA takes 5 hours on a single A100 80GB GPU, and fine-tuning Llama 2 70B takes 22 hours on four A100 80GB GPUs. These costs look reasonable compared to what full fine-tuning without LoRA would have cost. Nonetheless, as we will see in the remainder of this article, quantization can bring this cost further down.
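Expressed in GPU-hours, using only the figures above, the two runs compare as follows:

```latex
\text{13B: } 1~\text{GPU} \times 5~\text{h} = 5~\text{A100-hours},
\qquad
\text{70B: } 4~\text{GPUs} \times 22~\text{h} = 88~\text{A100-hours}
```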

Merging Adapters into Various Llama 2 Models

LoRA produces fine-tuned adapters, and the Platypus adapters can be merged into other models based on Llama 2.

In this work, the authors show that, on their target tasks, the adapters consistently improve the performance of the model into which they are merged. Note that this only works for the specific tasks for which the adapters have been fine-tuned. It demonstrates that fine-tuning adapters for very specific tasks is preferable, and LoRA makes this possible at a tiny cost.
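Merging an adapter amounts to folding its low-rank update into a weight matrix: W_merged = W + (alpha / r) · B A. This only works if the target model has the same shapes as the model the adapter was trained on, which is why the adapters go into other Llama 2-based models. The pure-Python sketch below uses made-up toy matrices and shows the arithmetic only. On real models, PEFT’s `merge_and_unload()` performs this merge for you.

```python
from typing import List

Matrix = List[List[float]]

def matmul(X: Matrix, Y: Matrix) -> Matrix:
    """Multiply two matrices given as lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y))) for j in range(len(Y[0]))]
            for i in range(len(X))]

def merge_lora(W: Matrix, A: Matrix, B: Matrix, alpha: float, r: int) -> Matrix:
    """Fold a LoRA adapter into a weight matrix: W_merged = W + (alpha / r) * B A."""
    delta = matmul(B, A)  # d_out x d_in, same shape as W
    scale = alpha / r
    return [[w + scale * d for w, d in zip(w_row, d_row)]
            for w_row, d_row in zip(W, delta)]

W = [[1.0, 0.0], [0.0, 1.0]]  # toy 2 x 2 weights of some Llama 2-based model
A = [[1.0, 1.0]]               # toy adapter with rank r = 1
B = [[1.0], [0.0]]
merged = merge_lora(W, A, B, alpha=2, r=1)
print(merged)  # [[3.0, 2.0], [0.0, 1.0]]
```

After merging, inference uses a single matrix again, so the adapter adds no extra latency.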

Fine-tune Like Platypus but with QLoRA

Note: The notebook for fine-tuning Llama 2 like Platypus is available on the AI Notebooks page (#9).

If you have read my tutorial on how to fine-tune Llama 2, you can follow the same steps.

In the Platypus paper, the authors used high-end GPUs (A100). Thanks to QLoRA, you only need a GPU with at least 12 GB of VRAM (from the RTX 30XX family or more recent). For instance, it works fine on my NVIDIA RTX 3060 12 GB. It also works on a free instance of Google Colab.
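A rough estimate of weight memory helps explain why 4-bit quantization makes a 12 GB GPU viable. This sketch uses nominal parameter counts and counts the weights only. It ignores activations, the LoRA adapters and their optimizer states, and the small overhead of quantization constants, so it does not by itself prove that a given fine-tuning run fits.

```python
def weight_memory_gb(n_params: float, bits_per_param: float) -> float:
    """Memory needed to store the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return n_params * bits_per_param / 8 / 1e9

# Nominal parameter counts (rounded); weights only, no training overhead.
for name, n in [("Llama 2 7B", 7e9), ("Llama 2 13B", 13e9)]:
    fp16 = weight_memory_gb(n, 16)
    nf4 = weight_memory_gb(n, 4)
    print(f"{name}: fp16 ~ {fp16:.1f} GB, 4-bit ~ {nf4:.1f} GB")
```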

The hyperparameters are different from the ones I used in my tutorial. You should change them as follows.

Set the LoraConfig:

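```python
from peft import LoraConfig

peft_config = LoraConfig(
    lora_alpha=16,
    lora_dropout=0.05,
    r=16,
    bias="none",
    task_type="CAUSAL_LM",
    # Platypus targets the MLP projections of each Llama 2 decoder layer.
    target_modules=["gate_proj", "down_proj", "up_proj"],
)
```

Set these training hyperparameters: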

```python
from transformers import TrainingArguments

training_arguments = TrainingArguments(
    output_dir="./results",
    evaluation_strategy="steps",  # renamed eval_strategy in recent versions of transformers
    do_eval=True,
    per_device_train_batch_size=2,
    gradient_accumulation_steps=8,  # effective batch size: 2 x 8 = 16 per device
    per_device_eval_batch_size=4,
    log_level="debug",
    save_steps=100,
    logging_steps=50,
    learning_rate=4e-4,
    eval_steps=200,
    fp16=True,
    num_train_epochs=1,
    warmup_steps=100,
    lr_scheduler_type="cosine",
)
```

They use the Alpaca data format for their fine-tuning datasets. Note that the choice of format shouldn’t significantly impact the final results.

Note: For the 70B version, they used a different learning rate: 3e-4.
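For reference, here is a sketch of how an Alpaca-style record (instruction, optional input, output) can be turned into a single training string. The templates are those of the original Stanford Alpaca project. I assume, without having checked, that Platypus’s prompts are close to them, so adjust the wording if you need to match the Platypus models exactly.

```python
ALPACA_WITH_INPUT = (
    "Below is an instruction that describes a task, paired with an input that "
    "provides further context. Write a response that appropriately completes the request.\n\n"
    "### Instruction:\n{instruction}\n\n### Input:\n{input}\n\n### Response:\n"
)
ALPACA_NO_INPUT = (
    "Below is an instruction that describes a task. "
    "Write a response that appropriately completes the request.\n\n"
    "### Instruction:\n{instruction}\n\n### Response:\n"
)

def format_alpaca(example: dict) -> str:
    """Turn an Alpaca-style record into one training string (prompt + response)."""
    # Use the "with input" template only when the record has a non-empty input.
    template = ALPACA_WITH_INPUT if example.get("input") else ALPACA_NO_INPUT
    prompt = template.format(instruction=example["instruction"],
                             input=example.get("input", ""))
    return prompt + example["output"]

print(format_alpaca({"instruction": "Add 2 and 3.", "input": "", "output": "5"}))
```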

Run a Quantized Platypus on Your Computer

You can also take an existing version of Platypus from the Hugging Face Hub and run it on your computer thanks to 4-bit on-the-fly quantization.

Note: The notebook for running quantized Platypus is available on the AI Notebooks page (#10).

You need to install these libraries:

```shell
pip install transformers accelerate bitsandbytes
```

Then, run this code:

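```python
import torch
from transformers import AutoModelForCausalLM, AutoTokenizer, BitsAndBytesConfig

model_name = "garage-bAInd/Platypus2-13B"
prompt = "Tell me about gravity"

# Quantize the weights to 4 bits on the fly while loading the model.
bnb_config = BitsAndBytesConfig(load_in_4bit=True, bnb_4bit_compute_dtype=torch.float16)
model = AutoModelForCausalLM.from_pretrained(model_name, device_map="auto", quantization_config=bnb_config)
tokenizer = AutoTokenizer.from_pretrained(model_name, use_fast=True)

# Tokenize the prompt and move it to the device holding the model.
model_inputs = tokenizer(prompt, return_tensors="pt").to(model.device)
# Set an explicit length limit: the default generation length can be very short.
output = model.generate(**model_inputs, max_new_tokens=256)
print(tokenizer.decode(output[0], skip_special_tokens=True))
```

Note that the Platypus models can’t be used for commercial purposes (cc-by-nc-sa-4.0).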

Conclusion

In my opinion, Platypus offers a good preview of what the future of LLMs will look like. Big companies will remain in charge of developing base LLMs, while smaller actors will fine-tune adapters that are highly specialized in solving particular problems.

Fine-tuning quantized models is so cheap that any individual can now create LLMs for their specific needs, provided that a relevant instruction dataset is available.