GPTQ or bitsandbytes: Which Quantization Method to Use for LLMs — Examples with Llama 2

Large language model quantization for affordable fine-tuning and inference on your computer

As large language models (LLMs) have grown, with more and more parameters, new techniques have been proposed to reduce their memory usage.

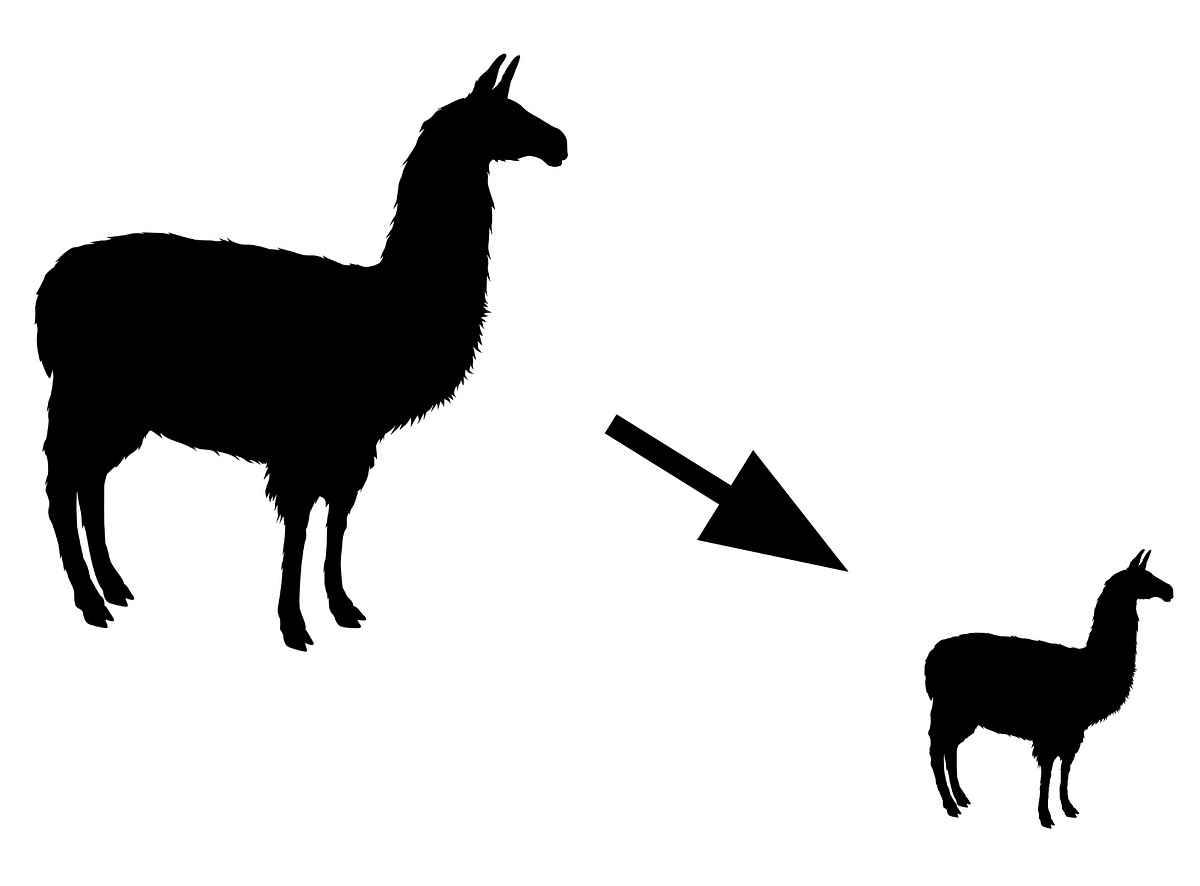

One of the most effective methods to reduce the model size in memory is quantization. You can see quantization as a compression technique for LLMs. In practice, the main goal of quantization is to lower the precision of the LLM’s weights, typically from 16-bit to 8-bit, 4-bit, or even 3-bit, with minimal performance degradation.
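To make the idea concrete, here is a minimal sketch of the simplest form of quantization: symmetric round-to-nearest with a single scale. This is not what GPTQ or bitsandbytes actually do (both are more sophisticated); it only illustrates what "lowering the precision of the weights" means. The function names, the toy weights, and the rounded 7-billion parameter count are my own assumptions for illustration.

```python
def quantize_absmax(weights, bits=8):
    """Symmetric round-to-nearest quantization of a list of floats.

    Illustrative only: a single scale for all weights, no grouping,
    no calibration data.
    """
    qmax = 2 ** (bits - 1) - 1  # 127 for 8-bit, 7 for 4-bit
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    # Map each weight to the nearest integer level, clipped to [-qmax, qmax].
    q = [max(-qmax, min(qmax, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Recover approximate float weights from the integer levels."""
    return [v * scale for v in q]


def weight_memory_gb(n_params, bits):
    """Memory needed to store the weights alone, in gigabytes (10^9 bytes)."""
    return n_params * bits / 8 / 1e9


# Toy weights (hypothetical values, not taken from a real model).
weights = [0.12, -0.5, 0.33, 0.9, -0.07]

for bits in (8, 4):
    q, scale = quantize_absmax(weights, bits=bits)
    restored = dequantize(q, scale)
    max_err = max(abs(w - r) for w, r in zip(weights, restored))
    print(f"{bits}-bit levels: {q}, max error: {max_err:.4f}")

# Weight storage for a model with roughly 7 billion parameters (assumed count).
for bits in (16, 8, 4, 3):
    print(f"{bits}-bit: {weight_memory_gb(7e9, bits):.1f} GB")
```

The fewer bits you use, the coarser the grid of representable values and the larger the rounding error, which is why lower precisions need smarter methods to limit the degradation. The memory estimate covers the weights only; activations and quantization metadata (scales, zero points) add to it.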

There are two popular quantization methods for LLMs: GPTQ and bitsandbytes.

In this article, I discuss the main differences between these two approaches. Each has its own advantages and disadvantages, which make them suitable for different use cases. I compare their memory usage and inference speed using Llama 2. I also discuss their performance based on experiments from previous work.