Hi Everyone,

In this edition of The Weekly Kaitchup:

RWKV-6 "Finch" 7B: Attention-free and State-of-the-art 7B Model for Multilingual Tasks

DeepSeek-V2: A Huge 236B Model with Only 21B Active Parameters

Panza: Personalized LLM Email Assistant with Llama 3 8B

The Kaitchup now has 3,442 subscribers. Thanks a lot for your support!

If you are a free subscriber, consider upgrading to paid to access all the notebooks (60+) and more than 100 articles.

The yearly subscription is now 35% off. This promotion is available until tomorrow.

RWKV-6 "Finch" 7B: Attention-free and State-of-the-art 7B Model for Multilingual Tasks

The RWKV neural architecture is attention-free. This makes it much more efficient at inference than the transformer architecture, whose attention cost grows quadratically with the sequence length.
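To give a rough sense of what "quadratic" means here, the sketch below compares two toy cost models. Both are my own simplifications rather than measurements of RWKV or of any transformer: full attention scores are assumed to cost `seq_len * seq_len * head_dim` multiply-adds, and a recurrent layer is assumed to update a fixed `head_dim x head_dim` state once per token. The function names and the head dimension of 64 are illustrative assumptions.

```python
def attention_score_ops(seq_len: int, head_dim: int) -> int:
    """Toy estimate: multiply-adds to fill a seq_len x seq_len attention score matrix."""
    return seq_len * seq_len * head_dim


def recurrent_state_ops(seq_len: int, head_dim: int) -> int:
    """Toy estimate: multiply-adds to update a head_dim x head_dim state once per token."""
    return seq_len * head_dim * head_dim


HEAD_DIM = 64  # assumed value, for illustration only

for n in (1_024, 8_192, 65_536):
    ratio = attention_score_ops(n, HEAD_DIM) / recurrent_state_ops(n, HEAD_DIM)
    print(f"{n:>6} tokens: attention / recurrent cost ratio = {ratio:.0f}x")
```

Under these assumptions, doubling the sequence length quadruples the attention cost but only doubles the recurrent cost, so the gap widens as the context grows.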

The team behind RWKV regularly improves the architecture and releases new models. RWKV-6 is the sixth version of the architecture, and a 7B model has been released on the Hugging Face Hub:

BlinkDL/rwkv-6-world (Apache 2.0 license)

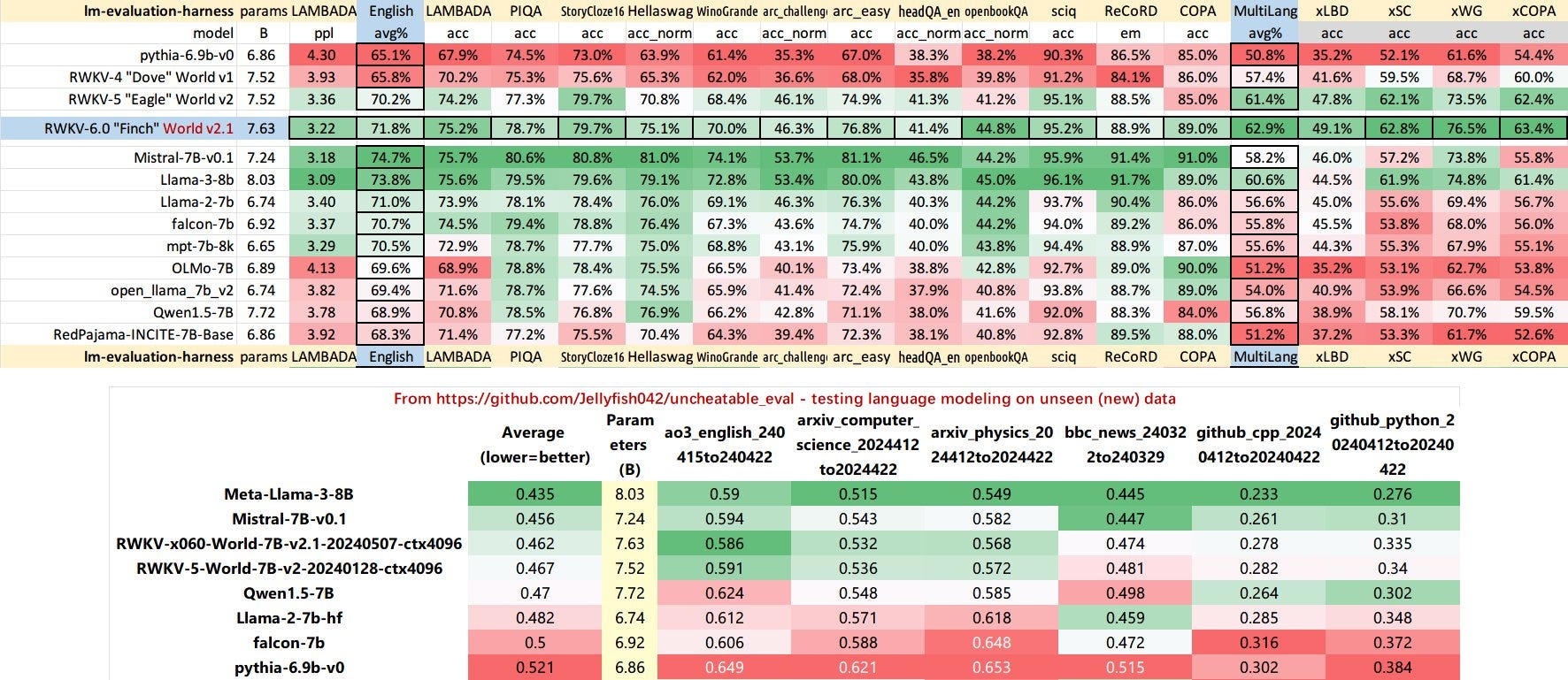

It supports more than 100 languages and has been pre-trained on 2.5T tokens. This makes it one of the best LLMs of this size for non-English languages, according to the authors' own evaluation:

On multilingual tasks, it seems better than Llama 3 8B. It is also significantly better than RWKV-5. However, for English tasks, it underperforms Mistral 7B and Llama 3 8B on most benchmarks, probably not because of the architecture but simply because it has been trained on far fewer English tokens.

I explained the RWKV architecture in this article for The Salt:

DeepSeek-V2: A Huge 236B Model with Only 21B Active Parameters

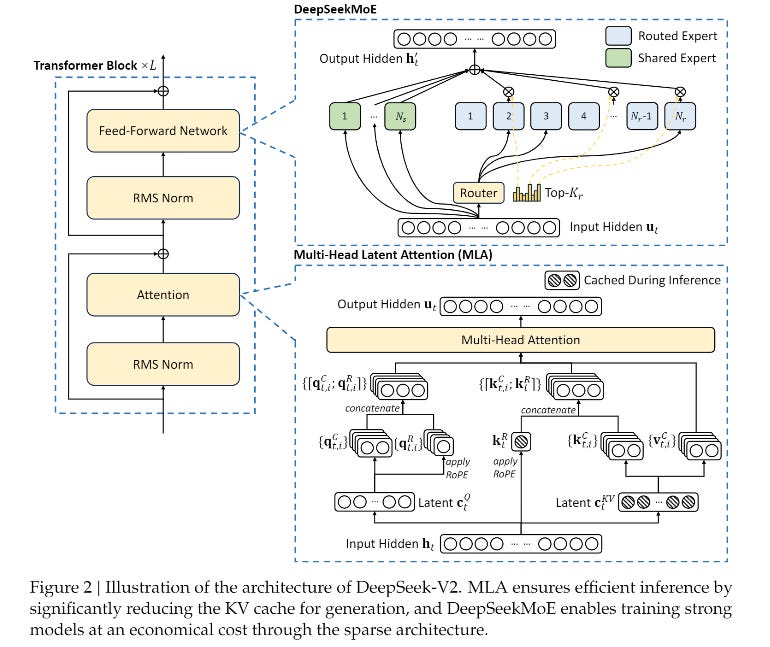

Since the release of Mixtral-8x7B, mixture-of-experts (MoE) LLMs have been shown to perform very well while being cheaper at inference than standard models of similar sizes. This is because not all the parameters of an MoE are active during inference. Only a subset of experts is effectively used. For instance, Mixtral-8x7B and Mixtral-8x22B activate only two of their eight experts for each token. The decision about which experts to activate is made by the router network.
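The routing idea can be sketched in a few lines of plain Python. This is a minimal illustration, not the code of Mixtral or any other model: the experts are toy scaling functions, the router logits are made-up numbers for a single token, and the names `top_k_route` and `moe_layer` are hypothetical.

```python
import math


def top_k_route(router_logits: list[float], k: int = 2) -> dict[int, float]:
    """Return {expert_index: weight} for the k experts with the highest router logits."""
    ranked = sorted(range(len(router_logits)), key=lambda i: router_logits[i], reverse=True)
    selected = ranked[:k]
    # Softmax over the selected logits only, so that the k weights sum to 1.
    max_logit = max(router_logits[i] for i in selected)
    exps = {i: math.exp(router_logits[i] - max_logit) for i in selected}
    total = sum(exps.values())
    return {i: e / total for i, e in exps.items()}


def moe_layer(x: float, experts, router_logits: list[float], k: int = 2) -> float:
    """Run only the selected experts and mix their outputs with the router weights."""
    weights = top_k_route(router_logits, k)
    return sum(w * experts[i](x) for i, w in weights.items())


# Eight toy "experts": expert i simply multiplies its input by i + 1.
experts = [lambda x, scale=scale: scale * x for scale in range(1, 9)]
# Made-up router logits for one token (one value per expert).
logits = [0.1, 2.0, -1.0, 2.0, 0.5, 0.0, -0.3, 1.0]

print(top_k_route(logits))          # experts 1 and 3 are selected
print(moe_layer(1.0, experts, logits))
```

The other six experts are never called for this token, which is where the inference savings come from, even though all eight must still be held in memory.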

With models like Mixtral-8x22B and DBRX, we now have very large MoEs with large experts.

DeepSeek AI released an even larger model, DeepSeek-V2, which has 236B parameters. DeepSeek-V2 has 160 experts (+2 shared experts), but only 6 experts are activated for each token during inference. In other words, only 21B parameters are used per token.

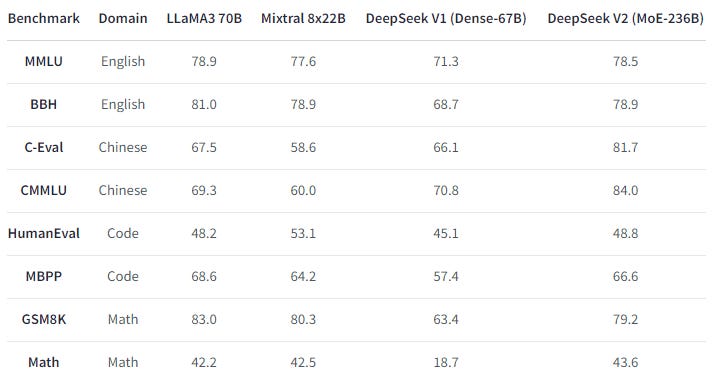

Despite using only 21B parameters, the model performs close to Mixtral-8x22B and Llama 3 70B, which are both more costly for inference:

However, since the model is huge, using it is much more challenging than using Llama 3 70B. Even though only 21B parameters are activated, we still need to load the entire model. It would require nearly 480 GB of memory. Offloading many experts to slower memory, such as the CPU RAM or the hard drive, would be necessary to use DeepSeek-V2 on an affordable configuration.
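The memory figure can be checked with a back-of-the-envelope calculation. The sketch below assumes 16-bit weights (2 bytes per parameter) and counts only the weights, ignoring activations and the KV cache. The helper name `weights_memory_gb` is mine, and 1 GB is taken as 10^9 bytes.

```python
def weights_memory_gb(num_params: float, bytes_per_param: float) -> float:
    """Memory needed to hold the weights alone, in GB (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


TOTAL_PARAMS = 236e9   # all parameters must be loaded
ACTIVE_PARAMS = 21e9   # parameters actually used for each token

full_model_gb = weights_memory_gb(TOTAL_PARAMS, bytes_per_param=2)  # assumes 16-bit weights
active_fraction = ACTIVE_PARAMS / TOTAL_PARAMS

print(f"Weights in 16-bit: about {full_model_gb:.0f} GB")
print(f"Share of parameters active per token: {active_fraction:.1%}")
```

With these assumptions, the weights alone take roughly 472 GB, while fewer than 9% of the parameters are used for any given token. This is why offloading inactive experts is an attractive option.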

The model is available on the Hugging Face Hub:

They published a technical report detailing the architecture and training of the model:

Panza: Personalized LLM Email Assistant with Llama 3 8B

ISTA DasLab, the same lab that created the AQLM quantization method, released an interesting new project, Panza, for fine-tuning LLMs as email assistants:

GitHub: IST-DASLab/PanzaMail

Panza combines a fine-tuned LLM with a RAG system to produce contextually relevant emails.

The framework runs locally and doesn’t need to communicate with the Internet. The emails used for fine-tuning and RAG stay on your computer.

It requires only a single GPU with 16-24 GB of memory. Training Panza is efficient, taking less than an hour for a dataset of around 1,000 emails, and it can generate a new email in just a few seconds, according to the authors.

The GitHub repository explains step by step how to set up the framework and train your own email assistant.

Evergreen Kaitchup

In this section of The Weekly Kaitchup, I mention which of the Kaitchup’s AI notebook(s) I have checked and updated, with a brief description of what I have done.

I have finalized my guide on hyperparameters and training arguments for fine-tuning LLMs:

The guide now shows examples to illustrate the impact of the most important hyperparameters. It uses TinyLlama so that you can easily reproduce the same experiments with a small GPU.

The Salt

The Salt is my other newsletter that takes a more scientific approach. In The Salt, I primarily feature short reviews of recent papers (for free), detailed analyses of noteworthy publications, and articles centered on LLM evaluation.

This week in The Salt, I briefly reviewed:

⭐Prometheus 2: An Open Source Language Model Specialized in Evaluating Other Language Models

Extending Llama-3's Context Ten-Fold Overnight

Iterative Reasoning Preference Optimization

That’s all for this week.

If you like reading The Kaitchup, consider sharing it with friends and coworkers:

Have a nice weekend!