Run a 7.7x Smaller Mixtral-8x7B on Your GPU with AQLM 2-bit Quantization

Costly quantization but cheap inference

Mixtral-8x7B is one of the best pre-trained LLMs available. It is a mixture of experts (MoE) with about 46.7 billion parameters, but it uses only about 12.9 billion of them for each token during inference. Yet the entire model still has to be loaded into memory.

Loading it in 16-bit precision requires more than 90 GB of GPU memory, so even a state-of-the-art GPU such as the 80 GB H100 is not enough. 4-bit quantization still produces a model that is too large for a high-end consumer-grade GPU such as the 24 GB RTX 4090.
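The memory figures above follow from simple arithmetic: number of parameters × bits per weight ÷ 8 gives the bytes needed for the weights. The sketch below assumes roughly 46.7 billion parameters and counts the weights only; it ignores quantization metadata (scales, zero-points, codebooks), layers kept in higher precision, the KV cache, and activations.

```python
# Weights-only estimate: ignores quantization metadata (scales, codebooks),
# layers kept in higher precision, the KV cache, and activations.
TOTAL_PARAMS = 46.7e9  # approximate total parameter count of Mixtral-8x7B


def weight_memory_gb(num_params: float, bits_per_weight: float) -> float:
    """Memory needed to store the weights, in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


for label, bits in [("16-bit", 16), ("4-bit", 4), ("2-bit", 2)]:
    print(f"{label:>6}: {weight_memory_gb(TOTAL_PARAMS, bits):.1f} GB")
```

This gives about 93 GB at 16 bits, about 23 GB at 4 bits, and under 12 GB at 2 bits. Real quantized checkpoints are typically somewhat larger than these weights-only estimates because of the metadata and unquantized layers mentioned above, and inference needs additional memory on top of the weights, which is why a 4-bit Mixtral does not fit comfortably on a 24 GB card.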

We could offload some of Mixtral-8x7B's experts to CPU RAM but, as we saw in a previous article, this significantly slows down inference.

Another option is to quantize the model to an even lower precision. Thanks to recent research, 3-bit and 2-bit quantization methods are becoming more accurate, especially for very large models such as Mixtral-8x7B.

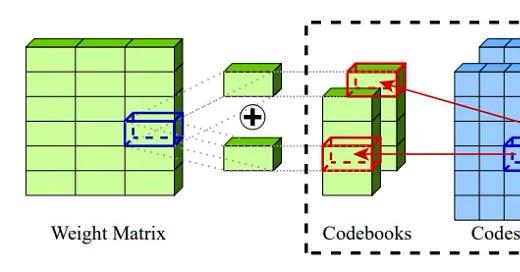

In this article, I present AQLM, a 2-bit quantization method that has been applied to Mixtral to make it nearly 8x smaller. I briefly explain how AQLM works and demonstrate how to run Mixtral 2-bit. I also show how to quantize a model with AQLM, using TinyLlama as an example.
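To give a rough idea of how additive quantization (the "AQ" in AQLM) reaches about 2 bits per weight, here is a simplified NumPy sketch. Weights are split into groups of g consecutive values, and each group is stored as M integer codes, each selecting one codeword from a codebook of 2^B vectors; the group is reconstructed as the sum of the selected codewords, so the codes cost M × B / g bits per weight. This is a toy illustration under stated assumptions, not the AQLM implementation: the function names are hypothetical, the codebooks and codes here are random (in AQLM they are learned from calibration data, which is what makes quantization costly), and per-channel scales and codebook storage overhead are omitted.

```python
import numpy as np


def bits_per_weight(num_codebooks: int, code_bits: int, group_size: int) -> float:
    """Cost of the codes alone: M codes of B bits for every group of g weights."""
    return num_codebooks * code_bits / group_size


def decode_additive(codes: np.ndarray, codebooks: np.ndarray) -> np.ndarray:
    """Rebuild each weight group as the sum of one codeword per codebook.

    codes:     (num_groups, M) integer indices into each codebook
    codebooks: (M, K, g) array holding K = 2**B codewords of length g per codebook
    returns:   (num_groups, g) reconstructed weight groups
    """
    num_codebooks = codebooks.shape[0]
    # codebooks[m][codes[:, m]] picks, for every group, its codeword from codebook m
    selected = np.stack([codebooks[m][codes[:, m]] for m in range(num_codebooks)])
    # Additive quantization: the reconstruction is the sum over codebooks
    return selected.sum(axis=0)


rng = np.random.default_rng(0)
M, B, g = 2, 4, 8  # toy sizes: 2 codebooks with 16 codewords each, groups of 8 weights
codebooks = rng.standard_normal((M, 2**B, g)).astype(np.float32)
codes = rng.integers(0, 2**B, size=(6, M))  # 6 groups, i.e., 48 weights
weights = decode_additive(codes, codebooks).reshape(-1)  # flatten back to a weight row

print(weights.shape)              # (48,)
print(bits_per_weight(M, B, g))   # 1.0 for this toy setup
print(bits_per_weight(1, 16, 8))  # 2.0: one 16-bit code per group of 8 weights
print(bits_per_weight(2, 8, 8))   # 2.0: two 8-bit codes per group of 8 weights
```

The last two lines show two ways to land on 2 bits per weight: a single large codebook with 16-bit codes, or two smaller codebooks with 8-bit codes. A larger codebook is more expressive but costs more memory for the codebook itself and makes decoding lookups more expensive.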

The following notebook shows how to run Mixtral 2-bit and how to quantize a model with AQLM: