Hi Everyone,

In this edition of The Weekly Kaitchup:

LoRA-the-Explorer: Pre-training LLMs from Scratch with LoRA

Accurate 1.5 bit LLMs

gpt-fast with Hugging Face Transformers

The Kaitchup now has 2,245 subscribers. Thanks a lot for your support!

If you are a free subscriber, consider upgrading to paid to access all the notebooks and articles. There is a 7-day trial that you can cancel anytime.

Evergreen Kaitchup

There are now nearly 50 notebooks listed on the AI Notebooks page of The Kaitchup. I think it’s a good time to start refreshing them regularly as most of them could be relevant for many more months if not years.

In this new section of The Weekly Kaitchup, I’ll mention which notebooks I have checked and updated, with a brief description of what I have done.

Since Microsoft has recently applied a lot of modifications to Phi 2’s code, I have updated the following notebook to optimize it and make sure it is still working:

#35 Phi-2: Fine-tuning, quantization, and inference

To sum up, I removed PyTorch’s autocast for inference, updated the FlashAttention-2 support (it works only with Ampere and more recent GPUs), changed the target modules for LoRA fine-tuning, removed the special model revision that gradient checkpointing previously required, and made a few other small modifications.

The article has also been updated to reflect these changes:

LoRA-the-Explorer: Pre-training LLMs from Scratch with LoRA

This is not the first time that a paper has proposed LoRA to pre-train LLMs from scratch. Last year, I presented ReLoRA:

LoRA-the-Explorer (LTE) uses a very different approach.

Training Neural Networks from Scratch with Parallel Low-Rank Adapters

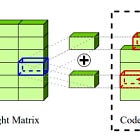

LTE is a method for collaborative model training. Initially, every device (i.e., GPU) is equipped with a unique set of LoRA parameters. As training progresses, each device works on a distinct segment of the dataset, utilizing a local optimizer for adjustments.

Every T iterations, the devices exchange their LoRA parameters with each other or with a central parameter server. These parameters are then averaged to update the main parameters of the model. The authors draw a parallel with the commit/merge process of git repositories: the repository is the model and the commits are the parameter updates. The refreshed main parameters are then sent back to the devices, and the cycle repeats.
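To make the cycle above more concrete, here is a minimal NumPy sketch on a toy linear regression problem. It is not the authors' implementation. The names `local_training` and `global_loss`, the toy task, the plain SGD local optimizer, and all hyperparameters are my own assumptions for illustration. What happens to the LoRA parameters after a merge is not described above, so this sketch simply re-initializes them each round, which is also an assumption.

```python
import numpy as np

rng = np.random.default_rng(0)
d_in, d_out, rank = 8, 4, 2
num_workers, local_steps, rounds, lr = 4, 10, 30, 0.1

# Toy task (assumption): recover W_true from (x, y) pairs.
# Each worker gets its own shard of the data.
W_true = rng.normal(size=(d_out, d_in))
shards = []
for _ in range(num_workers):
    X = rng.normal(size=(64, d_in))
    shards.append((X, X @ W_true.T))


def global_loss(W):
    """Mean squared error of the main weights over all shards."""
    return float(np.mean([np.mean((X @ W.T - Y) ** 2) for X, Y in shards]))


def local_training(W, X, Y):
    """Train one LoRA pair (B, A) for `local_steps` SGD steps with W frozen."""
    A = rng.normal(scale=1.0 / np.sqrt(d_in), size=(rank, d_in))
    B = np.zeros((d_out, rank))  # B = 0, so the adapter starts as a no-op
    for _ in range(local_steps):
        residual = X @ (W + B @ A).T - Y
        # Gradient of the MSE with respect to the effective weight W + B @ A.
        G = 2.0 * residual.T @ X / residual.size
        # Simultaneous update of both low-rank factors (chain rule through B @ A).
        B, A = B - lr * G @ A.T, A - lr * B.T @ G
    return B @ A  # low-rank update proposed by this worker


W = np.zeros((d_out, d_in))  # main parameters, "from scratch"
initial_loss = global_loss(W)
for _ in range(rounds):
    # Every worker trains independently on its own shard for T = local_steps steps.
    deltas = [local_training(W, X, Y) for X, Y in shards]
    # Merge: average the LoRA updates into the main parameters.
    W = W + np.mean(deltas, axis=0)
final_loss = global_loss(W)

print(f"loss before: {initial_loss:.4f}, loss after: {final_loss:.4f}")
```

Each worker only ever trains a rank-2 update, yet the averaged merges move the full weight matrix. In a real multi-GPU setup, the list comprehension would be replaced by parallel workers and the averaging by a communication step.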

When using a high rank (r) and multiple heads, the method seems to perform on par with standard pre-training:

Accurate 1.5 bit LLMs

Last week, I presented AQLM, which can quantize LLMs to 2-bit with high accuracy.

This week, Microsoft presented impressive work on training LLMs with 1.58-bit parameters.

The Era of 1-bit LLMs: All Large Language Models are in 1.58 Bits

The paper presents BitNet b1.58, a 1-bit LLM variant whose parameters are ternary, i.e., they take values in {-1, 0, 1}. According to the paper, the model matches FP16 counterparts with the same model dimensions and training data volume in both perplexity and task-specific performance.
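A quick way to see where "1.58" comes from: a parameter with three possible values carries log2(3) ≈ 1.58 bits of information. The sketch below also shows the absmean rounding that, as I understand it from the paper, maps weights to ternary values: scale by the mean absolute value, round, and clip to [-1, 1]. The function name `absmean_ternary` and the toy matrix are my own, and this only illustrates the rounding step. BitNet b1.58 is trained with ternary weights from scratch; it is not a post-training quantization of an existing model.

```python
import math

import numpy as np


def absmean_ternary(W, eps=1e-8):
    """Map a weight matrix to {-1, 0, 1} using its mean absolute value as scale."""
    gamma = float(np.mean(np.abs(W)))
    W_ternary = np.clip(np.round(W / (gamma + eps)), -1, 1).astype(np.int8)
    return W_ternary, gamma


rng = np.random.default_rng(0)
W = rng.normal(scale=0.02, size=(4, 6))  # toy weights (assumption)
W_ternary, gamma = absmean_ternary(W)

bits_per_weight = math.log2(3)  # information content of a three-valued parameter

print(W_ternary)
print(f"scale: {gamma:.5f}, bits per weight: {bits_per_weight:.3f}")
```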

BitNet b1.58 also offers substantial improvements in latency, memory usage, throughput, and energy efficiency.

What’s the catch?

This paper only shows examples of models with up to 3.9B parameters. Strangely, it seems that they have also trained models with up to 70B but only report on latency, memory usage, throughput, and energy efficiency for these larger models. What about their performance in terms of perplexity and accuracy in downstream tasks?

They didn’t release any code or models with the paper so we will have to wait for someone to independently reproduce their results.

gpt-fast with Hugging Face Transformers

In case you missed it, the PyTorch team released gpt-fast, a project that accelerates inference for Llama 2 and Mixtral-8x7B models. It implements the following optimizations:

Quantization with INT4 and INT8 (they also propose a pure PyTorch implementation of GPTQ in the same repository)

Speculative decoding

Tensor parallelism
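Of these three, speculative decoding is probably the least intuitive, so here is a minimal sketch of its greedy variant in pure Python. This is not gpt-fast's code. The two "models" (`target_next` and `draft_next`) are hypothetical toy functions that I made up to stand in for a large model and a small draft model. The idea: the draft model cheaply proposes k tokens, the target model checks them, and we keep the longest prefix on which both agree.

```python
def target_next(seq):
    """Stand-in for the large model: a deterministic toy next-token rule."""
    return (sum(seq) * 31 + 7) % 50


def draft_next(seq):
    """Stand-in for the small model: agrees with the target most of the time."""
    guess = target_next(seq)
    return (guess + 1) % 50 if len(seq) % 4 == 0 else guess


def greedy_decode(prompt, num_new):
    """Baseline: one target-model call per generated token."""
    seq = list(prompt)
    for _ in range(num_new):
        seq.append(target_next(seq))
    return seq


def speculative_decode(prompt, num_new, k=3):
    seq = list(prompt)
    goal = len(prompt) + num_new
    while len(seq) < goal:
        # 1) The draft model proposes k tokens autoregressively.
        proposal = []
        for _ in range(k):
            proposal.append(draft_next(seq + proposal))
        # 2) The target model verifies the proposal position by position.
        #    A real system scores all k positions in one batched forward pass.
        for token in proposal:
            expected = target_next(seq)
            seq.append(expected)
            if token != expected:
                break  # first mismatch: keep the target's token, drop the rest
        else:
            seq.append(target_next(seq))  # all k accepted: one bonus token
    return seq[:goal]


prompt = [3, 14, 15]
print(speculative_decode(prompt, 12))
print(greedy_decode(prompt, 12))
```

Both calls print the same sequence: with greedy decoding, the output is identical to what the target model would generate alone. The gain comes from verifying several draft tokens in a single pass of the large model.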

The speedup is quite significant according to their benchmark:

MDK8888 has extended this project to make it compatible with Hugging Face Transformers:

GitHub: MDK8888/GPTFast

The Salt

In case you missed it, I published one new article in The Salt this week:

That’s all for this week.

If you like reading The Kaitchup, consider sharing it with friends and coworkers:

Have a nice weekend!