ReLoRA: Pre-train a Large Language Model on Your GPU

LoRA, but with multiple resets in a row

In 2021, Hu et al. proposed low-rank adaptation (LoRA) for large language models (LLMs). This method significantly reduces the cost of fine-tuning LLMs by training only a small number of added parameters (low-rank networks) while keeping the model's original parameters (high-rank networks) frozen.
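To put a number on "a small number of added parameters": for a single d × k weight matrix, full fine-tuning updates all d·k entries, while LoRA trains two factors of shapes d × r and r × k. The sketch below assumes illustrative sizes (d = k = 4096, r = 8) that are not taken from the paper.

```python
# Trainable parameters for one d x k weight matrix:
# full fine-tuning vs. LoRA's two low-rank factors.
# The sizes used below are illustrative assumptions.

def full_params(d: int, k: int) -> int:
    """Entries in a dense d x k weight matrix."""
    return d * k

def lora_params(d: int, k: int, r: int) -> int:
    """Entries in the LoRA factors B (d x r) and A (r x k)."""
    return d * r + r * k

d, k, r = 4096, 4096, 8
full = full_params(d, k)
lora = lora_params(d, k, r)
print(full, lora, f"{100 * lora / full:.2f}%")  # 16777216 65536 0.39%
```

With these sizes, LoRA trains about 0.39% of the parameters of the matrix it adapts, and the saving grows as d and k grow relative to r.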

LoRA still requires an existing pre-trained model to fine-tune: because of its low-rank restriction, it cannot pre-train a good LLM from scratch. Pre-training therefore remains unaffordable for most individuals and organizations.
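The low-rank restriction can be checked directly: whatever values the trainable factors B (d × r) and A (r × k) take, their product BA has rank at most r. The sketch below uses plain Python, hypothetical sizes (d = k = 6, r = 2), and random integer factors, and computes the rank exactly with fractions to avoid floating-point noise.

```python
from fractions import Fraction
import random

def matmul(X, Y):
    """Multiply two matrices given as lists of rows."""
    return [[sum(X[i][t] * Y[t][j] for t in range(len(Y)))
             for j in range(len(Y[0]))] for i in range(len(X))]

def rank(M):
    """Rank via exact Gaussian elimination over the rationals."""
    rows = [[Fraction(v) for v in row] for row in M]
    n_rows, n_cols = len(rows), len(rows[0])
    r = 0
    for c in range(n_cols):
        pivot = next((i for i in range(r, n_rows) if rows[i][c] != 0), None)
        if pivot is None:
            continue
        rows[r], rows[pivot] = rows[pivot], rows[r]
        # Eliminate column c from every other row.
        for i in range(n_rows):
            if i != r and rows[i][c] != 0:
                factor = rows[i][c] / rows[r][c]
                rows[i] = [a - factor * b for a, b in zip(rows[i], rows[r])]
        r += 1
        if r == n_rows:
            break
    return r

random.seed(0)
d, k, r = 6, 6, 2  # hypothetical sizes
B = [[random.randint(-3, 3) for _ in range(r)] for _ in range(d)]
A = [[random.randint(-3, 3) for _ in range(k)] for _ in range(r)]
delta_W = matmul(B, A)  # the update LoRA can express: a 6 x 6 matrix
print(rank(delta_W))    # never more than r = 2
```

However long B and A are trained, the update stays confined to a rank-r subspace, which is why a single LoRA cannot stand in for full-rank pre-training.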

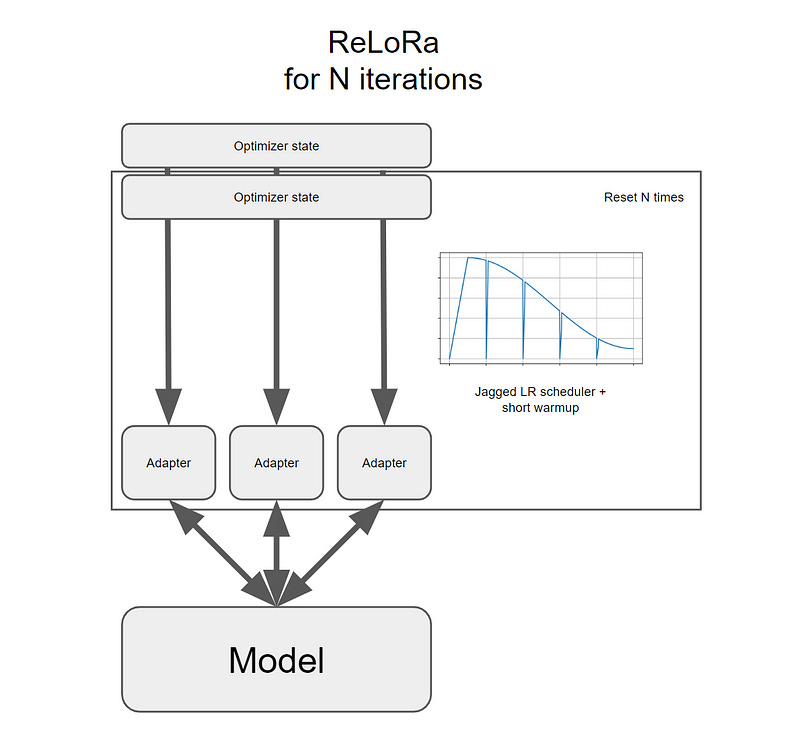

To reduce this cost, Lialin et al. (2023) proposed ReLoRA, a modification of LoRA that allows pre-training LLMs from scratch.
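The "multiple resets" in the subtitle hint at why this can work. If the low-rank update is periodically merged into the main weights and training restarts with fresh factors, each individual update still has rank at most r, but their sum can have a higher rank. The sketch below illustrates only this rank argument: the factors are random stand-ins for trained ones, the starting weights are zero so the rank reflects the accumulated updates alone, and the optimizer and learning-rate details of the actual method are omitted.

```python
from fractions import Fraction
import random

def matmul(X, Y):
    """Multiply two matrices given as lists of rows."""
    return [[sum(X[i][t] * Y[t][j] for t in range(len(Y)))
             for j in range(len(Y[0]))] for i in range(len(X))]

def add(X, Y):
    """Element-wise sum of two equally shaped matrices."""
    return [[a + b for a, b in zip(rx, ry)] for rx, ry in zip(X, Y)]

def rank(M):
    """Rank via exact Gaussian elimination over the rationals."""
    rows = [[Fraction(v) for v in row] for row in M]
    n_rows, n_cols = len(rows), len(rows[0])
    r = 0
    for c in range(n_cols):
        pivot = next((i for i in range(r, n_rows) if rows[i][c] != 0), None)
        if pivot is None:
            continue
        rows[r], rows[pivot] = rows[pivot], rows[r]
        for i in range(n_rows):
            if i != r and rows[i][c] != 0:
                factor = rows[i][c] / rows[r][c]
                rows[i] = [a - factor * b for a, b in zip(rows[i], rows[r])]
        r += 1
        if r == n_rows:
            break
    return r

random.seed(1)
d, r, n_resets = 6, 1, 3  # hypothetical sizes: rank-1 updates, 3 resets
W = [[0] * d for _ in range(d)]  # zero start so rank(W) counts only the merged updates
for step in range(n_resets):
    # Fresh low-rank factors after each reset (random here, trained in practice).
    B = [[random.randint(-3, 3) for _ in range(r)] for _ in range(d)]
    A = [[random.randint(-3, 3) for _ in range(d)] for _ in range(r)]
    W = add(W, matmul(B, A))  # merge B @ A into the main weights
    print(f"after merge {step + 1}: rank(W) = {rank(W)}")  # can grow up to (step + 1) * r
```

Each merge adds at most r to the rank, so after n resets the accumulated update can reach rank n·r even though no single training phase ever leaves rank r.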

In this article, I first explain how ReLoRA works. Then, I analyze and comment on the results presented in the paper describing ReLoRA. In the last section, I show how to set up and run ReLoRA on your computer.

Note about the licenses: The ReLoRA paper, published on arXiv, is distributed under a CC BY 4.0 license. The source code of ReLoRA is published on GitHub and distributed under an Apache 2.0 license, which allows commercial use.

ReLoRA: from low-rank to high-rank networks

To understand how ReLoRA works, we must first take a closer look at LoRA.

LoRA works by adding two new trainable low-rank matrices, A and B, alongside the frozen weights of the pre-trained model. After training, their product BA is merged back into the original frozen high-rank weight matrix.
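The sketch below shows, for one tiny layer, why merging works: computing Wx + scale·B(Ax) during training gives exactly the same output as computing (W + scale·BA)x after merging, so the merged model has no extra inference cost. It assumes small hand-picked integer matrices, the common LoRA scaling factor alpha / r, and nonzero values for B standing in for trained ones (LoRA typically initializes B to zero).

```python
from fractions import Fraction

def matmul(X, Y):
    """Multiply two matrices given as lists of rows."""
    return [[sum(X[i][t] * Y[t][j] for t in range(len(Y)))
             for j in range(len(Y[0]))] for i in range(len(X))]

def matvec(M, x):
    """Multiply a matrix by a vector."""
    return [sum(m * v for m, v in zip(row, x)) for row in M]

d, k, r = 4, 3, 2
alpha = 4
scale = Fraction(alpha, r)  # LoRA scales the low-rank update by alpha / r

W = [[1, 0, 2], [0, 1, 0], [3, 1, 1], [2, 2, 0]]  # frozen d x k pre-trained weights
B = [[1, 0], [0, 1], [1, 1], [0, 2]]              # trainable d x r (values stand in for trained ones)
A = [[1, 2, 0], [0, 1, 1]]                        # trainable r x k
x = [1, 2, 3]

# During training: frozen path plus scaled low-rank path.
y_train = [w + scale * u for w, u in zip(matvec(W, x), matvec(B, matvec(A, x)))]

# After training: fold scale * B @ A into W, leaving a single matrix.
BA = matmul(B, A)
W_merged = [[w + scale * u for w, u in zip(rw, ru)] for rw, ru in zip(W, BA)]
y_merged = matvec(W_merged, x)

print(y_train == y_merged)  # True
```

Only A and B receive gradients during training; W is never modified until the final merge.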