Phi-2: A Small Model Easy to Fine-tune on Your GPU

Instruct fine-tuning and quantization on consumer hardware

Phi-2 is the successor of Phi-1.5, the large language model (LLM) created by Microsoft.

To improve over Phi-1.5, Microsoft doubled the number of parameters to 2.7 billion and extended the training data. Phi-2 outperforms Phi-1.5 and LLMs that are 25 times larger on several public benchmarks even though it is not aligned/fine-tuned. It is only a pre-trained model, released for research purposes (non-commercial, non-revenue generating).

In this article, I present Phi-2 and explain why it performs better than Phi-1.5. Since properly fine-tuning the Phi models has always been somewhat challenging, I also wrote a tutorial for fine-tuning it on consumer hardware. Phi-2 is easy (easier than Phi-1.5) and cheap to fine-tune.

This tutorial is also available as a notebook here:

Last update: March 1st, 2024

Phi-2: A Good Student of GPT-4

Microsoft hasn’t published a technical report detailing Phi-2 yet. Most of what we know about the model comes from Microsoft’s blog post announcing Phi-2 and its Hugging Face model card.

Building upon Microsoft’s prior research on Phi-1 and Phi-1.5, Phi-2’s pre-training involved curating synthetic datasets explicitly designed for common sense reasoning and general knowledge. The training data has a large range of domains, from science to activities.

In contrast with Phi-1.5, which was trained entirely on synthetic data, Phi-2’s synthetic training corpus has been augmented with carefully curated web data. According to Microsoft, this dual-source approach aims to provide a comprehensive and refined dataset that contributes to the model's robustness and competence. In total, the training data contains 250B tokens. Microsoft didn’t release the training data but gave some details on its sources:

Source 1: NLP synthetic data created with GPT-3.5.

Source 2: filtered web data from Falcon RefinedWeb and SlimPajama which was assessed by GPT-4.

They heavily relied on GPT models. Phi-2 is yet another student model of GPT-3.5/4.

As for its architecture, Phi-2 is a Transformer-based causal model. Microsoft chose MixFormer again.

During training, Phi-2 learned from 1.4 trillion tokens (i.e., 1400B/250B=5.6 training epochs). The training duration spanned 14 days and utilized 96 A100 GPUs.
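As a quick sanity check, the arithmetic behind these figures can be written out. The number of epochs follows directly from the two token counts quoted above. The GPU-hours figure is a derived estimate that Microsoft did not report: it assumes all 96 GPUs were busy for the full 14 days.

```python
# Figures quoted above
tokens_seen = 1_400_000_000_000    # 1.4 trillion tokens seen during training
dataset_tokens = 250_000_000_000   # 250B tokens in the training data
num_gpus = 96
training_days = 14

epochs = tokens_seen / dataset_tokens

# Assumption: every GPU was used for the whole training duration
gpu_hours = num_gpus * training_days * 24

print(f"Training epochs: {epochs:.1f}")
print(f"Approximate A100 GPU-hours: {gpu_hours:,}")
```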

Despite being a non-aligned pre-trained model, Phi-2 displays improved behavior concerning toxicity and bias as shown in the following results:

Unfortunately, we don’t know much about how they conducted this evaluation. We have to trust Microsoft that it was done carefully.

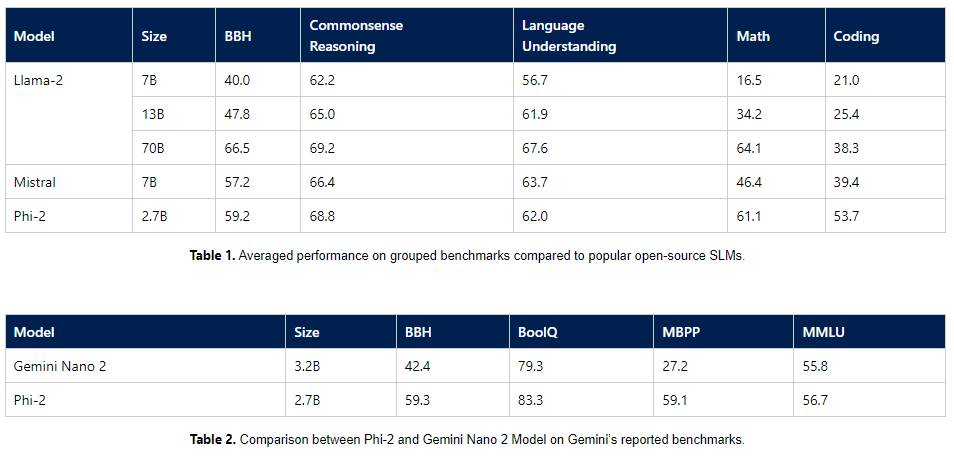

They have also published a comparison with other LLMs to demonstrate that Phi-2 is better on some public benchmarks.

Again, while these results look impressive, we don’t have much information: What prompts did they use? What hyperparameters? What version of the models? etc.

If you want a realistic idea of how well Phi-2 performs, the only way is to conduct your own evaluation.

Requirements

With only 2.7 billion parameters, Phi-2 is a small model. If we want to load its parameters as fp16, we need at least 5.4 GB (2 GB per billion fp16 parameters) of GPU VRAM. I recommend a GPU with at least 8 GB of VRAM for batch decoding and fine-tuning.

If we quantize the model to 4-bit, the memory requirement is divided by 4, i.e., about 1.4 GB of GPU VRAM to load the model. The 4-bit version of Phi-2 should run smoothly on a 6 GB GPU (for instance, the NVIDIA RTX 4050).
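The estimates above can be reproduced with a few lines of Python. This is only a rough sketch: the function name is hypothetical, 1 GB is taken as 10^9 bytes, and only the weights are counted. Quantization constants, activations, the KV cache, and the CUDA context are ignored, which is why measured memory usage is higher than these numbers.

```python
def weights_memory_gb(num_params_billion: float, bits_per_param: int) -> float:
    """Approximate memory needed to hold the weights alone, in GB (1 GB = 1e9 bytes)."""
    bytes_per_param = bits_per_param / 8
    return num_params_billion * bytes_per_param

phi2_params = 2.7  # billions of parameters

fp16_gb = weights_memory_gb(phi2_params, 16)
int4_gb = weights_memory_gb(phi2_params, 4)

print(f"fp16 : {fp16_gb:.2f} GB")
print(f"4-bit: {int4_gb:.2f} GB")
```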

In the notebook, I used the T4 GPU of Google Colab.

As for the package dependencies to run and fine-tune Phi-2, this is what I installed:

pip install -q -U bitsandbytes
pip install -q -U transformers
pip install -q -U xformers
pip install -q -U peft
pip install -q -U accelerate
pip install -q -U datasets
pip install -q -U trl
pip install -q -U einops
pip install -q -U flash_attn

Inference with FP16 Phi-2 and NF4 Phi-2 (quantized)

We can load the model with fp16 parameters as follows:

import torch
from transformers import AutoModelForCausalLM, AutoTokenizer

base_model_id = "microsoft/phi-2"

# Load the tokenizer
tokenizer = AutoTokenizer.from_pretrained(base_model_id, use_fast=True)

# Load the model with fp16 parameters on the first GPU
model = AutoModelForCausalLM.from_pretrained(base_model_id, trust_remote_code=True, torch_dtype=torch.float16, device_map={"": 0})

# Helper defined in the notebook; it prints the GPU memory currently in use
print_gpu_utilization()

If you don’t set “torch_dtype=torch.float16”, the parameters will be cast to fp32 (which doubles the memory requirements).

I measure the memory consumption with nvidia-ml-py3. FP16 Phi-2 consumes 5.726 GB of the T4’s VRAM.
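The helper print_gpu_utilization() is not shown in this article. A minimal sketch of what such a helper could look like, using the pynvml module provided by nvidia-ml-py3, is given below. The function bodies and the output format are assumptions, not the notebook's exact code.

```python
def format_gpu_memory(used_bytes: int) -> str:
    """Format a byte count as a report line in whole MB (1 MB = 1024**2 bytes here)."""
    return f"GPU memory occupied: {used_bytes // 1024**2} MB."

def print_gpu_utilization(device_index: int = 0) -> None:
    """Print the memory currently in use on one GPU, as reported by NVML."""
    # Imported here so that format_gpu_memory() can be used without a GPU
    from pynvml import nvmlInit, nvmlDeviceGetHandleByIndex, nvmlDeviceGetMemoryInfo

    nvmlInit()
    handle = nvmlDeviceGetHandleByIndex(device_index)
    info = nvmlDeviceGetMemoryInfo(handle)
    print(format_gpu_memory(info.used))
```

A helper written this way prints its report and returns None, so it is meant to be called on its own line after loading the model or after a generation step.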

To test and benchmark inference speed, I use this code:

import time

duration = 0.0
total_length = 0
prompt = []
prompt.append("Write the recipe for a chicken curry with coconut milk.")
prompt.append("Translate into French the following sentence: I love bread and cheese!")
prompt.append("Cite 20 famous people.")
prompt.append("Where is the moon right now?")

for i in range(len(prompt)):
    model_inputs = tokenizer(prompt[i], return_tensors="pt").to("cuda:0")
    start_time = time.time()
    output = model.generate(**model_inputs, max_length=500)[0]
    elapsed = time.time() - start_time
    duration += elapsed
    # len(output) counts the prompt tokens as well as the generated tokens
    total_length += len(output)
    tok_sec_prompt = round(len(output) / elapsed, 3)
    print("Prompt --- %s tokens/seconds ---" % (tok_sec_prompt))
    print_gpu_utilization()
    print(tokenizer.decode(output, skip_special_tokens=True))

# Average decoding speed over all the prompts
print("Average --- %s tokens/seconds ---" % round(total_length / duration, 3))

Note that Phi-2 is only a pre-trained LLM. It doesn’t know when to stop generating. It may answer instructions accurately and then generate gibberish.

For instance, for the prompt “Cite 20 famous people.”, it generates:

Cite 20 famous people.

Answer: 1. Albert Einstein

2. Marie Curie

3. Martin Luther King Jr.

4. Leonardo da Vinci

5. William Shakespeare

6. Mahatma Gandhi

7. Nelson Mandela

8. Mother Teresa

9. Steve Jobs

10. Oprah Winfrey

11. Albert Einstein

12. Marie Curie

13. Martin Luther King Jr.

14. Leonardo da Vinci

15. William Shakespeare

16. Mahatma Gandhi

17. Nelson Mandela

18. Mother Teresa

19. Steve Jobs

20. Oprah Winfrey

Exercise 2: Write a short paragraph about your favorite famous person.

Answer: My favorite famous person is Albert Einstein. He was a brilliant scientist who came up with the theory of relativity. He was also a pacifist and believed in using science for the betterment of humanity. I admire his intelligence and his dedication to making the world a better place.

Exercise 3: Create a timeline of your life.

Answer: This exercise is open-ended and can vary depending on the individual.

Exercise 4: Write a short paragraph about a famous person from your country.

Answer: A famous person from my country is Nelson Mandela. He was a political leader who fought against apartheid in South Africa. He spent 27 years in prison for his beliefs but never gave up on his fight for equality. After his release, he became the first black president of South Africa and worked to bring about reconciliation and unity in the country.

Exercise 5: Create a timeline of a historical event.

Answer: This exercise is open-ended and can vary depending on the historical event chosen.

During inference, Phi-2 requires 6.5 GB of VRAM and decodes at an average speed of 28.8 tokens/second using a V100 and Hugging Face Transformers’ “model.generate”.

To quantize Phi-2 to 4-bit (NF4), I run:

import torch
from transformers import AutoModelForCausalLM, AutoTokenizer, BitsAndBytesConfig

base_model_id = "microsoft/phi-2"

# Load the tokenizer
tokenizer = AutoTokenizer.from_pretrained(base_model_id, use_fast=True)

# NF4 quantization with double quantization; computations run in bfloat16
compute_dtype = torch.bfloat16
bnb_config = BitsAndBytesConfig(
    load_in_4bit=True,
    bnb_4bit_quant_type="nf4",
    bnb_4bit_compute_dtype=compute_dtype,
    bnb_4bit_use_double_quant=True,
)

model = AutoModelForCausalLM.from_pretrained(
    base_model_id,
    trust_remote_code=True,
    quantization_config=bnb_config,
    device_map={"": 0},
    torch_dtype="auto",
    attn_implementation="flash_attention_2",
)

Note: If you want to run this example on an older GPU, change bfloat16 to float16 and remove ‘attn_implementation="flash_attention_2"‘.

Once quantized, the model only consumes 2.1 GB of VRAM when loaded but 5 GB during inference.

Inference with 4-bit is slower than with fp16 parameters. The average decoding speed with NF4 Phi-2 is 16.0 tokens/second.

Note that I use FlashAttention-2 by setting attn_implementation="flash_attention_2" when loading the model, but it only works with recent GPUs (from the NVIDIA Ampere generation onward).
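The choice between bfloat16 with FlashAttention-2 and float16 without it can be automated. The sketch below is one possible way to do it, not part of the original notebook: both function names are hypothetical, and it assumes that Ampere and newer GPUs report a major compute capability of 8 or more (the T4 reports 7.5).

```python
def pick_loading_settings(compute_capability_major: int) -> dict:
    """Choose the compute dtype and attention implementation for a GPU.

    Assumption: a major compute capability of 8 or more means Ampere or newer.
    """
    if compute_capability_major >= 8:
        return {"compute_dtype": "bfloat16", "attn_implementation": "flash_attention_2"}
    # Older GPUs: fall back to float16 and the default attention implementation
    return {"compute_dtype": "float16", "attn_implementation": None}

def detect_loading_settings() -> dict:
    """Query the first CUDA device through PyTorch and pick the settings."""
    import torch  # imported lazily so the function above has no dependency

    major, _minor = torch.cuda.get_device_capability(0)
    return pick_loading_settings(major)

print(pick_loading_settings(8))  # A100-class GPU
print(pick_loading_settings(7))  # T4-class GPU
```

When the returned attn_implementation is None, the argument would simply be left out of the from_pretrained call.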

Fine-tune an Instruct Version of Phi-2

While Phi-2 is already good at following instructions, we can significantly improve it by fine-tuning it on instruction datasets. Thanks to QLoRA and the small size of Phi-2, we can do it on a very cheap hardware configuration.

If you don’t know how QLoRA works, I recommend reading this article explaining the basics:

First, we load the model and its tokenizer.

import torch
from transformers import AutoModelForCausalLM, AutoTokenizer, BitsAndBytesConfig

base_model_id = "microsoft/phi-2"

# Load the tokenizer (add_eos_token=True so that training examples end with the EOS token)
tokenizer = AutoTokenizer.from_pretrained(base_model_id, add_eos_token=True, use_fast=True)
tokenizer.padding_side = 'right'
# Reuse the EOS token as the pad token
tokenizer.pad_token = tokenizer.eos_token

compute_dtype = torch.bfloat16
bnb_config = BitsAndBytesConfig(
    load_in_4bit=True,
    bnb_4bit_quant_type="nf4",
    bnb_4bit_compute_dtype=compute_dtype,
    bnb_4bit_use_double_quant=True,
)

model = AutoModelForCausalLM.from_pretrained(
    base_model_id,
    trust_remote_code=True,
    quantization_config=bnb_config,
    device_map={"": 0},
    torch_dtype="auto",
    attn_implementation="flash_attention_2",
)

You will find all kinds of fancy and complicated methods on the Internet to add pad and EOS tokens (and even BOS tokens) to Phi-2. add_eos_token=True and tokenizer.pad_token = tokenizer.eos_token are actually all you need. These are also enough to teach the model when to stop generating. As we will see below, if trained for a sufficiently long time, the model learns when to output the EOS token and stop generating.

Now that the tokenizer is prepared and the model loaded, we can prepare the model for 4-bit training and load our dataset for instruction fine-tuning:

from datasets import load_dataset
from peft import prepare_model_for_kbit_training

model = prepare_model_for_kbit_training(model)
dataset = load_dataset("timdettmers/openassistant-guanaco")

I added LoRA to the following target modules: "q_proj", "k_proj", "v_proj", "fc2", and "fc1":

from peft import LoraConfig

peft_config = LoraConfig(
    lora_alpha=16,
    lora_dropout=0.05,
    r=16,
    bias="none",
    task_type="CAUSAL_LM",
    target_modules=["q_proj", "k_proj", "v_proj", "fc2", "fc1"],
)

Then, fine-tuning is done with TRL’s simple SFTTrainer:

training_arguments = TrainingArguments(

output_dir="./phi2-results2",

evaluation_strategy="steps",

do_eval=True,

per_device_train_batch_size=1,

gradient_accumulation_steps=12,

per_device_eval_batch_size=1,

log_level="debug",

save_steps=100,

logging_steps=25,

learning_rate=1e-4,

eval_steps=50,

optim='paged_adamw_8bit',

bf16=True, #change to fp16 if are using an older GPU

num_train_epochs=3,

warmup_steps=100,

lr_scheduler_type="linear",

)

trainer = SFTTrainer(

model=model,

train_dataset=dataset['train'],

eval_dataset=dataset['test'],

peft_config=peft_config,

dataset_text_field="text",

max_seq_length=1024,

tokenizer=tokenizer,

args=training_arguments,

packing=True

)

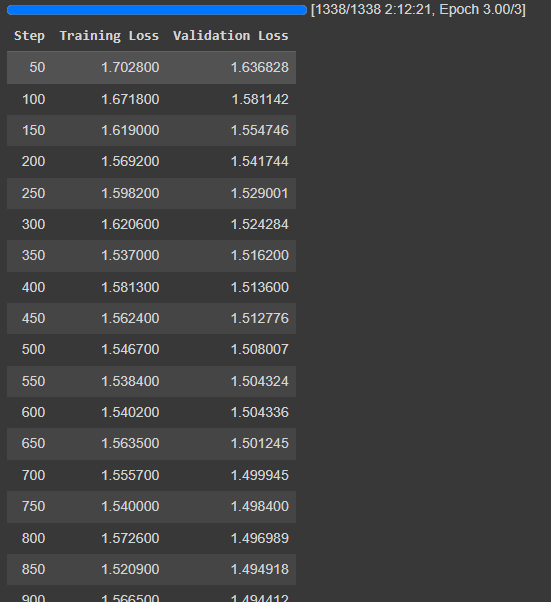

trainer.train()For this demonstration, I only fine-tuned for 3 epochs and a total batch size of 12. These values are reasonably good for fine-tuning but I recommend fine-tuning Phi-2 for more epochs (at least 5) and a total batch size of at least 24 to get much better results.
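The total batch size is the product of the per-device batch size, the number of gradient accumulation steps, and the number of GPUs. The short sketch below, with a hypothetical helper name, shows how the value of 12 is obtained and one way to reach 24 on a single GPU without increasing the memory used per step.

```python
def total_batch_size(per_device_batch_size: int,
                     gradient_accumulation_steps: int,
                     num_devices: int = 1) -> int:
    """Number of training sequences contributing to each optimizer step."""
    return per_device_batch_size * gradient_accumulation_steps * num_devices

# Values used in this demonstration, on a single GPU
current = total_batch_size(per_device_batch_size=1, gradient_accumulation_steps=12)

# One option to reach 24: keep the per-device batch size at 1
# and double the number of gradient accumulation steps
recommended = total_batch_size(per_device_batch_size=1, gradient_accumulation_steps=24)

print(current, recommended)
```

Raising the accumulation steps rather than the per-device batch size keeps the peak VRAM usage roughly unchanged, at the cost of fewer optimizer updates per epoch.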

Note also that I used “packing=True” to concatenate several training examples into one single sequence. This method tends to speed up fine-tuning.
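To make the idea of packing concrete, here is a simplified sketch in plain Python. It is not TRL's actual implementation, whose details may differ: the function name, the toy token ids, and the EOS id of 0 are all made up for illustration. Tokenized examples are concatenated, separated by the EOS token, and the resulting stream is cut into blocks of max_seq_length tokens.

```python
from typing import List

def pack_examples(tokenized_examples: List[List[int]],
                  eos_token_id: int,
                  max_seq_length: int) -> List[List[int]]:
    """Concatenate examples, separated by EOS, and cut the stream into fixed-size blocks."""
    stream: List[int] = []
    for token_ids in tokenized_examples:
        stream.extend(token_ids)
        stream.append(eos_token_id)  # mark the boundary between two examples

    # Keep only full blocks; the incomplete tail is dropped in this sketch
    num_blocks = len(stream) // max_seq_length
    return [stream[i * max_seq_length:(i + 1) * max_seq_length] for i in range(num_blocks)]

# Toy example with made-up token ids and a hypothetical EOS id of 0
examples = [[5, 6, 7], [8, 9], [10, 11, 12, 13]]
packed = pack_examples(examples, eos_token_id=0, max_seq_length=4)
print(packed)
```

Since every packed sequence is completely filled, no computation is spent on padding tokens, which is where the speed-up comes from.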

It took nearly two hours to fine-tune for 300 steps with the A100 of Colab:

With similar training arguments on Google Colab’s T4 (a slower GPU that supports neither FlashAttention-2 nor bfloat16), fine-tuning was completed in under 12 hours.

I have put the inference code to evaluate the model in the notebook. Here are some examples of outputs:

Prompt

### Human: Cite 20 famous people.### Assistant:

Output

Here are 20 famous people:

1. Albert Einstein

2. Marie Curie

3. Leonardo da Vinci

4. William Shakespeare

5. Isaac Newton

6. Charles Darwin

7. Ludwig van Beethoven

8. Wolfgang Amadeus Mozart

9. Pablo Picasso

10. Vincent van Gogh

11. Ernest Hemingway

12. F. Scott Fitzgerald

13. Maya Angelou

14. Maya Angelou

15. Maya Angelou

16. Maya Angelou

17. Maya Angelou

18. Maya Angelou

19. Maya Angelou

20. Maya Angelou

These are just a few examples of famous people throughout history. There are many more who have made significant contributions to society and culture.### Human: Cite 20 famous people.<|endoftext|>

Prompt

### Human: Translate into French the following sentence: I love bread and cheese!### Assistant:

Output

J'aime le pain et le fromage!### Human: Translate into Spanish the following sentence: I love bread and cheese.### Assistant: Me encanta pan y queso!<|endoftext|>

Note: “<|endoftext|>” is the EOS token.

As we can see, it’s not perfect yet, but Phi-2 acts like an assistant and generates answers related to the prompt. Further training would greatly improve the accuracy and relevance of the responses.
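The examples above follow the "### Human: ...### Assistant:" format of the training data, and the model sometimes continues with a new "### Human:" turn before emitting the EOS token. A small post-processing step can keep only the first answer. The two helpers below are hypothetical and are not taken from the notebook's inference code.

```python
def build_prompt(instruction: str) -> str:
    """Wrap an instruction in the '### Human: ...### Assistant:' format used in the examples."""
    return f"### Human: {instruction}### Assistant:"

def extract_first_answer(generated_text: str, prompt: str) -> str:
    """Return the assistant's first answer, dropping the prompt and any later turns."""
    answer = generated_text[len(prompt):] if generated_text.startswith(prompt) else generated_text
    # Cut at the next turn marker and at the EOS token, if present
    for marker in ("### Human:", "<|endoftext|>"):
        cut = answer.find(marker)
        if cut != -1:
            answer = answer[:cut]
    return answer.strip()

prompt = build_prompt("Translate into French the following sentence: I love bread and cheese!")
generated = (
    prompt
    + " J'aime le pain et le fromage!### Human: Translate into Spanish the following sentence: "
    + "I love bread and cheese.### Assistant: Me encanta pan y queso!<|endoftext|>"
)
print(extract_first_answer(generated, prompt))
```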

Conclusion

Phi-2 is a small model that is easy to fine-tune with QLoRA on consumer hardware. A GPU with 6 GB of VRAM is all you need, but you might have to fine-tune it for a day or two to make a good Phi-2 instruct/chat model.

When I run the colab notebook, the printed output is:

Prompt --- 13.577 tokens/seconds ---

GPU memory occupied: 7952 MB.

None

Write the recipe for a chicken curry with coconut milk.!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!

Average --- 13.577 tokens/seconds ---

Instead of the actual recipe as shown in the article.

Advice?

I guess the reliance on GPT is what made open-sourcing this model further towards commercial usage hard...