QLoRA: Fine-Tune a Large Language Model on Your GPU

Fine-tuning models with billions of parameters on consumer hardware

Most large language models (LLMs) are far too large to fine-tune on consumer hardware. For example, fine-tuning a 70-billion-parameter model typically requires a multi-GPU node, such as one with 8 NVIDIA H100 GPUs. That is an extremely costly setup that can run into hundreds of thousands of dollars. In practice, this means renting GPUs from a cloud provider, where costs can still escalate quickly to unaffordable levels.

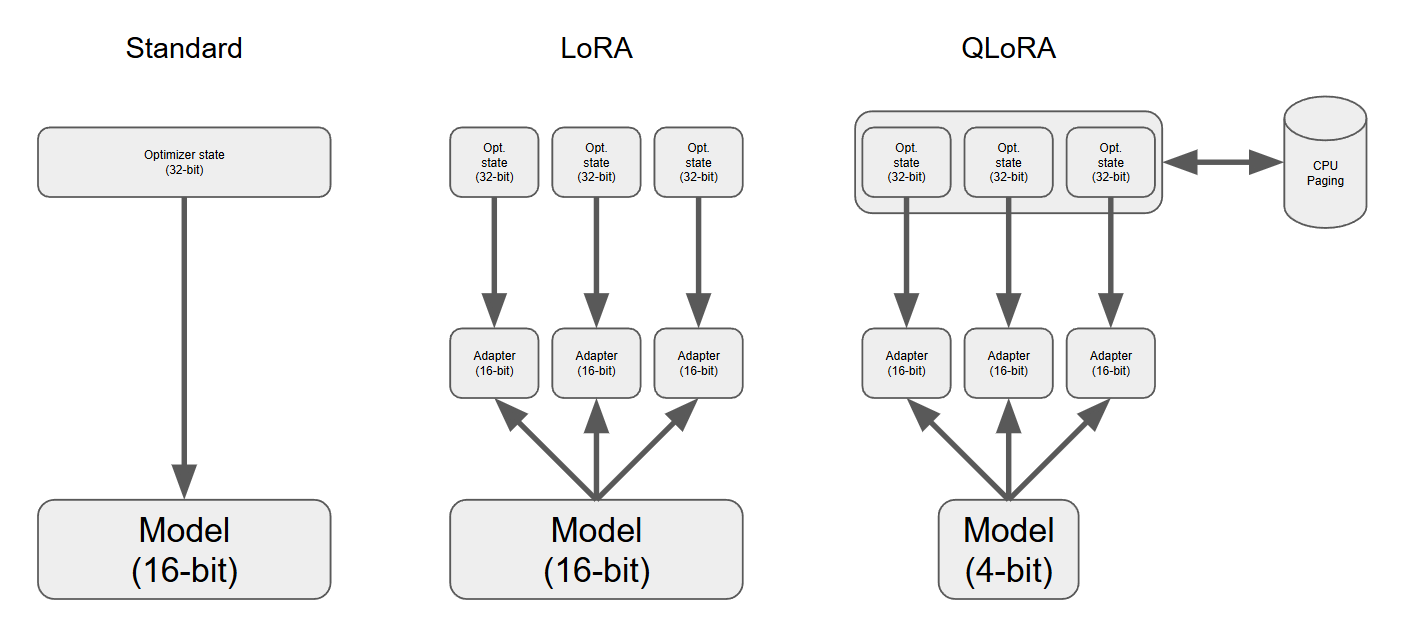

With QLoRA (Dettmers et al., 2023), you can do it with a single GPU instead of eight.
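To see why a single GPU becomes plausible, here is a back-of-the-envelope estimate of the memory needed just to store the weights of a 70B model. It is a rough sketch that assumes 16 bits per parameter for bf16 and 4 bits per parameter for QLoRA's 4-bit quantized base model, and it deliberately ignores activations, LoRA adapters, optimizer state, and the small overhead of the quantization constants. The function name `weight_memory_gb` is hypothetical.

```python
# Rough weight-only memory estimate: parameters x bits per parameter.
# Assumption: ignores activations, LoRA adapters, optimizer state,
# and the small overhead of 4-bit quantization constants.
def weight_memory_gb(n_params: float, bits_per_param: float) -> float:
    """Return the memory needed to store the weights, in decimal GB (1e9 bytes)."""
    return n_params * bits_per_param / 8 / 1e9


n_params = 70e9  # a 70-billion-parameter model
bf16_gb = weight_memory_gb(n_params, 16)  # 16-bit weights
nf4_gb = weight_memory_gb(n_params, 4)    # 4-bit quantized weights

print(f"bf16 weights:  {bf16_gb:.0f} GB")
print(f"4-bit weights: {nf4_gb:.0f} GB")
```

Under these assumptions, the bf16 weights alone take about 140 GB, more than a single 80 GB H100 can hold, while the 4-bit weights take about 35 GB, which leaves headroom on one such GPU for the rest of the training state.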

In this article, we won’t fine-tune a massive 70B model but a smaller one that would still require expensive GPUs without a parameter-efficient fine-tuning method like QLoRA. I’ll introduce QLoRA, explain how it works, and demonstrate how to use it to fine-tune a 4-billion-parameter Qwen3 base model directly on your GPU.
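Why would even a 4B model need expensive GPUs? A common rule of thumb for full fine-tuning with AdamW in mixed precision is about 16 bytes per parameter before activations: bf16 weights (2 bytes), bf16 gradients (2 bytes), fp32 master weights (4 bytes), and two fp32 Adam moments (8 bytes). The sketch below compares that with the 4-bit frozen base model used by QLoRA. The byte counts, the exact 4-billion parameter count, and the helper name `estimate_gb` are simplifying assumptions, not measurements.

```python
# Assumed rule of thumb for full fine-tuning with AdamW in mixed precision:
# bf16 weights (2 B) + bf16 gradients (2 B) + fp32 master weights (4 B)
# + two fp32 Adam moments (8 B) = 16 bytes per parameter, before activations.
FULL_FT_BYTES_PER_PARAM = 2 + 2 + 4 + 8

# QLoRA keeps the base model frozen in 4 bits (0.5 bytes per parameter);
# LoRA adapters, their optimizer state, and activations come on top of this.
QLORA_BASE_BYTES_PER_PARAM = 0.5

RTX_4090_GB = 24  # memory of an RTX 4090


def estimate_gb(n_params: float, bytes_per_param: float) -> float:
    """Return n_params * bytes_per_param in decimal GB (1e9 bytes)."""
    return n_params * bytes_per_param / 1e9


n_params = 4e9  # treat the model as exactly 4 billion parameters
full_gb = estimate_gb(n_params, FULL_FT_BYTES_PER_PARAM)
qlora_gb = estimate_gb(n_params, QLORA_BASE_BYTES_PER_PARAM)

print(f"Full fine-tuning:  ~{full_gb:.0f} GB (fits in 24 GB: {full_gb <= RTX_4090_GB})")
print(f"QLoRA frozen base: ~{qlora_gb:.0f} GB (fits in 24 GB: {qlora_gb <= RTX_4090_GB})")
```

With these assumptions, full fine-tuning needs roughly 64 GB, well beyond a 24 GB consumer card, while the quantized base model needs only about 2 GB, leaving most of the GPU's memory for the LoRA adapters and activations.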

Note: I ran all the code in this post on an RTX 4090 from RunPod (referral link), but you can achieve the same results using a free Google Colab instance. If your GPU has less memory, you can simply choose a smaller LLM.

Last update: August 10th, 2025