Fine-tune Quantized Llama 2 on Your GPU with QA-LoRA

Perfectly merge your fine-tuned adapters with quantized LLMs

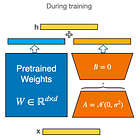

QA-LoRA is a new approach for fine-tuning “quantization-aware” LoRA adapters on top of quantized LLMs. I wrote a review of QA-LoRA in this article:

Now that we know how it works, this tutorial shows how to fine-tune Llama 2, quantized with GPTQ, using QA-LoRA. I will also show you how to merge the fine-tuned adapter with the quantized model.

QA-LoRA is still a very young project. I had to make two small corrections to the code to get it working with Llama 2. I released a patch, along with an adapter fine-tuned with QA-LoRA for Llama 2 quantized to 4-bit with AutoGPTQ.

Here is the notebook to reproduce my fine-tuning and merging using QA-LoRA:

Since we will experiment with LoRA and Llama 2 quantized with GPTQ, I recommend reading these two articles before this one: