Hi Everyone,

In this edition of The Weekly Kaitchup:

Gemma 3n: The Matformer for On-Device Language Models

Devstral: A Very Good Open Model for Coding Tasks

Nemotron Nano 4B: Memory-Efficient Long Context and Reasoning

Gemma 3n: The Matformer for On-Device Language Models

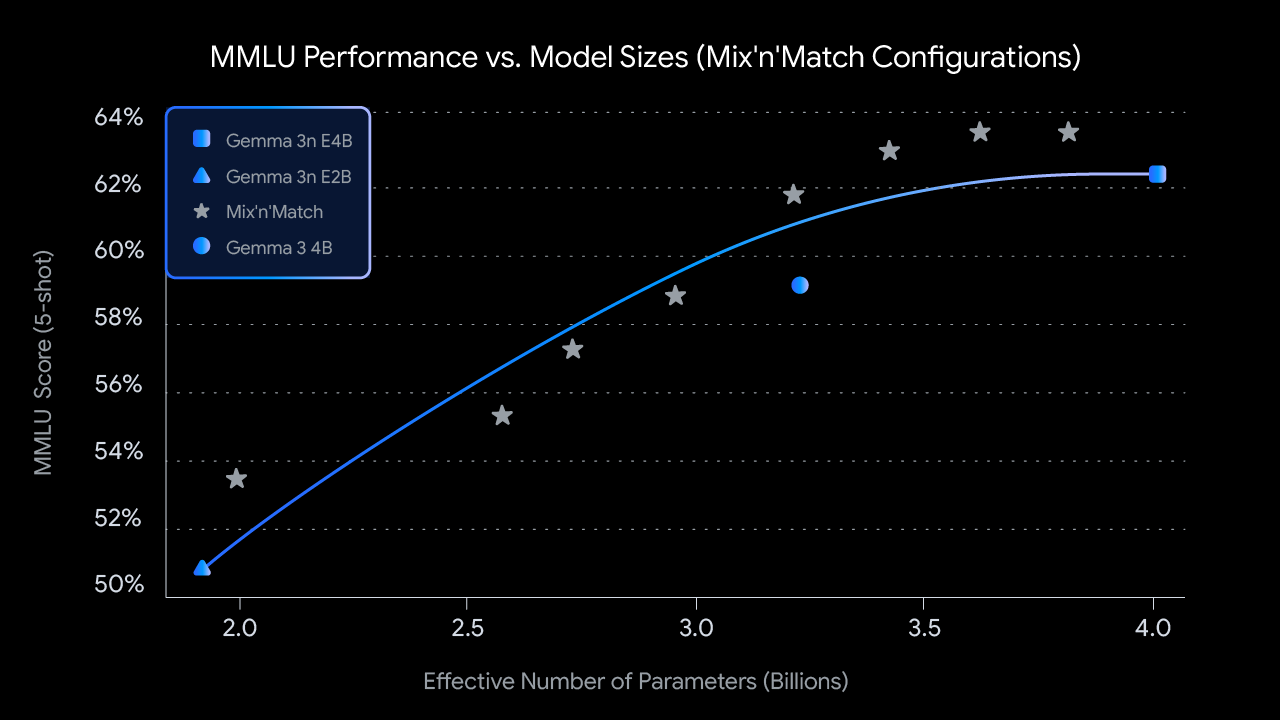

Gemma 3n is Google's new family of models designed primarily for efficient on-device use on smartphones, tablets, and laptops. It introduces architectural improvements through MatFormer, optimized specifically for mobile and desktop hardware and co-developed with chipmakers such as Qualcomm, MediaTek, and Samsung.
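MatFormer (Matryoshka Transformer) nests smaller models inside a larger one. In each feed-forward block, a smaller sub-model uses only the first neurons of the hidden layer, so one set of trained weights can serve several model sizes. The plain-Python sketch below illustrates only this nesting for a single block, with made-up weights. It leaves out the joint training that makes every prefix useful, and it is not Gemma 3n's actual implementation.

```python
from typing import List

Matrix = List[List[float]]
Vector = List[float]


def relu(v: Vector) -> Vector:
    return [max(0.0, x) for x in v]


def nested_ffn(x: Vector, w_in: Matrix, w_out: Matrix, width: int) -> Vector:
    """Feed-forward block that uses only the first `width` hidden neurons.

    w_in has one row per hidden neuron (hidden_dim x d_model).
    w_out has one row per output dimension (d_model x hidden_dim).
    """
    hidden_dim = len(w_in)
    if not 1 <= width <= hidden_dim:
        raise ValueError(f"width must be in [1, {hidden_dim}]")
    # Up-projection restricted to the nested prefix of hidden neurons.
    h = relu([sum(w * xi for w, xi in zip(row, x)) for row in w_in[:width]])
    # Down-projection reads only the matching first `width` columns of w_out.
    return [sum(w * hi for w, hi in zip(row[:width], h)) for row in w_out]


# Toy weights (made up): d_model = 2, hidden_dim = 4.
w_in = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [-1.0, 1.0]]
w_out = [[1.0, 1.0, 1.0, 1.0], [1.0, -1.0, 0.5, 2.0]]
x = [2.0, 3.0]

if __name__ == "__main__":
    print(nested_ffn(x, w_in, w_out, width=2))  # small sub-model
    print(nested_ffn(x, w_in, w_out, width=4))  # full model
```

Both calls share the same weight matrices: the small sub-model is literally a slice of the full one, which is what lets a single checkpoint be served at different sizes.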

The main points:

Memory efficiency: A technique called Per-Layer Embeddings (PLE) reduces RAM requirements. Although the models technically have 5B and 8B parameters, their effective memory footprint is closer to that of smaller models (about 2–3 GB of RAM).

Multimodal support: They are “omni” input models, i.e., they accept audio, text, images, and video inputs. Audio features include speech recognition and translation.

Multilingual improvements: The models perform better than previous Gemma models of similar size, especially in Japanese, German, Korean, Spanish, and French.

These models serve as a preview for upcoming Google models, including Gemini Nano.

For now, the models are not available in the Hugging Face format, so they can’t easily be tried.

Devstral: A Very Good Open Model for Coding Tasks

Devstral is a new open¹ LLM developed specifically for software engineering tasks. Created by Mistral AI in collaboration with All Hands AI, it significantly improves performance on realistic coding problems, particularly those that typical LLMs struggle with, such as tracking context across large codebases or finding subtle bugs.

Devstral is evaluated on SWE-Bench Verified, a benchmark of real-world GitHub issues, where it scores 46.8%, surpassing the best existing open-source models by more than 6 percentage points. According to Mistral AI’s evaluation, Devstral even outperforms much larger models and several closed-source competitors (e.g., GPT-4.1-mini) when tested with comparable agent scaffolds.
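SWE-Bench Verified reports a resolve rate: the share of its 500 human-validated instances for which the model's patch makes the instance's tests pass. The short sketch below converts percentages back into issue counts, which makes the gaps easier to read: 46.8% corresponds to 234 of the 500 issues, and a 6-point lead to 30 issues. The helper names are hypothetical and not part of the SWE-Bench harness.

```python
from typing import Mapping

# SWE-Bench Verified is a human-validated subset of 500 SWE-Bench instances.
SWE_BENCH_VERIFIED_SIZE = 500


def resolve_rate(results: Mapping[str, bool]) -> float:
    """Percentage of instances whose generated patch made the instance's tests pass.

    `results` maps a (hypothetical) instance ID to True if the issue was resolved.
    """
    if not results:
        raise ValueError("results must not be empty")
    return 100.0 * sum(results.values()) / len(results)


def points_to_issues(points: float, total: int = SWE_BENCH_VERIFIED_SIZE) -> int:
    """Convert a score, or a gap between scores, in percentage points into a number of issues."""
    return round(points / 100 * total)


if __name__ == "__main__":
    toy = {"repo-1": True, "repo-2": False, "repo-3": True, "repo-4": True}
    print(resolve_rate(toy))        # 75.0
    print(points_to_issues(46.8))   # 234
    print(points_to_issues(6.0))    # 30
```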

The model has 23.6B parameters and is available under the Apache 2.0 license.

Note: Mistral AI describes Devstral as an “agentic” LLM. “Agentic” has become a marketing term that means less and less. Expect most LLMs released from now on to be described as “agentic”.

Nemotron Nano 4B: Memory-Efficient Long Context and Reasoning

NVIDIA's new Llama-3.1-Nemotron-Nano-4B-v1.1 is a compact counterpart to their previous 8B reasoning model. According to NVIDIA, it derives from Llama 3.1 8B, compressed down to 4B parameters through aggressive pruning and knowledge distillation with their Minitron method, and then fine-tuned.

The supervised fine-tuning (SFT) stage, which targets math, code, reasoning, and tool calling, followed by reinforcement learning with Reward-aware Preference Optimization (RPO), indicates that the model is carefully tuned for instruction following and reasoning-intensive workloads.

It supports a long context (128k tokens), which makes it suitable for use cases involving lengthy documents, large codebases, or complex retrieval-augmented generation (RAG) tasks. Additionally, “reasoning” can be switched on and off, as with Qwen3.
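According to NVIDIA's model card, reasoning is toggled through the system prompt, using “detailed thinking on” or “detailed thinking off”. Below is a minimal sketch that builds the chat messages under that assumption. `build_messages` is a hypothetical helper, and the exact strings should be checked against the model card before use.

```python
from typing import Dict, List

Message = Dict[str, str]

# System prompts assumed from NVIDIA's Llama-Nemotron convention (see the model card).
REASONING_ON = "detailed thinking on"
REASONING_OFF = "detailed thinking off"


def build_messages(user_prompt: str, reasoning: bool) -> List[Message]:
    """Build a chat conversation whose system prompt switches reasoning on or off."""
    system_prompt = REASONING_ON if reasoning else REASONING_OFF
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_prompt},
    ]


if __name__ == "__main__":
    for flag in (True, False):
        print(build_messages("How many primes are below 20?", reasoning=flag))
```

The resulting list can be passed to a Hugging Face Transformers `text-generation` pipeline or to `tokenizer.apply_chat_template`. For comparison, Qwen3 exposes its switch as the `enable_thinking` argument of `apply_chat_template` rather than through the system prompt.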

If the benchmark scores truly reflect its capabilities, the 4B model effectively makes the 8B version useless.

The model can run on small GPUs with 12 GB of VRAM, or on even less memory if quantized.
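The 12 GB figure is consistent with a back-of-the-envelope estimate: about 4B parameters in 16-bit precision take roughly 8 GB, which leaves room for the KV cache and activations. The sketch below assumes a nominal 4B parameters taken from the model name. The attention configuration passed to `kv_cache_gb` (32 layers, 8 KV heads, head dimension 128) is hypothetical, used only for illustration, and is not the model's published configuration.

```python
def weight_memory_gb(n_params: float, bits_per_param: float) -> float:
    """Memory taken by the weights alone, in GB (1 GB = 1e9 bytes)."""
    return n_params * bits_per_param / 8 / 1e9


def kv_cache_gb(n_layers: int, n_kv_heads: int, head_dim: int, seq_len: int,
                bytes_per_value: int = 2, batch_size: int = 1) -> float:
    """KV-cache size in GB: one key and one value vector per layer, KV head, and token."""
    n_values = 2 * n_layers * n_kv_heads * head_dim * seq_len * batch_size
    return n_values * bytes_per_value / 1e9


if __name__ == "__main__":
    N_PARAMS = 4e9  # nominal size from the model name
    for bits in (16, 8, 4):
        print(f"{bits}-bit weights: {weight_memory_gb(N_PARAMS, bits):.1f} GB")
    # Hypothetical attention configuration, for illustration only.
    print(f"KV cache, 8k tokens: {kv_cache_gb(32, 8, 128, 8192):.2f} GB")
```

This prints 8.0, 4.0, and 2.0 GB for the weights, and 1.07 GB for the hypothetical 8k-token KV cache. Because the KV cache grows linearly with sequence length, using the full 128k-token context needs considerably more memory than short prompts do.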

The Salt

The Salt is my other newsletter that takes a more scientific approach. In The Salt, I primarily feature short reviews of recent papers (for free), detailed analyses of noteworthy publications, and articles centered on LLM evaluation.

I reviewed the Qwen3 technical report.

In The Weekly Salt, I also reviewed:

⭐AdaCoT: Pareto-Optimal Adaptive Chain-of-Thought Triggering via Reinforcement Learning

AdaptThink: Reasoning Models Can Learn When to Think

Thinkless: LLM Learns When to Think

Support The Kaitchup by becoming a Pro subscriber:

What You'll Get

Priority Support – Fast, dedicated assistance whenever you need it to fine-tune or optimize your LLM/VLM. I answer all your questions!

Lifetime Access to All the AI Toolboxes – Repositories containing Jupyter notebooks optimized for LLMs and providing implementation examples of AI applications.

Full Access to The Salt – Dive deeper into exclusive research content. Already a paid subscriber to The Salt? You’ll be refunded for the unused time!

Early Access to Research – Be the first to access groundbreaking studies and models by The Kaitchup.

30% Discount for Group Subscriptions – Perfect for teams and collaborators.

The Kaitchup’s Book – A comprehensive guide to LLM fine-tuning. Already bought it? You’ll be fully refunded!

All Benefits from Regular Kaitchup Subscriptions – Everything you already love, plus more. Already a paid subscriber? You’ll be refunded for the unused time!


That’s all for this week.

If you like reading The Kaitchup, consider sharing it with friends and coworkers. There is a 20% discount for group subscriptions (30% for Pro subscribers).

Have a nice weekend!

¹ Mistral AI calls it “open-source”. It isn’t: they released neither the training pipeline nor the training datasets.