Hi Everyone,

In this edition of The Weekly Kaitchup:

vLLM now supports LLM quantization with AWQ

A new approach to training LLMs without a head for faster and better training

Intel’s quantized versions of Llama 2 in the ONNX format

The Kaitchup now has 585 subscribers. Thanks a lot for your support!

If you are a free subscriber, consider upgrading to paid to access all the notebooks and articles. There is a 7-day trial that you can cancel anytime.

If you are a monthly paid subscriber, consider switching to a yearly subscription to get a 17% discount (2 months free)!

vLLM now supports activation-aware weight quantization (AWQ)

vLLM is a project using PagedAttention (and various other optimizations) to significantly speed up the attention computation in Transformer models. In a previous article, I presented vLLM and showed how to use it to get 24x faster inference:

When I published this article, vLLM was still a very young project full of promise. Since then, it has kept delivering and is now well funded (a16z).

Their roadmap is very ambitious, but I’m especially eager to try their latest release, which supports activation-aware weight quantization (AWQ).

I have never written about AWQ in The Kaitchup, but it’s a clever and intuitive quantization algorithm that is faster and better than GPTQ for inference. AWQ is not very popular yet since it’s younger than GPTQ and not well supported by other Python libraries such as Hugging Face transformers.

Using AWQ and vLLM means that you can have both fast and memory-efficient inference.
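As a rough idea of what this looks like in practice, here is a minimal sketch of loading an AWQ checkpoint with vLLM's offline inference API. The model ID is only an assumption for illustration (any AWQ-quantized checkpoint from the Hugging Face hub should work the same way), and the sampling values are arbitrary. vLLM needs a CUDA GPU and a separate install, so the import is done lazily inside the function.

```python
def awq_engine_kwargs(model_id: str) -> dict:
    """Keyword arguments telling vllm.LLM to load an AWQ-quantized checkpoint."""
    return {"model": model_id, "quantization": "awq"}


def generate(prompts, model_id="TheBloke/Llama-2-7b-Chat-AWQ"):
    """Generate one completion per prompt with an AWQ model served by vLLM.

    The default model_id is an assumption used for illustration only.
    """
    # Imported here because vLLM requires a GPU and `pip install vllm`.
    from vllm import LLM, SamplingParams

    llm = LLM(**awq_engine_kwargs(model_id))
    params = SamplingParams(temperature=0.8, top_p=0.95, max_tokens=64)
    outputs = llm.generate(prompts, params)
    # Each result holds one or more candidate completions; keep the first.
    return [out.outputs[0].text for out in outputs]

# Example call (on a machine with a GPU and vLLM installed):
# generate(["The capital of France is"])
```

The only AWQ-specific part is the `quantization="awq"` argument. Everything else is the usual vLLM workflow.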

I’ll soon publish a tutorial on using AWQ with vLLM.

Headless LLM for faster and better training

A very impressive and surprising work by Nathan Godey, Éric de la Clergerie, and Benoît Sagot (Inria, Sorbonne Université):

Headless Language Models: Learning without Predicting with Contrastive Weight Tying

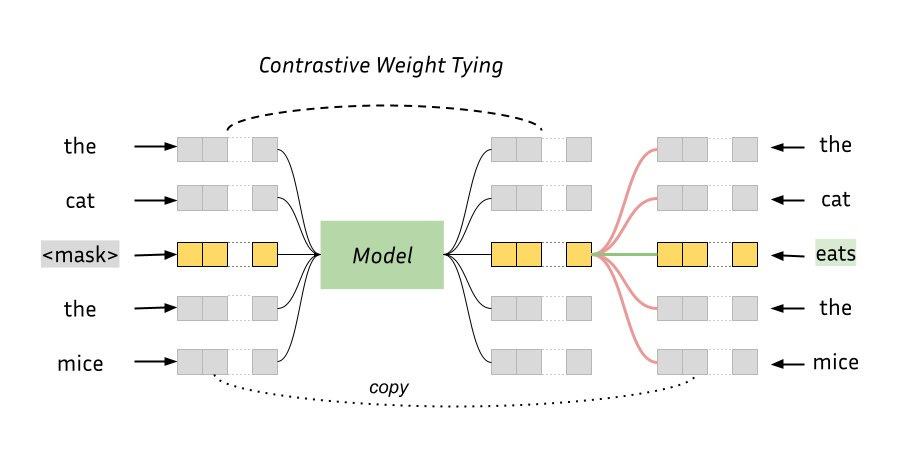

LLMs such as BERT learn to predict masked input tokens. They tie the weights of the language model head to the input embeddings, as follows:

This work proposes to remove the language model head predicting tokens. Instead, the LLM learns to recover masked input embeddings.

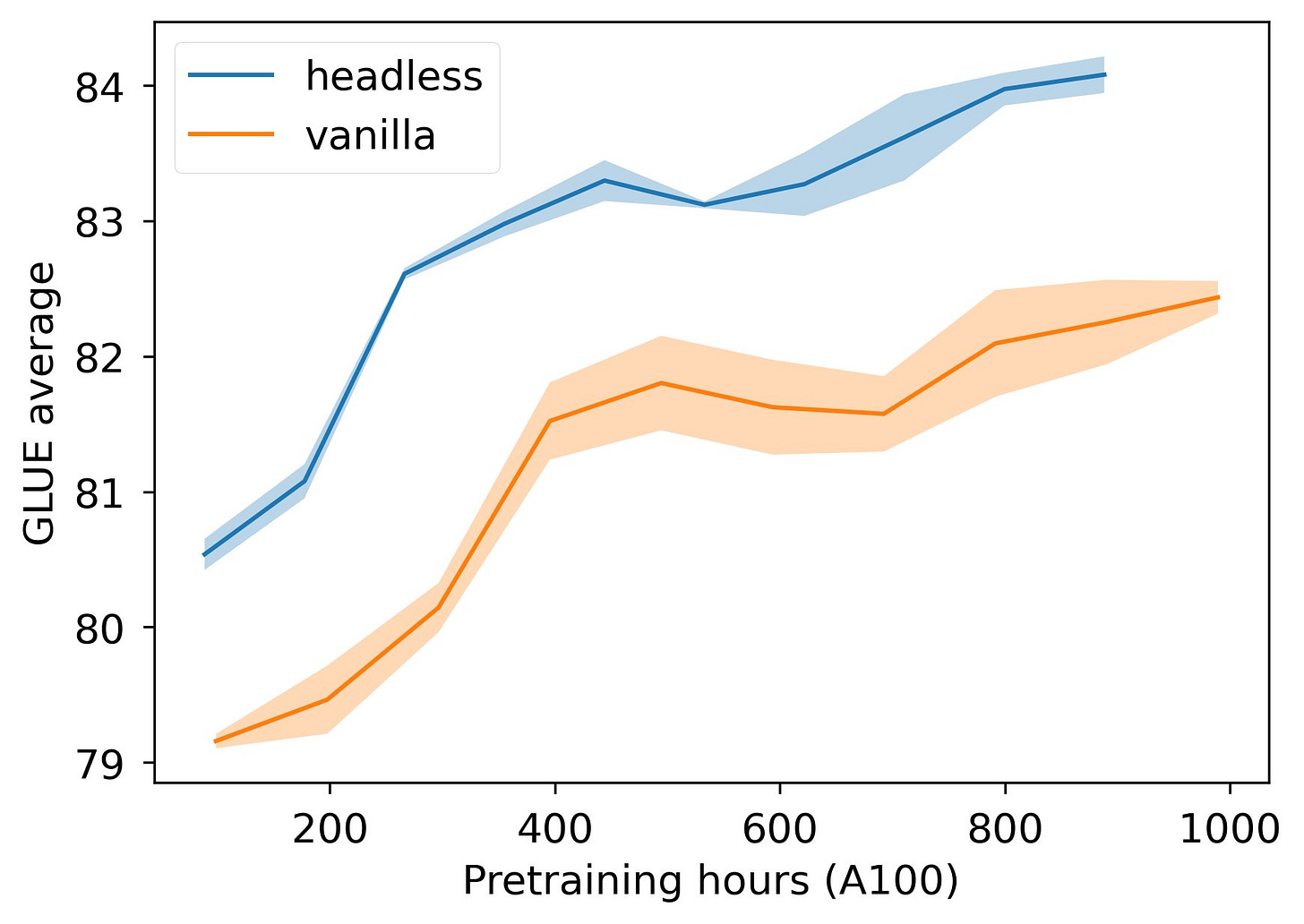

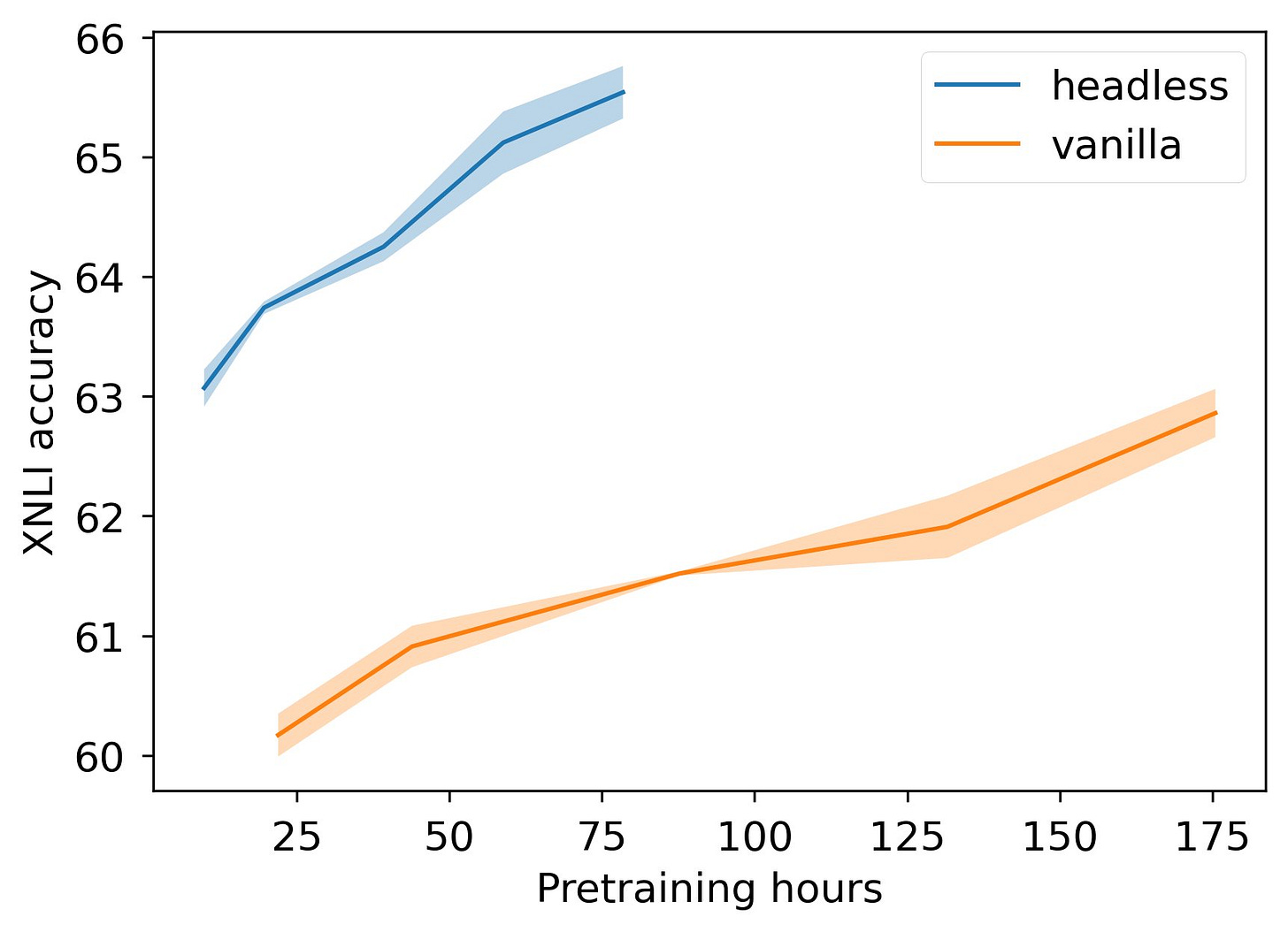
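To make the idea more concrete, here is a small pure-Python sketch of a contrastive objective of this kind: each output vector should be closer to the input embedding of its own target token than to the embeddings of the other targets in the batch. This is my simplified reading and not the authors' code. The function names, the dot-product similarity, and the absence of a temperature are all assumptions.

```python
import math


def dot(u, v):
    """Dot product of two vectors given as lists of floats."""
    return sum(a * b for a, b in zip(u, v))


def contrastive_loss(outputs, target_embeddings):
    """InfoNCE-style loss with in-batch negatives.

    outputs[i] is the model's output vector at a masked position and
    target_embeddings[i] is the input embedding of the token to recover.
    The embeddings of the other targets in the batch act as negatives.
    """
    total = 0.0
    for i, out in enumerate(outputs):
        scores = [dot(out, emb) for emb in target_embeddings]
        # Log-sum-exp with max subtraction for numerical stability.
        top = max(scores)
        log_norm = top + math.log(sum(math.exp(s - top) for s in scores))
        # Negative log-probability of picking the correct embedding.
        total += log_norm - scores[i]
    return total / len(outputs)


embeddings = [[1.0, 0.0], [0.0, 1.0]]
aligned = [[5.0, 0.0], [0.0, 5.0]]     # outputs point at the right embeddings
misaligned = [[0.0, 5.0], [5.0, 0.0]]  # outputs point at the wrong ones
print(contrastive_loss(aligned, embeddings) < contrastive_loss(misaligned, embeddings))
```

Note that no projection onto the whole vocabulary is computed here, only similarities between vectors already in the batch, which is where the savings in compute and memory would come from.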

With this new training strategy, LLMs learn faster and reach better results. On the GLUE and XNLI tasks, the results presented by the authors are impressive:

Fewer pre-training hours means cheaper pre-training, thanks to much faster training steps (15% to 60% faster). The authors also claim that it requires less memory.

If these results are confirmed by other research institutions, this could become our new standard for training LLMs. It works for both encoder-only and causal language models.

They have also released a model trained with this strategy:

They plan to release their code. I’ll try it and publish my experiments here.

Intel’s quantized versions of Llama 2

Intel quantized Llama 2 models to 4-bit (INT4) precision and released them in the ONNX format, using Intel Neural Compressor.

The quantization uses the round-to-nearest (RTN) algorithm.
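RTN is the simplest quantization scheme: scale the weights, round each one to the nearest integer in the target range, and keep the scale to map them back. The sketch below is a generic, symmetric version applied to one small group of weights. It is not Intel's implementation, and the function names and example values are mine.

```python
def rtn_quantize(weights, bits=4):
    """Symmetric round-to-nearest quantization of one group of weights."""
    qmax = 2 ** (bits - 1) - 1   # 7 for INT4
    qmin = -(2 ** (bits - 1))    # -8 for INT4
    max_abs = max(abs(w) for w in weights)
    # One scale per group: the largest weight maps to qmax.
    scale = max_abs / qmax if max_abs > 0 else 1.0
    quantized = [min(qmax, max(qmin, round(w / scale))) for w in weights]
    return quantized, scale


def rtn_dequantize(quantized, scale):
    """Map the integers back to approximate floating-point weights."""
    return [q * scale for q in quantized]


weights = [0.12, -0.53, 0.98, -0.05, 0.31]
quantized, scale = rtn_quantize(weights)
restored = rtn_dequantize(quantized, scale)
for w, q, r in zip(weights, quantized, restored):
    print(f"{w:+.2f} -> {q:+d} -> {r:+.3f}")
```

RTN needs no calibration data, which makes it fast to apply, but each weight is rounded independently with no attempt to compensate for the error it introduces.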

The models are available on the Hugging Face hub:

Intel/Llama-2-7b-hf-onnx-int4: Compression from 26 GB (FP32) to 11 GB (INT4), i.e., 58% smaller

Intel/Llama-2-13b-hf-onnx-int4: Compression from 49 GB (FP32) to 21 GB (INT4), i.e., 57% smaller

Intel/Llama-2-70b-hf-onnx-int4: Compression from 257 GB (FP32) to 41 GB (INT4), i.e., 84% smaller
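The percentages above are simply one minus the ratio of the two sizes. A quick way to check them, using the sizes listed (the helper name is mine):

```python
def size_reduction_percent(original_gb: float, compressed_gb: float) -> float:
    """Return how much smaller the compressed model is, in percent."""
    return (1 - compressed_gb / original_gb) * 100


# Sizes in GB as listed above: (FP32, INT4)
models = {
    "Intel/Llama-2-7b-hf-onnx-int4": (26, 11),
    "Intel/Llama-2-13b-hf-onnx-int4": (49, 21),
    "Intel/Llama-2-70b-hf-onnx-int4": (257, 41),
}

for name, (fp32_gb, int4_gb) in models.items():
    print(f"{name}: {size_reduction_percent(fp32_gb, int4_gb):.0f}% smaller")
```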

For Llama 2 70B, the compression rate is impressive. However, for the smaller versions, I recommend sticking to GPTQ (unless you need ONNX), which can achieve a much better compression rate. For instance, my version of Llama 2 7B quantized with GPTQ only weighs 3.9 GB:

That’s all for this week.

If you like reading The Kaitchup, consider sharing it with friends and coworkers:

Have a nice weekend!