Hi Everyone,

In this edition of The Weekly Kaitchup:

GRPO in TRL

Smaller SmolVLM

Coconut: “Thinking” without Wasting Tokens

GRPO in TRL

TRL is my go-to library to easily post-train LLMs. It implements all the state-of-the-art methods for post-training: supervised fine-tuning (SFT), DPO, SimPO, and ORPO, among many others.

It now also implements GRPO, the reinforcement learning method behind the success of DeepSeek-R1 and Qwen2.5.

In an article next week, we will see in detail how it works and check the cost of GRPO. I can already tell you: GRPO is extremely costly!

Meanwhile, here is a code snippet published by Hugging Face:

```python
from datasets import load_dataset
from peft import LoraConfig
from trl import GRPOConfig, GRPOTrainer

# Load the dataset
dataset = load_dataset("trl-lib/tldr", split="train")

# Training hyperparameters for GRPO
training_args = GRPOConfig(
    output_dir="Qwen2-0.5B-GRPO",
    learning_rate=1e-5,
    logging_steps=10,
    gradient_accumulation_steps=16,
    max_completion_length=128,
)

# Policy model, reward model, and LoRA adapter configuration
trainer = GRPOTrainer(
    model="Qwen/Qwen2-0.5B-Instruct",
    # Note: later TRL releases appear to take this argument as `reward_funcs`
    reward_model="weqweasdas/RM-Gemma-2B",
    args=training_args,
    train_dataset=dataset,
    peft_config=LoraConfig(task_type="CAUSAL_LM"),
)

trainer.train()
```

To use it, install TRL from source.

Also check the documentation. It is very well written and includes a good explanation of GRPO.
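For some intuition on the "group relative" part of GRPO: for each prompt, several completions are sampled and scored, and each completion's advantage is its reward normalized against the other completions of the same group. This also hints at why GRPO is costly, since every training prompt requires generating a whole group of completions. The snippet below is only a minimal sketch of that normalization step, not TRL's implementation; the function name, the `eps` value, and the use of the population standard deviation are my assumptions.

```python
from statistics import mean, pstdev


def group_relative_advantages(rewards, eps=1e-4):
    """Normalize the rewards of one group of completions (same prompt).

    Hypothetical helper for illustration only: `eps` and the choice of
    population standard deviation are assumptions, not TRL's exact code.
    """
    mu = mean(rewards)
    sigma = pstdev(rewards)
    # Completions better than the group average get a positive advantage,
    # worse ones a negative advantage.
    return [(r - mu) / (sigma + eps) for r in rewards]


# Rewards given by a reward model to 4 completions of the same prompt
advantages = group_relative_advantages([1.0, 2.0, 3.0, 6.0])
print(advantages)
```

If all completions in a group receive the same reward, every advantage is zero and that group contributes no learning signal.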

Smaller SmolVLM

Two new small-scale models have joined the SmolVLM lineup, with a 256M parameter version and a 500M parameter version that preserve respectable multimodal capabilities at a fraction of the size of earlier releases.

Hugging Face Collection: SmolVLM 256M & 500M

Both rely on a smaller SigLIP base patch-16/512 vision encoder instead of the heavier SigLIP 400M SO encoder, allowing them to handle images at higher resolution while reducing overall size. A few changes in tokenization methods, such as pixel shuffling and special sub-image tokens, help reduce the number of tokens when describing visual content.
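To see why pixel shuffling reduces the number of visual tokens, here is a minimal pure-Python sketch of the idea (a space-to-depth rearrangement): neighboring patch embeddings are concatenated into a single, wider token. The function names and the shuffle ratio `r=4` are assumptions for illustration; only the 512-pixel image size and the 16-pixel patch size come from the encoder name above.

```python
def pixel_shuffle(grid, r):
    """Merge each r x r block of patch embeddings into one longer embedding.

    `grid` is a list of rows, each row a list of embedding vectors (lists).
    Illustrative sketch only, not the SmolVLM implementation.
    """
    h, w = len(grid), len(grid[0])
    if h % r or w % r:
        raise ValueError("grid dimensions must be divisible by r")
    out = []
    for i in range(0, h, r):
        row = []
        for j in range(0, w, r):
            merged = []
            for di in range(r):
                for dj in range(r):
                    merged.extend(grid[i + di][j + dj])
            row.append(merged)
        out.append(row)
    return out


def num_visual_tokens(image_size=512, patch_size=16, r=4):
    """Token count after shuffling; r=4 is an assumed ratio."""
    patches_per_side = image_size // patch_size
    return (patches_per_side // r) ** 2


# A 512x512 image with 16x16 patches gives 32x32 = 1024 patch embeddings.
grid = [[[0.0] for _ in range(32)] for _ in range(32)]
shuffled = pixel_shuffle(grid, 4)
print(len(shuffled) * len(shuffled[0]))  # 64 tokens instead of 1024
print(len(shuffled[0][0]))               # each token is 16x wider
```

The trade-off is that each remaining token carries a wider embedding, so the information is compressed into fewer positions rather than discarded.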

Data mixes have also been revamped, placing greater focus on document understanding and image captioning while still covering visual reasoning, charts, and general instructions.

Integration is straightforward with support for popular libraries like transformers and MLX, and you can also take advantage of ONNX-compatible checkpoints for wider compatibility, including WebGPU demos.

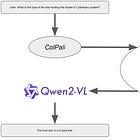

On top of this, a new “ColSmolVLM” has been released. You can use it to build a multimodal RAG system:

Coconut: “Thinking” without Wasting Tokens

Last month, Meta published their Coconut paper:

Training Large Language Models to Reason in a Continuous Latent Space

This is what I wrote about it in The Salt:

Coconut modifies the reasoning process by using the model's hidden states as continuous inputs for subsequent steps, bypassing the need for explicit language generation. This approach allows for efficient reasoning, where multiple potential solutions are encoded simultaneously, enabling the model to explore and refine reasoning paths in a manner similar to breadth-first search.

Continuous thoughts improve decision-making by progressively narrowing down options, even without explicit training for this capability.

In other words, while reasoning LLMs like QwQ and DeepSeek-R1 may need to generate thousands of tokens to “think”, LLMs trained with Coconut don’t need to generate any tokens for the thinking step. They are much more efficient.
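The core loop can be illustrated with a toy sketch: instead of decoding a token at each reasoning step, the last hidden state is appended directly to the input sequence as the next "embedding". Everything here is hypothetical (a three-token vocabulary, a tiny fixed weight matrix standing in for the LLM, and all function names); it only shows the control flow, not Meta's implementation.

```python
import math

# Hypothetical toy setup: 3-token vocabulary with 2-dimensional embeddings.
EMBED = {"a": [1.0, 0.0], "b": [0.0, 1.0], "c": [0.7, 0.7]}
W = [[0.5, -0.3], [0.2, 0.8]]  # arbitrary fixed weights standing in for the LLM


def toy_model(inputs_embeds):
    """Return a 'last hidden state' for a sequence of input embeddings."""
    dim = len(inputs_embeds[0])
    # Average the sequence: a crude stand-in for attention over the context.
    pooled = [sum(v[d] for v in inputs_embeds) / len(inputs_embeds)
              for d in range(dim)]
    return [math.tanh(sum(W[i][d] * pooled[d] for d in range(dim)))
            for i in range(dim)]


def decode(hidden):
    """Map a hidden state to the best-matching token (dot-product score)."""
    return max(EMBED, key=lambda t: sum(h * e for h, e in zip(hidden, EMBED[t])))


def reason_in_latent_space(prompt_tokens, n_thoughts):
    embeds = [EMBED[t] for t in prompt_tokens]
    for _ in range(n_thoughts):
        hidden = toy_model(embeds)
        # Continuous thought: feed the hidden state back as the next input.
        # No token is decoded during this phase.
        embeds.append(hidden)
    # Only the final answer is decoded into a token.
    answer = decode(toy_model(embeds))
    return answer, embeds


answer, embeds = reason_in_latent_space(["a", "b"], n_thoughts=3)
print(answer, len(embeds))
```

In a token-based chain of thought, each of those intermediate steps would instead require a decode and a re-embed, which is where the thousands of generated "thinking" tokens come from.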

Meta released their implementation last week:

GitHub: facebookresearch/coconut (MIT license)

The current code only demonstrates training configurations for GPT-2, and it’s unclear how much effort would be needed to adapt it for newer models. Still, the Coconut approach shows promise for training more efficient LLMs, and it will be interesting to see whether it gains wider adoption as the field moves forward.

GPU Selection of the Week:

To get the prices of GPUs, I use Amazon.com. If the price of a GPU drops on Amazon, there is a high chance that it will also be lower at your favorite GPU provider. All the links in this section are Amazon affiliate links.

Following NVIDIA's announcement of the RTX 50 series, the RTX 4090/4080/4070 cards have all become unaffordable. Since no RTX 5060 was announced, the RTX 4060 remains at the same price.

RTX 4090 (24 GB): ASUS ROG Strix GeForce RTX™ 4090 BTF

RTX 4080 SUPER (16 GB): Inno3D GeForce RTX 4080 Super iChill Black

RTX 4070 Ti SUPER (16 GB): Zotac Gaming GEFORCE RTX 4070 Ti Super Solid OC

RTX 4060 Ti (16 GB): Zotac Gaming GeForce RTX 4060Ti AMP 16Go

The Salt

The Salt is my other newsletter that takes a more scientific approach. In The Salt, I primarily feature short reviews of recent papers (for free), detailed analyses of noteworthy publications, and articles centered on LLM evaluation.

This week in The Weekly Salt, I reviewed:

⭐Tensor Product Attention Is All You Need

Evaluating Sample Utility for Data Selection by Mimicking Model Weights

Towards Best Practices for Open Datasets for LLM Training

Support The Kaitchup by becoming a Pro subscriber:

What You'll Get

Priority Support – Fast, dedicated assistance whenever you need it to fine-tune or optimize your LLM/VLM. I answer all your questions!

Lifetime Access to All the AI Toolboxes – Repositories containing Jupyter notebooks optimized for LLMs and providing implementation examples of AI applications.

Full Access to The Salt – Dive deeper into exclusive research content. Already a paid subscriber to The Salt? You’ll be refunded for the unused time!

Early Access to Research – Be the first to access groundbreaking studies and models by The Kaitchup.

30% Discount for Group Subscriptions – Perfect for teams and collaborators.

The Kaitchup’s Book – A comprehensive guide to LLM fine-tuning. Already bought it? You’ll be fully refunded!

All Benefits from Regular Kaitchup Subscriptions – Everything you already love, plus more. Already a paid subscriber? You’ll be refunded for the unused time!

That’s all for this week.

If you like reading The Kaitchup, consider sharing it with friends and coworkers. There is a 20% discount for group subscriptions (30% for Pro subscribers):

Have a nice weekend!