The Weekly Kaitchup #63

Fixed Gradient Accumulation - New LLMs by NVIDIA, Zyphra, and Mistral AI

Hi Everyone,

In this edition of The Weekly Kaitchup:

Gradient Accumulation Fixed by Unsloth and Hugging Face

New LLMs: Llama 3.1 Nemotron 70B Instruct, Zamba2 7B, and Ministral 8B Instruct

The first chapter of The Kaitchup’s book is out. If you purchased the book, you should have received it by email.

You can still purchase the book with a 30% discount here:

You can also get the book for free by subscribing to The Kaitchup Pro:

Gradient Accumulation Fixed by Unsloth and Hugging Face

I share my research online for moments like these.

Last week, I posted on X and Reddit some surprising results showing that gradient accumulation can significantly impair the training of language models. Initially, I suspected a logging glitch within the TRL framework, but it turned out to be a much deeper issue.

Daniel Han, one of the main authors of Unsloth, noticed my post on Reddit and managed to replicate the results with Unsloth. He spent the following days diagnosing and addressing the problem. His findings are well-explained in this blog post:

Bugs in LLM Training - Gradient Accumulation Fix

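The core issue lies in how the cross-entropy loss is normalized during gradient accumulation. The loss of each micro-batch is averaged over that micro-batch’s tokens, and these averages are then averaged again across the accumulation steps. When micro-batches contain different numbers of tokens, the result does not match the loss of the equivalent full batch. Nearly all language models were affected. Note: This is not a PyTorch bug but rather a misuse of the loss for gradient accumulation. It’s unclear whether other deep learning frameworks, e.g., TensorFlow, are also affected.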
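A minimal plain-Python sketch makes the discrepancy concrete. The per-token loss values below are made up for illustration (they do not come from a real training run): averaging the mean loss of each micro-batch gives a different number than averaging over all tokens, which is what a single large batch would compute.

```python
# Hypothetical per-token cross-entropy losses for two micro-batches
# (illustrative values, not taken from a real training run).
micro_batches = [
    [2.0, 2.0],                                  # short micro-batch: 2 tokens
    [1.0, 1.0, 1.0, 1.0, 1.0, 1.0, 1.0, 1.0],    # long micro-batch: 8 tokens
]

def mean(values):
    return sum(values) / len(values)

# Naive gradient accumulation: average the per-micro-batch mean losses.
naive_loss = mean([mean(mb) for mb in micro_batches])

# What one large batch would compute: the mean over all tokens.
all_tokens = [loss for mb in micro_batches for loss in mb]
full_batch_loss = mean(all_tokens)

print(f"naive: {naive_loss:.2f}, full batch: {full_batch_loss:.2f}")
# naive: 1.50, full batch: 1.20
```

In the naive version, the 2 tokens of the short micro-batch carry as much weight as the 8 tokens of the long one, so they are over-weighted in the loss and, therefore, in the gradients.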

Even models trained without explicit gradient accumulation but on multiple devices were impacted, since the gradients computed on each device are averaged across devices before the weights are updated, which has the same effect.

This suggests we may have been training suboptimal models for some time.

Unsloth fixed its Trainer first, and then Hugging Face quickly addressed the problem as described here:

If you want to use the fixed gradient accumulation, install Transformers from source. The corrected version should also be available via pip very soon.
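The fix amounts to normalizing by the total number of tokens in the whole accumulation step rather than per micro-batch. The sketch below checks this on a toy one-parameter model with hand-written gradients in plain Python. The model, data, and variable names are hypothetical, and this is not the actual Transformers or Unsloth code.

```python
# Toy model: a single weight w, with per-token loss (w * x - y) ** 2.
# Data and micro-batch sizes are hypothetical, chosen only to illustrate.
w = 0.5
micro_batches = [
    [(1.0, 2.0)],                          # 1 token
    [(2.0, 1.0), (3.0, 1.0), (1.0, 0.0)],  # 3 tokens
]

def token_grad(w, x, y):
    # Derivative of (w * x - y) ** 2 with respect to w
    return 2.0 * x * (w * x - y)

# Reference: gradient of the mean loss over all tokens in one big batch.
all_pairs = [pair for mb in micro_batches for pair in mb]
full_grad = sum(token_grad(w, x, y) for x, y in all_pairs) / len(all_pairs)

# Naive accumulation: each micro-batch is normalized by its own token count,
# then the accumulated gradient is divided by the number of steps.
naive_grad = 0.0
for mb in micro_batches:
    naive_grad += sum(token_grad(w, x, y) for x, y in mb) / len(mb)
naive_grad /= len(micro_batches)

# Fixed accumulation: every micro-batch is normalized by the total token
# count of the whole accumulation step, computed before the backward passes.
total_tokens = sum(len(mb) for mb in micro_batches)
fixed_grad = 0.0
for mb in micro_batches:
    fixed_grad += sum(token_grad(w, x, y) for x, y in mb) / total_tokens

print(f"full: {full_grad:.4f}, naive: {naive_grad:.4f}, fixed: {fixed_grad:.4f}")
# full: 0.2500, naive: -0.8333, fixed: 0.2500
```

In this toy case, the naive accumulated gradient even has the wrong sign, while the fixed version matches the full-batch gradient exactly.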

What is still unclear to me is the real impact of this issue. While it increases the training loss, it’s possible the effect is mitigated over long training runs or doesn’t significantly impact downstream tasks. However, it could also mean that future models will learn faster. I think we will find out very soon.

Next week, I plan to publish an article that explains this issue in plain English, with examples, and the impact of the corrected gradient accumulation.

Update

The article is here

New LLMs: Llama 3.1 Nemotron 70B Instruct, Zamba2 7B, and Ministral 8B Instruct

This week was particularly rich in new LLM releases.

Llama 3.1 Nemotron 70B Instruct

NVIDIA published Llama 3.1 Nemotron 70B Instruct:

nvidia/Llama-3.1-Nemotron-70B-Instruct-HF (Llama 3.1 license)

This model was trained with Reinforcement Learning from Human Feedback (RLHF), starting from the original Llama 3.1 70B Instruct and using Llama 3.1 Nemotron 70B Reward as the reward model, with HelpSteer2-Preference prompts. All these resources were published by NVIDIA under a permissive license.

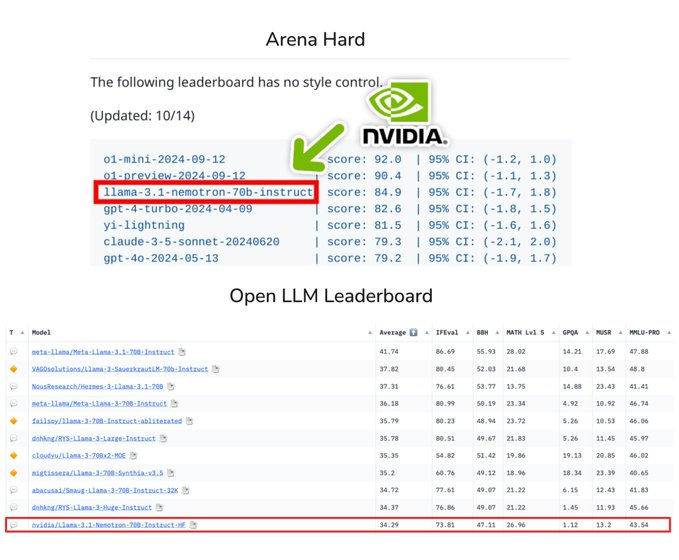

The model seems to achieve top scores on Arena Hard, AlpacaEval, and MT-Bench, i.e., benchmarks for chat models, very close to GPT-4o and Claude 3.5 Sonnet. However, third-party evaluations show that on standard benchmarks such as those used by the Open LLM Leaderboard (MMLU-Pro, GPQA, etc.), this NVIDIA model significantly underperforms the original Llama 3.1 70B Instruct.

I think evaluating an instruct model on Arena Hard, AlpacaEval, and MT-Bench is legitimate. However, it would have been much clearer if NVIDIA had simultaneously published results on standard benchmarks and communicated them appropriately. This lack of clarity has left people uncertain about the model's true performance.

Zamba2 7B

Zyphra has added a new 7B model to the Zamba2 family.

Zyphra/Zamba2-7B (Apache 2.0 license)

Zamba2 is a hybrid language model combining state-space (Mamba) and transformer blocks, very similar to Jamba.

Its architecture consists of a Mamba backbone interleaved with shared transformer layers.
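To illustrate what "shared" means here, the sketch below builds a hypothetical layer schedule in plain Python: a stack of Mamba blocks with one block from a small pool of transformer blocks inserted every few layers, where the same block object (and thus the same weights) is reused at each insertion. The block counts, the insertion interval, and the class names are placeholders chosen for illustration, not Zamba2-7B's actual configuration.

```python
from dataclasses import dataclass

@dataclass(eq=False)  # eq=False keeps identity-based equality and hashing
class MambaBlock:
    index: int  # each Mamba block owns its own parameters

@dataclass(eq=False)
class SharedTransformerBlock:
    name: str  # one parameter set, reused at every position where it appears

def build_schedule(n_mamba=12, every=3, n_shared=2):
    """Interleave Mamba blocks with a small pool of shared transformer blocks.

    All hyperparameters are illustrative placeholders, not Zamba2-7B's config.
    """
    shared = [SharedTransformerBlock(f"shared_{i}") for i in range(n_shared)]
    layers, uses = [], 0
    for i in range(n_mamba):
        if i > 0 and i % every == 0:
            # Insert the next shared block, cycling through the pool.
            # The same object is reused, so its weights are tied across positions.
            layers.append(shared[uses % n_shared])
            uses += 1
        layers.append(MambaBlock(i))
    return layers, shared

layers, shared = build_schedule()
pattern = "".join("M" if isinstance(layer, MambaBlock) else "T" for layer in layers)
unique_blocks = len({id(layer) for layer in layers})
print(pattern, unique_blocks)  # MMMTMMMTMMMTMMM 14
```

In this toy schedule, there are 15 positions but only 14 distinct parameter sets: attention appears at several depths without adding a full set of attention weights at each one.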

Zamba2-7B uses the Mistral v0.1 tokenizer and was pre-trained on 2 trillion tokens of web-sourced text and code data, followed by fine-tuning on 100 billion high-quality tokens.

Zyphra mentioned on the model card that this Hugging Face version of Zamba2-7B is temporary and may have limited compatibility with some Hugging Face tools and frameworks.

Ministral 8B Instruct

Mistral AI released a new 8B model, Ministral 8B. This is an instruct model, and we don’t know whether they will release a base version.

Unfortunately, while the model is probably very good, it can only be used for research purposes. Mistral AI no longer releases most of its models under open licenses.

If you are interested in this model for your research, it’s here:

According to Mistral AI’s evaluation, this model outperforms Llama 3.1 8B, but we don’t know how it compares with the best model in this class, which is currently Qwen2.5 7B.

GPU Cost Tracker

This section keeps track, week after week, of the cost of GPUs. It only covers consumer GPUs, from mid-range, e.g., RTX 4060, to high-end, e.g., RTX 4090.

While consumer GPUs have much less memory than GPUs dedicated to AI, they are by far more cost-effective for inference with small batches and for fine-tuning LLMs with up to ~35B parameters using PEFT methods.
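A back-of-the-envelope calculation shows where a figure like ~35B comes from. The sketch below only counts the memory taken by the model weights at a given precision. It deliberately ignores activations, the KV cache, adapter parameters and their optimizer states, quantization constants, and framework overhead, so real usage will be higher. The function name and the 24 GB budget (an RTX 4090) are my own choices for illustration.

```python
def weight_memory_gb(n_params_billion: float, bits_per_param: float) -> float:
    """Approximate memory for the weights alone, in GB (1 GB = 1e9 bytes)."""
    return n_params_billion * 1e9 * bits_per_param / 8 / 1e9

GPU_MEMORY_GB = 24  # e.g., an RTX 4090

for params in (8, 35, 70):
    for bits in (16, 4):
        gb = weight_memory_gb(params, bits)
        fits = "fit" if gb < GPU_MEMORY_GB else "do not fit"
        print(f"{params}B @ {bits}-bit: {gb:.1f} GB, weights {fits} in {GPU_MEMORY_GB} GB")
```

At 4 bits, the weights of a 35B model take about 17.5 GB, leaving a few GB on a 24 GB card for activations and adapters, which is why QLoRA-style fine-tuning of models around this size is feasible. A 70B model doesn't fit, even at 4 bits.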

To get the prices of GPUs, I use Amazon.com. If the price of a GPU drops on Amazon, there is a high chance that it will also be lower at your favorite GPU provider. All the links in this section are Amazon affiliate links.

GPU Selection of the Week:

RTX 4090 (24 GB): MSI Gaming GeForce RTX 4090

RTX 4080 SUPER (16 GB): GIGABYTE GeForce RTX 4080 Super WINDFORCE V2

RTX 4070 Ti SUPER (16 GB): MSI Gaming RTX 4070 Ti Super 16G AERO

RTX 4060 Ti (16 GB): PNY GeForce RTX™ 4060 Ti 16GB Verto™

The Salt

The Salt is my other newsletter that takes a more scientific approach. In The Salt, I primarily feature short reviews of recent papers (for free), detailed analyses of noteworthy publications, and articles centered on LLM evaluation.

This week, I reviewed:

⭐Differential Transformer

Falcon Mamba: The First Competitive Attention-free 7B Language Model

PrefixQuant: Static Quantization Beats Dynamic through Prefixed Outliers in LLMs

Pixtral 12B

That’s all for this week.

If you like reading The Kaitchup, consider sharing it with friends and coworkers (group subscriptions get a 20% discount, or 30% for Pro subscribers):

Have a nice weekend!