Hi Everyone,

In this edition of The Weekly Kaitchup:

Local-Gemma: Memory-efficient Inference with Gemma 2 by Hugging Face

xLAM: The Best LLMs for Function Calling?

MInference: Super Fast Pre-filling for Long Context LLMs

The Kaitchup will be one year old next week!

To celebrate this first anniversary, there is a 25% discount on the yearly subscription to The Kaitchup for the next two weeks.

If you are a free subscriber, consider upgrading to paid to access all the notebooks (80+) and more than 100 articles.

AI Notebooks and Articles Published this Week by The Kaitchup

Notebook: #83 Florence 2: Run a Vision-language Model on Your Computer

Notebook: #84 Fine-tune Llama 3 with Higher QLoRA Ranks (rsLoRA)

Local-Gemma: Memory-efficient Inference with Gemma 2 by Hugging Face

Hugging Face released Local-gemma, a framework built on top of Transformers and Bitsandbytes to run Gemma 2 locally.

It simplifies setting up a local instance of Gemma 2 and offers three memory presets that trade speed and accuracy for lower memory usage.

This is achieved with two techniques that reduce GPU memory consumption:

4-bit quantization with bitsandbytes

Device map to offload parts of the model to the CPU
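To get a feel for why 4-bit quantization matters for a model of this size, here is a back-of-the-envelope estimate of the memory needed for the weights alone. This is a rough sketch, not a measurement: the parameter count is rounded to 27 billion (an assumption), and the KV cache, activations, and the small overhead of quantization metadata are ignored.

```python
# Rough estimate of weight memory only. Assumptions: ~27e9 parameters,
# no KV cache, no activations, no quantization metadata.

def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Memory needed to store the weights, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9

NUM_PARAMS = 27e9  # nominal size of Gemma 2 27B (rounded, assumption)

bf16_gb = weight_memory_gb(NUM_PARAMS, 16)  # 16-bit weights
int4_gb = weight_memory_gb(NUM_PARAMS, 4)   # 4-bit quantized weights

print(f"16-bit weights: {bf16_gb:.1f} GB")
print(f"4-bit weights:  {int4_gb:.1f} GB")
```

Whatever still doesn't fit on the GPU after quantization is what the device map can place on the CPU, at the cost of slower inference.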

Moreover, local-gemma also presets different “modes” for inference depending on your target tasks: "chat", "factual" or "creative".

There is a CLI, but you might prefer code for more flexibility (code example published by Hugging Face):

```python
from local_gemma import LocalGemma2ForCausalLM
from transformers import AutoTokenizer

model = LocalGemma2ForCausalLM.from_pretrained("google/gemma-2-27b-it", preset="auto")
tokenizer = AutoTokenizer.from_pretrained("google/gemma-2-27b-it")

messages = [
    {"role": "user", "content": "What is your favourite condiment?"},
    {"role": "assistant", "content": "Well, I'm quite partial to a good squeeze of fresh lemon juice. It adds just the right amount of zesty flavour to whatever I'm cooking up in the kitchen!"},
    {"role": "user", "content": "Do you have mayonnaise recipes?"}
]

encodeds = tokenizer.apply_chat_template(messages, return_tensors="pt")
model_inputs = encodeds.to(model.device)

generated_ids = model.generate(model_inputs, max_new_tokens=1000, do_sample=True)
decoded_text = tokenizer.batch_decode(generated_ids)
```

xLAM: The Best LLMs for Function Calling?

Function-calling agents are often limited by the quality of their training datasets.

Existing datasets are typically static and lack thorough verification, leading to potential inaccuracies and inefficiencies when fine-tuning models for real-world applications.

This issue becomes particularly problematic when models trained on specific APIs are suddenly required to handle new domains.

To address these challenges, Salesforce introduced APIGen, an automated pipeline designed to generate verifiable and diverse function-calling datasets. Each data point generated by APIGen undergoes a multi-stage verification that checks its format, its execution, and its semantic accuracy.

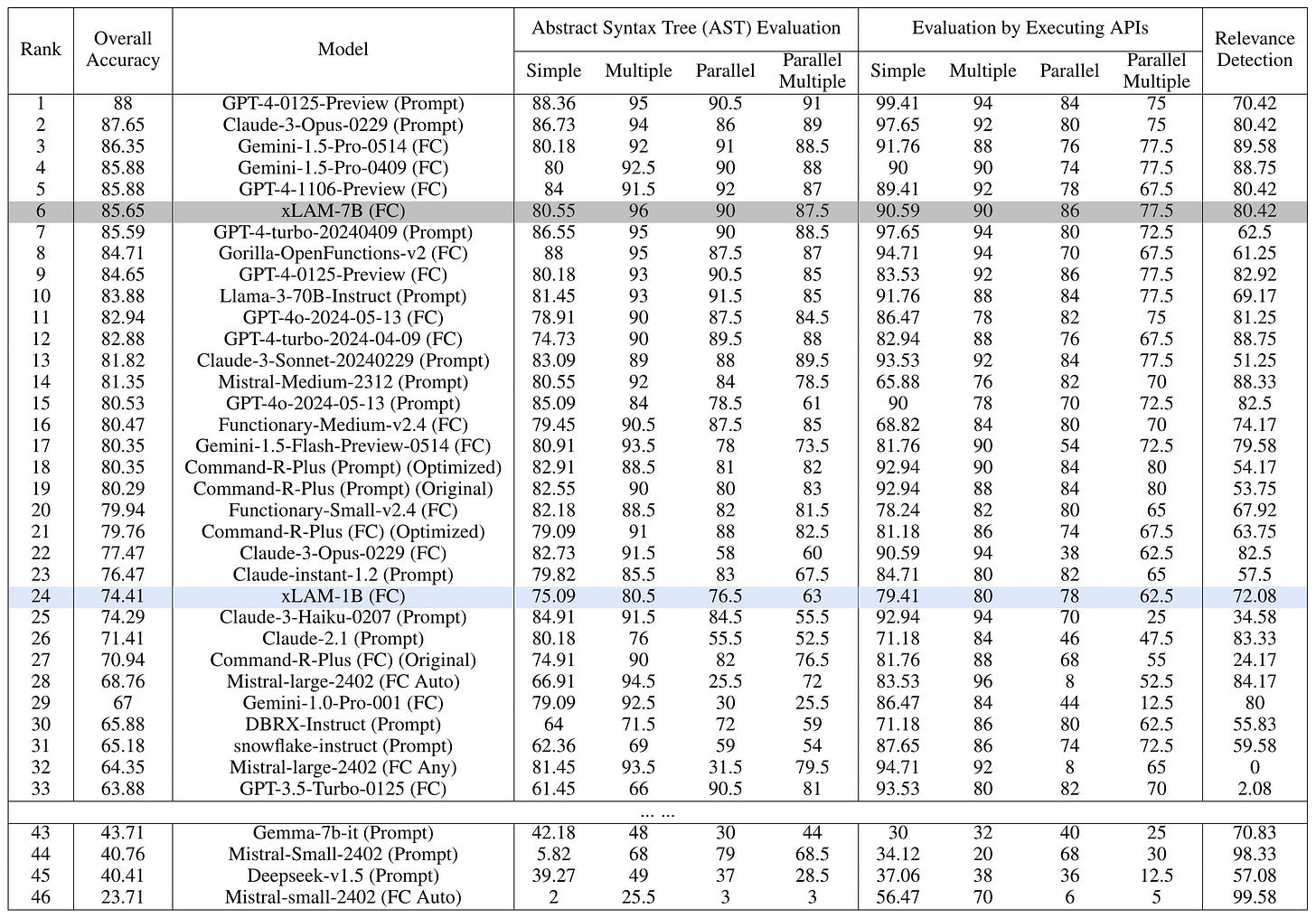
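To make the idea of staged verification concrete, here is a minimal sketch of a format check, an execution check, and a semantic check chained together. It is an illustration of the general idea only, not Salesforce's implementation: the toy `get_weather` API, the `REGISTRY`, the JSON call format, and the `toy_judge` function are all hypothetical.

```python
import json

# Hypothetical toy API standing in for real executable APIs.
def get_weather(city: str) -> str:
    return f"Sunny in {city}"

REGISTRY = {"get_weather": get_weather}

def format_check(raw):
    """Stage 1: the output must be a JSON list of calls to known functions."""
    try:
        calls = json.loads(raw)
    except json.JSONDecodeError:
        return None
    if not isinstance(calls, list) or not calls:
        return None
    for call in calls:
        if not isinstance(call, dict):
            return None
        name = call.get("name")
        if not isinstance(name, str) or name not in REGISTRY:
            return None
        if not isinstance(call.get("arguments"), dict):
            return None
    return calls

def execution_check(calls):
    """Stage 2: every call must actually run without raising."""
    results = []
    for call in calls:
        try:
            results.append(REGISTRY[call["name"]](**call["arguments"]))
        except Exception:
            return None
    return results

def verify(query, raw, judge):
    """Keep a data point only if it passes all three stages."""
    calls = format_check(raw)
    if calls is None:
        return False
    results = execution_check(calls)
    if results is None:
        return False
    # Stage 3: semantic check, delegated to a caller-supplied judge.
    return bool(judge(query, calls, results))

# Hypothetical judge: every argument value must appear in the query.
def toy_judge(query, calls, results):
    return all(str(v) in query for c in calls for v in c["arguments"].values())

query = "What is the weather in Paris?"
good = '[{"name": "get_weather", "arguments": {"city": "Paris"}}]'
bad_exec = '[{"name": "get_weather", "arguments": {"town": "Paris"}}]'
print(verify(query, good, toy_judge))
print(verify(query, bad_exec, toy_judge))
```

In a real pipeline, the semantic stage would need something much stronger than keyword matching. The point here is only that each stage filters out a different kind of bad example.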

Using datasets generated by APIGen, Salesforce trained two LLMs, xLAM-1B and xLAM-7B, which seem to significantly outperform larger models on tasks requiring function calling.

Salesforce didn’t mention whether they will release xLAM (I guess not), but they did release a dataset of 60k entries generated by APIGen.

It is possible to use this dataset to fine-tune your own local LLM for function calling. Mistral 7B v0.3 would be a good target for such fine-tuning.
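As a rough idea of the preprocessing involved, here is a sketch that turns one function-calling record into a prompt/completion pair for supervised fine-tuning. The record is made up, and the field names (`query`, `tools`, `answers`, with the last two stored as JSON strings) and the prompt wording are assumptions; check the actual dataset schema before reusing this.

```python
import json

# Made-up record. The field names are an assumption about the schema.
record = {
    "query": "What is the weather in Paris?",
    "tools": json.dumps([
        {
            "name": "get_weather",
            "description": "Get the current weather for a city",
            "parameters": {"city": {"type": "str"}},
        }
    ]),
    "answers": json.dumps([
        {"name": "get_weather", "arguments": {"city": "Paris"}}
    ]),
}

def to_training_example(rec):
    """Build a prompt listing the available tools and a JSON completion."""
    tools = json.loads(rec["tools"])
    answers = json.loads(rec["answers"])
    prompt = (
        "You can call the following functions:\n"
        f"{json.dumps(tools, indent=2)}\n\n"
        f"User query: {rec['query']}\n"
        "Respond with a JSON list of function calls."
    )
    # The target is the list of calls, serialized back to compact JSON.
    completion = json.dumps(answers)
    return {"prompt": prompt, "completion": completion}

example = to_training_example(record)
print(example["prompt"])
print(example["completion"])
```

For an instruct model, you would typically wrap the prompt and completion with the model's own chat template rather than this plain-text format.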

MInference: Super Fast Pre-filling for Long Context LLMs

Microsoft released MInference, a technique that accelerates pre-filling, i.e., the initial stage in which a long-context LLM processes the prompt, by up to 10 times for 1M-token prompts.

GitHub: microsoft/MInference

MInference leverages the dynamic sparse nature of LLMs' attention, which includes certain static patterns, to accelerate the pre-filling process for long-context LLMs.

First, it determines offline which sparse pattern best matches each attention head. Then, at inference time, it approximates the sparse index online and dynamically computes attention using optimized custom kernels.
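To illustrate what a sparse attention pattern looks like, here is a toy sketch of an "A-shape" mask, one of the patterns described for MInference: each query attends only to the first few tokens and to a local window of recent tokens. This is pure Python for illustration, with hypothetical function names and tiny sizes. It has nothing to do with the optimized kernels that do the real work.

```python
def a_shape_mask(seq_len, num_sink, window):
    """Causal mask keeping the first `num_sink` keys plus the `window` most recent keys."""
    mask = []
    for q in range(seq_len):
        row = []
        for k in range(seq_len):
            causal = k <= q                       # never attend to future tokens
            keep = k < num_sink or q - k < window  # initial tokens or local window
            row.append(causal and keep)
        mask.append(row)
    return mask

def density(mask):
    """Fraction of causal query/key pairs that the sparse mask keeps."""
    kept = sum(sum(row) for row in mask)
    causal_total = len(mask) * (len(mask) + 1) // 2
    return kept / causal_total

mask = a_shape_mask(seq_len=8, num_sink=1, window=2)
for row in mask:
    print("".join("#" if keep else "." for keep in row))
print(f"kept {density(mask):.0%} of the causal attention pairs")
```

With a fixed number of initial tokens and a fixed window, the number of kept pairs grows linearly with the sequence length instead of quadratically, which is where the savings for very long prompts come from.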

It’s already compatible with Hugging Face Transformers and vLLM:

```python
from vllm import LLM, SamplingParams
from minference import MInference

# model_name, prompts, and sampling_params must be defined beforehand
llm = LLM(model_name, max_num_seqs=1, enforce_eager=True, max_model_len=128000)

# Patch MInference Module
minference_patch = MInference("vllm", model_name)
llm = minference_patch(llm)

outputs = llm.generate(prompts, sampling_params)
```

The Salt

The Salt is my other newsletter that takes a more scientific approach. In The Salt, I primarily feature short reviews of recent papers (for free), detailed analyses of noteworthy publications, and articles centered on LLM evaluation.

I reviewed the instruction pre-training method by Microsoft. I especially focused on the instruction synthesizer, which I found performs very well. This is mainly thanks to the significant manual work done by the authors to create many different templates for the generated instructions.

This week in The Salt, I also reviewed:

⭐LiveBench: A Challenging, Contamination-Free LLM Benchmark

Direct Preference Knowledge Distillation for Large Language Models

Towards Fast Multilingual LLM Inference: Speculative Decoding and Specialized Drafters

Unlocking Continual Learning Abilities in Language Models

That’s all for this week.

If you like reading The Kaitchup, consider sharing it with friends and coworkers (there is a discount for group subscriptions).

Have a nice weekend!