Hi Everyone,

In this edition of The Weekly Kaitchup:

Google Gemma 2: Larger and Better

Open LLM Leaderboard V2

MARS5: Small Text-to-speech Models for Accurate Voice Cloning

The Kaitchup has 4,146 subscribers. Thanks a lot for your support!

If you are a free subscriber, consider upgrading to paid to access all the notebooks (80+) and more than 100 articles.

AI Notebooks and Articles Published this Week by The Kaitchup

#81 Easy Fine-tuning with Axolotl -- Example with Llama 3

#82 Smaller LLMs with AutoRound Low-bit Quantization

Google Gemma 2: Larger and Better

Google released Gemma 2, two new large language models with 9B and 27B parameters.

I particularly like the fact that they released a 27B version, a size that is rare in the world of LLMs. I expect the 27B version, once quantized to 4-bit, to occupy only about 15 GB of memory. On a high-end consumer GPU with 24 GB of memory, that still leaves about 9 GB for batch decoding and for processing long sequences of tokens.
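The 15 GB estimate comes from simple arithmetic. The sketch below is a back-of-the-envelope calculation for the weights only, ignoring the KV cache and activations. The 4.5 effective bits per weight is a hypothetical value: it assumes one 16-bit scale is stored for every group of 32 weights. Real overhead depends on the quantization method.

```python
def weight_memory_gb(num_params: float, bits_per_weight: float) -> float:
    """Approximate size of the model weights in GB (1 GB = 10**9 bytes).

    Only the weights are counted; the KV cache and activations need extra memory.
    """
    return num_params * bits_per_weight / 8 / 1e9


GPU_MEMORY_GB = 24  # high-end consumer GPU

# Naive estimate: all 27B parameters stored in exactly 4 bits.
naive = weight_memory_gb(27e9, bits_per_weight=4)

# Hypothetical overhead: one 16-bit scale per group of 32 weights
# adds 16 / 32 = 0.5 bit per weight.
with_scales = weight_memory_gb(27e9, bits_per_weight=4 + 16 / 32)

print(f"naive: {naive:.1f} GB")              # naive: 13.5 GB
print(f"with scales: {with_scales:.1f} GB")  # with scales: 15.2 GB
print(f"left on a {GPU_MEMORY_GB} GB GPU: {GPU_MEMORY_GB - with_scales:.1f} GB")
```

Quantized checkpoints also often keep some layers, such as the embeddings, in 16-bit. Any estimate like this should therefore be read as approximate.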

The main differences from the first version of Gemma are:

Sliding window attention: reduces the computational cost of attention over long sequences.

Logit soft-capping: prevents logits from growing excessively by squashing them into a fixed range, which improves training stability.

Knowledge distillation: a larger teacher model is used to train a smaller model (for the 9B model).

Model merging: combines two or more LLMs into a single new model. Note: I don’t know what they did here, but it sounds interesting.
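Two of these changes are easy to illustrate. The sketch below first builds a causal sliding-window attention mask, where each token attends only to the `window` most recent positions, including itself. It then applies tanh-based logit soft-capping, `cap * tanh(logit / cap)`, which keeps a logit inside (-cap, cap) while leaving small values almost unchanged. The window size and the cap are illustrative values, not Gemma 2's actual hyperparameters. Note also that Gemma 2 does not use a sliding window in every layer: it alternates local sliding-window layers with global attention layers.

```python
import math


def sliding_window_causal_mask(seq_len: int, window: int) -> list[list[bool]]:
    """mask[i][j] is True when query position i may attend to key position j.

    The mask is causal (j <= i) and local: only the `window` most recent
    positions, including i itself, are visible.
    """
    return [
        [(j <= i) and (i - j < window) for j in range(seq_len)]
        for i in range(seq_len)
    ]


def soft_cap(logit: float, cap: float) -> float:
    """Smoothly squash a logit into the open interval (-cap, cap) with tanh."""
    return cap * math.tanh(logit / cap)


# Each row is a query position; "x" marks the key positions it can attend to.
for row in sliding_window_causal_mask(seq_len=6, window=3):
    print("".join("x" if allowed else "." for allowed in row))

print(soft_cap(5.0, cap=30.0))    # small logits are almost unchanged
print(soft_cap(500.0, cap=30.0))  # large logits saturate just below 30
```

With a window of 3, attention cost grows linearly with the sequence length rather than quadratically, because each row has at most three allowed positions.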

Moreover, Gemma 2 was trained on many more tokens than Gemma 1: 13T for the 27B version and 8T for the 9B version.

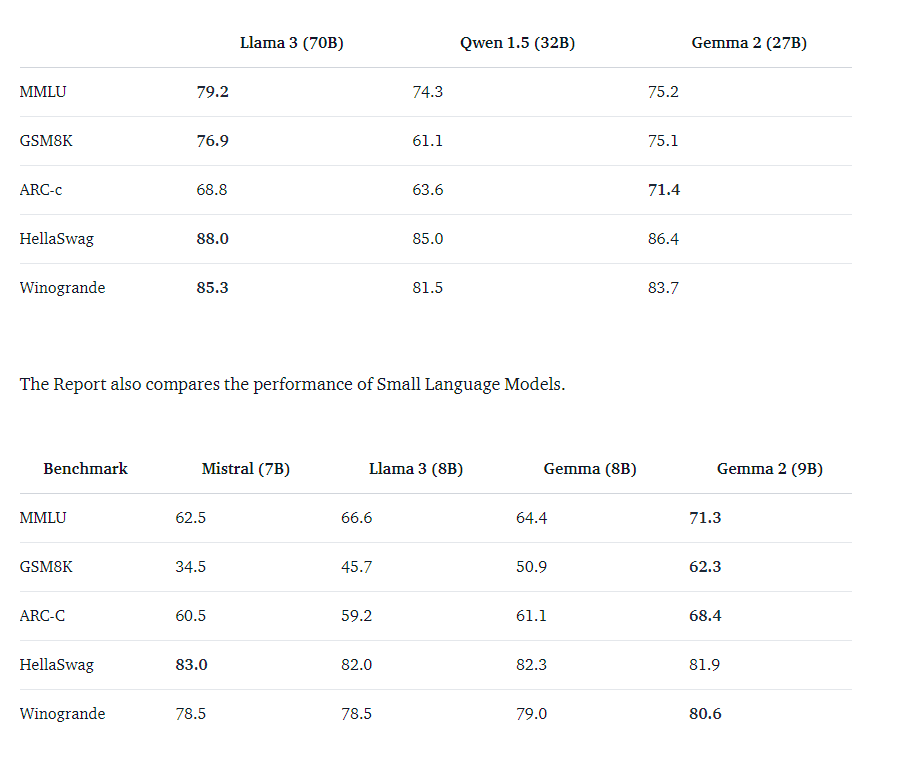

Performance on public benchmarks is good but holds no surprises.

A comparison with Qwen2 is missing. I expect Qwen2 7B to perform significantly better than Gemma 2 9B on these benchmarks while being smaller. Google has submitted Gemma 2 to the new Open LLM Leaderboard, so its results there will be interesting.

You can find Gemma 2 here (the models without “pytorch” in their name are compatible with HF Transformers):

Hopefully, the HF implementation will be less buggy than it was for Gemma 1.

Blog post describing Gemma 2:

Gemma 2 is now available to researchers and developers

Open LLM Leaderboard V2

The Open LLM Leaderboard by Hugging Face was one of the most popular leaderboards for LLMs. Many LLM makers relied on it to compare models and to claim better performance.

However, it became evident that the leaderboard's tasks had become too easy. The evaluation datasets are so popular that they may have leaked into the training data of recent LLMs, or led LLM developers to optimize specifically for these datasets.

Hugging Face refreshed the Open LLM Leaderboard by replacing its evaluation tasks with much more difficult ones:

IFEval: A dataset testing models' ability to follow explicit instructions, focusing on formatting rather than content.

BBH (Big Bench Hard): A subset of 23 challenging tasks from BIG-Bench, such as multistep arithmetic and language understanding, scored with objective metrics. Performance on BBH correlates well with human preferences.

MATH: A compilation of high-school-level competition problems.

GPQA (Graduate-Level Google-Proof Q&A Benchmark): A challenging dataset with questions crafted by PhD-level experts, ensuring difficulty and factual accuracy, with restricted access to minimize data contamination.

MuSR (Multistep Soft Reasoning): A dataset of algorithmically generated complex problems requiring models to integrate reasoning with long-range context parsing.

MMLU-PRO (Massive Multitask Language Understanding - Professional): An improved version of MMLU that is more difficult, less noisy, and of higher quality, with more answer choices per question (10 instead of 4) and expert-reviewed questions.
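To make the IFEval idea concrete: its instructions are "verifiable," meaning compliance can be checked with simple code rather than with a human or an LLM judge. The sketch below uses three hypothetical checkers inspired by IFEval-style instructions (no commas, a minimum word count, JSON output). It computes the fraction of instructions that a response satisfies. These are not the official IFEval implementations, and the real benchmark reports both prompt-level and instruction-level accuracy.

```python
import json
from collections.abc import Callable, Sequence


def no_commas(response: str) -> bool:
    """The response must not contain any comma."""
    return "," not in response


def at_least_n_words(response: str, n: int) -> bool:
    """The response must contain at least n whitespace-separated words."""
    return len(response.split()) >= n


def is_valid_json(response: str) -> bool:
    """The whole response must parse as JSON."""
    try:
        json.loads(response)
    except json.JSONDecodeError:
        return False
    return True


def instruction_accuracy(response: str, checks: Sequence[Callable[[str], bool]]) -> float:
    """Fraction of the attached instructions that the response satisfies."""
    results = [check(response) for check in checks]
    return sum(results) / len(results)


response = '{"summary": "Gemma 2 comes in 9B and 27B sizes"}'
checks = [no_commas, lambda r: at_least_n_words(r, 20), is_valid_json]
print(instruction_accuracy(response, checks))  # 2 of 3 instructions followed
```

Because only the format is checked, a response can score perfectly here while being factually wrong. This is the trade-off behind IFEval's focus on formatting rather than content.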

For now, Qwen2 72B is ranked first on this new leaderboard, largely outperforming all the other models, including Llama 3, on all these tasks except IFEval.

Behind the scenes, the leaderboard still uses EleutherAI's Evaluation Harness, so you can reproduce the same results on your own computer.
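If you want to try reproducing the results yourself, the sketch below assembles an `lm_eval` command line. It assumes a recent release of the Evaluation Harness that ships a `leaderboard` task group, and it uses `google/gemma-2-9b` only as an example model ID. `build_lm_eval_command` and `run_evaluation` are hypothetical helper names. Check the harness documentation for the exact settings the leaderboard uses, such as few-shot counts and chat templates.

```python
import shlex
import subprocess


def build_lm_eval_command(model_id: str, output_dir: str = "results") -> list[str]:
    """Assemble the argument list for the Evaluation Harness `lm_eval` CLI."""
    return [
        "lm_eval",
        "--model", "hf",  # Hugging Face Transformers backend
        "--model_args", f"pretrained={model_id},dtype=bfloat16",
        "--tasks", "leaderboard",  # assumed group covering the leaderboard benchmarks
        "--batch_size", "auto",
        "--output_path", output_dir,
    ]


def run_evaluation(model_id: str) -> None:
    """Launch the evaluation (requires lm-eval installed and a suitable GPU)."""
    subprocess.run(build_lm_eval_command(model_id), check=True)


if __name__ == "__main__":
    # Only print the command; call run_evaluation(...) to actually start it.
    print(shlex.join(build_lm_eval_command("google/gemma-2-9b")))
```

Building the argument list as a Python list avoids shell-quoting issues when the model ID or output path contains unusual characters.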

MARS5: Small Text-to-speech Models for Accurate Voice Cloning

The authors of these models, CAMB.AI, describe them as follows on their GitHub page:

With just 5 seconds of audio and a snippet of text, MARS5 can generate speech even for prosodically hard and diverse scenarios like sports commentary, anime and more.

This sounds like a significant breakthrough, as text-to-speech (TTS) models usually require a lot of data, are much larger, or are not open.

MARS5 models are available on the Hugging Face Hub:

CAMB-AI/MARS5-TTS (AGPL-3.0 license)

The HF Hub repository also includes a notebook for testing the models.

Their neural architecture is quite complex, with both autoregressive and non-autoregressive components. The autoregressive component is very similar to Mistral's architecture but much smaller.

The Salt

The Salt is my other newsletter that takes a more scientific approach. In The Salt, I primarily feature short reviews of recent papers (for free), detailed analyses of noteworthy publications, and articles centered on LLM evaluation.

This week in The Salt, I reviewed:

Tokenization Falling Short: The Curse of Tokenization

Mixture of Scales: Memory-Efficient Token-Adaptive Binarization for Large Language Models

⭐Instruction Pre-Training: Language Models are Supervised Multitask Learners

A Tale of Trust and Accuracy: Base vs. Instruct LLMs in RAG Systems

That’s all for this week.

If you like reading The Kaitchup, consider sharing it with friends and coworkers (there is a discount for group subscriptions).

Have a nice weekend!
