Hi Everyone,

In The Weekly Kaitchup, I briefly comment on recent scientific papers, new frameworks, tips, open models/datasets, etc. for affordable and computationally efficient AI.

In this edition, we will see:

How to properly merge your adapters trained with QLoRA

A huge new high-quality dataset containing 3 trillion tokens

A method that creates a model from a prompt (!)

Why bitsandbytes quantized models are slower than GPTQ models at inference time

If my experiments go well, expect to receive my first article using Microsoft’s DeepSpeed Chat to train instruct models on Monday.

The Kaitchup now has 399 subscribers. Thanks a lot for your support!

Merging QLoRA adapters with care

QLoRA fine-tunes and saves LoRA adapters. Then, you can just load the adapter on top of the base model whenever you need it, or merge it into the base model.

However, merging adapters trained with QLoRA is not as straightforward as it seems. If you merge the adapter directly into the base model, you may observe a significant drop in performance compared to what you observed during fine-tuning.

Where does this drop in performance come from?

QLoRA fine-tunes adapters on top of a quantized model that is dequantized on the fly to a higher precision during fine-tuning. The adapter’s parameters are therefore optimized for the dequantized model. Because quantization is lossy, the dequantized weights are not identical to the weights of the original base model. If you merge the adapter into the original base model, it still works, but the adapter was not fine-tuned for this model, hence the performance drop.

To avoid a performance drop, merging should be done as follows:

Quantize your model as you would for QLoRA fine-tuning.

Dequantize (or “convert”) the model to the same compute_dtype precision that was used during fine-tuning, typically bfloat16 or float16.

Merge the adapter into this dequantized model.

Serialize the merged model.

It works better because the merge is done on the same dequantized model used during fine-tuning.
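To make the reasoning above concrete, here is a toy sketch in pure Python. It is not the code linked below and it does not use bitsandbytes or PEFT: `fake_quantize` is a hypothetical stand-in (simple absmax rounding to 4 bits) for NF4 quantization, the matrices are tiny and random, and the LoRA scaling factor is left out for brevity. It only illustrates that the adapter update reproduces the fine-tuning-time outputs when added to the dequantized weights, and not when added to the original weights.

```python
import random

random.seed(0)

def fake_quantize(row, bits=4):
    """Toy absmax quantize-then-dequantize of one weight row.
    Hypothetical stand-in for NF4; NOT the bitsandbytes algorithm."""
    levels = 2 ** (bits - 1) - 1
    scale = max(abs(v) for v in row) / levels
    return [round(v / scale) * scale for v in row]

def matvec(M, x):
    return [sum(m * v for m, v in zip(row, x)) for row in M]

def matmul(P, Q):
    cols = list(zip(*Q))
    return [[sum(p * q for p, q in zip(row, col)) for col in cols] for row in P]

def add(P, Q):
    return [[p + q for p, q in zip(rp, rq)] for rp, rq in zip(P, Q)]

def dist(u, v):
    return sum((a - b) ** 2 for a, b in zip(u, v)) ** 0.5

d, r = 16, 2  # hidden size and LoRA rank (arbitrary toy values)

# Original full-precision base weight and its dequantized counterpart.
W = [[random.gauss(0, 1) for _ in range(d)] for _ in range(d)]
W_deq = [fake_quantize(row) for row in W]

# LoRA matrices: A is r x d, B is d x r (scaling factor omitted).
A = [[random.gauss(0, 0.1) for _ in range(d)] for _ in range(r)]
B = [[random.gauss(0, 0.1) for _ in range(r)] for _ in range(d)]

x = [random.gauss(0, 1) for _ in range(d)]

# What the model computed during fine-tuning: dequantized base + adapter.
y_train = [a + b for a, b in zip(matvec(W_deq, x), matvec(B, matvec(A, x)))]

delta = matmul(B, A)                     # adapter update, d x d
y_naive = matvec(add(W, delta), x)       # merged into the original weights
y_careful = matvec(add(W_deq, delta), x) # merged into the dequantized weights

err_naive = dist(y_naive, y_train)
err_careful = dist(y_careful, y_train)

print(f"naive merge error:   {err_naive:.6f}")
print(f"careful merge error: {err_careful:.2e}")
```

In this toy setting, the careful merge matches the fine-tuning-time output up to floating-point rounding, while the naive merge is off by exactly the quantization error of the base weights. How much this gap hurts a real model depends on the model and the task.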

To implement these steps, use this code by eugene-yh, jinyongyoo, and ChrisHayduk.

Next week, I’ll publish a notebook that implements, tests, and comments on each step.

AI2 Dolma: 3 Trillion Token Open Corpus

The Allen Institute for AI released Dolma:

a dataset of 3 trillion tokens from a diverse mix of web content, academic publications, code, books, and encyclopedic materials.

It’s already available on the HF Hub, but you need a Hugging Face account to download it. It’s distributed under the ImpACT License for medium-risk artifacts (allowing commercial use).

The Allen Institute claims that it is the biggest open dataset.

Datasets of this size are usually used to pre-train LLMs from scratch. If the data is of as high quality as the Allen Institute says, it could be used to pre-train very good open LLMs.

You can find more details on how they created the dataset in this blog post.

Prompt2Model

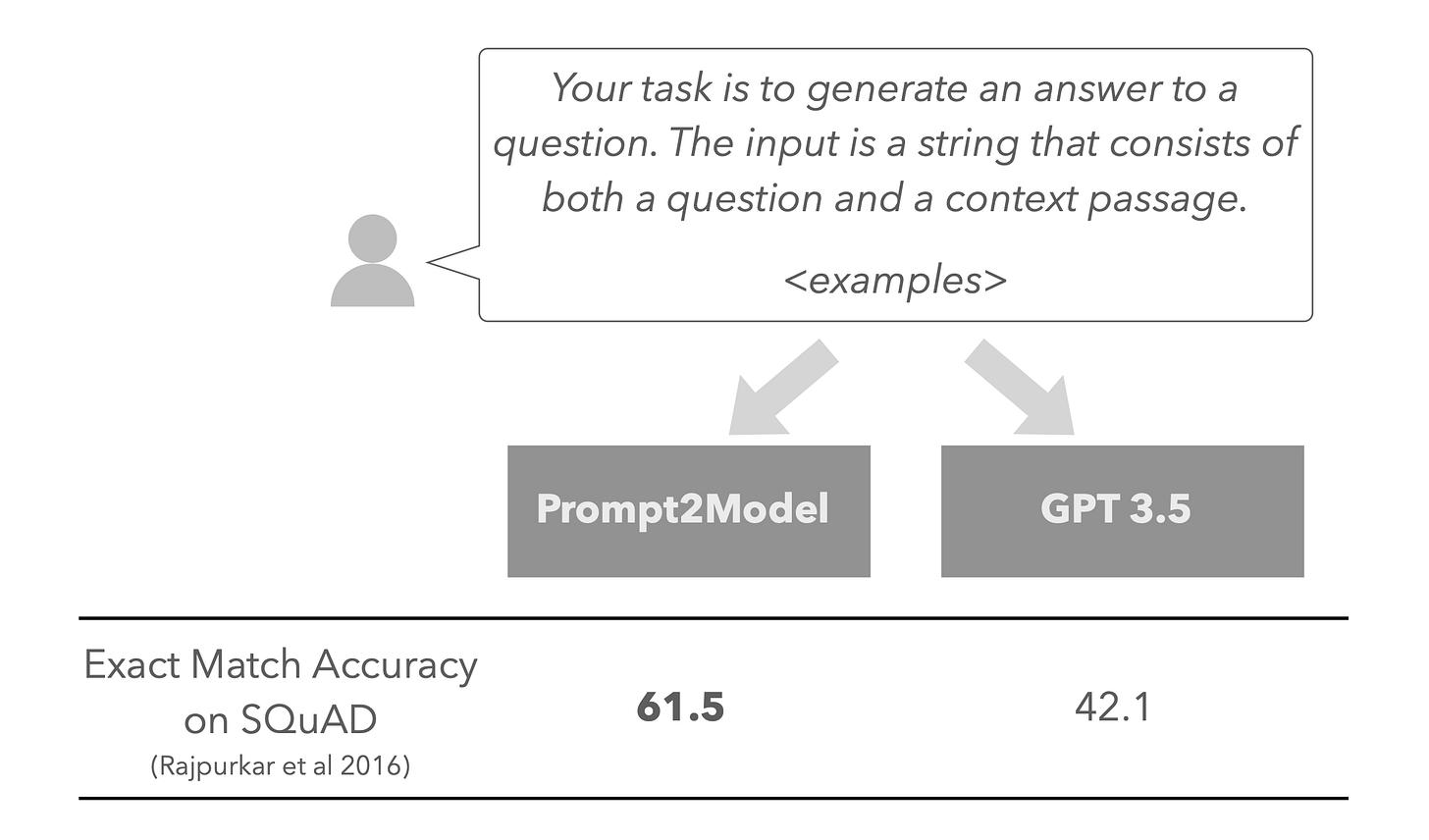

Prompt2Model (Viswanathan et al., 2023) trains small expert models using just a prompt as input. Given your prompt, it automatically retrieves training datasets and a base model. It also generates additional training data.

The chain of components required to achieve this is quite complex, but the authors show that Prompt2Model generates expert models that are very cheap to build and run.

Here is another example:

It looks quite impressive.

This work comes from NeuLab (CMU), so you can expect it to be well maintained and improved in the future.

The implementation of Prompt2Model is on GitHub (Apache 2.0 license). You will need an OpenAI API key to run it. The authors have also published a notebook running Prompt2Model on Google Colab.

Why are bitsandbytes quantized models slower than GPTQ models at inference time?

I have been asked this question several times since I published my comparison between GPTQ and bitsandbytes.

I didn’t have to dive into the code to find the answer. Slow inference is an issue known to the authors of QLoRA, who explain it here:

4-bit inference is slow. Currently, our 4-bit inference implementation is not yet integrated with the 4-bit matrix multiplication

This slow inference speed is probably only temporary. I would also guess that they are working on making nf4 models serializable.

That’s all for this week.

If you like reading The Kaitchup, consider sharing it with friends and coworkers:

Have a nice weekend!