The Weekly Kaitchup #23

Open Phi-2 - Lightning Attention-2 - Mixtral paper - DPO vs. IPO vs. KTO

Hi Everyone,

In this edition of The Weekly Kaitchup:

An MIT license for Phi-2

Lightning Attention-2: The First Linear Implementation for Attention Computation

Mixtral-8x7B: The Technical Report

A Comparison Between KTO, DPO, and IPO

The Kaitchup now has 1,549 subscribers. Thanks a lot for your support!

If you are a free subscriber, consider upgrading to paid to access all the notebooks and articles. There is a 7-day trial that you can cancel anytime.

An MIT license for Phi-2

Microsoft has listened to the community and changed the license of Phi-2 to an MIT license. We can now use Phi-2 for commercial purposes.

Previous versions of Phi remain under a limited license, for research purposes only.

Lightning Attention-2: The First Linear Implementation for Attention Computation

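Linear attention means that the cost of computing attention grows linearly with the sequence length, instead of quadratically as in standard softmax attention. In theory, we can then process sequences of any length without sacrificing speed. However, current linear attention algorithms encounter challenges in causal settings, i.e., when each token can only attend to the previous tokens, as in LLMs.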

To the best of my knowledge, Lightning Attention-2 is the first linear implementation that works for causal LLMs.

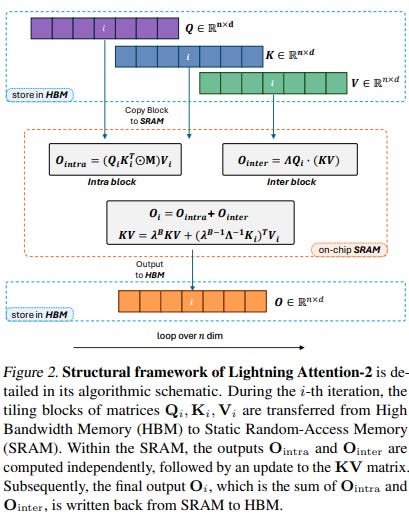

With a tiling approach, it manages intra-block and inter-block components separately, using conventional attention for intra-blocks and linear attention kernel tricks for inter-blocks. Like FlashAttention, Lightning Attention-2 aims at better exploiting the SRAM.
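To make the intra-block/inter-block split more concrete, here is a minimal NumPy sketch of block-wise causal linear attention. It is only an illustration of the idea described above, not the authors' kernel: it leaves out everything GPU-specific (Triton, SRAM management) as well as any decay or normalization terms the paper may use. The function names and the `block_size` parameter are my own, chosen for this example.

```python
import numpy as np

def causal_linear_attention_reference(q, k, v):
    """Quadratic reference: mask the score matrix, then multiply by V."""
    n = q.shape[0]
    scores = q @ k.T                     # (n, n); linear attention has no softmax
    mask = np.tril(np.ones((n, n)))      # causal mask: token i sees tokens <= i
    return (scores * mask) @ v

def causal_linear_attention_tiled(q, k, v, block_size=4):
    """Block-wise version: masked attention inside each block,
    plus a running K^T V state that summarizes all earlier blocks."""
    n, d = q.shape
    kv_state = np.zeros((d, v.shape[1]))  # accumulated K^T V of previous blocks
    out = np.zeros((n, v.shape[1]))
    for start in range(0, n, block_size):
        end = min(start + block_size, n)
        qb, kb, vb = q[start:end], k[start:end], v[start:end]
        # Intra-block: conventional masked attention, restricted to this block
        mask = np.tril(np.ones((end - start, end - start)))
        intra = ((qb @ kb.T) * mask) @ vb
        # Inter-block: kernel trick, Q (K^T V), using the state of earlier blocks
        inter = qb @ kv_state
        out[start:end] = intra + inter
        # Update the state only after the block's output is computed
        kv_state += kb.T @ vb
    return out
```

The quadratic cost is confined to blocks of fixed size, while everything before the current block is folded into `kv_state`, whose size does not depend on the sequence length. Both functions should return the same result up to floating-point error.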

The authors illustrate it as follows:

This is implemented in Triton.

According to the authors, Lightning Attention-2 keeps training and inference speed consistent across input sequence lengths, and it is significantly faster than other attention mechanisms, including FlashAttention-2.

It is also slightly more memory-efficient than FlashAttention-2.

The details and mathematical proofs are published in this paper:

Lightning Attention-2: A Free Lunch for Handling Unlimited Sequence Lengths in Large Language Models

The authors will also release an implementation here (not yet available at the time of writing).

Mixtral-8x7B: The Technical Report

Mistral AI published the technical report describing Mixtral-8x7B:

Unfortunately, it doesn't say much more than what we already know about the training of the model. However, I found the routing analysis (Section 5) interesting.

It shows that the router network exhibits some “structured syntactic behavior”. Consecutive tokens are often assigned to the same experts by the router network. It also exhibits an assignment pattern per domain and layer.
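For readers who want to look at this kind of pattern themselves, here is a small NumPy sketch. It assumes the top-2 routing publicly described for Mixtral (each token goes to the 2 best-scoring experts out of 8, with a softmax over the selected logits). The function names are hypothetical and the router logits are random placeholders, so this only shows how the measurement could be done, not the report's actual analysis code.

```python
import numpy as np

def top2_route(router_logits):
    """For each token, return the indices of the 2 highest-scoring experts
    (best first) and their mixing weights."""
    top2 = np.argsort(router_logits, axis=-1)[:, -2:][:, ::-1]
    top2_logits = np.take_along_axis(router_logits, top2, axis=-1)
    # Softmax over the selected logits only
    shifted = top2_logits - top2_logits.max(axis=-1, keepdims=True)
    weights = np.exp(shifted) / np.exp(shifted).sum(axis=-1, keepdims=True)
    return top2, weights

def consecutive_repeat_rate(first_choice):
    """Fraction of tokens whose top expert is the same as the previous token's."""
    first_choice = np.asarray(first_choice)
    return float(np.mean(first_choice[1:] == first_choice[:-1]))

# Placeholder logits: 16 tokens, 8 experts (random, for illustration only)
rng = np.random.default_rng(0)
experts, weights = top2_route(rng.normal(size=(16, 8)))
print(consecutive_repeat_rate(experts[:, 0]))
```

With random logits, the repeat rate should hover around 1/8 on long sequences. A clearly higher rate on real router outputs would be the kind of consecutive-token pattern described above.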

I explained Mixtral-8x7B in more detail here:

To run it on consumer hardware, we can offload some of the experts with mixtral-offloading:

A Comparison Between KTO, DPO, and IPO

It appears that Hugging Face is currently working on comparing different alignment algorithms.

They have trained teknium/OpenHermes-2.5-Mistral-7B, a Mistral 7B fine-tuned for code generation, on Intel/orca_dpo_pairs using 3 different preference optimization techniques:

Direct Preference Optimization (DPO): The most popular alignment method, used by Hugging Face to train Zephyr.

Identity Preference Optimization (IPO): A method with better theoretical grounding and regularization than DPO.

Kahneman-Tversky Optimization (KTO): A simpler method that removes the need for the training data to contain paired chosen and rejected outputs. I have never written about it on The Kaitchup, but you can find the paper here.

These 3 methods are all supported by TRL.
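To give an idea of how DPO and IPO differ, here is a minimal sketch of the two per-example losses in plain Python, written from the formulas in their respective papers as I understand them. This is not TRL's code. The inputs are the log-ratios log π(y|x) − log π_ref(y|x) for the chosen and the rejected output. The function names and the default `beta=0.1` are assumptions made for this example. KTO is left out because it works on unpaired examples and does not reduce to a single margin like this.

```python
import math

def dpo_loss(chosen_logratio, rejected_logratio, beta=0.1):
    """DPO: -log(sigmoid(beta * margin)). Keeps decreasing as the margin grows."""
    margin = chosen_logratio - rejected_logratio
    return math.log1p(math.exp(-beta * margin))  # equals -log(sigmoid(beta * margin))

def ipo_loss(chosen_logratio, rejected_logratio, beta=0.1):
    """IPO: squared distance between the margin and a fixed target 1 / (2 * beta)."""
    margin = chosen_logratio - rejected_logratio
    return (margin - 1.0 / (2.0 * beta)) ** 2

for margin in (0.0, 5.0, 10.0):
    print(margin, dpo_loss(margin, 0.0), ipo_loss(margin, 0.0))
```

The DPO loss keeps rewarding a larger gap between chosen and rejected outputs, while the IPO loss is minimal when the gap reaches 1 / (2 * beta) and increases again beyond it. This is where IPO's stronger regularization comes from.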

Hugging Face has already released several training checkpoints and plans to write a report (but I don’t know when it will be published):

That’s all for this week.

If you like reading The Kaitchup, consider sharing it with friends and coworkers:

Have a nice weekend!