Hi Everyone,

In this edition of The Weekly Kaitchup:

SOLAR 10.7B: Adding Layers from Mistral 7B to Mistral 7B

OpenChat: Fine-tuning One of the Best Open LLMs without Preference Optimization

Notux 8x7B-v1: DPO on top of DPO

The Kaitchup now has 1,380 subscribers. Thanks a lot for your support!

The yearly subscription to The Kaitchup is 20% off for all new subscribers! It’s 33% cheaper than subscribing monthly. This coupon is available until the 1st of January:

Note: If you are already a monthly paid subscriber, this coupon might not work. If you want to switch to a yearly subscription and get the discount, reply to this email and I’ll generate a new coupon for you.

SOLAR 10.7B: Adding Layers from Mistral 7B to Mistral 7B

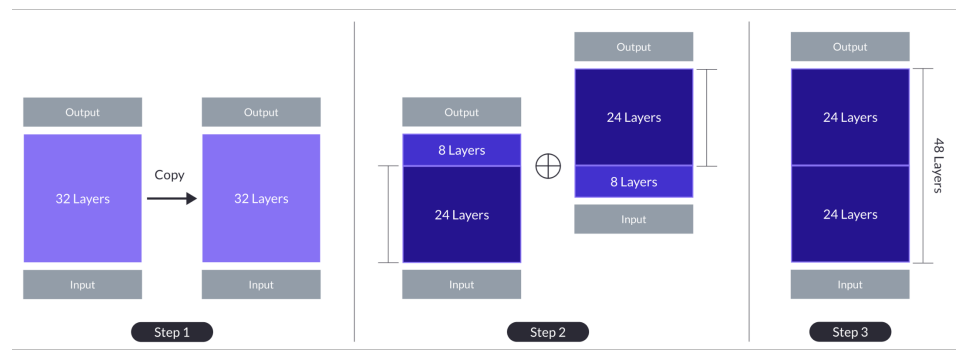

Upstage AI proposes a new and interesting way to increase the depth of an LLM: take an LLM, copy it, remove some layers from both copies, and then combine what remains into a single LLM.

They demonstrate how to do it for an LLM with the Llama 2 architecture and experiment with Mistral 7B (which has the same architecture as Llama 2).

In practice, they remove the top 8 layers from one copy of Mistral 7B, the bottom 8 layers from the other copy, and concatenate the remaining layers. Mistral 7B has 32 layers, so each copy contributes 24 and the resulting model, SOLAR, has 48 layers (10.7B parameters).
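The layer bookkeeping can be sketched in a few lines. This is only an illustration of the arithmetic, not Upstage's code: the function name `depth_upscale` is hypothetical, and placing the first copy's layers below the second copy's is my assumption about how the two halves are stacked.

```python
def depth_upscale(n_layers: int = 32, n_removed: int = 8) -> list[tuple[str, int]]:
    """Return the layer order of the up-scaled model as (copy, original_index) pairs."""
    # Copy A keeps everything except its top (last) n_removed layers.
    copy_a = [("A", i) for i in range(n_layers - n_removed)]
    # Copy B keeps everything except its bottom (first) n_removed layers.
    copy_b = [("B", i) for i in range(n_removed, n_layers)]
    # Assumption: A's remaining layers come first, then B's.
    return copy_a + copy_b


layers = depth_upscale()
print(len(layers))              # 48
print(layers[23], layers[24])   # ('A', 23) ('B', 8)
```

Note the seam in the middle: layer 23 of one copy feeds directly into layer 8 of the other, which is a plausible reason why the merged model needs continued pre-training before it becomes useful.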

Unlike mixture-of-experts models, this approach doesn’t require a gating network. SOLAR is simply a larger dense model, and it still runs on consumer hardware with 4-bit quantization (20 GB of VRAM would be enough).
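As a rough sanity check on the memory claim, here is a back-of-the-envelope estimate of the space taken by the weights alone. It is a sketch under simplifying assumptions: it ignores quantization constants, the KV cache, activations, and framework overhead, and `weight_memory_gb` is a hypothetical helper name.

```python
def weight_memory_gb(n_params: float, bits_per_param: float) -> float:
    """Memory needed for the weights alone, in GB (1 GB = 1e9 bytes)."""
    return n_params * bits_per_param / 8 / 1e9


solar_params = 10.7e9
fp16 = weight_memory_gb(solar_params, 16)
int4 = weight_memory_gb(solar_params, 4)
print(f"fp16: {fp16:.1f} GB, 4-bit: {int4:.2f} GB")
```

The 4-bit weights come to roughly a quarter of the 16-bit footprint, so the remaining VRAM is available for the cache and activations.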

The new base model obtained after the combination is not as good as the original model (Mistral 7B). The authors had to continue pre-training the new model to outperform Mistral 7B.

Then, they fine-tuned it on an instruction dataset and aligned it with DPO.

The final model, SOLAR 10.7B instruct, outperforms most recent LLMs on a selection of benchmarks:

Nonetheless, if you are interested in using the model, I recommend running your own evaluation on your own selection of benchmarks to better assess the impact of the decoding hyperparameters and prompts.

The model is available on the Hugging Face hub:

upstage/SOLAR-10.7B-v1.0 (Apache 2.0 license)

And the technical report is on arXiv:

SOLAR 10.7B: Scaling Large Language Models with Simple yet Effective Depth Up-Scaling

OpenChat: Fine-tuning One of the Best Open LLMs without Preference Optimization

OpenChat is yet another LLM achieving ChatGPT (GPT-3.5) level performance.

This performance can be partly attributed to its base model, Mistral 7B. It is another confirmation that Mistral 7B is currently one of the best base models of this size for fine-tuning chat models.

The originality of OpenChat comes from the novel reinforcement learning technique used to align the model, C-RLFT. In contrast with preference optimization methods, such as RLHF or DPO, used by recent chat models, C-RLFT doesn’t exploit preference labels but instead exploits a label corresponding to the data source.

More precisely, the data is gathered from various sources, such as GPT-4 and GPT-3.5 conversations, and each source is assigned a coarse-grained reward based on its quality (e.g., GPT-4=1.0, GPT-3.5=0.1). A class-conditioned dataset is then constructed by augmenting the data with source class labels, for example by structuring conversations as "User: {QUESTION}GPT4 Assistant: {RESPONSE}". The LLM is then trained with C-RLFT, which optimizes the policy while regularizing it toward a class-conditioned reference policy.
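To make the data preparation concrete, here is a minimal sketch of how such a class-conditioned dataset could be built. It is not OpenChat's code. The reward values and the "GPT4 Assistant" template are the ones quoted above; the "GPT3" label for the second class, the helper names, and the `exp(reward / beta)` weighting (my reading of how the coarse reward enters the training objective) are assumptions.

```python
import math

# Coarse-grained rewards per data source, using the example values quoted above.
SOURCE_REWARD = {"gpt4": 1.0, "gpt35": 0.1}
# Class label placed before the assistant turn. "GPT4" comes from the quoted
# template; "GPT3" is a hypothetical label for the second class.
SOURCE_PREFIX = {"gpt4": "GPT4", "gpt35": "GPT3"}


def build_example(question: str, response: str, source: str) -> dict:
    """Turn one question/response pair into a class-conditioned example."""
    prefix = SOURCE_PREFIX[source]
    # Template reproduced as quoted in the text.
    text = f"User: {question}{prefix} Assistant: {response}"
    return {"text": text, "reward": SOURCE_REWARD[source]}


def loss_weight(reward: float, beta: float = 1.0) -> float:
    """Assumed per-example weight on the log-likelihood: exp(reward / beta)."""
    return math.exp(reward / beta)


example = build_example("What is 2+2?", "4", "gpt4")
print(example["text"])
print(loss_weight(1.0) > loss_weight(0.1))  # expert data gets the larger weight
```

The only supervision needed per example is the `source` key, which is what makes the labeling essentially free.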

In other words, with C-RLFT, we don’t need to ask a model or humans to label the data quality. This method only needs to know the data source.

C-RLFT effectively distinguishes between expert data (e.g., generated by GPT-4) and sub-optimal data (e.g., generated by GPT-3.5), so it can adapt to data of varying quality. According to their results, mixed-quality data from different sources is sufficient: a relative reward label per source is enough to differentiate between classes of data.

This approach outperforms fine-tuning on expert data alone, so it can replace the “SFT” step and doesn’t require any further training steps.

Nonetheless, I would be curious to check whether a second alignment step, using DPO or IPO, can further improve the results.

Details about the model are published in this article:

OpenChat: Advancing Open-source Language Models with Mixed-Quality Data

The model itself is available on the Hugging Face hub:

openchat/openchat-3.5-1210 (Apache 2.0 license)

Notux 8x7B-v1: DPO on top of DPO

While OpenChat doesn’t use preference optimization, Argilla took a totally different path by running a new round of DPO training on Mixtral-8x7B-Instruct-v0.1, which had already been trained with DPO by Mistral AI:

For DPO training, they used a cleaner version of UltraFeedback:

We don’t know yet how well it performs, but I think it’s an interesting public attempt to reapply DPO to an LLM already trained with DPO. The results will be insightful.
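To see what a second round of DPO means in practice, here is the standard DPO loss for a single preference pair, written in plain Python. This is a sketch, not Argilla's training code: the log-probability values and `beta=0.1` are made up for illustration, and I am assuming that the reference model in the second round is the already-aligned Mixtral-8x7B-Instruct-v0.1.

```python
import math


def dpo_loss(policy_chosen: float, policy_rejected: float,
             ref_chosen: float, ref_rejected: float, beta: float = 0.1) -> float:
    """DPO loss for one preference pair, given sequence log-probabilities."""
    # How much more the policy likes each answer than the reference does.
    chosen_gain = policy_chosen - ref_chosen
    rejected_gain = policy_rejected - ref_rejected
    margin = beta * (chosen_gain - rejected_gain)
    # -log(sigmoid(margin)), written as log(1 + exp(-margin)).
    return math.log1p(math.exp(-margin))


# At the start of training the policy is a copy of the reference model,
# so the margin is 0 and the loss is log(2).
start = dpo_loss(-12.0, -15.0, -12.0, -15.0)
print(round(start, 4))  # 0.6931

# After some training the policy favors the chosen answer more than the
# reference does, and the loss drops.
later = dpo_loss(-10.0, -18.0, -12.0, -15.0)
print(later < start)  # True
```

Because the loss only measures movement relative to the reference, a second round starting from a DPO-trained model rewards going further than the first round did, on whatever preference data is used this time.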

That’s all for this week.

If you like reading The Kaitchup, consider sharing it with friends and coworkers:

Have a nice weekend!