Hi Everyone,

In this edition of The Weekly Kaitchup:

Gemini Pro vs. GPT-3.5: Another Evaluation, Another Conclusion

Apple’s Efficient Inference with Flash Memory

The Kaitchup now has 1,340 subscribers. Thanks a lot for your support!

For the end of the year, the yearly subscription to The Kaitchup is 20% off! It’s 33% cheaper than subscribing monthly.

Gemini Pro vs. GPT-3.5: Another Evaluation, Another Conclusion

When Google announced Gemini, they presented an evaluation showing that Gemini Pro, the best version of Gemini currently available through Google’s API, significantly outperforms GPT-3.5 on some benchmarks.

We don’t know much about this evaluation. Many parameters, such as the prompts and the decoding hyperparameters, have not been disclosed, but we know that they have a huge influence on the final results.

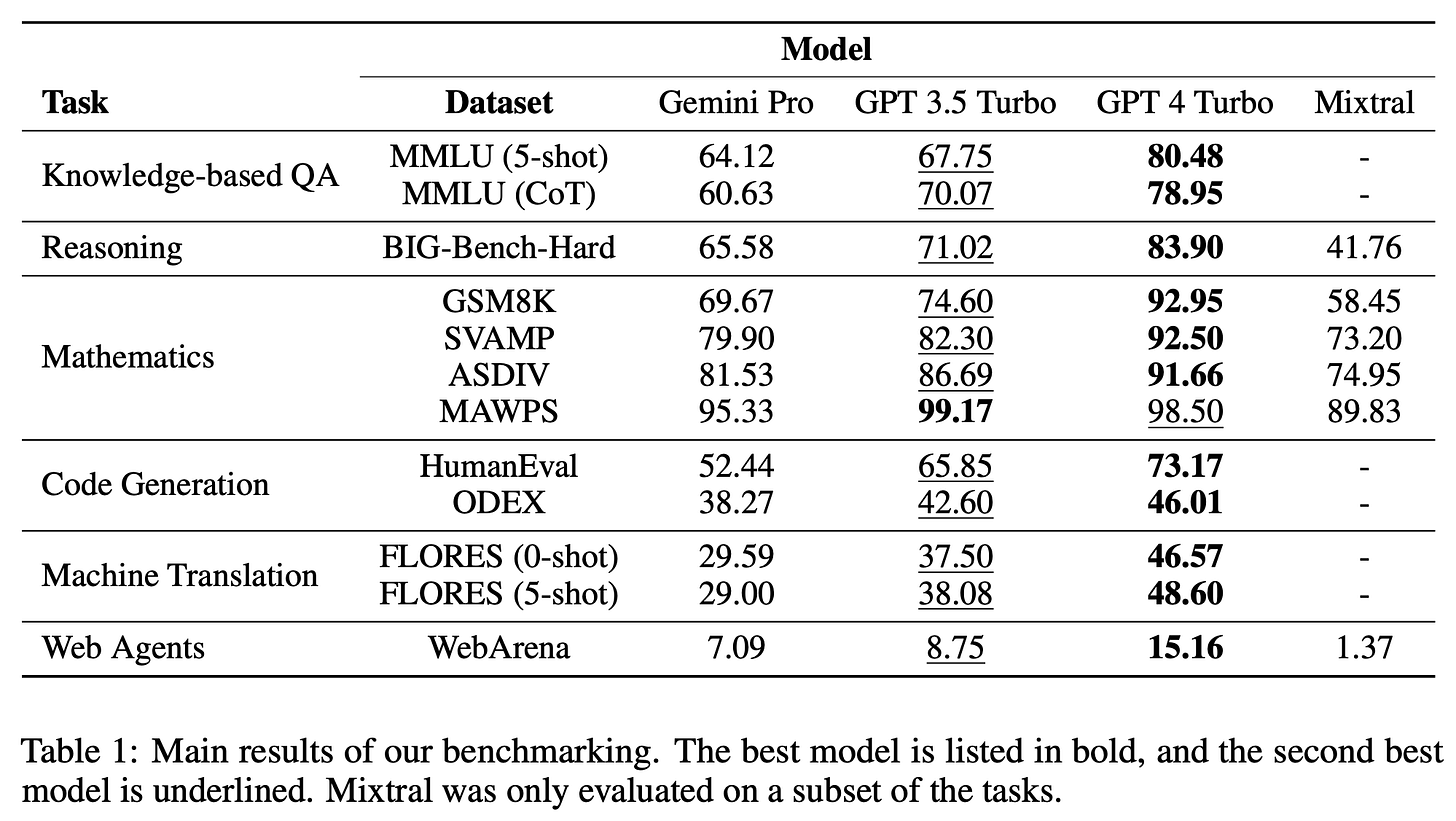

To better understand how Gemini compares with GPT models, CMU’s NeuLab ran a new evaluation on a much larger number of tasks:

Knowledge-based question answering (MMLU)

Reasoning (BIG-Bench Hard)

Math (GSM8k, SVAMP, ASDIV, MAWPS)

Code generation (HumanEval, ODEX)

Translation (FLORES)

Web Instruction Following (WebArena)

They ran Gemini Pro, GPT-3.5 Turbo, GPT-4 Turbo, and Mixtral on these benchmarks using the same prompts and the same decoding hyperparameters for all of them.

Here are the results:

They are very different from what Google presented. In this evaluation, GPT-3.5 appears much better than Gemini Pro.

Mixtral seems very far from the performance of Gemini Pro and the GPT models on all the benchmarks. One of the founders of Mistral AI publicly reached out to NeuLab (on X) to understand why the results looked so bad. It seems that NeuLab used a custom version of Mixtral instead of the official model. They plan to rerun the evaluation with Mistral’s API.

Update: A few hours after I published this, Graham Neubig released a new evaluation showing different results. For Mixtral, they used Mistral’s API, and they also modified the prompts of some benchmarks to match those Google used to evaluate Gemini. Same models, different prompts, and, indeed, totally different results:

Note that these results do not show that Gemini Pro or Mixtral is bad, or worse than GPT-3.5.

They show that many parameters strongly impact the results of an evaluation. With other prompt templates or hyperparameters, Mixtral and Gemini can perform much better, as shown in the evaluations published by their respective creators.

For instance, CMU used some of the evaluation tasks that Google also used to evaluate Gemini. For HumanEval, Google reports a score of 67.7 for Gemini Pro, while CMU found 52.44: same task, same model, but different hyperparameters, hence different results.
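HumanEval scores are usually reported as pass@k: the probability that at least one of k generated programs passes the unit tests. Even "pass@1" hides a choice: it can come from a single greedy output per problem, or be estimated from many samples drawn at some temperature. Here is a sketch of the standard unbiased pass@k estimator from the Codex paper (Chen et al., 2021). I’m assuming, not asserting, that both reported scores are pass@1, and the per-problem counts below are made up for illustration.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate for one problem.

    n: number of programs sampled for the problem
    c: number of those programs that pass the unit tests
    k: number of attempts the metric allows
    """
    if n - c < k:
        # Any subset of k samples necessarily contains a correct one.
        return 1.0
    # 1 - P(all k samples drawn without replacement are incorrect)
    return 1.0 - comb(n - c, k) / comb(n, k)


def benchmark_pass_at_k(results: list, k: int) -> float:
    """Average pass@k (in %) over problems; `results` holds one (n, c) pair per problem."""
    return 100.0 * sum(pass_at_k(n, c, k) for n, c in results) / len(results)


# Hypothetical outcomes for 3 problems, 10 sampled programs each.
results = [(10, 7), (10, 0), (10, 10)]
print(round(benchmark_pass_at_k(results, k=1), 2))  # 56.67
print(round(benchmark_pass_at_k(results, k=5), 2))  # 66.67
```

With greedy decoding, n = 1 and c is 0 or 1 for each problem, so the score depends on one output per problem; with sampling, it also depends on the temperature and on n.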

The takeaway: If you want to know the performance of a model, run your own evaluation.
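Running your own evaluation mostly means pinning down the settings that this comparison shows matter: the same prompt template and decoding hyperparameters for every model, reported together with the score. The following is a minimal sketch of that idea, not a full harness. `generate` is a hypothetical callable wrapping whatever model or API you use, exact match is just one possible metric, and the toy model only exists to show that changing the prompt template alone can flip the score.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class EvalConfig:
    """Everything that must stay identical across the models being compared."""
    prompt_template: str        # must contain "{question}"
    temperature: float = 0.0    # many APIs treat 0.0 as greedy decoding
    max_new_tokens: int = 256


@dataclass
class EvalReport:
    model_name: str
    config: EvalConfig          # kept with the score so the run can be reproduced
    accuracy: float


def evaluate(model_name: str,
             generate: Callable[..., str],
             dataset: list,
             config: EvalConfig) -> EvalReport:
    """Exact-match accuracy of `generate` on (question, reference) pairs."""
    correct = 0
    for question, reference in dataset:
        prompt = config.prompt_template.format(question=question)
        answer = generate(prompt,
                          temperature=config.temperature,
                          max_new_tokens=config.max_new_tokens)
        if answer.strip() == reference.strip():
            correct += 1
    return EvalReport(model_name, config, correct / len(dataset))


# Hypothetical stub model: it only answers tersely when the prompt ends with "Answer:".
def toy_generate(prompt: str, temperature: float, max_new_tokens: int) -> str:
    return "4" if prompt.endswith("Answer:") else "The answer is 4."


data = [("What is 2 + 2?", "4")]
terse = evaluate("toy", toy_generate, data, EvalConfig("Q: {question}\nAnswer:"))
plain = evaluate("toy", toy_generate, data, EvalConfig("{question}"))
print(terse.accuracy, plain.accuracy)  # 1.0 0.0
```

Same model, same question, different template: the score goes from 0 to 1, which is exactly why the config should travel with the number.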

Apple’s Efficient Inference with Flash Memory

Apple published interesting work on using flash memory for LLM inference.

LLM in a flash: Efficient Large Language Model Inference with Limited Memory

The method relies on an inference cost model that reflects how flash memory behaves. This model guides optimization in two areas: reducing the volume of data transferred from flash, and reading data in larger, more contiguous chunks.
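The paper’s cost model isn’t reproduced here, but the intuition behind these two goals can be sketched with a toy model, which is my assumption and not Apple’s: every read from flash pays a fixed latency, then transfers its bytes at a limited bandwidth. Under this model, reading fewer bytes helps, and so does reading the same bytes in fewer, larger chunks. The latency and bandwidth values are hypothetical placeholders, not measurements.

```python
import math


def flash_read_time_s(total_bytes: int, chunk_bytes: int,
                      latency_s: float = 1e-4,
                      bandwidth_bytes_per_s: float = 1e9) -> float:
    """Toy cost model (hypothetical parameters): each read pays a fixed
    latency, then its bytes are transferred at a fixed bandwidth."""
    num_reads = math.ceil(total_bytes / chunk_bytes)
    return num_reads * latency_s + total_bytes / bandwidth_bytes_per_s


KiB, MiB = 2**10, 2**20
# Same 100 MiB of weights, read in small vs. large chunks.
small_chunks = flash_read_time_s(100 * MiB, 4 * KiB)
large_chunks = flash_read_time_s(100 * MiB, 64 * KiB)
# Half the data (e.g., thanks to reusing what is already in DRAM), 64 KiB chunks.
less_data = flash_read_time_s(50 * MiB, 64 * KiB)
print(f"{small_chunks:.3f} s, {large_chunks:.3f} s, {less_data:.3f} s")
# 2.665 s, 0.265 s, 0.132 s
```

With small chunks, the per-read latency dominates; once chunks are large, the only remaining lever is to transfer less data.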

The first technique, "windowing," reduces data transfer by reusing neurons that were already activated for recent tokens during inference, so they don’t have to be loaded from flash again. The second technique, "row-column bundling," exploits flash memory’s strength at sequential reads to increase the size of the data chunks read from flash.
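Here is a rough sketch of both ideas, based on my reading of the paper. Windowing keeps in DRAM the neurons that were active for the last few tokens and, for each new token, loads from flash only the active neurons not already resident. Row-column bundling stores, for each feed-forward neuron, the matching column of the up projection and row of the down projection next to each other, so one contiguous read fetches both. `NeuronWindowCache` and `bundle_ffn_weights` are hypothetical names, and the sets of active neurons are assumed to be given; how they are predicted is out of scope here.

```python
from collections import deque

import numpy as np


class NeuronWindowCache:
    """Toy 'windowing': keep in DRAM the union of neurons that were active
    for the last `window` tokens; load only what is missing."""

    def __init__(self, window: int):
        self.window = window
        self.history = deque()   # one set of active neuron ids per recent token
        self.resident = set()    # neuron ids currently held in DRAM

    def step(self, active: set) -> tuple:
        """Register a new token's active neurons.

        Returns (to_load, to_evict): neurons to read from flash, and neurons
        that fell out of the window and can be freed."""
        to_load = active - self.resident
        self.history.append(active)
        if len(self.history) > self.window:
            self.history.popleft()
        still_needed = set().union(*self.history)
        to_evict = self.resident - still_needed
        self.resident = still_needed
        return to_load, to_evict


def bundle_ffn_weights(w_up: np.ndarray, w_down: np.ndarray) -> np.ndarray:
    """Toy 'row-column bundling' for one FFN layer.

    w_up: (d_model, d_ff), w_down: (d_ff, d_model).
    Row i of the result is column i of w_up followed by row i of w_down,
    so all the weights of neuron i sit in one contiguous row."""
    return np.ascontiguousarray(np.concatenate([w_up.T, w_down], axis=1))


cache = NeuronWindowCache(window=2)
for active in [{1, 2, 3}, {2, 3, 4}, {3, 4, 5}]:
    to_load, to_evict = cache.step(active)
    print(sorted(to_load), sorted(to_evict))
# [1, 2, 3] []
# [4] []
# [5] [1]

rng = np.random.default_rng(0)
w_up = rng.standard_normal((8, 32))     # d_model=8, d_ff=32
w_down = rng.standard_normal((32, 8))
bundled = bundle_ffn_weights(w_up, w_down)
# One contiguous read per resident neuron, 2 * d_model values each.
rows = bundled[sorted(cache.resident)]
print(bundled.shape, rows.shape)  # (32, 16) (4, 16)
```

After the first token, each step only loads the newly activated neurons, and each of those loads is a single contiguous row twice as long as either projection slice on its own.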

This yields a 4-5x speedup on CPU and a 20-25x speedup on GPU compared with naive loading approaches. However, most of this acceleration seems to vanish with batch decoding.

Now, the big question is: what will Apple do with this technology?

This mainly targets devices with limited DRAM but plenty of flash memory, such as smartphones. Fast LLM inference on iPhones seems right around the corner.

That’s all for this week.

If you like reading The Kaitchup, consider sharing it with friends and coworkers.

Have a nice weekend!