Hi Everyone,

In this edition of The Weekly Kaitchup:

Major update of PEFT

Serving Thousands of Concurrent LoRA Adapters

Minimum Bayes Risk Decoding with Transformers

The Kaitchup now has 1,005 subscribers. Thanks a lot for your support!

If you are a free subscriber, consider upgrading to paid to access all the notebooks and articles. There is a 7-day trial that you can cancel anytime.

If you are a monthly paid subscriber, switch to a yearly subscription to get a 17% discount (2 months free)!

A Major Update for PEFT

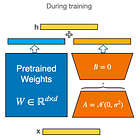

PEFT is a library implementing methods for parameter-efficient fine-tuning such as LoRA.

This week, Hugging Face released PEFT 0.6.0. You can find what changed in this version here:

The most interesting changes in my opinion:

Allow merging of LoRA weights when using 4bit and 8bit quantization (bitsandbytes)

Until now, merging LoRA weights was only possible with fp16 or fp32 models. Now, you can merge an adapter fine-tuned with QLoRA directly into the base model quantized with NF4. While it’s a convenient functionality, I don’t recommend it. I tried this new version of PEFT and confirmed that a model with the LoRA adapter merged into its 4-bit weights performs significantly worse than the same model with the adapter kept loaded on top. This happens because LoRA adapters have a small number of fp16 parameters, and merging compresses them into a low-precision data type.
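To get an intuition for this loss, here is a minimal toy sketch. It is not how bitsandbytes or PEFT implement the merge: I assume a simple uniform 4-bit quantization instead of NF4, and the weights and the LoRA update are made-up numbers. It compares keeping the update in high precision on top of the 4-bit weights against merging it and quantizing the result again.

```python
def quantize_absmax(values, bits=4):
    """Symmetric uniform quantization: return integer codes and the step size."""
    levels = 2 ** (bits - 1) - 1  # 7 positive levels for 4 bits
    scale = max(abs(v) for v in values) / levels
    codes = [round(v / scale) for v in values]
    return codes, scale


def dequantize(codes, scale):
    """Map integer codes back to floating-point values."""
    return [c * scale for c in codes]


# Made-up numbers, for illustration only
base = [0.70, -0.33, 0.12, -0.62, 0.28, 0.04]    # original high-precision weights
delta = [0.02, -0.01, 0.015, 0.01, -0.02, 0.01]  # LoRA update (B @ A), small values

# Weights that the frozen 4-bit base model effectively uses
codes, scale = quantize_absmax(base)
base_4bit = dequantize(codes, scale)

# Option 1: keep the adapter on top (the update stays in high precision)
unmerged = [w + d for w, d in zip(base_4bit, delta)]

# Option 2: merge, then quantize the merged weights back to 4 bits
merged_codes, merged_scale = quantize_absmax(unmerged)
merged = dequantize(merged_codes, merged_scale)

# How far the merged weights drift from the weights that were fine-tuned
error = max(abs(m - u) for m, u in zip(merged, unmerged))
print(f"largest LoRA update: {max(abs(d) for d in delta):.4f}")
print(f"largest rounding error after merging: {error:.4f}")
```

In this toy case, the rounding error introduced by the second quantization is of the same order of magnitude as the update itself, so part of what the adapter learned is rounded away.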

There are other solutions to do a better merge but none of them are perfect:

Increase the speed of adapter layer initialization: This should be most notable when creating a PEFT LoRA model on top of a large base model

This will save us precious seconds.

For some adapters like LoRA, it is now possible to activate multiple adapters at the same time

That’s an experimental feature. I’m curious to see what we can do with it. It seems that it was mainly proposed for image generation. I couldn’t find examples for text generation yet.
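As a rough sketch of what activating several LoRA adapters at once means, assuming that each active adapter simply adds its own scaled low-rank update to the output of the frozen layer: the function names, adapter names, and numbers below are hypothetical and this is not PEFT's API.

```python
def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]


def lora_forward(x, base_weight, adapters, active):
    """Output of the frozen layer plus the update of every active adapter."""
    out = matvec(base_weight, x)
    for name in active:
        a, b, scaling = adapters[name]
        update = matvec(b, matvec(a, x))  # B @ (A @ x)
        out = [o + scaling * u for o, u in zip(out, update)]
    return out


# Toy 2x2 layer with two rank-1 adapters (A is 1x2, B is 2x1)
base_weight = [[1.0, 0.0], [0.0, 1.0]]
adapters = {
    "style": ([[1.0, 1.0]], [[0.5], [0.0]], 1.0),
    "subject": ([[1.0, -1.0]], [[0.0], [0.25]], 2.0),
}
x = [2.0, 1.0]

print(lora_forward(x, base_weight, adapters, active=[]))
print(lora_forward(x, base_weight, adapters, active=["style"]))
print(lora_forward(x, base_weight, adapters, active=["style", "subject"]))
```

The base weights are never modified, which is why adapters can be switched on and off in any combination.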

S-LoRA: Serving Thousands of Concurrent LoRA Adapters

Fine-tuning multiple LoRA adapters for different tasks and domains is straightforward and cheap. But then, at inference time, how do we manage these adapters?

Let’s imagine that we have a user who needs the model to do a particular task A, and then another task B. We have an adapter fine-tuned to perform A and another one fine-tuned to perform B.

Switching between A and B can take some time, especially for large models. We have to unload the current adapter and then load the new one. For some applications, this delay might not be acceptable.

With S-LoRA, we can now serve many adapters concurrently:

S-LoRA: Serving Thousands of Concurrent LoRA Adapters

S-LoRA stores all adapters in the main memory (CPU RAM) and transfers them to the GPU memory when needed. The problem here is that constantly moving adapters of different sizes in and out of the GPU fragments the VRAM: the free memory ends up scattered in small non-contiguous chunks, which wastes space and adds I/O overhead. To reduce this fragmentation, S-LoRA uses “Unified Paging”:

Unified Paging uses a unified memory pool to manage dynamic adapter weights with different ranks and KV cache tensors with varying sequence lengths.
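To make the idea more concrete, here is a toy sketch of a single pool of fixed-size pages shared by adapter weights and KV caches. This is not S-LoRA's implementation: the class and method names are hypothetical, and I assume, for illustration, that an adapter needs one page per unit of rank and a KV cache one page per token.

```python
class UnifiedPagePool:
    """Toy pool of fixed-size pages shared by adapters and KV caches."""

    def __init__(self, num_pages):
        self.free_pages = list(range(num_pages))
        self.owners = {}  # name -> list of page indices

    def allocate(self, name, num_pages):
        """Give `num_pages` pages to `name`; the pages need not be contiguous."""
        if name in self.owners:
            raise ValueError(f"{name} already holds pages")
        if num_pages > len(self.free_pages):
            raise MemoryError(f"not enough free pages for {name}")
        pages = [self.free_pages.pop() for _ in range(num_pages)]
        self.owners[name] = pages
        return pages

    def release(self, name):
        """Return all the pages held by `name` to the pool."""
        self.free_pages.extend(self.owners.pop(name))


pool = UnifiedPagePool(num_pages=16)
pool.allocate("adapter_A", 8)     # adapter of rank 8
pool.allocate("kv_request_1", 5)  # KV cache for a 5-token sequence
pool.release("adapter_A")         # adapter A is evicted from the GPU
pool.allocate("adapter_B", 4)     # adapter of rank 4 reuses freed pages
pool.allocate("kv_request_2", 6)  # KV cache for a 6-token sequence

print({name: sorted(pages) for name, pages in pool.owners.items()})
print("free pages:", len(pool.free_pages))
```

Since every allocation is made of same-size pages that can sit anywhere in the pool, any page freed by an adapter can be reused by a KV cache, and vice versa.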

You can find an implementation of S-LoRA here:

https://github.com/S-LoRA/S-LoRA (Apache 2.0 License)

Minimum Bayes Risk Decoding with Transformers

Standard inference returns the most probable outputs according to the model. Instead, Minimum Bayes Risk (MBR) decoding works as follows (for the case of sampling-based MBR):

Generate several samples with the model.

Score each sample against the other samples using a metric, for instance, ROUGE, BLEU, or BLEURT.

Return the sample with the highest score.

You can see it as a method of searching for the most consensual output according to a metric.
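The three steps above can be sketched in a few lines of Python. This is a minimal illustration, not the code of a particular library: the samples are hard-coded instead of being generated by a model, and a simple unigram F1 stands in for a real metric such as ROUGE, BLEU, or BLEURT.

```python
from collections import Counter


def unigram_f1(hypothesis, reference):
    """Token-overlap F1, a crude stand-in for a real metric."""
    hyp, ref = hypothesis.split(), reference.split()
    overlap = sum((Counter(hyp) & Counter(ref)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(hyp)
    recall = overlap / len(ref)
    return 2 * precision * recall / (precision + recall)


def mbr_select(samples, utility=unigram_f1):
    """Return the sample with the highest average score against the others."""
    if len(samples) < 2:
        raise ValueError("MBR needs at least two samples")
    best_sample, best_score = None, float("-inf")
    for i, candidate in enumerate(samples):
        others = samples[:i] + samples[i + 1:]
        score = sum(utility(candidate, other) for other in others) / len(others)
        if score > best_score:
            best_sample, best_score = candidate, score
    return best_sample, best_score


# Step 1 would sample these from the model; they are hard-coded here
samples = [
    "the cat sits on the mat",
    "the cat is sitting on the mat",
    "a cat sits on a mat",
    "dogs bark loudly at night",
]
best, score = mbr_select(samples)
print(best, round(score, 3))
```

The outlier about dogs shares no token with the other samples, so it gets the lowest score, while the sample closest to all the others is returned.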

It’s a technique commonly used in some applications such as machine translation. It is known to yield better results than standard inference.

Now, we can do MBR decoding with Hugging Face Transformers thanks to the work by the University of Zurich (they’ve worked a lot on MBR for machine translation):

You can use MBR decoding for any language generation task and with any of the metrics implemented by Hugging Face’s evaluate.

Here is an example using Llama 2:

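```python
from mbr import MBR, MBRGenerationConfig
from transformers import AutoTokenizer, LlamaForCausalLM, pipeline

# Wrap the model class to add MBR decoding support
model = MBR(LlamaForCausalLM).from_pretrained(...)
# The tokenizer must match the checkpoint loaded above
tokenizer = AutoTokenizer.from_pretrained(...)

mbr_config = MBRGenerationConfig(
    num_samples=10,  # number of samples to generate and compare
    metric="chrf",   # metric used to score the samples against each other
)

generator = pipeline("text-generation", model=model, tokenizer=tokenizer)
output = generator("Hello,", mbr_config=mbr_config, tokenizer=tokenizer)
```

This would work very well with my Llama 2 models for translation (I recommend using the metric “COMET”).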


That’s all for this week.

If you like reading The Kaitchup, consider sharing it with friends and coworkers.

Have a nice weekend!