Hi Everyone,

The Kaitchup turns 2! 🎉

In its second year, growth has been steady and linear, with a growing number of highly engaged readers, especially from major tech companies like Google, Amazon, NVIDIA, AMD, Microsoft, Dell, Tencent, Huawei, Samsung, Apple, and many more.[1]

Startups, particularly in AI, are also tuning in in greater numbers, which is awesome to see.

📌 One quick note: Many of you at these companies seem to be subscribing individually, often paying your subscription out of pocket. But you may be able to expense it! Most companies (and even universities) have professional development or learning budgets for exactly this kind of thing. Talk to your manager, and consider bundling subscriptions with your team.

Thanks for being part of this journey. Year three is going to be even better!

What's Ahead for Year 3 of The Kaitchup

Over the past year, I’ve mainly focused on post-training and quantizing LLMs.

Moving on from Quantization (in The Kaitchup)

In Year 3, I’ll be dialing back coverage of quantization in The Kaitchup. These articles consistently underperform compared to my other articles, and the current landscape makes it harder to bring fresh value:

The GGUF wave has largely standardized things, leaving little room for alternatives.

GGUF is now well-supported, highly accurate, and frankly "good enough" for most use cases.

As a result, quantization content feels too niche for The Kaitchup's broader audience.

But since I’m deeply interested in the topic, I’ll continue exploring quantization (the “scientific” side) over in The Salt.[2]

Also, I’ve stopped publishing new quantized models myself. It’s no longer needed, I feel. Unsloth is doing an amazing job. They often release high-quality quantized versions of new models on day one. If you're not into GGUF or bitsandbytes, check out OPEA (which includes the people behind AutoRound), who regularly publish state-of-the-art quantized models in AWQ and GPTQ formats.

A Mess of Quantized Models But No Benchmarks

We now have tons of quantized models across different bitwidths, formats, and tools. Choosing between them has become confusing. And here’s the big issue (which few seem to talk about):

👉 Most quantized models are released without any evaluation.

There’s a mad rush to be the first to publish a GGUF or GPTQ version as soon as a new model drops, but that often means no one checks how well they actually perform. These models are often “downloaded” thousands of times.

Know this:

Quantized models can fail badly in some use cases: non-English languages, long context lengths, etc. And there’s often no way to know without proper evaluation.
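One way to make this concrete is to compare a quantized model against its full-precision baseline slice by slice, rather than on a single aggregate score. Here is a minimal sketch, assuming you already have per-slice accuracies. The function name, slice names, scores, and the 2-point threshold are all hypothetical placeholders, not measurements:

```python
def flag_regressions(baseline, quantized, max_drop=2.0):
    """Return slices where the quantized model loses more than max_drop points."""
    flagged = {}
    for slice_name, base_score in baseline.items():
        if slice_name not in quantized:
            continue  # slice was not evaluated for the quantized model
        drop = base_score - quantized[slice_name]
        if drop > max_drop:
            flagged[slice_name] = round(drop, 2)
    return flagged


# Placeholder numbers for illustration only (not real benchmark results).
baseline = {"english_qa": 70.0, "non_english_qa": 60.0, "long_context": 55.0}
quantized = {"english_qa": 69.5, "non_english_qa": 52.0, "long_context": 47.5}

print(flag_regressions(baseline, quantized))
```

With these made-up numbers, the English slice looks fine while the other two are flagged, which is exactly the kind of failure an average score would hide.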

I want to help fix that.

I’m working on a public leaderboard for quantized models. It won’t answer every question, but it helps me understand the LLM landscape, and I believe it’ll help you too. Expect more details next week.

What’s Next for The Kaitchup

You’ll see:

Fewer end-to-end notebooks (Unsloth and Hugging Face now excel here).

More in-depth breakdowns of algorithms and key code snippets.

Also, I’ll likely send fewer emails but pack more into each edition of The Weekly Kaitchup.

Not great for SEO, but better for readers like you.

“By the way, where’s your book??”

Yes! I’m still working on it! Right now, I’m deep into the fourth chapter, which focuses on fine-tuning quantized LLMs.

I’ve rewritten it several times to keep up with the nonstop stream of new techniques… but I’ve finally decided to focus on what actually works in practice, rather than trying to cover every shiny new method (interesting, but it could be a completely separate book on its own…).

The plan is to finish the chapter this summer, before September. After this chapter, I’ll do a full review and update of Chapters 1 to 3.

Thanks so much for your patience and all the kind messages. They really keep me going!

Moving on to The Weekly Kaitchup’s news.

The Good Surprise of the Week: LiquidAI Releasing Open Models!

T5Gemma: A Comeback of the Encoder-Decoder?

SmolLM3 by Hugging Face

The Good Surprise of the Week: LiquidAI Releasing Open Models!

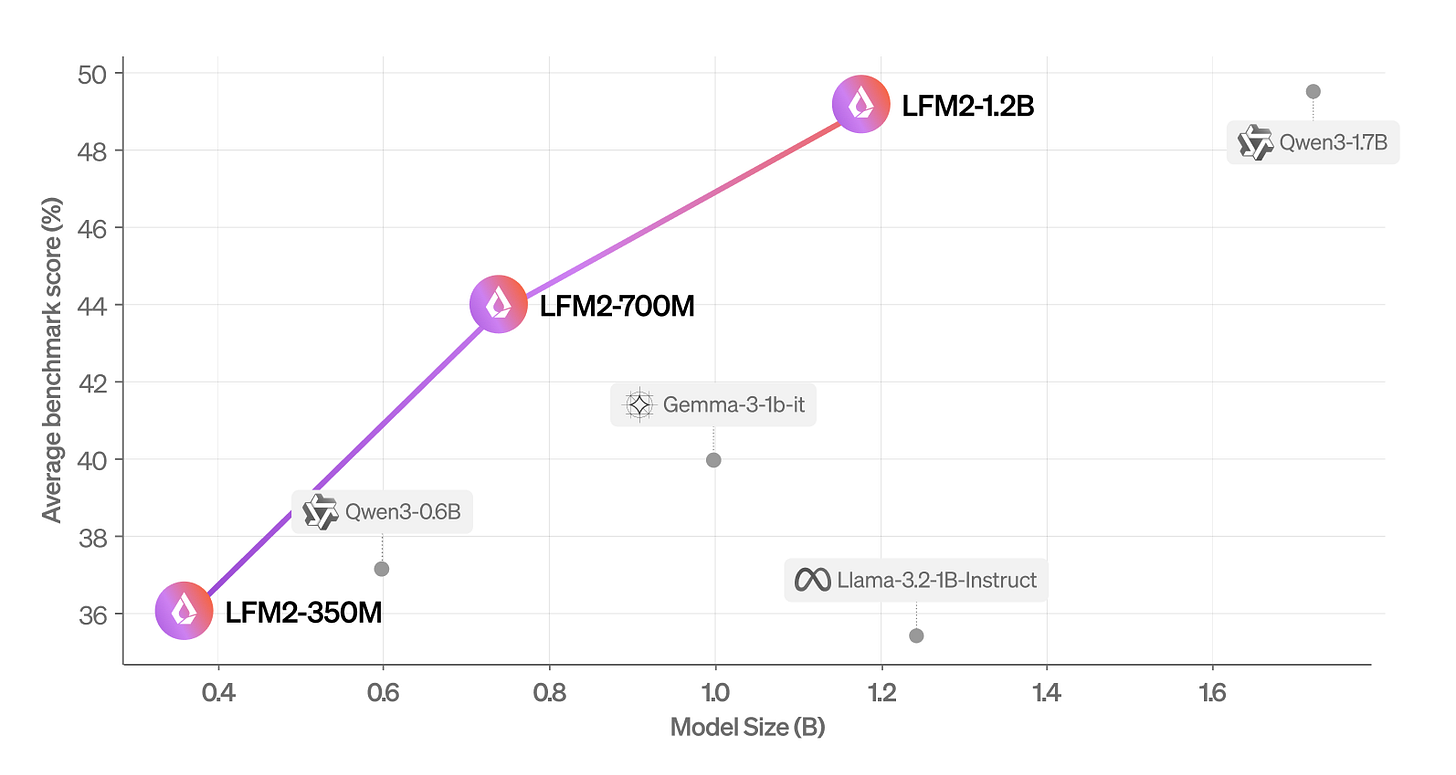

Liquid AI is building models optimized for inference efficiency, using a hybrid architecture that blends convolutional layers with self-attention. This design significantly improves inference speed, especially on edge devices, while still delivering strong general performance.

The flagship of this latest release is LFM2-1.2B, accompanied by 700M and 350M variants. It combines 10 convolutional layers with 6 attention layers, supports a 32k context window, and runs efficiently across CPUs, GPUs, and NPUs.

Trained on 10 trillion tokens from the web, code, and multilingual sources, it also supports structured tool use and uses a ChatML-style interface.
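For readers unfamiliar with ChatML, here is a rough sketch of what a ChatML-style prompt looks like. It uses the generic `<|im_start|>` / `<|im_end|>` markers; the helper name `to_chatml` is mine, and LFM2's exact template (extra special tokens, tool-call formatting) may differ, so in practice you would rely on the tokenizer's own chat template via `tokenizer.apply_chat_template`:

```python
def to_chatml(messages, add_generation_prompt=True):
    """Render a list of {"role", "content"} dicts as a generic ChatML-style prompt."""
    parts = []
    for message in messages:
        parts.append(
            f"<|im_start|>{message['role']}\n{message['content']}<|im_end|>\n"
        )
    if add_generation_prompt:
        # Open an assistant turn so the model continues from here.
        parts.append("<|im_start|>assistant\n")
    return "".join(parts)


prompt = to_chatml([
    {"role": "system", "content": "You are a helpful assistant."},
    {"role": "user", "content": "What is a hybrid architecture?"},
])
print(prompt)
```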

Despite its modest size and hybrid design, LFM2-1.2B outperforms models like Qwen3-0.6B and Llama3.2-1B, and comes surprisingly close to Qwen3-1.7B on benchmarks like MMLU, GSM8K, and the newly released IFBench.

The models are available on Hugging Face, along with fine-tuning notebooks in the model cards. I plan to explore them further and may write a deeper dive soon.

Hugging Face: 💧 LFM2

The Strange Release of the Week: A Comeback of the Encoder-Decoder? But Why?

T5Gemma is a new family of encoder-decoder models that revisits the classic seq2seq architecture by adapting pre-trained decoder-only Gemma 2 models. Using a flexible method called model adaptation, Google initializes both the encoder and decoder with decoder-only weights, then continues pretraining using UL2 or PrefixLM objectives.

This setup enables configurable architectures, such as pairing a large encoder with a smaller decoder, to better optimize inference efficiency and model quality, especially for input-heavy tasks like summarization and question answering.
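To illustrate the PrefixLM objective mentioned above, here is a minimal sketch of how one token sequence can be turned into an encoder-decoder training example: the prefix goes to the encoder, and the decoder learns to predict the rest. This is the generic formulation, not Google's training code; the function name, the `bos_id` value, and the token IDs are hypothetical:

```python
def prefix_lm_example(token_ids, split, bos_id=0):
    """Split one sequence into encoder input and decoder input/target."""
    if not 0 < split < len(token_ids):
        raise ValueError("split must leave a non-empty prefix and suffix")
    prefix, suffix = token_ids[:split], token_ids[split:]
    return {
        "encoder_input": prefix,                  # read bidirectionally by the encoder
        "decoder_input": [bos_id] + suffix[:-1],  # suffix shifted right, read causally
        "decoder_target": suffix,                 # next-token labels for the decoder
    }


example = prefix_lm_example([11, 12, 13, 14, 15, 16], split=4)
print(example)
```

Because the encoder sees the whole prefix at once, a long input (a document to summarize, for instance) is encoded a single time, which is why a large encoder with a small decoder can make sense for input-heavy tasks.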

T5Gemma often matches or outperforms its decoder-only counterparts on various benchmarks. However, some caveats remain: the models may have been exposed to new pretraining data not used in Gemma 2, and variants like T5Gemma 9B-9B effectively double the parameter count of the Gemma 2 9B baseline.

Still, the adaptation approach is compelling, and the performance improvements, especially after instruction tuning, make this a development worth watching.

Hugging Face: T5Gemma

The models are not impressive IMO, but at least we know what happens if we go back to encoder-decoder. I see it as very good research work.

SmolLM3 by Hugging Face

I had been noticing more and more commits mentioning “SmolLM3” in Hugging Face’s repositories, and this week, they finally released it.

Hugging Face: SmolLM3

SmolLM3 is a fully open 3B language model (3.08B parameters) designed to deliver strong performance while staying efficient. The goal is similar to what Mistral AI aims for with Mistral Small: not the best, but good enough and very fast.

It outperforms other 3B models like Llama 3.2 and Qwen 2.5, and stays competitive with larger 4B models.

The model supports a 128k context and six languages, and offers both reasoning and non-reasoning modes, like Qwen3, via a dual-mode instruction setup. It’s built entirely on public datasets with a transparent training pipeline (very similar to practices seen at AI2).

The training setup includes 11T tokens across three pre-training stages, mixing web, code, and math data, followed by mid-training for long context and reasoning. Architectural changes like Grouped Query Attention and NoPE improve both inference efficiency and long context handling. Performance was further improved via supervised finetuning and alignment, and losses in long-context tasks were mitigated through model merging.
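Why does Grouped Query Attention help at inference time? Mostly because it shrinks the KV cache: several query heads share one key/value head, so fewer keys and values are stored per token. Here is a back-of-the-envelope sketch. The configuration below is hypothetical and is not SmolLM3's actual set of hyperparameters:

```python
def kv_cache_bytes(num_layers, num_kv_heads, head_dim, seq_len, bytes_per_value=2):
    """Memory for the keys and values of one sequence (the factor 2 is K plus V)."""
    return 2 * num_layers * num_kv_heads * head_dim * seq_len * bytes_per_value


# Hypothetical configuration, chosen only to illustrate the arithmetic.
layers, query_heads, kv_heads, head_dim, context = 32, 16, 4, 128, 128_000

mha = kv_cache_bytes(layers, query_heads, head_dim, context)  # one KV head per query head
gqa = kv_cache_bytes(layers, kv_heads, head_dim, context)     # KV heads shared across groups

print(f"MHA: {mha / 1e9:.1f} GB, GQA: {gqa / 1e9:.1f} GB, ratio: {mha / gqa:.0f}x")
```

The saving scales with the ratio of query heads to KV heads, and it matters most at long context lengths, where the KV cache dominates memory use.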

HF has made huge progress since I covered the first SmolLM, almost one year ago.

The Salt

The Salt is my other newsletter that takes a more scientific approach. In The Salt, I primarily feature short reviews of recent papers (for free), detailed analyses of noteworthy publications, and articles centered on LLM evaluation.

This week in The Weekly Salt, I reviewed:

⭐ Should We Still Pretrain Encoders with Masked Language Modeling?

Does Math Reasoning Improve General LLM Capabilities? Understanding Transferability of LLM Reasoning

That’s all for this week.

If you like reading The Kaitchup, consider sharing it with friends and coworkers (there is a 20% discount for group subscriptions).

Have a nice weekend!

[1] To extract these company names from my subscriber list, I relied on the domain names of the subscribers’ email addresses.

[2] Of course, I’ll still write about quantization in The Kaitchup, but much less often, and only to cover very substantial progress or impressive frameworks. For instance, expect articles covering the FP4 wave.