The Recipe for Extremely Accurate and Cheap Quantization of 70B+ LLMs

Cost and accuracy for quantizing large models to 4-bit and 2-bit

Quantizing large language models (LLMs), such as Llama 3.1 70B and Qwen2.5 72B, can significantly reduce their size with minimal performance loss. Once quantized, these models can run on lower-end GPUs, drastically cutting inference costs.
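To get a feel for the size reduction, here is a rough back-of-the-envelope sketch. It assumes a model with exactly 70 billion parameters and counts only the weights themselves, ignoring the small overhead of quantization metadata (scales and zero points) and any layers left unquantized. The helper name `weight_memory_gb` is mine, for illustration only.

```python
def weight_memory_gb(num_params: float, bits_per_weight: float) -> float:
    """Approximate storage needed for the weights alone, in GB (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


NUM_PARAMS = 70e9  # assumption: a 70B-parameter model

for bits in (16, 4, 2):
    size_gb = weight_memory_gb(NUM_PARAMS, bits)
    print(f"{bits:>2}-bit weights: ~{size_gb:.1f} GB")
```

Under these assumptions, the weights shrink from about 140 GB at 16-bit to about 35 GB at 4-bit and 17.5 GB at 2-bit. Real checkpoints will be somewhat larger because of the metadata ignored here.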

However, the quantization process itself can be resource-intensive and expensive, especially if you must search for good quantization hyperparameters. Depending on the algorithm, it might take days (e.g., with AQLM) or only a few hours (e.g., with GPTQ), and GPU memory requirements vary significantly. Without careful planning, quantization costs can easily exceed $100 for the largest models.
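The cost of a run is essentially GPU rental time multiplied by the hourly price, so run time dominates the bill. The sketch below illustrates this with made-up numbers: the hourly rate and both durations are hypothetical placeholders, not measurements of any particular algorithm or cloud provider.

```python
def rental_cost_usd(hours: float, usd_per_gpu_hour: float, num_gpus: int = 1) -> float:
    """Rental cost of a job: duration x hourly price x number of GPUs."""
    return hours * usd_per_gpu_hour * num_gpus


# Hypothetical placeholders, chosen only to illustrate the arithmetic.
HYPOTHETICAL_RATE = 2.50  # USD per GPU-hour (assumption)

multi_day_run = rental_cost_usd(hours=48, usd_per_gpu_hour=HYPOTHETICAL_RATE)
few_hour_run = rental_cost_usd(hours=3, usd_per_gpu_hour=HYPOTHETICAL_RATE)

print(f"48-hour run: ${multi_day_run:.2f}")
print(f" 3-hour run: ${few_hour_run:.2f}")
```

With these placeholder values, a two-day run lands above $100 while a three-hour run stays under $10, before counting any repeated runs for hyperparameter search.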

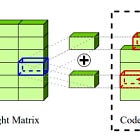

In this article, I’ll demonstrate how to quantize a 70B-class LLM (such as Llama 3.1 70B or Qwen2.5 72B) to both 4-bit and 2-bit precision for under $10. This recipe uses AutoRound, a fast, state-of-the-art, open-source quantization framework developed by Intel. AutoRound makes efficient use of CPU RAM and requires minimal GPU memory, making it both cost-effective and accessible. The resulting models retain 99.4% and 88.3% of the original performance when quantized to 4-bit and 2-bit, respectively.

You can reproduce this quantization, or apply it to your own models, directly in this notebook: