Start Here: Learn How to Adapt LLMs to Your Tasks and Budget

Fine-Tuning, Inference, Quantization, Dataset Processing and Generation, and Evaluation

If you’re new to LLMs or just want to know whether The Kaitchup is for you, start with the articles below. They’re categorized for a smooth learning path and cover the core techniques for adapting LLMs to your data and hardware at low cost while preserving quality.

You’ll find hands-on tutorials for fine-tuning and running models on your own GPU. All tutorials and notebooks are regularly updated to match current releases of PyTorch, Transformers, and popular models.

Each article begins with a short overview of what you’ll learn and when to use it.

Fine-Tuning LLMs

Did you know that LoRA fine-tuning can be as good as full fine-tuning? It runs on consumer GPUs for most LLMs, and it is simple to set up. Here are a few articles showing you how to do it right:

Tests how LoRA holds up beyond toy setups, including large ranks, dataset sizes, and adapter placement.

Walks through QLoRA’s recipe (a frozen base model quantized to 4-bit, plus trainable low-rank adapters), which enables SFT on a single consumer GPU. Covers setup, memory budget, and expected quality/cost trade-offs.
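To make the memory budget concrete, here is a rough back-of-the-envelope sketch in Python. The model shape (parameter count, layer count, hidden size, and which projections receive adapters) is hypothetical, and the estimate covers weight storage only. It ignores activations, optimizer state, quantization constants, and framework overhead.

```python
# Rough weight-memory estimate for a QLoRA-style setup.
# All model dimensions below are hypothetical, chosen only for illustration.

def lora_params(d_in: int, d_out: int, rank: int) -> int:
    """Trainable parameters LoRA adds to one (d_out x d_in) weight matrix:
    A has shape (rank x d_in) and B has shape (d_out x rank)."""
    return rank * (d_in + d_out)

def gib(num_bytes: float) -> float:
    """Convert bytes to GiB."""
    return num_bytes / 1024**3

base_params = 8_000_000_000   # hypothetical 8B-parameter base model
num_layers = 32               # hypothetical
hidden = 4096                 # hypothetical
rank = 16                     # LoRA rank
adapted_per_layer = 4         # assumption: 4 square (hidden x hidden) projections per layer

base_bf16_bytes = base_params * 2      # 16 bits = 2 bytes per weight
base_4bit_bytes = base_params * 0.5    # 4 bits = 0.5 bytes per weight

adapter_params = num_layers * adapted_per_layer * lora_params(hidden, hidden, rank)
adapter_bf16_bytes = adapter_params * 2  # adapters kept in 16-bit precision

print(f"Base weights, bf16 : {gib(base_bf16_bytes):.2f} GiB")
print(f"Base weights, 4-bit: {gib(base_4bit_bytes):.2f} GiB")
print(f"LoRA adapters      : {adapter_params:,} params, {gib(adapter_bf16_bytes):.3f} GiB")
```

Under these assumptions, the adapters are a tiny fraction of the base model, so the dominant saving comes from storing the frozen weights in 4-bit.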

Adds AutoRound to the QLoRA stack to improve quantization quality and stability. Shows how to configure it and where it reliably beats vanilla QLoRA for the same hardware budget.

Compares SFT behavior and outcomes between Qwen3 base and reasoning variants. Details data choices, hyperparameters, and where each model type is preferable.

Local Inference

Compares serving stacks across throughput, latency, model coverage, and operational complexity. Provides guidance on when to pick vLLM (server inference, scaling) vs. Ollama (local/dev, simple deployment).

Shows how to host several adapters side-by-side on one base model with vLLM. Explains routing, template configuration, and pitfalls when swapping adapters at request time.
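As a rough illustration of selecting an adapter at request time: vLLM's OpenAI-compatible server can be started with `--enable-lora` and `--lora-modules name=path`, after which a request picks an adapter through the `model` field. The adapter names, paths, and base-model name below are hypothetical, and flags can change between vLLM releases, so treat this as a sketch rather than a reference.

```python
import json

# Hypothetical adapter names, assumed to be registered at server start, e.g.:
#   vllm serve <base-model> --enable-lora \
#       --lora-modules sql-adapter=/path/to/sql chat-adapter=/path/to/chat
ADAPTERS = {"sql": "sql-adapter", "chat": "chat-adapter"}
BASE_MODEL = "base-model"  # placeholder for the served base model name

def build_request(task: str, prompt: str, max_tokens: int = 128) -> dict:
    """Build a chat-completions payload routed to the adapter for `task`.
    Unknown tasks fall back to the base model."""
    return {
        "model": ADAPTERS.get(task, BASE_MODEL),
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }

payload = build_request("sql", "List all users created this week.")
print(json.dumps(payload, indent=2))
# This JSON would be POSTed to the server's /v1/chat/completions endpoint.
```

Keeping the task-to-adapter mapping in one place makes it easier to spot a request that silently falls back to the base model.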

Step-by-step deployment of LoRA adapters using Ollama’s model files and CLI.

Practical tuning guide for GGUF inference.

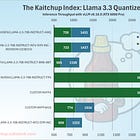

Quantization: Compress LLMs to Run the Largest Models on Your Computer

Explains how to quantize to GGUF with imatrix/K-quants. Includes commands, expected speed/accuracy, and when lower bit-widths are acceptable.

Benchmarks Qwen3 across low-bit settings, highlighting where accuracy holds and where it drops. Offers configuration tips for stable 4-bit and experimental 2-bit runs.

Summarizes NVFP4’s kernel/format advantages on Blackwell-class GPUs. Presents throughput gains and parity targets versus existing 4-bit formats.

Datasets

Describes a controlled pipeline to generate persona-driven dialogues for SFT.

Uses GLM-Z1 to generate chain-of-thought-style data with budget controls. Details sampling settings, verification passes, and dataset assembly for reasoning tasks.

Preference Optimization and Reinforcement Learning (RL)

Compares full-parameter DPO with LoRA-based DPO on cost, stability, and final preference metrics. Shows where LoRA matches full training and where it needs rank/LR adjustments.

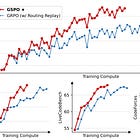

Introduces GRPO, its objective, and differences from PPO-style RLHF. Provides a runnable setup and guidance on rewards, sampling, and stability.
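One visible difference from PPO-style RLHF is that GRPO does not rely on a learned value model: it samples a group of completions for the same prompt and scores each one relative to the group. The sketch below shows only that advantage step, not the full clipped objective or KL term. The use of the population standard deviation and the `eps` value are assumptions; implementations differ on these details.

```python
from statistics import mean, pstdev

def group_relative_advantages(rewards, eps=1e-6):
    """Normalize rewards within one group of completions for the same prompt:
    advantage_i = (reward_i - group mean) / (group std + eps)."""
    mu = mean(rewards)
    sigma = pstdev(rewards)  # assumption: population std; some code uses sample std
    return [(r - mu) / (sigma + eps) for r in rewards]

# Hypothetical rewards for 4 sampled completions of one prompt
# (e.g. 1.0 = correct final answer, 0.0 = incorrect).
rewards = [1.0, 0.0, 0.0, 1.0]
advantages = group_relative_advantages(rewards)
print(advantages)
```

If every completion in a group gets the same reward, all advantages are zero and the group contributes no learning signal, which is one reason reward design and sampling diversity matter for stability.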

Evaluates GSPO against GRPO, especially on MoE architectures. Focuses on stability, efficiency, and why per-expert dynamics change the training recipe.