Optimum-Benchmark: How Fast and Memory-Efficient Is Your LLM?

Benchmarking quantization methods for Mistral 7B

When building applications with large language models (LLMs), knowing their running costs is critical. To optimize these costs, we must know precisely the hardware requirements of a given LLM. In other words, we need to know at least how much memory, and what type of memory, the model will need, how fast it is during inference, and several other performance-related metrics that characterize its operational behavior.

With optimum-benchmark, a framework developed by Hugging Face, we can generate a thorough assessment of the model's overall efficiency using key indicators such as memory usage, inference latency, and throughput.

In this article, I present optimum-benchmark and review its main features. Then, we will see how to use it to benchmark the performance of LLMs. For demonstration, I focus on benchmarking quantization methods applied to Mistral 7B.

My notebook running optimum-benchmark for Mistral 7B is available here:

Optimum-benchmark

For a given LLM, your hardware configuration may not have enough memory for inference or training. Even if it does, you want to know in advance, e.g., before deployment, how fast the model will be on your machine.

With optimum-benchmark, we can get an accurate picture of how fast and memory-efficient an LLM will be on a given hardware configuration. The framework can report on the following:

Memory usage

Latency for generation

Throughput for generation

There are many more metrics, listed on the GitHub page, but in my opinion these are the most useful ones. We will see how to measure them in the following section.

Another important feature of optimum-benchmark is that it can benchmark LLMs quantized with various schemes, such as GPTQ and bitsandbytes NF4.

We can specify what we want to evaluate through a simple command line or with a YAML configuration file. I recommend configuration files since they make it easier to rerun the same benchmark later.

Here is an annotated example for benchmarking Mistral 7B for inference:

```yaml
defaults:
  - backend: pytorch # default backend
  - launcher: process
  - benchmark: inference # we will monitor the inference
  - experiment # inheriting from experiment config
  - _self_ # for hydra 1.1 compatibility
  - override hydra/job_logging: colorlog # colorful logging
  - override hydra/hydra_logging: colorlog # colorful logging

hydra:
  run:
    dir: experiments/${experiment_name} # results are reported in this directory; ${experiment_name} refers to the "experiment_name" field below
  sweep:
    dir: experiments/${experiment_name}
  job:
    chdir: true
    env_set: # environment variables that you may want to set before running the benchmark
      CUDA_VISIBLE_DEVICES: 0
      CUDA_DEVICE_ORDER: PCI_BUS_ID
  sweeper:
    params:
      benchmark.input_shapes.batch_size: 1,2,4,8,16 # we will try all these batch sizes

experiment_name: fp16-batch_size(${benchmark.input_shapes.batch_size})-sequence_length(${benchmark.input_shapes.sequence_length})-new_tokens(${benchmark.new_tokens})

model: mistralai/Mistral-7B-v0.1 # the model to evaluate; it can be on the Hugging Face Hub or in a local directory

device: cuda # device used for the benchmark; we use CUDA, i.e., the GPU

backend:
  torch_dtype: float16 # the model will be loaded in fp16

benchmark:
  memory: true # monitor memory usage
  warmup_runs: 10 # before monitoring starts, inference runs 10 times to warm up
  new_tokens: 1000 # inference will generate 1000 tokens
  input_shapes:
    sequence_length: 512 # the prompt will have 512 tokens
```

A configuration file can be quite long. We will see in the next section how to run it and how to change it to benchmark a quantized LLM.

Benchmarking Mistral-7B with Optimum-Benchmark

Optimum-benchmark can be installed from source as follows:

```shell
python -m pip install git+https://github.com/huggingface/optimum-benchmark.git
```

Then, since we will also evaluate Mistral 7B quantized with AWQ, GPTQ, and NF4, we also need to install the following:

```shell
pip install bitsandbytes # for NF4
pip install auto-gptq # for GPTQ
pip install autoawq # for AWQ
```

If you are using Google Colab, you will need the latest version of Transformers:

```shell
pip install --upgrade transformers
```

Benchmarking FP16 Mistral 7B

For this first run of optimum-benchmark, we will benchmark Mistral 7B loaded with fp16. We will use the configuration I defined in the previous section. I copied it into a file named “mistral_7b_ob.yaml” and put it in the current directory.

To run this configuration, we simply need to call optimum-benchmark with this command line:

```shell
optimum-benchmark --config-dir ./ --config-name mistral_7b_ob --multirun
```

The arguments are:

config-dir: The directory containing the configuration file.

config-name: The name of the configuration file (without the .yaml extension).

multirun: This flag tells optimum-benchmark that we will run several configurations, in this case different batch sizes, as indicated in the “sweeper” section of the configuration file.
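To make the effect of --multirun more concrete, here is a small Python sketch that mimics, in a simplified way, how the sweeper turns the comma-separated batch sizes into one job per value and how “experiment_name” is resolved for each job. It is only an illustration under stated assumptions (a single swept parameter, interpolations resolved by plain string replacement), not Hydra's actual implementation, and `expand_sweep` is a hypothetical name.

```python
def expand_sweep(template, fixed_values, sweep_key, sweep_values):
    """Hypothetical helper: resolve `template` once per comma-separated value of `sweep_key`.

    Simplified illustration of a multirun over a single parameter;
    ${...} interpolations are resolved by plain string replacement.
    """
    names = []
    for value in sweep_values.split(","):
        resolved = {**fixed_values, sweep_key: value.strip()}
        name = template
        for key, val in resolved.items():
            name = name.replace("${" + key + "}", str(val))
        names.append(name)
    return names


TEMPLATE = (
    "fp16-batch_size(${benchmark.input_shapes.batch_size})"
    "-sequence_length(${benchmark.input_shapes.sequence_length})"
    "-new_tokens(${benchmark.new_tokens})"
)

if __name__ == "__main__":
    for name in expand_sweep(
        TEMPLATE,
        {"benchmark.input_shapes.sequence_length": 512, "benchmark.new_tokens": 1000},
        "benchmark.input_shapes.batch_size",
        "1,2,4,8,16",
    ):
        print(name)  # one resolved experiment name per batch size
```

Because the batch size is part of the experiment name, each job of the sweep writes its results to a different directory under “experiments”.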

Since the model is not quantized, we will need a lot of GPU VRAM. I used the A100 of Google Colab but an NVIDIA RTX with 24 GB of VRAM would also work fine.
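To see why 24 GB should be enough, here is a back-of-the-envelope estimate in Python. It assumes the architecture values of the public Mistral-7B-v0.1 configuration (32 layers, hidden size 4096, MLP size 14336, 32 attention heads, 8 key-value heads, 32,000-token vocabulary, untied output head) and counts only the fp16 weights and the key-value cache. Activations, the CUDA context, and allocator overhead come on top, so treat the result as a lower bound. The function names are hypothetical.

```python
# Architecture values of Mistral-7B-v0.1, taken from its public config.json.
VOCAB_SIZE = 32_000
HIDDEN_SIZE = 4_096
INTERMEDIATE_SIZE = 14_336
NUM_LAYERS = 32
NUM_HEADS = 32
NUM_KV_HEADS = 8
HEAD_DIM = HIDDEN_SIZE // NUM_HEADS  # 128


def mistral_7b_params() -> int:
    """Hypothetical helper: count the parameters of Mistral 7B from its architecture."""
    attention = 2 * HIDDEN_SIZE * HIDDEN_SIZE + 2 * HIDDEN_SIZE * NUM_KV_HEADS * HEAD_DIM  # q/o + k/v
    mlp = 3 * HIDDEN_SIZE * INTERMEDIATE_SIZE  # gate, up, and down projections
    norms = 2 * HIDDEN_SIZE  # two RMSNorm weight vectors per layer
    embeddings = 2 * VOCAB_SIZE * HIDDEN_SIZE  # input embeddings + untied lm_head
    return NUM_LAYERS * (attention + mlp + norms) + embeddings + HIDDEN_SIZE  # + final norm


def kv_cache_bytes(batch_size: int, seq_len: int, bytes_per_value: int = 2) -> int:
    """Hypothetical helper: keys and values for every layer and KV head (2 bytes per value, i.e., fp16)."""
    return 2 * NUM_LAYERS * NUM_KV_HEADS * HEAD_DIM * bytes_per_value * batch_size * seq_len


if __name__ == "__main__":
    weights = mistral_7b_params() * 2  # fp16: 2 bytes per parameter
    kv = kv_cache_bytes(batch_size=16, seq_len=512 + 1000)  # prompt + generated tokens
    print(f"weights: {weights / 1e9:.1f} GB, KV cache at batch size 16: {kv / 1e9:.1f} GB")
```

With these assumptions, the weights take about 14.5 GB and the KV cache about 3.2 GB at batch size 16, i.e., roughly 18 GB before activations and overhead.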

The benchmark takes 13 minutes and should print something similar to this:

It creates an “experiments” directory containing one subdirectory for each benchmarked batch size. Each subdirectory contains a CSV file with the benchmarking data: memory consumption, throughput, latency, etc.
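To exploit these files programmatically, a small standard-library sketch can gather them into one dictionary keyed by subdirectory name. I don't assume any particular CSV file name or column names here, since they may depend on the optimum-benchmark version; `collect_results` is a hypothetical helper that simply reads the first row of every CSV file found under “experiments”.

```python
import csv
from pathlib import Path


def collect_results(experiments_dir="experiments"):
    """Hypothetical helper: map each result subdirectory name to the first row of its CSV file.

    Values are kept as strings because column names and units are not assumed here.
    """
    results = {}
    for csv_path in sorted(Path(experiments_dir).rglob("*.csv")):
        with csv_path.open(newline="") as f:
            rows = list(csv.DictReader(f))
        if rows:  # skip files that only contain a header
            results[csv_path.parent.name] = rows[0]
    return results


if __name__ == "__main__":
    for run_name, row in collect_results().items():
        print(run_name, row)
```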

Benchmarking Mistral 7B Quantized with BNB’s NF4

To benchmark a quantized model, we need to modify the “backend” section of the configuration file. To benchmark NF4 quantization, we must add:

```yaml
backend:
  torch_dtype: float16 # already present
  quantization_scheme: bnb
  quantization_config:
    load_in_4bit: true
    bnb_4bit_compute_dtype: float16
```

I have also changed the experiment_name to “bnb-batch_size(${benchmark.input_shapes.batch_size})-sequence_length(${benchmark.input_shapes.sequence_length})-new_tokens(${benchmark.new_tokens})”.

I saved this new configuration in a new file named “mistral_7b_bnb_ob.yaml” in the same directory as “mistral_7b_ob.yaml”.

We can now run optimum-benchmark with this new configuration:

```shell
optimum-benchmark --config-dir ./ --config-name mistral_7b_bnb_ob --multirun
```

It will create new subdirectories, bnb-batch_size*, in “experiments” containing the performance results.

We can exploit all these results to create a performance comparison between different LLM configurations.

FP16 vs. BNB’s NF4 vs. AWQ vs. GPTQ with Optimum-Benchmark

Let’s say that we want to decide which quantization algorithm to use for Mistral 7B. We have plenty of options, such as GPTQ, AWQ, and BNB’s NF4. Note: Optimum-benchmark also supports ExLlamaV2.

We could proceed as I did in a previous article, where I manually compared memory usage and inference speed for GPTQ against BNB’s NF4.

However, some metrics, such as peak memory consumption or latency, are difficult to measure accurately without a proper framework.
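For comparison, here is roughly what a manual latency measurement looks like: a few untimed warm-up calls (the role of warmup_runs in the configuration), then timed calls averaged together, and a throughput derived from the latency. This is a generic sketch, not optimum-benchmark's implementation; `measure_latency` and `generation_throughput` are hypothetical names, and defining throughput as generated tokens across the batch divided by latency is an assumption. It also hints at a common pitfall: on a GPU, CUDA kernels run asynchronously, so a real measurement would have to synchronize the device (e.g., with `torch.cuda.synchronize()`) before reading the clock.

```python
import statistics
import time
from typing import Callable


def measure_latency(fn: Callable[[], object], warmup_runs: int = 10, runs: int = 10) -> float:
    """Hypothetical helper: mean wall-clock latency of fn() in seconds."""
    for _ in range(warmup_runs):
        fn()  # untimed warm-up calls
    latencies = []
    for _ in range(runs):
        start = time.perf_counter()
        fn()  # with a GPU model, the device must be synchronized before reading the clock
        latencies.append(time.perf_counter() - start)
    return statistics.mean(latencies)


def generation_throughput(batch_size: int, new_tokens: int, latency_s: float) -> float:
    """Generated tokens per second across the whole batch (assumed definition)."""
    return batch_size * new_tokens / latency_s


if __name__ == "__main__":
    latency = measure_latency(lambda: sum(range(100_000)), warmup_runs=2, runs=5)
    print(f"latency: {latency:.6f} s")
    print(generation_throughput(batch_size=4, new_tokens=1000, latency_s=20.0))  # 200.0 tokens/s
```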

With optimum-benchmark, we can benchmark several quantization methods and draw plots comparing them through different metrics.

Let’s try it!

Note: For the following experiments, a GPU with 16 GB of VRAM would be enough.
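A rough estimate shows why 16 GB leaves plenty of headroom once the model is quantized with NF4. The sketch below assumes that only the linear layers of the decoder blocks are quantized to 4 bits, while the embeddings, the output head (lm_head), and the normalization weights stay in fp16, and that each block of 64 weights carries one fp32 scaling constant. The parameter counts are derived from the public Mistral-7B-v0.1 architecture; `nf4_weight_bytes` is a hypothetical helper.

```python
# Parameter counts for Mistral-7B-v0.1, derived from its public config
# (32 layers, hidden size 4096, MLP size 14336, 8 KV heads, 32,000-token vocabulary).
LINEAR_PARAMS = 6_979_321_856  # q/k/v/o and gate/up/down projections of all 32 layers
OTHER_PARAMS = 262_410_240     # embeddings, lm_head, and RMSNorm weights


def nf4_weight_bytes(block_size: int = 64) -> int:
    """Hypothetical helper: approximate NF4 weight memory, without double quantization."""
    packed = LINEAR_PARAMS // 2                # two 4-bit values per byte
    scales = LINEAR_PARAMS // block_size * 4   # one fp32 absmax constant per block (assumed)
    unquantized = OTHER_PARAMS * 2             # kept in fp16
    return packed + scales + unquantized


if __name__ == "__main__":
    print(f"NF4 weights: {nf4_weight_bytes() / 1e9:.2f} GB")
```

This gives about 4.5 GB of weights, compared with roughly 14.5 GB for the fp16 weights, which is consistent with the roughly 10 GB gap between quantized and non-quantized models reported in the results below.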

Benchmarking Mistral 7B Quantized with BNB’s NF4 and Double Quantization

In the previous section, we have already benchmarked FP16 and BNB’s NF4 for Mistral-7B. Let’s also run BNB’s NF4 with double quantization. We only need to add “bnb_4bit_use_double_quant: true” to “quantization_config” in the configuration file:

```yaml
backend:
  torch_dtype: float16 # the model will be loaded in fp16
  quantization_scheme: bnb
  quantization_config:
    load_in_4bit: true
    bnb_4bit_compute_dtype: float16
    bnb_4bit_use_double_quant: true
```

Note: Don’t forget to change “experiment_name” to avoid overwriting the previous benchmark results.
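Since each variant differs from the FP16 configuration by only a few lines, the copies can also be generated with a short script. The sketch below is a hypothetical helper, `write_variant`, that only handles the two top-level keys changed in this article, “model” and the prefix of “experiment_name”. It works on plain text to avoid a YAML dependency, so edits inside the indented “backend” section, such as the quantization settings, still have to be made by hand.

```python
from pathlib import Path


def write_variant(base_path, new_path, model=None, name_prefix=None):
    """Hypothetical helper: copy a benchmark config, swapping the top-level `model`
    and/or the prefix of `experiment_name` (e.g. "fp16-..." -> "gptq-...")."""
    out = []
    for line in Path(base_path).read_text().splitlines():
        if model is not None and line.startswith("model:"):
            line = f"model: {model}"  # any trailing comment on this line is dropped
        elif name_prefix is not None and line.startswith("experiment_name:"):
            value = line.split(":", 1)[1].strip()
            _old_prefix, rest = value.split("-", 1)  # keep "batch_size(...)-..."
            line = f"experiment_name: {name_prefix}-{rest}"
        out.append(line)
    Path(new_path).write_text("\n".join(out) + "\n")
```

For example, `write_variant("mistral_7b_ob.yaml", "mistral_7b_gptq_ob.yaml", model="flozi00/Mistral-7B-v0.1-4bit-autogptq", name_prefix="gptq")` would write a GPTQ variant under a hypothetical new file name.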

Benchmarking Mistral 7B Quantized with GPTQ

GPTQ is directly supported by Hugging Face Transformers, which makes benchmarking GPTQ models with optimum-benchmark very straightforward.

We simply need to indicate the name of the GPTQ model we want to evaluate. For instance, I picked flozi00/Mistral-7B-v0.1-4bit-autogptq. Note: I tried older GPTQ models, such as TheBloke/Mistral-7B-v0.1-GPTQ, but with them, batch inference triggers an intermittent bug in Transformers. I recommend using models quantized with Transformers/AutoGPTQ from November 2023 onward.

In our first configuration file used to benchmark FP16 Mistral 7B, we only have to replace:

```yaml
model: mistralai/Mistral-7B-v0.1
```

with

```yaml
model: flozi00/Mistral-7B-v0.1-4bit-autogptq
```

Benchmarking Mistral 7B Quantized with AWQ

Hugging Face Transformers supports AWQ models. I will evaluate my own Mistral 7B quantized with AWQ: kaitchup/Mistral-7B-awq-4bit.

We create another configuration in which we replace:

```yaml
model: mistralai/Mistral-7B-v0.1
```

with

```yaml
model: kaitchup/Mistral-7B-awq-4bit
```

Plotting the results

Once all the configurations have run, “experiments” contains many subdirectories, one for each combination of quantization configuration and batch size.

I will draw plots comparing the results for each batch size. For this, I used the script already prepared by optimum-benchmark:

Note: This script can be easily adapted to other benchmarking configurations.

It takes as an argument the directory containing all the configurations to compare. Run it with:

```shell
python report.py -e experiments
```

It will produce one plot for each metric.
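If you want to build such per-batch-size comparisons yourself, for instance with another plotting library, you first need to recover the quantization method and the batch size of each run. With the experiment_name convention used in this article, this information is encoded in the subdirectory names. Here is a hypothetical parser (`parse_experiment_name`, `group_by_batch_size`) that assumes exactly that naming pattern; it is not part of optimum-benchmark or of its report script.

```python
import re
from collections import defaultdict

# Matches names such as "bnb-batch_size(4)-sequence_length(512)-new_tokens(1000)".
NAME_RE = re.compile(
    r"^(?P<method>.+?)-batch_size\((?P<batch_size>\d+)\)"
    r"-sequence_length\((?P<sequence_length>\d+)\)"
    r"-new_tokens\((?P<new_tokens>\d+)\)$"
)


def parse_experiment_name(name):
    """Hypothetical helper: split an experiment directory name into its fields, or return None."""
    match = NAME_RE.match(name)
    if match is None:
        return None
    fields = match.groupdict()
    return {
        "method": fields["method"],
        "batch_size": int(fields["batch_size"]),
        "sequence_length": int(fields["sequence_length"]),
        "new_tokens": int(fields["new_tokens"]),
    }


def group_by_batch_size(names):
    """Map each batch size to the methods benchmarked with it, ignoring unrelated names."""
    groups = defaultdict(list)
    for name in names:
        parsed = parse_experiment_name(name)
        if parsed is not None:
            groups[parsed["batch_size"]].append(parsed["method"])
    return dict(groups)
```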

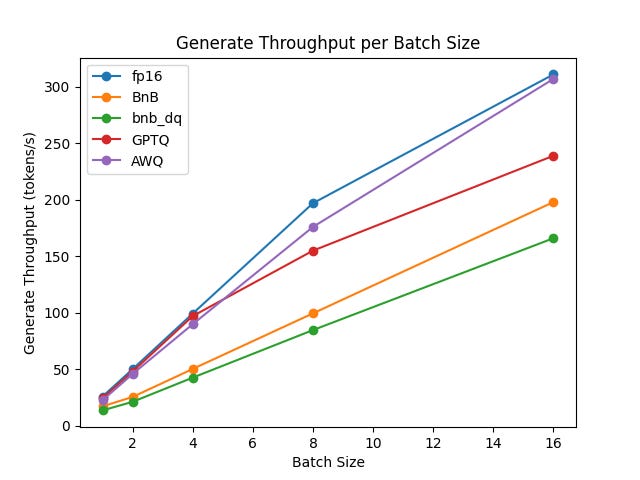

GPTQ is as fast as fp16 (no quantization) and AWQ for small batch sizes. For larger batches, GPTQ seems to lose some of its efficiency, while AWQ still performs close to fp16.

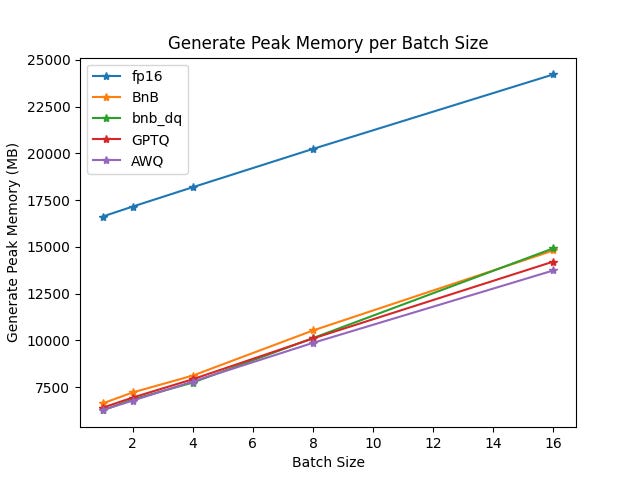

The difference in peak memory between the quantization methods is marginal. The difference between quantized and non-quantized models remains stable across batch sizes (around 10 GB).

Double quantization with bnb reduces memory consumption by only 0.5 GB, while it significantly lowers throughput for larger batches, as shown in the first plot.
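A small saving is expected: double quantization does not touch the 4-bit weights themselves, only the scaling constants stored alongside them. Following the scheme described in the QLoRA paper (one fp32 constant per block of 64 weights, which double quantization replaces with an 8-bit value plus one fp32 constant per block of 256 constants), a rough estimate for the linear layers of Mistral 7B is sketched below. The block sizes are assumptions about bitsandbytes' defaults and `scale_bytes` is a hypothetical helper; the measured peak memory can differ from this estimate since it also includes activations, the KV cache, and allocator behavior.

```python
LINEAR_PARAMS = 6_979_321_856  # 4-bit linear-layer weights in Mistral 7B (32 decoder layers)


def scale_bytes(double_quant: bool, block_size: int = 64, block_size2: int = 256) -> int:
    """Hypothetical helper: bytes taken by the quantization constants of the 4-bit weights."""
    n_blocks = LINEAR_PARAMS // block_size
    if not double_quant:
        return n_blocks * 4  # one fp32 absmax per block of weights
    # absmax values stored in 8 bits, plus one fp32 constant per block_size2 absmax values
    return n_blocks * 1 + n_blocks // block_size2 * 4


if __name__ == "__main__":
    saved = scale_bytes(False) - scale_bytes(True)
    print(f"saved: {saved / 1e9:.2f} GB ({saved * 8 / LINEAR_PARAMS:.3f} bits per parameter)")
```

Under these assumptions, double quantization saves about 0.33 GB, or roughly 0.37 bits per quantized parameter.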

As for latency, all runs performed similarly, except AWQ, whose latency was high and increased linearly with the batch size.

Conclusion

Optimum-benchmark is a very powerful benchmarking framework. To the best of my knowledge, this is the most complete open-source tool to benchmark LLMs. I will continue using it in my next articles.

Keep in mind that, in this article, I only explored a tiny portion of what we can do with optimum-benchmark. I also wanted to include a section about benchmarking training, but it would have made the article far too long. I will probably cover the benchmarking of PEFT methods in depth in another article. Meanwhile, I included an example of a QLoRA benchmark in the notebook if you are interested.

You will also find many more examples of configurations in the repository of optimum-benchmark:
