Fine-tune LLMs on Your CPU with QLoRA

Finally, a tutorial that doesn't require CUDA

QLoRA is now the default method for fine-tuning large language models (LLMs) on consumer hardware. For instance, with QLoRA, we only need 8 GB of GPU VRAM to fine-tune Mistral 7B and Llama 2 7B, while standard fine-tuning would require at least 24 GB of VRAM.

QLoRA reduces memory consumption thanks to 4-bit quantization. This quantization is usually performed with the bitsandbytes package, which optimizes quantization and QLoRA fine-tuning for GPUs.
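To see where most of the savings come from, here is a back-of-the-envelope sketch that computes only the memory needed to store the weights of a 7B-parameter model at several precisions. It assumes exactly 7 billion parameters and ignores activations, the LoRA adapter, optimizer states, and the scaling constants stored alongside 4-bit weights.

```python
# Rough weight-memory estimate for a 7B-parameter model.
# Assumption: exactly 7 billion parameters; real models (e.g., Mistral 7B) differ slightly.
# Ignored here: activations, the LoRA adapter, optimizer states,
# and the per-block scaling constants that 4-bit quantization also stores.

def weight_memory_gb(num_params: int, bits_per_param: int) -> float:
    """Return the memory needed to store the weights, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9

NUM_PARAMS = 7_000_000_000

for label, bits in [("fp32", 32), ("fp16/bf16", 16), ("4-bit (NF4)", 4)]:
    print(f"{label:>12}: {weight_memory_gb(NUM_PARAMS, bits):5.1f} GB")
```

Under these assumptions, the frozen weights shrink from 14 GB in 16-bit to 3.5 GB in 4-bit, which leaves room in an 8 GB budget for the adapter, its optimizer states, and activations.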

Is QLoRA fine-tuning impossible on a CPU, then?

As part of its work on Intel Extension for Transformers, which extends Hugging Face's Transformers, Intel has optimized QLoRA fine-tuning to make it possible on CPUs.

In this article, I show how to use Intel's extension for Transformers to fine-tune Mistral 7B using only a CPU. I ran my experiments on Google Colab's old and slow CPU. We will see that, while fine-tuning on an old CPU is indeed possible, a recent and powerful CPU is needed to complete fine-tuning in a reasonable time.

I have implemented a notebook for demonstration in Google Colab. It’s available here:

QLoRA with a CPU: How Is It Possible?

QLoRA fine-tunes a LoRA adapter on top of a base LLM quantized with the NormalFloat4 (NF4) data type. Only the parameters of the added LoRA adapter are trained. In other words, instead of updating the billions of parameters of the base LLM, QLoRA only updates the millions of parameters of the adapter.
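To put "millions instead of billions" into numbers, here is a small sketch that counts the trainable parameters a LoRA adapter adds. The configuration is an assumption chosen to roughly match Mistral 7B (32 layers, hidden size 4096, 1024-dimensional key/value projections) with a hypothetical rank of 16 applied only to the four attention projections; the article's actual LoRA settings may differ.

```python
# Count the trainable parameters that LoRA adds to a Mistral-7B-like model.
# Assumptions (hypothetical config, roughly matching Mistral 7B):
#   32 decoder layers, hidden size 4096, grouped-query attention with
#   1024-dim key/value projections, and LoRA (rank 16) on the four
#   attention projections only.

# (in_features, out_features) of each adapted linear layer
TARGET_SHAPES = {
    "q_proj": (4096, 4096),
    "k_proj": (4096, 1024),
    "v_proj": (4096, 1024),
    "o_proj": (4096, 4096),
}
NUM_LAYERS = 32
RANK = 16

def lora_params(in_features: int, out_features: int, rank: int) -> int:
    """LoRA learns the update of a d_in x d_out weight as the product of two
    low-rank matrices, which together hold r * (d_in + d_out) parameters."""
    return rank * (in_features + out_features)

per_layer = sum(lora_params(i, o, RANK) for i, o in TARGET_SHAPES.values())
total = per_layer * NUM_LAYERS
print(f"trainable LoRA parameters: {total:,}")  # about 13.6 million
```

With this configuration, the adapter has about 13.6 million trainable parameters, roughly 0.2% of the base model's ~7 billion frozen parameters.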

I recommend reading the following article for a more extensive explanation of how QLoRA works:

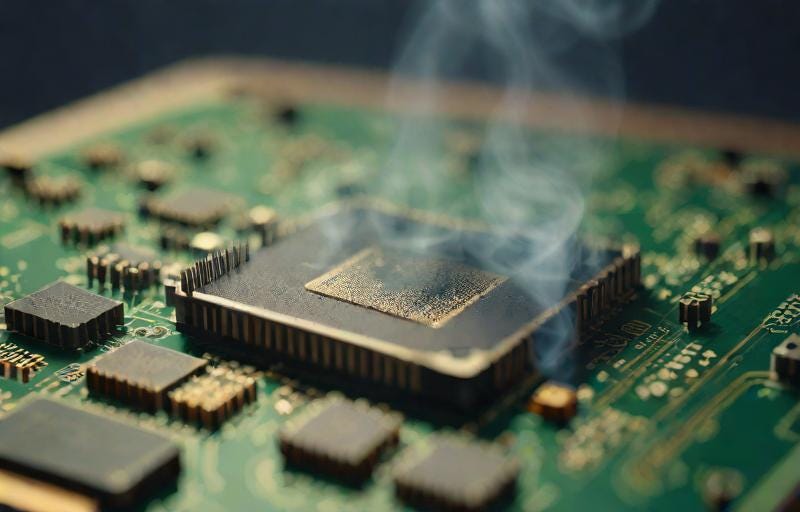

Yet, even with the reduced number of trainable parameters, efficient QLoRA fine-tuning is challenging on a CPU because CPUs don't natively support the NF4 data type.
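To make this concrete, here is a minimal pure-Python sketch of what the lack of native NF4 support implies: each weight is stored as a 4-bit index into a table of 16 values, and it has to be looked up and rescaled in software before any floating-point computation. The table holds the NF4 values published with QLoRA; the function names, the single small block, and the packing order (high nibble first) are illustrative choices, not Intel's or bitsandbytes' implementation.

```python
# Sketch of blockwise NF4 quantization emulated in software.
# NF4_LEVELS: the 16 NF4 values published with QLoRA (bitsandbytes), normalized to [-1, 1].
# QLoRA quantizes weights in blocks of 64; this sketch handles one tiny block with plain loops.

NF4_LEVELS = [
    -1.0, -0.6961928009986877, -0.5250730514526367, -0.39491748809814453,
    -0.28444138169288635, -0.18477343022823334, -0.09105003625154495, 0.0,
    0.07958029955625534, 0.16093020141124725, 0.24611230194568634,
    0.33791524171829224, 0.44070982933044434, 0.5626170039176941,
    0.7229568362236023, 1.0,
]

def quantize_block(weights: list[float]) -> tuple[float, list[int]]:
    """Absmax-scale a block to [-1, 1] and map each value to its nearest NF4 index."""
    scale = max(abs(w) for w in weights) or 1.0  # avoid dividing by zero for all-zero blocks
    indices = [
        min(range(16), key=lambda i: abs(w / scale - NF4_LEVELS[i]))
        for w in weights
    ]
    return scale, indices

def pack(indices: list[int]) -> bytes:
    """Store two 4-bit indices per byte (assumes an even number of indices)."""
    return bytes((indices[i] << 4) | indices[i + 1] for i in range(0, len(indices), 2))

def unpack(packed: bytes) -> list[int]:
    """Recover the 4-bit indices from the packed bytes."""
    out = []
    for byte in packed:
        out.extend((byte >> 4, byte & 0x0F))
    return out

def dequantize_block(scale: float, indices: list[int]) -> list[float]:
    """Look up each NF4 level and rescale it, as needed before a floating-point matmul."""
    return [NF4_LEVELS[i] * scale for i in indices]

block = [0.5, -1.0, 0.25, 0.0]
scale, idx = quantize_block(block)
packed = pack(idx)  # 4 weights stored in 2 bytes
restored = dequantize_block(scale, unpack(packed))
print(idx, len(packed), restored)
```

Every forward and backward pass through a quantized layer repeats the unpack, lookup, and rescale steps, which is why this work needs optimized kernels to run efficiently on a CPU.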