Hi Everyone,

In this edition of The Weekly Kaitchup:

Reinforcement Learning with Spurious Rewards: Does It Really Work?

Thinking of Using a Quantized Model for Long Context? Think Again

Reinforcement Learning with Spurious Rewards: Does It Really Work?

A new study has generated significant attention on social media this week:

Spurious Rewards: Rethinking Training Signals in RLVR

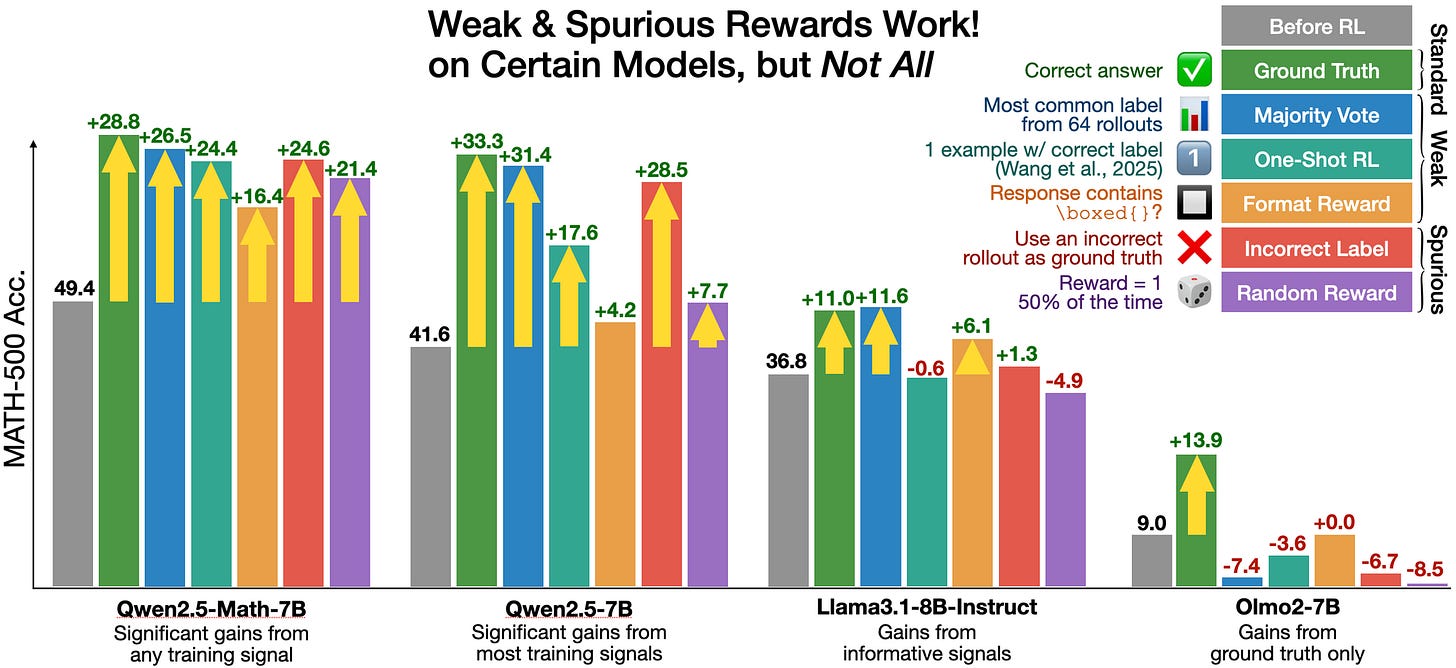

The findings are very surprising. They show that Reinforcement Learning with Verifiable Rewards (RLVR, the training setup commonly implemented with GRPO) can deliver large apparent gains on Qwen-Math models even when the “reward” is nonsensical.

Giving Qwen2.5-Math-7B rewards for nothing more than formatting answers with \boxed{}, for generating incorrect answers, or for a random coin flip still raises its MATH-500 accuracy by 16-25 percentage points, almost as much as training with ground-truth rewards. The same experiments on Llama 3 and OLMo models yield little improvement or even hurt performance.
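To make these reward types concrete, here is a minimal Python sketch of the four signals. It is illustrative only: the function names are hypothetical, and exact string matching stands in for the math-equivalence checking that real RLVR graders perform.

```python
import random

def ground_truth_reward(pred: str, gold: str) -> float:
    # Standard RLVR: reward 1 only if the extracted answer matches the reference.
    # Exact string match is a simplification; real graders check math equivalence.
    return 1.0 if pred.strip() == gold.strip() else 0.0

def incorrect_reward(pred: str, gold: str) -> float:
    # Spurious: reward only answers that do NOT match the reference.
    return 1.0 - ground_truth_reward(pred, gold)

def format_reward(response: str) -> float:
    # Spurious: reward any response containing a \boxed{...} answer, right or wrong.
    return 1.0 if "\\boxed{" in response else 0.0

def random_reward(rng: random.Random, p: float = 0.5) -> float:
    # Spurious: a coin flip that ignores the response entirely.
    return 1.0 if rng.random() < p else 0.0

rng = random.Random(0)
print(ground_truth_reward("42", "42"), incorrect_reward("41", "42"),
      format_reward(r"So the answer is \boxed{41}."), random_reward(rng))
```

Only the first function carries information about correctness; the other three are the kinds of signals the study found still help Qwen2.5-Math.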

Tracing the cause, the authors find that Qwen-Math already solves many problems by writing and mentally “executing” Python code; RLVR, whatever the reward, simply pushes the model to use that latent code-reasoning mode more often (from 65% of responses to more than 90%). Because GRPO’s clipping term biases updates toward tokens that were already probable under the rollout policy, even random rewards concentrate probability mass on the model’s existing strategy instead of teaching new ones. When the clipping is removed, random-reward training stops helping.
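The clipping in question is the PPO-style clipped surrogate that GRPO applies per token, combined with advantages normalized within each group of rollouts. Below is a minimal sketch, assuming a clip range of 0.2 and omitting GRPO’s KL penalty and token averaging. It shows the objective itself, not the authors’ analysis of its bias.

```python
import math
from typing import List

def group_advantages(rewards: List[float], eps: float = 1e-6) -> List[float]:
    # GRPO-style advantage: center each reward on its group's mean, scale by the group's std.
    mean = sum(rewards) / len(rewards)
    std = math.sqrt(sum((r - mean) ** 2 for r in rewards) / len(rewards))
    return [(r - mean) / (std + eps) for r in rewards]

def clipped_surrogate(logp_new: float, logp_old: float, advantage: float,
                      clip_eps: float = 0.2) -> float:
    # Per-token objective to maximize: min(ratio * A, clip(ratio, 1-eps, 1+eps) * A).
    ratio = math.exp(logp_new - logp_old)
    clipped = min(max(ratio, 1.0 - clip_eps), 1.0 + clip_eps)
    return min(ratio * advantage, clipped * advantage)

# Four rollouts for one prompt, scored with coin-flip rewards.
adv = group_advantages([1.0, 0.0, 1.0, 0.0])
print([round(a, 3) for a in adv])
# A token whose probability already rose 50% gets no extra credit beyond the 1 + eps cap.
print(clipped_surrogate(math.log(1.5), 0.0, advantage=1.0))
```

Once the ratio leaves [1 - ε, 1 + ε] in the direction the advantage favours, the term no longer depends on the new policy, so that token contributes no gradient. According to the authors, this asymmetric cutoff is what lets even random rewards reinforce already-likely behaviour.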

The work warns that recent “weak-supervision” RLVR successes, such as majority-vote rewards or one-shot RL, may be artefacts of Qwen’s pre-training rather than generally reliable methods.

Well…

The reported gains, especially for Qwen2.5 models, are huge. When gains are this surprising, it’s necessary to double-check the baseline (the grey bar in the picture above).

A follow-up “audit” re-ran the baselines used in seven widely cited “RL-improves-reasoning” papers, including this Spurious-Rewards study, and found that almost all of their reported gains vanish once the starting models are evaluated properly.

Incorrect Baseline Evaluations Call into Question Recent LLM-RL Claims

They found that the authors of these studies had scored their baselines with sub-optimal prompts, low temperatures, or brittle answer-parsing rules that mis-marked many correct solutions as wrong.
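To see how a parser can mis-mark a correct answer, compare a naive regular expression with a brace-matching extractor. Both functions are hypothetical illustrations, not the parsers used in these papers or in the audit.

```python
import re
from typing import Optional

def naive_extract(text: str) -> Optional[str]:
    # Brittle: [^}]* stops at the first closing brace, so nested LaTeX is truncated.
    m = re.search(r"\\boxed\{([^}]*)\}", text)
    return m.group(1) if m else None

def balanced_extract(text: str) -> Optional[str]:
    # Takes the last \boxed{...} and tracks brace depth so nested LaTeX survives.
    start = text.rfind("\\boxed{")
    if start == -1:
        return None
    i = start + len("\\boxed{")
    depth = 1
    for j in range(i, len(text)):
        if text[j] == "{":
            depth += 1
        elif text[j] == "}":
            depth -= 1
            if depth == 0:
                return text[i:j]
    return None  # unbalanced braces

answer = r"So the probability is \boxed{\frac{1}{2}}."
print(naive_extract(answer), balanced_extract(answer))
```

The naive pattern returns "\frac{1", which an exact-match grader would score as wrong even though the model’s answer is correct.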

When the authors of this “audit” re-evaluated the same checkpoints with the official Qwen decoding settings or the Sober Reasoning harness, the supposedly dramatic jumps (for example, a claimed 49% → 70% jump on MATH-500 using random rewards) shrank to only a few percentage points, and in several cases the RL-trained models actually underperformed the correctly measured baselines.

In effect, the RL procedures were mostly repairing evaluation artefacts rather than teaching new reasoning skills: they pushed the model to emit the expected answer format, or the gains came from a higher sampling temperature (!).

To clarify: the baseline model was evaluated with a low temperature, while the RL-trained model was evaluated with a higher one. In other words, at least two experimental variables (the model and an inference hyperparameter) were changed at once, a common but problematic practice in machine learning research. As a result, it’s impossible to tell which factor produced the observed gains, or whether they arose from the combination of both. In this case, the gains appear to stem from the higher temperature rather than from the weak RL signals used to train the model.
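Temperature divides the logits before the softmax, so a low value concentrates probability on the top token (approaching greedy decoding) while a higher value spreads it across alternatives. A minimal sketch with made-up logits; the temperatures are illustrative and are not the settings used in either paper.

```python
import math
from typing import List

def softmax_with_temperature(logits: List[float], temperature: float) -> List[float]:
    if temperature <= 0:
        raise ValueError("temperature must be > 0; use argmax for greedy decoding")
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

logits = [2.0, 1.0, 0.5]  # made-up next-token logits
for t in (0.1, 0.6, 1.0):
    print(t, [round(p, 3) for p in softmax_with_temperature(logits, t)])
```

Comparing a baseline sampled at one temperature with an RL model sampled at another therefore changes the decoding distribution as well as the weights.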

They conclude that recent excitement over “reward-free” or “one-shot” RL methods rests on shaky evidence.

My opinion

For months, Twitter/X threads and pre-prints have painted an almost magical picture: “one random bit of reward and your model masters math!”, and the community (myself included) was inclined to believe it because the plots looked compelling and the papers moved fast. What the re-evaluation shows is not that these RL ideas are worthless, but that evaluation hygiene can easily swamp genuine signal.

Blame haste, not malice. Most of these “errors” (greedy decoding, legacy answer parsers, ignored vendor-recommended temperatures) are the sort you make when a result looks exciting at 2 a.m. and you rush to share it before someone else scoops you. They’re fixable.

The findings don’t kill weak-signal RL; they just reset the scoreboard. It’s entirely plausible that entropy minimisation or majority-vote RL buys a few reliable points once baselines are correct. Those few points might still matter in production.

Thinking of Using a Quantized Model for Long Context? Think Again

When a new language model is released, the community rushes to create quantized variants, typically GGUF files compressed to 4-bit precision or even lower. These lightweight models are rarely subjected to rigorous evaluation, yet they see heavy use; many users forget they are running a downgraded version rather than the original.

4-bit quantization “usually works,” but its impact on tasks that require long contexts, such as RAG, document summarization, coding, and similar workloads, has been largely unexplored.
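For intuition about what 4-bit precision discards, here is a minimal sketch of symmetric absmax quantization of one block of weights to the 16 levels of a signed 4-bit integer. This simplified scheme is for illustration only; bitsandbytes’ 4-bit formats (such as NF4) use a different set of 4-bit values.

```python
from typing import List, Tuple

def quantize_int4(block: List[float]) -> Tuple[List[int], float]:
    # One scale per block: the largest magnitude maps to 7, the top of the int4 range [-8, 7].
    absmax = max(abs(x) for x in block)
    scale = absmax / 7 if absmax > 0 else 1.0
    codes = [max(-8, min(7, round(x / scale))) for x in block]
    return codes, scale

def dequantize_int4(codes: List[int], scale: float) -> List[float]:
    return [c * scale for c in codes]

weights = [0.1, -0.7, 0.35, 0.02]  # made-up weights
codes, scale = quantize_int4(weights)
restored = dequantize_int4(codes, scale)
print(codes, [round(w, 3) for w in restored])
```

Each weight is rounded to the nearest multiple of its block’s scale, so a small weight that shares a block with a large one (0.02 here) can collapse to zero.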

A new study released this week by researchers from UMass Amherst, Microsoft, and the University of Maryland shows that these widely used 4-bit quantized models can significantly underperform their full-precision counterparts on precisely those long-context tasks.

Does quantization affect models’ performance on long-context tasks?

Bitsandbytes 4-bit quantization, the scheme widely used in QLoRA fine-tuning, appears especially vulnerable, showing a noticeable performance drop.

What this means in practice

If your 4-bit model struggles on long-context tasks despite the strong long-context results reported for the original model, the quantization is probably to blame.

The problem is probably even worse for non-English long inputs; prior work shows multilingual performance degrades more severely under quantization.

For long-context workloads, heavy quantization often makes little sense: the VRAM you save on model weights can be modest compared with the memory consumed by the KV cache, which grows linearly with context length and can exceed the size of the model weights themselves.
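A back-of-the-envelope comparison makes this concrete. The sketch below assumes a Llama-3.1-8B-like shape (32 layers, 8 KV heads with grouped-query attention, head dimension 128, about 8B parameters) and a 16-bit KV cache; these figures are assumptions for illustration, and the 4-bit estimate ignores quantization-scale overhead.

```python
def kv_cache_bytes(num_layers: int, num_kv_heads: int, head_dim: int,
                   seq_len: int, batch_size: int = 1, bytes_per_elem: int = 2) -> int:
    # Keys and values (factor 2) are cached for every layer, KV head, and token.
    return 2 * num_layers * num_kv_heads * head_dim * seq_len * batch_size * bytes_per_elem

def weight_bytes(num_params: float, bits_per_param: float) -> float:
    # Ignores quantization scales and other per-block overhead.
    return num_params * bits_per_param / 8

GiB = 1024 ** 3
# Assumed Llama-3.1-8B-like shape: 32 layers, 8 KV heads (GQA), head_dim 128, ~8B params.
kv = kv_cache_bytes(32, 8, 128, seq_len=131_072)
bf16 = weight_bytes(8e9, 16)
int4 = weight_bytes(8e9, 4)
print(f"KV cache: {kv / GiB:.1f} GiB, bf16 weights: {bf16 / GiB:.1f} GiB, "
      f"4-bit weights: {int4 / GiB:.1f} GiB")
```

Under these assumptions, a single 131,072-token sequence needs 16 GiB of KV cache, already more than the roughly 14.9 GiB of bf16 weights, and the cache grows linearly with both context length and batch size.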

In short, lightweight quantized models are convenient, but they can’t yet match full-precision models when you need reliable performance on extended contexts.

The Salt

The Salt is my other newsletter that takes a more scientific approach. In The Salt, I primarily feature short reviews of recent papers (for free), detailed analyses of noteworthy publications, and articles centered on LLM evaluation.

In The Weekly Salt, I also reviewed:

⭐Quartet: Native FP4 Training Can Be Optimal for Large Language Models

Shifting AI Efficiency From Model-Centric to Data-Centric Compression

Learning to Reason without External Rewards

Synthetic Data RL: Task Definition Is All You Need

Support The Kaitchup by becoming a Pro subscriber:

What You'll Get

Priority Support – Fast, dedicated assistance whenever you need it to fine-tune or optimize your LLM/VLM. I answer all your questions!

Lifetime Access to All the AI Toolboxes – Repositories containing Jupyter notebooks optimized for LLMs and providing implementation examples of AI applications.

Full Access to The Salt – Dive deeper into exclusive research content. Already a paid subscriber to The Salt? You’ll be refunded for the unused time!

Early Access to Research – Be the first to access groundbreaking studies and models by The Kaitchup.

30% Discount for Group Subscriptions – Perfect for teams and collaborators.

The Kaitchup’s Book – A comprehensive guide to LLM fine-tuning. Already bought it? You’ll be fully refunded!

All Benefits from Regular Kaitchup Subscriptions – Everything you already love, plus more. Already a paid subscriber? You’ll be refunded for the unused time!

How to Subscribe?

That’s all for this week.

If you like reading The Kaitchup, consider sharing it with friends and coworkers (group subscriptions get a 20% discount, or 30% for Pro subscribers):

Have a nice weekend!