Hi Everyone,

In this edition of The Weekly Kaitchup:

New Models: Phi-4 Reasoning, Qwen2.5 Omni 3B, and OLMo 2 1B

LLM Leaderboards Are Having a Bad Time

New Models: Phi-4 Reasoning, Qwen2.5 Omni 3B, and OLMo 2 1B

Phi-4 Reasoning

Microsoft’s new reasoning models are based on Phi-4 Mini and Phi-4 14B. The 14B variant isn’t exactly lightweight: in 16-bit precision, its weights alone take roughly 28 GB, so it can’t run unmodified on a 32 GB GPU like the RTX 5090 without significant compromises to its reasoning performance. It becomes much more accessible once quantized to 8-bit or 4-bit.
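To make the memory argument concrete, here is a rough back-of-the-envelope sketch. It only counts the weights and assumes a nominal 14 billion parameters (the exact count may differ slightly). It ignores the KV cache, activations, and the small overhead that real quantization formats add for scales and zero points, so treat the numbers as lower bounds rather than measurements.

```python
def weight_memory_gb(num_params, bits_per_param):
    """Approximate memory for the weights alone, in decimal gigabytes."""
    return num_params * bits_per_param / 8 / 1e9


# Assumption: nominal model size; the real parameter count may differ slightly.
PARAMS_14B = 14e9
# 32 GB GPU such as the RTX 5090 mentioned above.
GPU_MEMORY_GB = 32

if __name__ == "__main__":
    for bits in (16, 8, 4):
        weights = weight_memory_gb(PARAMS_14B, bits)
        headroom = GPU_MEMORY_GB - weights
        print(f"{bits}-bit: ~{weights:.0f} GB of weights, ~{headroom:.0f} GB left for everything else")
```

The headroom is what matters for a reasoning model: long reasoning traces need a large KV cache, and at 16-bit there is very little memory left for it.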

The Phi-4 Reasoning model was trained without reinforcement learning, whereas the Plus version includes a brief RL phase, which provides a noticeable performance boost.

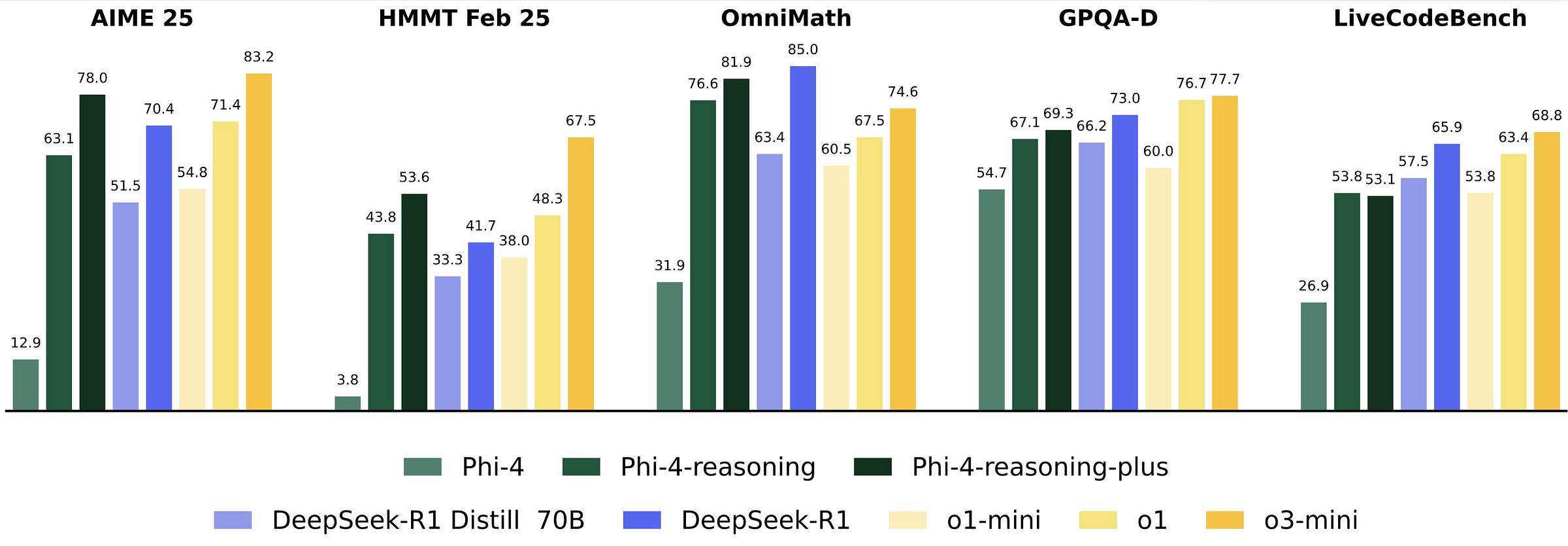

Benchmark results suggest the models perform well:

In the technical report (a very good one), we learn that Microsoft ran the RL phase on a randomly sampled subset of 6,000 problems drawn from a larger curated dataset. Despite the lack of data selection optimization, RL appeared to reinforce certain reasoning behaviors, leading to more focused and confident output distributions.

A notable technical issue arose when the model began generating responses longer than 32,000 tokens, well beyond its trained context window. These outputs had to be clipped during RL, indicating some degree of generalization outside the model’s expected limits. Microsoft also explored the relationship between response length and accuracy. Extremely long responses (top 25% in length) often correlated with incorrect answers, likely due to overgeneration when the model was uncertain. In contrast, a higher average response length generally aligned with better accuracy.
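If you want to run the same kind of length-versus-accuracy check on your own evaluation logs, a minimal sketch could look like the following. The function name and the record format are my own assumptions, and the toy records are made up purely to show the mechanics. They are not numbers from the report.

```python
def accuracy_by_length(records, top_fraction=0.25):
    """Split (num_tokens, is_correct) records into the longest `top_fraction`
    and the rest, and return (accuracy_longest, accuracy_rest)."""
    if len(records) < 2:
        raise ValueError("need at least two records to form two groups")
    ordered = sorted(records, key=lambda r: r[0])
    # Keep at least one record in each group.
    n_top = min(len(ordered) - 1, max(1, int(len(ordered) * top_fraction)))
    rest, longest = ordered[:-n_top], ordered[-n_top:]

    def accuracy(rows):
        return sum(1 for _, ok in rows if ok) / len(rows)

    return accuracy(longest), accuracy(rest)


# Made-up records for illustration only, NOT results from the report.
toy_records = [
    (1200, True), (2500, True), (4000, True), (6000, False),
    (9000, True), (15000, True), (28000, False), (32000, False),
]

if __name__ == "__main__":
    acc_longest, acc_rest = accuracy_by_length(toy_records)
    print(f"longest 25%: {acc_longest:.2f}, rest: {acc_rest:.2f}")
```

Comparing the longest quartile against the rest, rather than only looking at the average length, is what separates "longer reasoning helps on average" from "runaway generations tend to be wrong".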

The model also tended to overanalyze simple prompts and to include unnecessary self-evaluation, behaviors commonly observed in reasoning models. Multi-turn interactions were not a focus of the study, so the model’s performance in dialogue settings remains an open question. Still, the findings suggest that even relatively minimal RL can meaningfully shape a model’s reasoning behavior.

The models are available under the MIT license here:

Hugging Face: microsoft/Phi-4-reasoning

Qwen2.5 Omni 3B

Qwen3 wasn’t enough?

This week, the Qwen team also released a smaller version of their “Omni” model:

Hugging Face: Qwen/Qwen2.5-Omni-3B

Note: The names of the Omni models can be misleading, as they refer only to the parameter count of the base language models. They do not include the parameters of the multimodal encoders. For example, Omni-3B actually has 5.5B total parameters, and the previously released Omni 7B has 10.7B parameters.

These models are multimodal LLMs that take vision, audio, and language inputs and produce outputs in audio or language. The latest version is optimized for efficiency and can run on consumer-grade hardware.

OLMo 2 1B

According to AI2, OLMo 2 1B (which actually has 1.5B parameters) outperforms other models in its class, such as Gemma 3 1B and Llama 3.2 1B. It may well be the strongest 1B-class model released so far. However, it's still unclear how it compares to Qwen3 0.6B and 1.7B, both of which have also shown exceptional performance relative to their size.

Hugging Face: allenai/OLMo-2-0425-1B-Instruct

The model was pretrained on 4 trillion tokens of high-quality data using the same methods applied to larger OLMo 2 models (7B, 13B, 32B), and intermediate checkpoints are made available every 1,000 steps. Post-training follows the Tülu 3 pipeline, combining instruction tuning with distillation, on-policy preference tuning, and reinforcement learning with verifiable rewards (RLVR) to improve benchmark performance.

Leaderboards Are Having a Bad Time

A new paper exposing the policies of the Chatbot Arena is making a lot of noise:

This 68-page report, based on five months of work, was produced by leading institutions including Cohere, Princeton University, Stanford University, the University of Waterloo, the Massachusetts Institute of Technology, AI2, and the University of Washington.

Benchmarks have long played a key role in shaping progress in machine learning, and Chatbot Arena emerged as a valuable tool by enabling dynamic, crowd-sourced comparisons of generative AI models. However, the study highlights that a small group of proprietary providers, such as OpenAI, Google, and Meta, has been given preferential treatment: access to private testing, higher sampling rates, and significantly more user feedback. All of this creates conditions that encourage overfitting to Arena-specific dynamics rather than improving general model quality. In effect, these providers can test multiple model variants and selectively release the best-performing one, allowing them to claim benchmark superiority even if the model does not generalize well to real-world tasks. A recent example of this is Meta’s Llama 4.

It's important to note that none of these practices were entirely hidden. Chatbot Arena maintainers have publicly documented their policies for at least a year, and Meta openly stated that the Llama 4 submitted to the Arena was a different version specifically optimized for “conversationality”.

Still, the broader implications of these practices are often underestimated, and the practices themselves remain largely unknown to, or misunderstood by, the general public.

The authors conducted a large-scale analysis of 2 million model battles involving 243 models from 42 providers. Their findings show that current policies allow favored providers to test multiple model variants privately, then release only the best-performing version to the public leaderboard, effectively inflating scores. Additionally, both data access and sampling opportunities are heavily skewed toward proprietary models, giving them a clear advantage over open-weight and open-source alternatives.
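Why does publishing only the best of several private variants inflate scores? A small simulation makes the selection effect visible. This is a toy sketch, not the paper's methodology: I assume every variant has exactly the same true score (1200, an arbitrary value) and that each measured score is the true score plus Gaussian noise (standard deviation 15, also arbitrary).

```python
import random


def published_score(true_score, noise_std, n_variants, rng):
    """Score that reaches the leaderboard when only the best of
    `n_variants` noisy measurements is published."""
    return max(rng.gauss(true_score, noise_std) for _ in range(n_variants))


def mean_published_score(n_variants, trials=2000, true_score=1200.0,
                         noise_std=15.0, seed=0):
    """Average published score over many simulated submissions.
    All numeric defaults are hypothetical, chosen only for illustration."""
    rng = random.Random(seed)
    total = sum(
        published_score(true_score, noise_std, n_variants, rng)
        for _ in range(trials)
    )
    return total / trials


if __name__ == "__main__":
    for n in (1, 3, 10):
        print(f"variants tested privately: {n:2d} -> "
              f"mean published score: {mean_published_score(n):.1f}")
```

Even though no variant is actually better than another, the published score climbs as the number of private variants grows. A provider that submits a single model has no such advantage.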

The paper ends with five actionable recommendations to restore trust in the leaderboard: prohibit score retraction, limit private variants, apply model removals uniformly, implement fair sampling, and publicly disclose all deprecations.

That said, I think these issues will be hard to fully resolve. No matter how policies evolve, leaderboards are likely to remain susceptible to gaming. This is one of the fundamental limitations of using public benchmarks for competitive evaluation, and one of the reasons why Hugging Face discontinued its very popular Open LLM Leaderboard.

The Salt

The Salt is my other newsletter that takes a more scientific approach. In The Salt, I primarily feature short reviews of recent papers (for free), detailed analyses of noteworthy publications, and articles centered on LLM evaluation.

This week in The Weekly Salt, I reviewed:

⭐Pre-DPO: Improving Data Utilization in Direct Preference Optimization Using a Guiding Reference Model

BitNet v2: Native 4-bit Activations with Hadamard Transformation for 1-bit LLMs

TTRL: Test-Time Reinforcement Learning

That’s all for this week.

Have a nice weekend!