Hi Everyone,

In this edition of The Weekly Kaitchup:

SGLang and vLLM Fighting Over Meaningless Numbers

PyTorch 2.7: Blackwell Officially Supported

LoRA Fine-Tuning to Make Reasoning Models

Notebook Update

SGLang and vLLM Fighting Over Meaningless Numbers

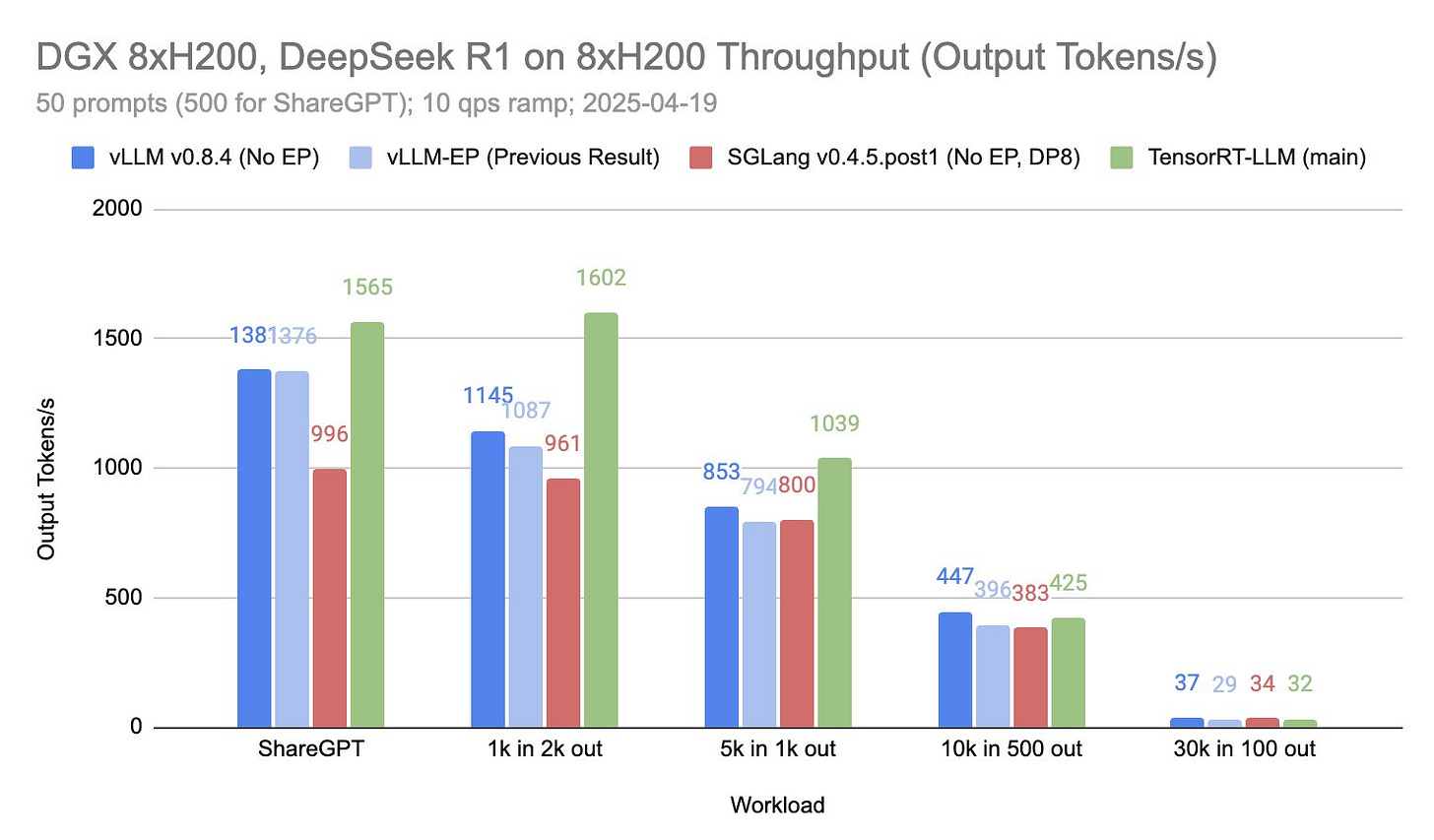

Just a week ago, the vLLM team shared some impressive benchmark results, highlighting vLLM’s performance advantage over SGLang, which you could see as one of their main “competitors”:

The SGLang team replied very aggressively (IMO):

Their numbers looked significantly different from the ones posted by vLLM:

The vLLM team answered with new numbers after updating a few things:

These show much better results than SGLang, but both now look completely outclassed by TensorRT-LLM.

What a waste of time and reputation for both.

This comparison seems valid only for an extremely specific setup: a Nebius DGX node with 8x H200 GPUs, running DeepSeek-R1 with small batches (50 prompts). I’d bet the results look very different with just 1 prompt or 1000 prompts.

Even if these results did generalize across different models, batch sizes, and GPU setups, the performance gap isn’t large enough to matter, especially for longer sequences.

So, should we just switch to TensorRT-LLM as the results suggest?

Maybe, but only if you’re running a similar high-end setup. The truth is, these benchmarks are highly hardware-dependent, and your actual performance will vary a lot depending on the LLM, GPU, and workload you're running.

My advice: benchmark it yourself, with your expected production setup.
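If you want a starting point for that, here is a minimal sketch of a throughput harness. It is not tied to any engine: `run_benchmark` and `fake_generate` are hypothetical names I made up for illustration, and `generate` is assumed to be any callable that takes a batch of prompts and returns the number of tokens generated for each one. You would wrap your own vLLM, SGLang, or TensorRT-LLM call in it.

```python
import time
from typing import Callable, Dict, Sequence


def run_benchmark(
    generate: Callable[[Sequence[str]], Sequence[int]],
    prompts: Sequence[str],
    batch_size: int,
) -> Dict[str, float]:
    """Time one pass over `prompts`, sent to `generate` in fixed-size batches.

    `generate` is an assumption of this sketch: it must return the number
    of generated tokens for each prompt in the batch.
    """
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")

    tokens = 0
    batches = 0
    start = time.perf_counter()
    for i in range(0, len(prompts), batch_size):
        batch = prompts[i:i + batch_size]
        tokens += sum(generate(batch))
        batches += 1
    seconds = time.perf_counter() - start

    return {
        "batch_size": batch_size,
        "batches": batches,
        "tokens": tokens,
        "seconds": seconds,
        "tokens_per_second": tokens / seconds if seconds > 0 else float("inf"),
    }


def fake_generate(batch: Sequence[str]) -> Sequence[int]:
    """Stand-in for a real engine call: pretends each prompt yields 32 tokens."""
    return [32 for _ in batch]
```

Running `run_benchmark(fake_generate, prompts, bs)` for several values of `bs` (say 1, 50, and 1000 prompts at once) with your real model, GPU, and prompt lengths should tell you more than someone else's chart. Repeat each run a few times and discard the warm-up pass.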

As for me:

I stick with vLLM: it’s reliable, efficient, and meets all my needs.

I’m sure SGLang is solid too, but I’ve never felt the need to switch.

And TensorRT-LLM? Impressive, yes, but way too complicated for my use case.

Still, if you’re planning for a heavy production load, it’s worth a look.

PyTorch 2.7: Finally, Blackwell is Officially Supported

Until now, if you had an RTX 50xx GPU based on the Blackwell architecture, say, an RTX 5090, you had to rely on a PyTorch nightly build just to get things working with CUDA 12.8. That meant dealing with the usual instability that comes with nightlies: potential bugs, regressions, and inconsistent behavior.

With PyTorch 2.7, that’s no longer necessary. You can now use a stable release with full support for Blackwell GPUs and CUDA 12.8. I’ll be testing this soon and will update the configuration section of the following article to show how to set up the RTX 5090 for both training and inference using PyTorch 2.7:

Beyond Blackwell support, PyTorch 2.7 brings other meaningful updates. Notably, the torch.compile system continues to improve. If you haven’t tried it yet, it’s worth exploring. It’s an easy way to speed up inference and reduce memory usage without much code change.

On the LLM side, the FlexAttention stack has also advanced, with improved first-token processing and better throughput on x86 CPUs via micro-GEMM templating. The new APIs are inference-ready and integrate cleanly with torch.compile. There’s also a prototype Context Parallel API, which runs scaled dot-product attention with context parallelism and works with several attention backends.
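To check whether a local install meets the Blackwell requirements mentioned above (PyTorch 2.7 or later with CUDA 12.8 or later), a quick sketch like the following can help. The helper names are hypothetical, and the check only compares version numbers: it does not prove that your specific GPU works. `torch.__version__`, `torch.version.cuda`, and `torch.cuda.get_device_capability` are the only PyTorch attributes it relies on.

```python
import re
from typing import Optional, Tuple


def version_tuple(version: str) -> Tuple[int, ...]:
    """Turn '2.7.0+cu128' into (2, 7, 0), ignoring the local build suffix."""
    core = version.split("+")[0]
    return tuple(int(part) for part in re.findall(r"\d+", core)[:3])


def meets_blackwell_requirements(torch_version: str, cuda_version: Optional[str]) -> bool:
    """True if the versions are at least PyTorch 2.7 and CUDA 12.8.

    This compares version numbers only. Note that a 2.7 nightly build
    also passes, so look at the full version string if stability matters.
    """
    if cuda_version is None:  # CPU-only build of PyTorch
        return False
    return version_tuple(torch_version) >= (2, 7) and version_tuple(cuda_version) >= (12, 8)


def describe_local_install() -> str:
    """Report on the PyTorch install in the current environment."""
    import torch  # imported here so the helpers above work without PyTorch

    ok = meets_blackwell_requirements(torch.__version__, torch.version.cuda)
    lines = [f"torch {torch.__version__}, CUDA {torch.version.cuda}, requirements met: {ok}"]
    if torch.cuda.is_available():
        name = torch.cuda.get_device_name(0)
        capability = torch.cuda.get_device_capability(0)
        lines.append(f"GPU 0: {name}, compute capability {capability}")
    return "\n".join(lines)
```

Calling `print(describe_local_install())` in your environment shows the versions that are actually loaded, which is the first thing to check when a new GPU refuses to run.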

LoRA Fine-Tuning to Make Reasoning Models

While supervised fine-tuning (SFT) on reasoning traces from stronger models is a common way to build reasoning-capable language models, it relies heavily on high-quality demonstrations and often results in shallow imitation rather than true reasoning.

Reinforcement learning (RL) offers a promising alternative: it enables models to explore reasoning paths guided by reward signals. But in practice, it’s computationally expensive and challenging to scale.

Can we train language models to reason using RL, without blowing the compute budget?

A new paper studies this in detail:

Tina: Tiny Reasoning Models via LoRA

The authors skip large models and instead build on a compact 1.5B parameter model, DeepSeek-R1-Distill-Qwen-1.5B, which already shows decent reasoning ability thanks to its distillation background. The goal is to isolate how much RL alone can improve reasoning. To keep things lightweight, they apply LoRA during RL, training only a small number of parameters instead of the full model. This approach drastically reduces cost and makes it easier to run experiments even on limited hardware. We did something similar with GRPO, but using reasoning traces produced by stronger models:

The result is the Tina family (Tiny Reasoning Models via LoRA).

Hugging Face: Tina - LoRA-based RL Reasoning

Despite their small size, these models perform competitively with full-model fine-tuned baselines, achieving up to a 20% reasoning performance gain and strong accuracy on math benchmarks like AIME24.

The authors hypothesize that LoRA helps models quickly adapt their reasoning format during RL without overwriting the base model’s knowledge. They also share their code here:

GitHub: shangshang-wang/Tina
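To give a sense of why LoRA makes this so cheap, here is a small back-of-the-envelope sketch. For a single weight matrix of shape d_out x d_in, LoRA trains two low-rank factors with rank * (d_out + d_in) parameters in total, instead of the d_out * d_in parameters of the full matrix. The dimensions and rank below are illustrative assumptions, not the settings used in the paper.

```python
def full_param_count(d_out: int, d_in: int) -> int:
    """Parameters updated when fine-tuning the full weight matrix."""
    return d_out * d_in


def lora_param_count(d_out: int, d_in: int, rank: int) -> int:
    """Parameters in the two LoRA factors: B (d_out x rank) and A (rank x d_in)."""
    return rank * (d_out + d_in)


# Illustrative values only: one square 1536 x 1536 projection and rank 16.
d_out, d_in, rank = 1536, 1536, 16
full = full_param_count(d_out, d_in)
lora = lora_param_count(d_out, d_in, rank)
print(f"full: {full:,}  LoRA: {lora:,}  ratio: {lora / full:.2%}")
```

With these assumed numbers, the adapter holds about 2% of the parameters of the matrix it adapts, so gradients and optimizer states shrink accordingly.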

Notebook Update

Since launching The Kaitchup, I’ve made it a priority to keep all notebooks up-to-date and compatible with the latest versions of essential packages, whether it’s TRL, Transformers, or PyTorch. For a long time, this approach worked great. You could open any of the 150 notebooks, and things would run smoothly, or at worst, require minimal tweaks.

Unfortunately, that’s no longer the case.

The way Hugging Face is rolling out updates has changed. Updates to Transformers or TRL can now introduce breaking changes without warnings. A recent example: an update broke support for fine-tuning Qwen2.5 using FlashAttention2 and right-padding. That setup simply doesn’t work anymore. As a result, several Qwen2.5 fine-tuning notebooks are currently broken. Hugging Face is aware, and a fix is on the way. It also delayed my update of the Qwen2.5 Toolbox.

And this isn't a one-off. Every TRL update seems to introduce new bugs or incompatibilities. For instance, you could previously run GRPO on a single GPU using vLLM, but that’s no longer possible with the latest version.

These constant changes have made it clear: my current policy isn’t sustainable. I can’t realistically recheck and update over 150 notebooks every time a package is updated.

🛠️ So here’s the new plan:

Going forward, I’ll include a list of exact package versions used the last time the notebook was successfully run. You’ll find this list at the bottom of each new notebook. Over time, I’ll also work on adding version info to existing ones.
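For those who want to record versions in their own notebooks, here is a minimal sketch using only the standard library. `freeze_versions` is a hypothetical helper name, and the package list in the usage note is just an example: it is not the exact list I will ship.

```python
from importlib import metadata
from typing import Callable, Iterable, List


def freeze_versions(
    packages: Iterable[str],
    get_version: Callable[[str], str] = metadata.version,
) -> List[str]:
    """Return pip-style 'name==version' lines for the given packages.

    Packages that are not installed are reported as comment lines so the
    output can still be pasted into a requirements file.
    """
    lines = []
    for name in packages:
        try:
            lines.append(f"{name}=={get_version(name)}")
        except metadata.PackageNotFoundError:
            lines.append(f"# {name} is not installed")
    return lines
```

In a notebook's last cell, something like `print("\n".join(freeze_versions(["torch", "transformers", "trl", "peft", "vllm"])))` prints the versions that were installed when it last ran. Pasting those lines into a `pip install` command should get you back to a working setup.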

Some of you suggested creating a Docker image, and I agree, it’s a good solution. But in practice, it’s a big time investment, and I suspect many of you are using platforms like Google Colab, where Docker support is limited or nonexistent.

This new strategy should work: it keeps things transparent and reproducible, without overcomplicating the setup for the majority of users.

The Salt

The Salt is my other newsletter that takes a more scientific approach. In The Salt, I primarily feature short reviews of recent papers (for free), detailed analyses of noteworthy publications, and articles centered on LLM evaluation.

I reviewed in The Weekly Salt:

⭐70% Size, 100% Accuracy: Lossless LLM Compression for Efficient GPU Inference via Dynamic-Length Float

Could Thinking Multilingually Empower LLM Reasoning?

SFT or RL? An Early Investigation into Training R1-Like Reasoning Large Vision-Language Models

Support The Kaitchup by becoming a Pro subscriber:

What You'll Get

Priority Support – Fast, dedicated assistance whenever you need it to fine-tune or optimize your LLM/VLM. I answer all your questions!

Lifetime Access to All the AI Toolboxes – Repositories containing Jupyter notebooks optimized for LLMs and providing implementation examples of AI applications.

Full Access to The Salt – Dive deeper into exclusive research content. Already a paid subscriber to The Salt? You’ll be refunded for the unused time!

Early Access to Research – Be the first to access groundbreaking studies and models by The Kaitchup.

30% Discount for Group Subscriptions – Perfect for teams and collaborators.

The Kaitchup’s Book – A comprehensive guide to LLM fine-tuning. Already bought it? You’ll be fully refunded!

All Benefits from Regular Kaitchup Subscriptions – Everything you already love, plus more. Already a paid subscriber? You’ll be refunded for the unused time!

That’s all for this week.

If you like reading The Kaitchup, consider sharing it with friends and coworkers. Group subscriptions get a 20% discount (30% for Pro subscribers):

Have a nice weekend!