Hi Everyone,

In this edition of The Weekly Kaitchup:

New Nemotron Hybrid Models

The “1-bit” LLM by Microsoft Is Finally Here

New Nemotron Hybrid Models

NVIDIA dropped another set of impressive open models this week — and this time, they’re hybrid.

The new Nemotron-H series combines Mamba and Transformer layers to tackle a growing problem in large models: inference speed, especially for long-context reasoning. By replacing many of the traditional self-attention layers with Mamba layers, Nemotron-H reduces memory and compute overhead during generation. A Mamba layer carries a fixed-size state instead of a key-value cache that grows with every token, so its per-token cost stays constant as the sequence gets longer.
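To see why this matters for long contexts, here is a back-of-the-envelope sketch. It compares the memory a pure Transformer must keep during generation (its key-value cache) with the memory of a hybrid that keeps only a few attention layers. All layer counts and dimensions are hypothetical round numbers, not Nemotron-H's actual configuration. The Mamba state is also simplified to `d_inner × d_state` values per layer.

```python
def kv_cache_bytes(n_attn_layers, n_kv_heads, head_dim, seq_len, bytes_per_elem=2):
    # Keys and values (factor 2) are cached for every past token in every attention layer.
    return 2 * n_attn_layers * n_kv_heads * head_dim * seq_len * bytes_per_elem

def ssm_state_bytes(n_mamba_layers, d_inner, d_state, bytes_per_elem=2):
    # A Mamba-style layer keeps a fixed-size recurrent state: seq_len does not appear.
    # (The small convolution buffer is ignored in this simplified sketch.)
    return n_mamba_layers * d_inner * d_state * bytes_per_elem

GiB = 1024 ** 3

# Hypothetical 32-layer models, per sequence, in 16-bit precision.
for seq_len in (8_192, 131_072, 1_048_576):
    pure = kv_cache_bytes(32, 8, 128, seq_len)
    hybrid = kv_cache_bytes(4, 8, 128, seq_len) + ssm_state_bytes(28, 8192, 128)
    print(f"{seq_len:>9} tokens: pure Transformer {pure / GiB:7.2f} GiB | hybrid {hybrid / GiB:6.2f} GiB")
```

The hybrid still has a growing cache from its few attention layers, but it grows eight times more slowly here. The Mamba part adds a constant that does not depend on context length.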

Hybrid architectures aren’t a new idea. Models like Jamba and Zamba have already shown the potential of mixing state-space layers such as Mamba with attention. But with Nemotron-H, NVIDIA is pushing this approach at scale.

The 56B variant of Nemotron-H was pre-trained from scratch on 20 trillion tokens using FP8 precision with per-tensor scaling, making it one of the largest public FP8 training runs to date.
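A minimal sketch of what "per-tensor scaling" means follows, assuming the FP8 E4M3 format (largest finite value 448). It also assumes the scale is computed from the tensor's current absolute maximum. A whole tensor shares a single scale that maps its largest magnitude onto the FP8 range, and each scaled value is rounded to the nearest E4M3 number. This simulates the rounding in plain Python. It is not NVIDIA's training code, and real FP8 recipes involve more choices (format per tensor type, how scales are tracked, which layers stay in higher precision).

```python
import math

E4M3_MAX = 448.0  # largest finite value in the FP8 E4M3 format

def round_to_e4m3(v):
    """Round a float to the nearest FP8 E4M3 value (round-half-to-even, saturating)."""
    if v == 0:
        return 0.0
    sign = -1.0 if v < 0 else 1.0
    a = min(abs(v), E4M3_MAX)
    # 3 mantissa bits -> 8 steps per power of two; below 2**-6 values are subnormal (step 2**-9).
    e = max(math.floor(math.log2(a)), -6)
    step = 2.0 ** (e - 3)
    return sign * min(round(a / step) * step, E4M3_MAX)

def fp8_per_tensor(x):
    """Per-tensor scaling: one scale maps the tensor's absmax onto E4M3_MAX."""
    amax = max(abs(v) for v in x) or 1.0
    scale = amax / E4M3_MAX
    q = [round_to_e4m3(v / scale) for v in x]  # the values that would be stored in FP8
    return q, scale

x = [0.001, -0.02, 3.5]
q, scale = fp8_per_tensor(x)
x_hat = [v * scale for v in q]  # dequantized approximation of x
print(q, x_hat)
```

Because there is only one scale per tensor, a single large outlier pushes small values toward the coarse end of the format. This sensitivity is one reason FP8 training at this scale is notable.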

To make the model practical for GPUs like the RTX 5090, NVIDIA compressed it into a 47B version using pruning and distillation. It retains nearly the same accuracy while supporting inference over million-token contexts in FP4. Yes, FP4 is here, and it looks ready to take over from our usual INT4 setups like AWQ or GPTQ. I’m curious to see how quickly this data type becomes mainstream now that Blackwell GPUs support it natively.
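The difference between FP4 and INT4 is easiest to see from their value grids. FP4 (E2M1: 1 sign, 2 exponent, and 1 mantissa bit) places more levels near zero and fewer at large magnitudes. INT4 is uniform. The sketch below quantizes a small block with each grid, using one absmax scale per block. Block-scaled FP4 formats such as NVFP4 and MXFP4 also share a scale per small block of elements. However, the scale here is kept in full precision for simplicity, and this is not the quantization recipe NVIDIA used for Nemotron-H.

```python
# Magnitudes representable in FP4 E2M1.
E2M1 = [0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0]
FP4_GRID = sorted({s * m for m in E2M1 for s in (1.0, -1.0)})  # 15 distinct values
INT4_GRID = [float(i) for i in range(-7, 8)]  # symmetric INT4 grid (-8 left unused)

def quantize_block(block, grid):
    """Scale the block so its absmax hits the grid's largest magnitude,
    snap each value to the nearest grid point, then dequantize."""
    gmax = max(abs(g) for g in grid)
    amax = max(abs(v) for v in block) or 1.0
    scale = amax / gmax
    return [min(grid, key=lambda g: abs(g - v / scale)) * scale for v in block]

block = [0.6, -3.0, 1.2, 0.1]
print("FP4 :", quantize_block(block, FP4_GRID))
print("INT4:", quantize_block(block, INT4_GRID))
```

Which grid gives lower error depends on how the values are distributed within each block. Non-uniform FP4 levels suit the roughly bell-shaped distributions typical of LLM weights and activations.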

The 8B version was also trained on a massive token horizon (15T tokens) and used in a controlled comparison between hybrid and pure Transformer architectures. It matched or exceeded the accuracy of the Transformer baseline on math, coding, and commonsense tasks, with significantly better inference throughput. After additional supervised fine-tuning and reward-based optimization, it performs on par with top-tier 8B instruct models, including on long-context tasks up to 128k tokens.

Accuracy-wise, the models are competitive, and NVIDIA's evaluations are solid; they re-ran most comparisons themselves instead of relying on sometimes-inconsistent numbers from prior reports.

Still, the real strength of these models is inference speed. That’s the whole point of going hybrid. They’re built to handle long contexts efficiently.

For the 56B model, they benchmarked throughput against larger baselines, which are naturally slower because of their size. The more interesting result is the 8B variant: it achieves higher inference throughput than Qwen2.5 7B despite being slightly larger.

The blog post is full of interesting details. I recommend reading it:

Nemotron-H: A Family of Accurate, Efficient Hybrid Mamba-Transformer Models

The models are available here and supported by vLLM:

Hugging Face: Nemotron-H

The “1-bit” LLM by Microsoft Is Finally Here

We’ve been waiting a long time for this, and it’s finally here: Microsoft’s much-anticipated “1-bit LLM.” Technically, it’s a ternary model with 1.58 bits per weight.
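Where does 1.58 come from? A weight with three possible values carries log2(3) ≈ 1.585 bits of information. Real storage formats have to round this up in some way. The sketch below shows one simple way to get close: packing five ternary weights into a single byte (3^5 = 243 ≤ 256), for 1.6 bits per weight. This base-3 scheme is only an illustration, not the layout used in Microsoft's released weights or in bitnet.cpp.

```python
import math

# Information content of one ternary weight in {-1, 0, +1}.
bits_per_trit = math.log2(3)  # about 1.585, hence "1.58-bit"

def pack5(trits):
    """Pack five ternary values into one byte as base-3 digits (illustrative scheme)."""
    assert len(trits) == 5 and all(t in (-1, 0, 1) for t in trits)
    value = 0
    for t in reversed(trits):
        value = value * 3 + (t + 1)  # map {-1, 0, 1} -> {0, 1, 2}
    return value  # always in 0..242, so it fits in a byte

def unpack5(byte):
    """Recover the five ternary values from a packed byte."""
    trits = []
    for _ in range(5):
        trits.append(byte % 3 - 1)
        byte //= 3
    return trits

w = [1, -1, 0, 0, 1]
packed = pack5(w)
print(f"{bits_per_trit:.3f} bits of information, {8 / 5} bits stored per weight")
print(packed, unpack5(packed))
```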

Hugging Face: microsoft/bitnet-b1.58-2B-4T

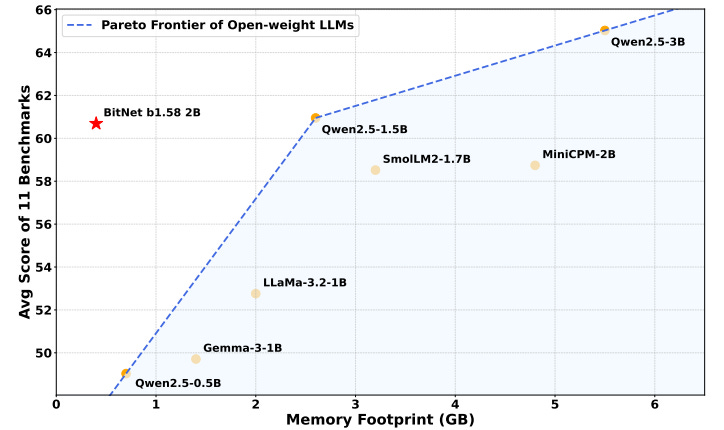

BitNet b1.58 2B4T is a 2-billion-parameter LLM trained natively at “1-bit” precision. Unlike typical post-training quantized models, it was trained from scratch with 1.58-bit ternary weights and 8-bit activations. This shows that, when done correctly, extremely low-bit precision can match the performance of full-precision models of similar size.

The model uses a Transformer architecture modified with BitLinear layers, along with Rotary Positional Embeddings (RoPE), squared ReLU (ReLU²) activations, and sublayer normalization (subln), with no bias terms in the linear or normalization layers. It was trained on a 4-trillion-token corpus of public text, code, and synthetic math data. During training, weights are quantized to ternary values with an absmean quantizer, and activations are quantized to 8-bit integers with a per-token absmax quantizer.
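Here is a minimal forward-pass sketch of these two quantizers inside a BitLinear-style matrix-vector product, written in plain Python. Weights are divided by their mean absolute value, then rounded and clipped to {-1, 0, +1}. Activations are scaled so that their absolute maximum maps to 127, then rounded to integers. The `eps` value, the 127 range, and the omission of the normalization before quantization are simplifying assumptions. The sketch also ignores how training handles the non-differentiable rounding, and it is not Microsoft's implementation.

```python
def absmean_ternary(W, eps=1e-5):
    """Quantize a weight matrix (list of rows) to {-1, 0, +1} with one absmean scale."""
    flat = [abs(w) for row in W for w in row]
    gamma = sum(flat) / len(flat)
    Wq = [[max(-1, min(1, round(w / (gamma + eps)))) for w in row] for row in W]
    return Wq, gamma

def absmax_int8(x):
    """Quantize one token's activation vector to 8-bit integers with a per-token absmax scale."""
    amax = max(abs(v) for v in x) or 1.0  # avoid division by zero for an all-zero vector
    scale = 127 / amax
    xq = [max(-128, min(127, round(v * scale))) for v in x]
    return xq, scale

def bitlinear(x, W):
    """y = W x with ternary weights and int8 activations, rescaled back to real values."""
    Wq, gamma = absmean_ternary(W)
    xq, scale = absmax_int8(x)
    # Integer-only accumulation: each product is +v, -v, or 0, so no multiplications are needed.
    acc = [sum(w * v for w, v in zip(row, xq)) for row in Wq]
    return [a * gamma / scale for a in acc]

W = [[0.5, -1.2, 0.05], [2.0, -0.3, 0.0]]
x = [0.1, -0.5, 0.25]
print(absmean_ternary(W)[0], absmax_int8(x)[0], bitlinear(x, W))
```

The appeal of this design is visible in `acc`: with ternary weights, the inner product reduces to additions and subtractions of 8-bit integers. Specialized kernels like those in bitnet.cpp exploit this pattern.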

Multiple versions are released: packed 1.58-bit weights for inference, BF16 master weights for fine-tuning, and GGUF format for CPU-friendly inference.

It runs with Transformers, but, as Microsoft notes in the model card, Transformers lacks the specialized kernels this type of model needs, so don’t expect speed or efficiency gains there. For efficient inference, use bitnet.cpp, which we already studied in this article:

More details in this technical report:

BitNet b1.58 2B4T Technical Report

Its memory footprint is roughly that of a 300M-parameter model in 16-bit precision, yet it performs on par with Qwen2.5 1.5B!
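A back-of-the-envelope check of the weight memory follows. It counts all ~2B parameters at ternary precision and ignores activations, the KV cache, and any parts (such as embeddings) that may be kept in higher precision. The 2-bit case assumes a simple packed layout and is not a statement about Microsoft's actual format.

```python
import math

def weight_memory_gb(n_params, bits_per_weight):
    # Weights only: bits -> bytes -> GB (10**9 bytes).
    return n_params * bits_per_weight / 8 / 1e9

n = 2e9  # roughly 2B parameters
ideal = weight_memory_gb(n, math.log2(3))  # information-theoretic ternary storage
packed2 = weight_memory_gb(n, 2)           # assumed simple 2-bit packing
bf16 = weight_memory_gb(n, 16)             # same model stored in 16-bit

for name, gb in [("ternary, ideal", ideal), ("ternary, 2-bit packed", packed2), ("bf16", bf16)]:
    # A 16-bit parameter takes 2 bytes, so GB / 2 gives the equivalent 16-bit parameter count.
    print(f"{name:>22}: {gb:.2f} GB  (~{gb / 2 * 1000:.0f}M 16-bit params)")
```

This gives 0.4 to 0.5 GB for the weights, or 200 to 250M parameters' worth of 16-bit storage. That is the same order of magnitude as the ~300M figure above. The exact equivalent depends on packing and on which parts stay in higher precision.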

The Salt

The Salt is my other newsletter that takes a more scientific approach. In The Salt, I primarily feature short reviews of recent papers (for free), detailed analyses of noteworthy publications, and articles centered on LLM evaluation.

In The Weekly Salt, I reviewed:

⭐ModernBERT or DeBERTaV3? Examining Architecture and Data Influence on Transformer Encoder Models Performance

Self-Steering Language Models

Iterative Self-Training for Code Generation via Reinforced Re-Ranking

Support The Kaitchup by becoming a Pro subscriber:

What You'll Get

Priority Support – Fast, dedicated assistance whenever you need it to fine-tune or optimize your LLM/VLM. I answer all your questions!

Lifetime Access to All the AI Toolboxes – Repositories containing Jupyter notebooks optimized for LLMs and providing implementation examples of AI applications.

Full Access to The Salt – Dive deeper into exclusive research content. Already a paid subscriber to The Salt? You’ll be refunded for the unused time!

Early Access to Research – Be the first to access groundbreaking studies and models by The Kaitchup.

30% Discount for Group Subscriptions – Perfect for teams and collaborators.

The Kaitchup’s Book – A comprehensive guide to LLM fine-tuning. Already bought it? You’ll be fully refunded!

All Benefits from Regular Kaitchup Subscriptions – Everything you already love, plus more. Already a paid subscriber? You’ll be refunded for the unused time!

That’s all for this week.

If you like reading The Kaitchup, consider sharing it with friends and coworkers (there is a 20% (or 30% for Pro subscribers) discount for group subscriptions):

Have a nice weekend!