Hi Everyone,

In this edition of The Weekly Kaitchup:

DeepSeek’s #OpenSourceWeek

olmOCR: A PDF to Text Converter That Actually Works!

Phi-4 Mini and Multimodal

DeepSeek’s #OpenSourceWeek

This week, DeepSeek showed how far ahead they are by sharing some of the technology behind DeepSeek-R1. They released something new each day, giving a look into their work.

Here is a summary:

FlashMLA: An MLA decoding kernel for Hopper GPUs, optimized for variable-length sequences. It supports BF16 precision and a paged KV cache with a block size of 64. DeepSeek claims it reaches up to 3000 GB/s in memory-bound configurations and 580 TFLOPS in compute-bound configurations on the H800 (the GPU DeepSeek used to train V3 and R1). vLLM is implementing it.

    from flash_mla import get_mla_metadata, flash_mla_with_kvcache

    tile_scheduler_metadata, num_splits = get_mla_metadata(cache_seqlens, s_q * h_q // h_kv, h_kv)

    for i in range(num_layers):
        ...
        o_i, lse_i = flash_mla_with_kvcache(
            q_i, kvcache_i, block_table, cache_seqlens, dv,
            tile_scheduler_metadata, num_splits, causal=True,
        )
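To make the "paged KV cache with a block size of 64" more concrete, here is a minimal sketch of how a block table can map a token position to a physical cache block. This is plain Python for illustration only, not FlashMLA code: the helper names `locate_token` and `blocks_needed` and the example block IDs are my own assumptions.

```python
BLOCK_SIZE = 64  # block size stated for FlashMLA's paged KV cache


def blocks_needed(seq_len, block_size=BLOCK_SIZE):
    """Number of cache blocks a sequence of seq_len tokens occupies."""
    return -(-seq_len // block_size)  # ceiling division


def locate_token(block_table, seq_idx, token_pos, block_size=BLOCK_SIZE):
    """Map a logical token position to (physical_block_id, offset_in_block)."""
    logical_block, offset = divmod(token_pos, block_size)
    return block_table[seq_idx][logical_block], offset


# Hypothetical batch: two sequences of different lengths sharing one block pool.
cache_seqlens = [130, 70]
block_table = [
    [7, 2, 9],  # sequence 0: 130 tokens -> 3 blocks, scattered in the pool
    [4, 0],     # sequence 1: 70 tokens -> 2 blocks
]

# The last token of sequence 0 lives in physical block 9, at offset 1.
last_block, last_offset = locate_token(block_table, 0, cache_seqlens[0] - 1)
```

Because each sequence only holds the blocks it needs, sequences of very different lengths can share the same cache without padding every one of them to the longest length.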

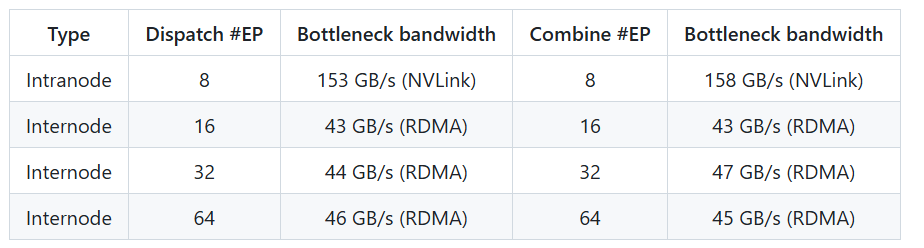

DeepEP: DeepSeek-R1’s architecture uses DeepSeekMoE. DeepEP is a communication library built for MoE model training and inference. It optimizes all-to-all communication, with support for both intranode and internode connections using NVLink and RDMA. It includes high-throughput kernels for training and inference prefilling, low-latency kernels for inference decoding, and native FP8 dispatch support. Flexible GPU resource control allows computation and communication to run in parallel.
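To see why MoE needs all-to-all communication, here is a small sketch of the bookkeeping behind a dispatch step: each token is routed to its top-k experts, and every (token, expert) pair has to travel to the GPU rank hosting that expert. This is plain Python, not the DeepEP API. The function `build_dispatch` and the contiguous expert-to-rank mapping are assumptions made for illustration.

```python
from collections import defaultdict


def build_dispatch(topk_experts, experts_per_rank):
    """Group (token_id, expert_id) pairs by the rank hosting each expert.

    Assumes experts are laid out contiguously: rank r hosts experts
    r * experts_per_rank ... (r + 1) * experts_per_rank - 1.
    """
    send = defaultdict(list)
    for token_id, experts in enumerate(topk_experts):
        for expert_id in experts:
            send[expert_id // experts_per_rank].append((token_id, expert_id))
    return dict(send)


# Hypothetical routing: 4 tokens, top-2 experts each, 8 experts on 4 ranks.
topk_experts = [[0, 5], [1, 4], [5, 6], [4, 7]]
plan = build_dispatch(topk_experts, experts_per_rank=2)

# Number of (token, expert) pairs each rank receives.
counts = {rank: len(pairs) for rank, pairs in sorted(plan.items())}
```

In this toy routing, rank 2 receives twice as many pairs as ranks 0 and 3, and rank 1 receives none. Every rank sends to and receives from every other rank, which is the all-to-all pattern, and the traffic is uneven whenever some experts are more popular than others.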

DeepGEMM: An FP8 GEMM library used for V3/R1 training and inference. It supports dense GEMMs as well as two MoE GEMM layouts, which gives flexibility for different workloads. DeepSeek reports 1350+ FP8 TFLOPS on Hopper GPUs. The library remains lightweight, with no heavy dependencies: it is fully Just-In-Time compiled, with core logic in around 300 lines, yet it outperforms expert-tuned kernels across most matrix sizes.
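What makes a MoE GEMM different from a dense one? A rough way to picture it is a grouped GEMM: tokens are sorted by expert, and each group of rows is multiplied by its own expert's weight matrix. The sketch below shows that idea in plain Python on nested lists. It does not use DeepGEMM, does not emulate FP8, and the layout shown (groups concatenated along the row axis) is my assumption of what one MoE layout can look like. All function names are hypothetical.

```python
def matmul(a, b):
    """Plain dense GEMM on nested lists: (m x k) @ (k x n)."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def grouped_gemm_contiguous(tokens, group_sizes, expert_weights):
    """Rows of `tokens` are sorted by expert; each slice meets its own weights."""
    out, start = [], 0
    for size, weight in zip(group_sizes, expert_weights):
        out.extend(matmul(tokens[start:start + size], weight))
        start += size
    return out


# Hypothetical example: 3 tokens, the first 2 routed to expert 0, the last to expert 1.
tokens = [[1, 0], [0, 1], [1, 1]]
group_sizes = [2, 1]
expert_weights = [
    [[1, 2], [3, 4]],      # expert 0
    [[10, 0], [0, 10]],    # expert 1
]
result = grouped_gemm_contiguous(tokens, group_sizes, expert_weights)
```

A real kernel fuses all groups into one launch instead of looping over experts, which is where most of the speedup comes from.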

DualPipe and EPLB: In expert parallelism (EP), different experts are assigned to GPUs, and workload imbalance can occur. DeepSeek-V3 addresses this with a redundant experts strategy, duplicating heavily loaded experts and assigning them heuristically for balance. Group-limited expert routing further reduces inter-node data traffic by placing experts from the same group on the same node when possible. The open-sourced eplb.py algorithm computes balanced expert replication and placement. They also introduced DualPipe, a bidirectional pipeline parallelism algorithm that fully overlaps forward and backward computation-communication phases while reducing pipeline bubbles.
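The redundant experts strategy is easier to grasp with a toy example. The sketch below is a simplified greedy version of the idea, not the actual eplb.py code: it first hands extra replica slots to whichever expert has the highest load per replica, then places replicas on GPUs, heaviest first, always picking the least-loaded GPU with a free slot. The function names and the load numbers are assumptions, and it ignores group-limited routing and node boundaries entirely.

```python
def replicate_experts(loads, num_slots):
    """Start with one replica per expert, then give each extra slot
    to the expert with the highest load per replica."""
    replicas = [1] * len(loads)
    for _ in range(num_slots - len(loads)):
        hot = max(range(len(loads)), key=lambda e: loads[e] / replicas[e])
        replicas[hot] += 1
    return replicas


def place_replicas(loads, replicas, num_gpus):
    """Greedy placement: heaviest replica first, onto the least-loaded
    GPU that still has a free slot. Assumes slots divide evenly."""
    slots_per_gpu = sum(replicas) // num_gpus
    items = sorted(
        ((loads[e] / replicas[e], e)
         for e in range(len(loads)) for _ in range(replicas[e])),
        reverse=True,
    )
    gpus = [{"load": 0.0, "experts": []} for _ in range(num_gpus)]
    for load, expert in items:
        free = [g for g in gpus if len(g["experts"]) < slots_per_gpu]
        target = min(free, key=lambda g: g["load"])
        target["load"] += load
        target["experts"].append(expert)
    return gpus


# Hypothetical per-expert loads: expert 0 is far more popular than the rest.
loads = [90, 30, 20, 20, 10, 10]
replicas = replicate_experts(loads, num_slots=8)   # 6 experts, 8 slots
gpus = place_replicas(loads, replicas, num_gpus=4)  # 2 slots per GPU
gpu_loads = [g["load"] for g in gpus]
```

With these numbers, the hot expert ends up with three replicas of load 30 each, and no GPU carries more than 50 units of load. Without replication, the GPU hosting expert 0 would carry at least 90.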

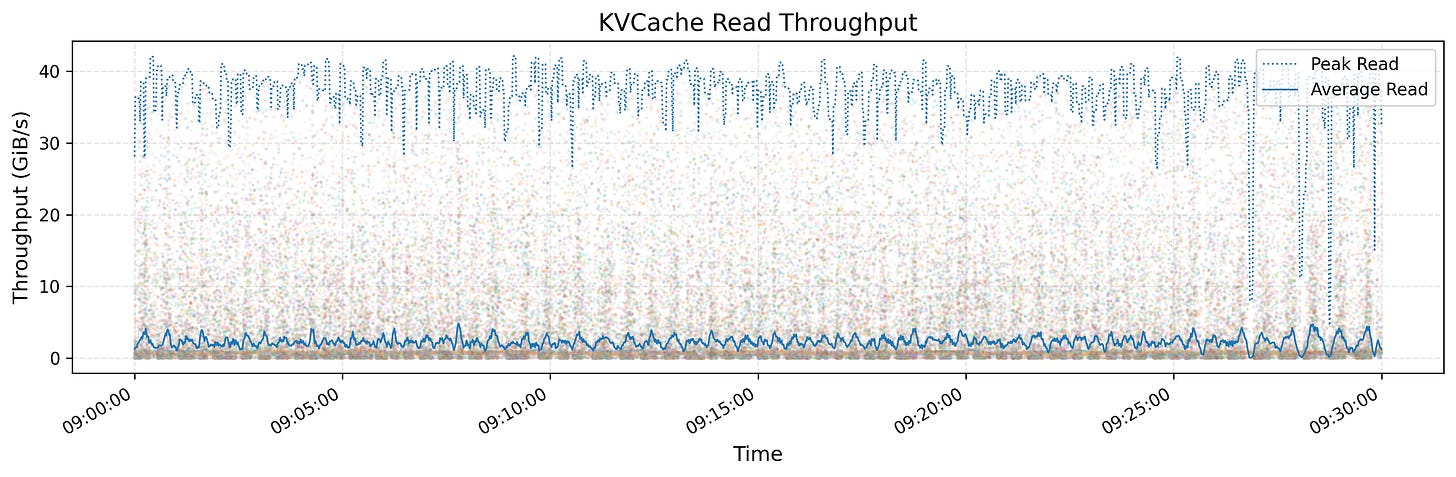

Fire-Flyer File System (3FS): A high-performance distributed file system for AI workloads. It leverages SSDs and RDMA networks for shared storage, simplifying distributed application development. It supports data preparation, random access dataloaders, high-throughput checkpointing, and a KVCache for inference.

It's now clear that DeepSeek AI wasn't exaggerating when they said training R1 cost only a few million dollars in compute. Their libraries and kernels likely played a big role in keeping costs down.

This also explains how they can offer R1 and V3 through their API at such a low cost. And it's safe to assume they haven't shared everything, keeping some advantages for themselves.

The impact of these releases for the AI community is obvious—each of these repositories has already gained thousands of stars!

olmOCR: A PDF to Text Converter That Actually Works!

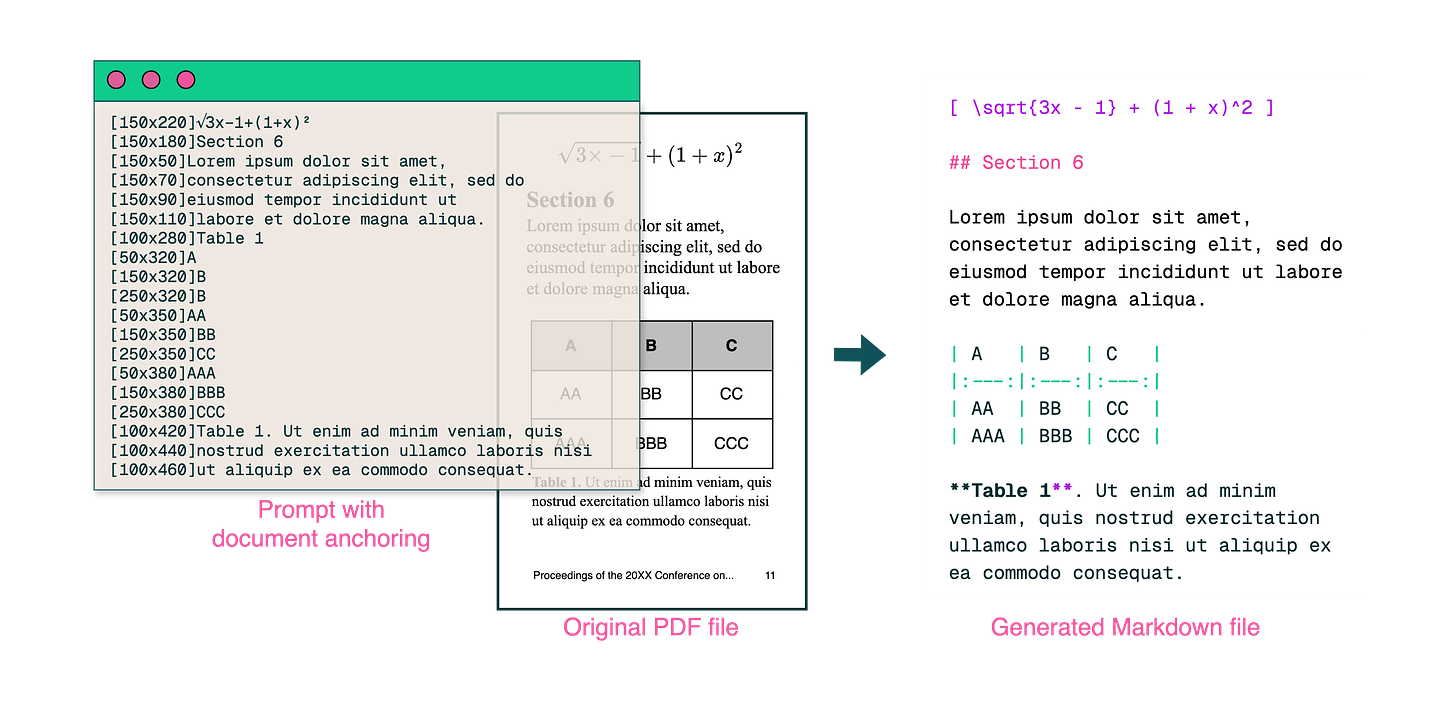

olmOCR is an LLM trained by AI2 for converting PDFs and document images into structured text. It’s fine-tuned on 250,000 pages from various sources, handles both digital and scanned documents, and outputs Markdown-formatted text with support for equations, tables, and handwriting. olmOCR works with SGLang and vLLM inference engines, scales across multiple GPUs, and includes heuristics for handling common parsing errors.

It is built on Qwen2-VL-7B-Instruct.

olmOCR uses document anchoring to improve extraction quality by leveraging existing text and metadata from PDFs.

Evaluations by the authors show it performs better than other OCR tools, with an Elo score above 1800. It was preferred in 61.3% of comparisons against Marker, 58.6% against GOT-OCR, and 71.4% against MinerU.
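If you are wondering how a preference rate relates to an Elo score, the standard Elo model links the two with a simple formula: a win probability p corresponds to a rating gap of 400 * log10(p / (1 - p)). The sketch below applies it to the head-to-head rates quoted above. This is only a back-of-the-envelope reading. The authors' Elo scores are computed over all their pairwise comparisons, so these gaps are not expected to match their leaderboard exactly, and the function names are mine.

```python
import math


def elo_gap_from_win_rate(p):
    """Rating gap implied by a head-to-head win probability p (0 < p < 1)."""
    return 400 * math.log10(p / (1 - p))


def expected_win_rate(gap):
    """Inverse mapping: win probability implied by a rating gap."""
    return 1 / (1 + 10 ** (-gap / 400))


# Preference rates for olmOCR quoted in the evaluation above.
win_rates = {"Marker": 0.613, "GOT-OCR": 0.586, "MinerU": 0.714}
gaps = {tool: elo_gap_from_win_rate(p) for tool, p in win_rates.items()}

for tool, gap in gaps.items():
    print(f"olmOCR vs {tool}: implied gap of about {gap:.0f} Elo points")
```

Under this model, a 61.3% preference rate corresponds to a gap of roughly 80 Elo points, and 71.4% to roughly 160.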

If you own a large database of PDFs that you want to use for fine-tuning LLMs, try this model to convert them into clean, structured token sequences.

The model is here:

source: Efficient PDF Text Extraction with Vision Language Models

Phi-4 Mini and Multimodal

This week we also got new Phi-4 models. We will review them next week (or maybe this weekend as I’ve almost finished writing the article).

Meanwhile, you can find the quantized models here:

I won’t quantize the multimodal version: its unusual architecture (a mixture of LoRA adapters) makes it very challenging to quantize accurately.

GPU Selection of the Week:

To get the prices of GPUs, I use Amazon.com. If the price of a GPU drops on Amazon, there is a high chance that it will also be lower at your favorite GPU provider. All the links in this section are Amazon affiliate links.

NVIDIA RTX 50XX GPUs are officially released but already sold out, as expected. I won’t track their prices until I can find them at a “reasonable” price.

Even the 40xx series is unaffordable now.

RTX 4090 (24 GB): None at a reasonable price.

RTX 4080 SUPER (16 GB): None at a reasonable price.

RTX 4070 Ti SUPER (16 GB): None at a reasonable price.

RTX 4060 Ti (16 GB): None at a reasonable price.

The Salt

The Salt is my other newsletter that takes a more scientific approach. In The Salt, I primarily feature short reviews of recent papers (for free), detailed analyses of noteworthy publications, and articles centered on LLM evaluation.

This week in The Weekly Salt, I reviewed:

⭐How to Get Your LLM to Generate Challenging Problems for Evaluation

Ignore the KL Penalty! Boosting Exploration on Critical Tokens to Enhance RL Fine-Tuning

You Do Not Fully Utilize Transformer's Representation Capacity

Support The Kaitchup by becoming a Pro subscriber:

What You'll Get

Priority Support – Fast, dedicated assistance whenever you need it to fine-tune or optimize your LLM/VLM. I answer all your questions!

Lifetime Access to All the AI Toolboxes – Repositories containing Jupyter notebooks optimized for LLMs and providing implementation examples of AI applications.

Full Access to The Salt – Dive deeper into exclusive research content. Already a paid subscriber to The Salt? You’ll be refunded for the unused time!

Early Access to Research – Be the first to access groundbreaking studies and models by The Kaitchup.

30% Discount for Group Subscriptions – Perfect for teams and collaborators.

The Kaitchup’s Book – A comprehensive guide to LLM fine-tuning. Already bought it? You’ll be fully refunded!

All Benefits from Regular Kaitchup Subscriptions – Everything you already love, plus more. Already a paid subscriber? You’ll be refunded for the unused time!

That’s all for this week.

If you like reading The Kaitchup, consider sharing it with friends and coworkers. There is a 20% discount for group subscriptions (30% for Pro subscribers):

Have a nice weekend!