Hi Everyone,

In this edition of The Weekly Kaitchup:

Qwen2.5 Coder 32B: The Best LLM for Coding Tasks?

More Training Tokens => More Difficult to Quantize!

I've also updated the Qwen2.5 toolbox repository, adding comments to most notebooks and introducing two new ones for DPO full training and ORPO with LoRA.

Next week, I'll be releasing the Llama 3 toolbox.

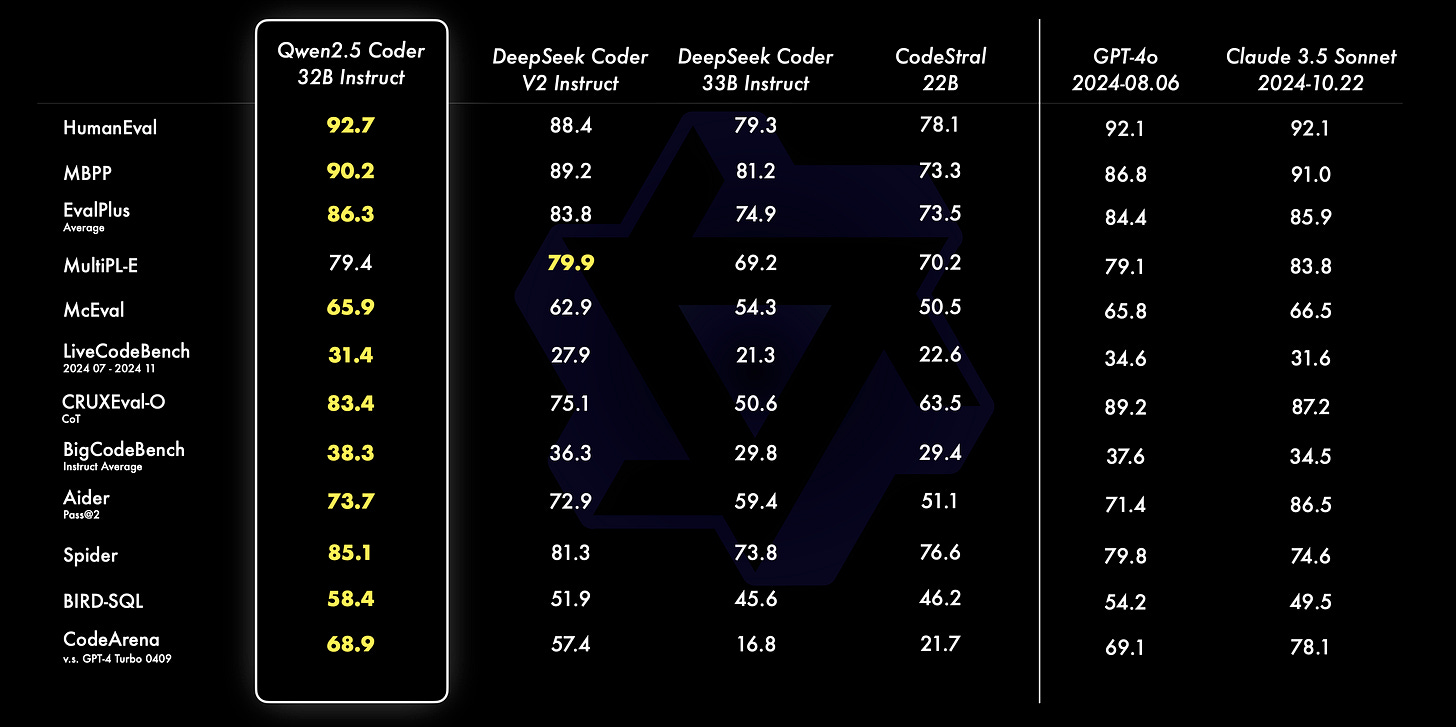

Qwen2.5 Coder 32B: The Best LLM for Coding Tasks?

Qwen2.5-Coder is a series of code-specific LLMs available in six sizes, ranging from 0.5 to 32 billion parameters. The 32B version was released this week.

Hugging Face Collection: Qwen2.5-Coder

The model is trained on a large dataset of 5.5 trillion tokens that includes source code, text-code grounding, and synthetic data.

It supports context lengths of up to 128,000 tokens and, according to evaluations published by the Qwen team, it outperforms other open models and GPT-4o in coding tasks.

Some details of the architecture of the 32B model:

Architecture: transformers with RoPE, SwiGLU, RMSNorm, and Attention QKV bias

Number of Parameters: 32.5B

Number of Parameters (Non-Embedding): 31.0B

Number of Layers: 64

Number of Attention Heads (GQA): 40 for Q and 8 for KV

Vocabulary size: 152064
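To make these numbers more concrete, here is a minimal back-of-the-envelope sketch. The vocabulary size, layer count, and head counts come from the list above. The hidden size (5120), untied input/output embeddings, and a bf16 KV cache are my assumptions and should be checked against the model's config.json. It shows that the embeddings roughly account for the gap between 32.5B and 31.0B parameters, and how much GQA shrinks the KV cache at long context.

```python
# Values taken from the architecture list above.
VOCAB_SIZE = 152_064
NUM_LAYERS = 64
NUM_Q_HEADS = 40
NUM_KV_HEADS = 8

# Assumptions (not stated above): hidden size, untied embeddings, bf16 cache.
HIDDEN_SIZE = 5_120
HEAD_DIM = HIDDEN_SIZE // NUM_Q_HEADS  # 128 under the assumed hidden size
BYTES_PER_VALUE = 2  # bf16


def embedding_params(tied: bool = False) -> int:
    """Parameters in the input embedding (and output head if untied)."""
    one_matrix = VOCAB_SIZE * HIDDEN_SIZE
    return one_matrix if tied else 2 * one_matrix


def kv_cache_bytes(num_tokens: int, kv_heads: int = NUM_KV_HEADS) -> int:
    """Bytes needed to cache keys and values for one sequence."""
    # Factor 2: one tensor for keys, one for values, per layer.
    return 2 * NUM_LAYERS * kv_heads * HEAD_DIM * BYTES_PER_VALUE * num_tokens


print(f"Embedding parameters: {embedding_params() / 1e9:.2f}B")
print(f"KV cache per token: {kv_cache_bytes(1) / 1024:.0f} KiB")
print(f"KV cache at 128,000 tokens (GQA, 8 KV heads): "
      f"{kv_cache_bytes(128_000) / 2**30:.2f} GiB")
print(f"KV cache at 128,000 tokens (if all 40 heads had their own KV): "
      f"{kv_cache_bytes(128_000, kv_heads=NUM_Q_HEADS) / 2**30:.2f} GiB")
```

Under these assumptions, the embeddings come to about 1.56B parameters, which is close to the 1.5B difference between the two parameter counts above, and GQA makes the KV cache 5x smaller than full multi-head attention would.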

The model is distributed with an Apache 2.0 license.

I recommend reading the technical report, especially the sections discussing the training data, post-training, and training policy:

Qwen2.5-Coder Technical Report

The model card shows how to run the model with Hugging Face Transformers.
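For reference, here is a minimal sketch of what this looks like. I assume the instruct variant, "Qwen/Qwen2.5-Coder-32B-Instruct", and a machine with enough GPU memory to load it; the system prompt and the helper names build_messages and generate are mine, for illustration only. Refer to the model card for the recommended usage.

```python
# Assumed model ID (instruct variant); check the Hugging Face collection.
MODEL_ID = "Qwen/Qwen2.5-Coder-32B-Instruct"


def build_messages(task: str) -> list:
    """Wrap a coding request in the chat format expected by the chat template."""
    return [
        {"role": "system", "content": "You are a helpful coding assistant."},
        {"role": "user", "content": task},
    ]


def generate(task: str, max_new_tokens: int = 512) -> str:
    """Load the model and generate an answer (downloads the weights on first use)."""
    # Imported here so that the helpers above work without Transformers installed.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID)
    model = AutoModelForCausalLM.from_pretrained(
        MODEL_ID,
        torch_dtype="auto",  # use the dtype stored in the checkpoint
        device_map="auto",   # spread layers over the available devices
    )

    # Turn the messages into a single prompt string with the chat template.
    prompt = tokenizer.apply_chat_template(
        build_messages(task), tokenize=False, add_generation_prompt=True
    )
    inputs = tokenizer([prompt], return_tensors="pt").to(model.device)
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)

    # Keep only the newly generated tokens, not the prompt.
    new_tokens = output_ids[0][inputs.input_ids.shape[1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)


# Example call (commented out because it downloads and loads the 32B model):
# print(generate("Write a quick sort function in Python."))
```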

More Training Tokens => More Difficult to Quantize!

A few days after the release of Llama 3, in April 2024, we observed that the models were more difficult to quantize, e.g., with GPTQ, than Llama 2.

One hypothesis to explain this was that Llama 3 was trained on such a large volume of tokens that each weight carried significantly more information. As a result, reducing their precision would have a greater impact.

This assumption appears to be correct: training a model on more data makes it more challenging to quantize accurately.

In a new paper, "Scaling Laws for Precision", researchers from CMU, Stanford, Databricks, Harvard, and MIT (a lot of big names!) examine the challenges associated with quantizing LLMs when they are extensively trained on large datasets.

The authors formulate scaling laws that predict how model performance degrades when weights, activations, or the attention KV cache are quantized at various precisions, both during pre-training and after training. The study finds that overtraining models on large datasets can increase their sensitivity to quantization at inference time, potentially leading to decreased performance.

This degradation follows a power-law relationship with the token-to-parameter ratio observed during pre-training, indicating a critical data size beyond which additional training may be detrimental if the model is intended for quantized deployment. The authors also introduce the concept of "effective parameter count" to explain how reducing precision affects a model's capacity, noting that while weights can be trained in low precision without significant issues, activations and key-value caches are more sensitive.
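To illustrate the shape of these relationships, here is a toy sketch. The functional forms follow my reading of the paper: a post-training quantization penalty that grows as a power of the number of training tokens, shrinks as a power of the number of parameters, and decays exponentially with the number of bits, plus an effective parameter count that saturates as weight precision increases. All constants below are placeholders that I chose for illustration; they are NOT the values fitted by the authors.

```python
import math

# Placeholder constants for illustration only (not the paper's fitted values).
C_T = 1.0
GAMMA_D = 0.5     # exponent on the number of training tokens
GAMMA_N = 0.5     # exponent on the number of parameters
GAMMA_POST = 1.0  # how quickly the penalty fades as precision increases
GAMMA_W = 2.0     # how quickly capacity saturates with weight precision


def ptq_degradation(n_params: float, n_tokens: float, bits: float) -> float:
    """Toy loss increase caused by post-training quantization to `bits` bits."""
    power_law = n_tokens**GAMMA_D / n_params**GAMMA_N
    return C_T * power_law * math.exp(-bits / GAMMA_POST)


def effective_params(n_params: float, weight_bits: float) -> float:
    """Toy 'effective parameter count' of a model trained with low-precision weights."""
    return n_params * (1.0 - math.exp(-weight_bits / GAMMA_W))


# Same 1B-parameter model, same 4-bit quantization, more and more training tokens.
for tokens in (20e9, 200e9, 2e12):
    ratio = tokens / 1e9
    penalty = ptq_degradation(1e9, tokens, bits=4)
    print(f"tokens/param = {ratio:>6.0f} -> toy penalty = {penalty:.3f}")
```

With any positive exponents, the penalty at a fixed precision keeps growing with the token-to-parameter ratio, which is the qualitative behavior described above.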

Note that this study is limited to small models (up to 1.7 billion parameters) and to GPTQ and AWQ quantization. While Llama 3 is indeed more difficult to quantize with GPTQ, bitsandbytes performed much better. It is possible that only some quantization techniques are significantly impacted by the number of training tokens.

GPU Cost Tracker

This section keeps track, week after week, of the cost of GPUs. It only covers consumer GPUs, from mid-range, e.g., RTX 4060, to high-end, e.g., RTX 4090.

While consumer GPUs have much less memory than GPUs dedicated to AI, they are more cost-effective, by far, for inference with small batches and fine-tuning LLMs with up to ~35B parameters using PEFT methods.
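As a rough sanity check of that ~35B figure, here is a minimal sketch that only counts the memory taken by the model weights. It assumes a plain bits-per-parameter estimate and ignores quantization constants, activations, the KV cache, adapter weights, and optimizer states, so real usage will be higher. The function name is mine.

```python
def weight_memory_gb(n_params_billion: float, bits_per_param: float) -> float:
    """Approximate memory (in GB) needed to store the weights alone."""
    return n_params_billion * bits_per_param / 8


for name, size in (("Qwen2.5-Coder 32B", 32.5), ("35B model", 35.0)):
    for bits in (16, 4):
        print(f"{name} at {bits}-bit: ~{weight_memory_gb(size, bits):.2f} GB")
```

At 4-bit, the weights of a 35B model take about 17.5 GB, which leaves some room on a 24 GB GPU for LoRA adapters and activations, while 16-bit weights (about 70 GB) are far out of reach.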

To get the prices of GPUs, I use Amazon.com. If the price of a GPU drops on Amazon, there is a high chance that it will also be lower at your favorite GPU provider. All the links in this section are Amazon affiliate links.

GPU Selection of the Week:

RTX 4090 (24 GB): GIGABYTE GV-N4090AERO OC-24GD GeForce RTX 4090 AERO

RTX 4080 SUPER (16 GB): GIGABYTE GeForce RTX 4080 Super WINDFORCE V2

RTX 4070 Ti SUPER (16 GB): GIGABYTE GeForce RTX 4070 Ti Super WINDFORCE

RTX 4060 Ti (16 GB): Asus Dual GeForce RTX™ 4060 Ti EVO OC Edition 16GB GDDR6

No change since last week!

The Salt

The Salt is my other newsletter that takes a more scientific approach. In The Salt, I primarily feature short reviews of recent papers (for free), detailed analyses of noteworthy publications, and articles centered on LLM evaluation.

This week, I reviewed:

⭐BitNet a4.8: 4-bit Activations for 1-bit LLMs

Self-Consistency Preference Optimization

"Give Me BF16 or Give Me Death"? Accuracy-Performance Trade-Offs in LLM Quantization

NeuZip: Memory-Efficient Training and Inference with Dynamic Compression of Neural Networks

That’s all for this week.

If you like reading The Kaitchup, consider sharing it with friends and coworkers. Group subscriptions get a 20% discount (30% for Pro subscribers).

Have a nice weekend!