Hi Everyone,

In this edition of The Weekly Kaitchup:

Llama 3.2: Small and Vision Language Models

Molmo: Open Multimodal Models

My book “LLMs on a Budget” is available for pre-order. Get all the details and a 50% discount here:

I’m planning to publish the first chapter around the 15th of October.

I’m also launching my "LLM Services". If you would like an LLM to be fine-tuned on your data, quantized, or evaluated, contact me!

You can find details about the tasks I accept in this Trello board:

Subscribe to The Kaitchup Pro and get a 20% discount for all services.

The Kaitchup now has more than 5000 subscribers. If you are a free subscriber, consider upgrading to paid to access all the notebooks (100+) and more than 150 articles.

Note: Consider switching to a yearly subscription if you are a monthly subscriber. It is 38% cheaper.

Llama 3.2: Small and Vision Language Models

The much-anticipated multimodal Llama models announced in April 2024 have finally arrived!

Hugging Face Collection: Llama 3.2

The Llama 3.2 Vision models, available with 11B and 90B parameters, can process prompts combining text and images, similar to other multimodal models like Qwen2-VL, Pixtral, and Phi-3.5 Vision. To incorporate image input, Meta trained adapter weights that integrate a pre-trained image encoder into the language model using cross-attention layers.
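To make the idea of cross-attention concrete, here is a minimal sketch in plain Python. It is not Meta's implementation: it uses a single head, omits the learned query/key/value projections and any other detail of the real adapter, and the function names are made up for illustration. It only shows the core mechanism: each text position attends over the image features and adds the result to its own representation.

```python
import math


def softmax(scores):
    # Numerically stable softmax over a list of floats.
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(text_states, image_features):
    """Simplified single-head cross-attention (illustrative only).

    text_states: list of vectors used as queries (one per text token).
    image_features: list of vectors used as both keys and values.
    All vectors are assumed to share the same dimension.
    """
    dim = len(text_states[0])
    outputs = []
    for query in text_states:
        # Scaled dot product between the text query and every image feature.
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in image_features
        ]
        weights = softmax(scores)
        # Weighted sum of the image features.
        attended = [
            sum(w * value[i] for w, value in zip(weights, image_features))
            for i in range(dim)
        ]
        # Residual connection: the text state is enriched, not replaced.
        outputs.append([q + a for q, a in zip(query, attended)])
    return outputs


text = [[1.0, 0.0], [0.0, 1.0]]
image = [[0.5, 0.5], [0.5, 0.5]]
print(cross_attention(text, image))
```

Because the image information enters through extra layers like this one, the original LLM weights can stay untouched while the adapter is trained.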

The training started from Llama 3.1, to which image adapters and encoders were added, followed by pretraining on extensive noisy image-text datasets, and later fine-tuning on high-quality in-domain data. Post-training involved steps like supervised fine-tuning, rejection sampling, and preference optimization, with synthetic data generation and safety mitigation to refine the model.

In other words, Llama 3.2 Vision is Llama 3.1 (8B for the 11B model, 70B for the 90B model) with a vision module on top of it. The LLM itself hasn’t been specialized for image-related text generation such as captioning, OCR, etc.

For those who read my recent review of Qwen2-VL (published in The Salt), you'll notice that Llama 3.2 Vision's architecture and training process are comparatively simple. It seems that Llama 3.2 Vision, especially the 90B model, underperforms compared to Qwen2-VL, with the latter's 72B model showing better results on public benchmarks. Meta hasn't released direct comparisons between the two models yet, and I'm waiting for independent fair benchmarks to provide a clearer picture of the vision capabilities of the models.

Artificial Analysis benchmarked the models for text tasks and found that the vision models performed the same as the text models, confirming that Meta didn’t modify the LLM weights.

For now, if you’re already using Qwen2-VL, there doesn’t seem to be a strong reason to switch to Llama 3.2 Vision.

Additionally, if you're based in Europe like I am, access to Llama 3.2 Vision is restricted. When I try to access it on Hugging Face, I see this message:

While using a VPN or accessing it from another Hugging Face repository might be possible, I’d rather avoid risking future license issues with Meta. Given that the Vision models don’t seem to surpass Qwen2-VL, I’ve decided not to write any dedicated articles on Llama 3.2 Vision.

Instead, I’ll focus on the new Llama 3.2 1B and 3B models, released alongside Llama 3.2 Vision. Although these aren’t multimodal, they seem to be the best-performing models in their respective categories. Stay tuned for a detailed review and fine-tuning guide next week! What Meta did to make these two small models is very interesting.

Molmo: Open Multimodal Models

In addition to Llama 3.2 Vision, we also got Molmo by Allen AI.

Hugging Face Collection: Molmo

Molmo is a new family of multimodal AI models that competes with proprietary systems across a range of academic benchmarks and human evaluations.

The model architecture consists of four main components:

Pre-Processor: Converts the input image into multiple scaled, cropped images.

ViT Image Encoder: Maps these images into vision tokens using a Vision Transformer (ViT).

Connector: Uses a Multi-Layer Perceptron (MLP) to project and pool vision tokens, matching them to the language model's input dimensions.

Decoder-Only Transformer LLM: Generates responses based on the processed image and text input.
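The connector is the least familiar of these four components, so here is a rough sketch of what "project and pool" can look like, in plain Python with toy dimensions. Several things are assumptions made for illustration and are not taken from the Molmo paper: pooling by simple averaging over groups of 4 tokens, pooling before projecting, a two-layer MLP with ReLU, the random weights, and all names and sizes.

```python
import random


def linear(vec, weights):
    # weights is a list of output rows, each as long as vec.
    return [sum(w * x for w, x in zip(row, vec)) for row in weights]


def make_weights(rng, out_dim, in_dim):
    # Small random weights standing in for trained parameters.
    return [[rng.uniform(-0.1, 0.1) for _ in range(in_dim)] for _ in range(out_dim)]


def pool(tokens, group_size):
    # Average each consecutive group of tokens to reduce the token count.
    pooled = []
    for start in range(0, len(tokens), group_size):
        group = tokens[start:start + group_size]
        pooled.append([sum(column) / len(group) for column in zip(*group)])
    return pooled


def connector(vision_tokens, w_in, w_out, group_size=4):
    # Pool the vision tokens, then map them to the LLM input dimension.
    projected = []
    for token in pool(vision_tokens, group_size):
        hidden = [max(0.0, h) for h in linear(token, w_in)]  # ReLU (assumption)
        projected.append(linear(hidden, w_out))
    return projected


rng = random.Random(0)
VIT_DIM, HIDDEN_DIM, LLM_DIM = 8, 16, 12  # toy sizes, not Molmo's
vision_tokens = [[rng.gauss(0, 1) for _ in range(VIT_DIM)] for _ in range(16)]
w_in = make_weights(rng, HIDDEN_DIM, VIT_DIM)
w_out = make_weights(rng, LLM_DIM, HIDDEN_DIM)

llm_inputs = connector(vision_tokens, w_in, w_out)
print(len(llm_inputs), len(llm_inputs[0]))  # 4 12
```

The point is the change of shape: 16 vision tokens of size 8 become 4 tokens of size 12, which the decoder-only LLM can consume alongside the text token embeddings.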

Molmo was trained using an open pipeline without relying on proprietary models. Starting from pre-trained vision encoders like CLIP and pre-trained language models, Molmo was trained on the PixMo dataset, a collection of high-quality, human-annotated image captions and pointing data obtained through speech-based descriptions.

Molmo's models rank competitively in evaluations on 11 academic benchmarks and in human preference assessments, with Molmo 72B achieving high scores. On average, it seems to outperform Qwen2-VL on the benchmarks that the authors selected.

As usual with Allen AI, everything is open: training data, evaluation pipeline, etc. Everything can be understood and reproduced!

source: Molmo and PixMo: Open Weights and Open Data for State-of-the-Art Multimodal Models

GPU Cost Tracker

This section keeps track, week after week, of the cost of GPUs. It only covers consumer GPUs, from mid-range, e.g., RTX 4060, to high-end, e.g., RTX 4090.

While consumer GPUs have much less memory than GPUs dedicated to AI, they are more cost-effective, by far, for inference with small batches and fine-tuning LLMs with up to ~35B parameters using PEFT methods.
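As a back-of-the-envelope check on that ~35B figure, the snippet below estimates the memory taken by the model weights alone at different precisions. It assumes the base model is quantized to 4 bits during fine-tuning (QLoRA-style), which is my assumption for this illustration. It ignores the adapter weights, optimizer states, activations, and quantization overhead, so real usage will be higher.

```python
def weight_memory_gb(num_params_billion, bits_per_param):
    # Memory used by the weights only, in GB (1 GB = 1e9 bytes).
    total_bytes = num_params_billion * 1e9 * bits_per_param / 8
    return total_bytes / 1e9


for bits in (16, 8, 4):
    print(f"35B parameters at {bits}-bit: {weight_memory_gb(35, bits):.1f} GB")
# 35B parameters at 16-bit: 70.0 GB
# 35B parameters at 8-bit: 35.0 GB
# 35B parameters at 4-bit: 17.5 GB
```

Only the 4-bit case leaves room on a 24 GB card for everything else that fine-tuning needs.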

To get the prices of GPUs, I use Amazon.com. If the price of a GPU drops on Amazon, there is a high chance that it will also be lower at your favorite GPU provider. All the links in this section are Amazon affiliate links.

RTX 4090 (24 GB): ASUS TUF Gaming GeForce RTX™ 4090 OG ($1,819.99, same card as last week)

RTX 4080 SUPER (16 GB): GIGABYTE GeForce RTX 4080 Super WINDFORCE V2 ($999.99, same card as last week)

RTX 4070 Ti SUPER (16 GB): MSI Gaming RTX 4070 Ti Super 16G Ventus ($779.99 (-$10.00); changed for a cheaper card)

RTX 4060 Ti (16 GB): MSI Gaming GeForce RTX 4060 Ti 16GB GDDR6 Extreme Clock ($429.99, same card as last week)
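Since memory is usually the limiting factor for LLMs, one simple way to compare these cards is the price per GB of VRAM. The short script below computes it from this week's prices. It says nothing about speed or memory bandwidth, so treat it as one indicator among others.

```python
# (price in USD, VRAM in GB), taken from the list above.
gpus = {
    "RTX 4090": (1819.99, 24),
    "RTX 4080 SUPER": (999.99, 16),
    "RTX 4070 Ti SUPER": (779.99, 16),
    "RTX 4060 Ti": (429.99, 16),
}


def price_per_gb(price, vram_gb):
    return price / vram_gb


for name, (price, vram_gb) in gpus.items():
    print(f"{name}: ${price_per_gb(price, vram_gb):.2f} per GB")

cheapest = min(gpus, key=lambda name: price_per_gb(*gpus[name]))
print("Lowest price per GB:", cheapest)
```

By this measure, the RTX 4060 Ti 16 GB is the cheapest way to get VRAM this week, while the RTX 4090 is the most expensive per GB but the only card here with 24 GB.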

The Salt

The Salt is my other newsletter that takes a more scientific approach. In The Salt, I primarily feature short reviews of recent papers (for free), detailed analyses of noteworthy publications, and articles centered on LLM evaluation.

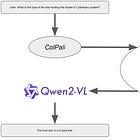

This week, I reviewed the Qwen2-VL paper to better understand why it performs so well.

I also briefly reviewed:

⭐Training Language Models to Self-Correct via Reinforcement Learning

Scaling Smart: Accelerating Large Language Model Pre-training with Small Model Initialization

Promptriever: Instruction-Trained Retrievers Can Be Prompted Like Language Models

That’s all for this week.

If you like reading The Kaitchup, consider sharing it with friends and coworkers (group subscriptions get a 20% discount, or 30% for Pro subscribers):

Have a nice weekend!
