Hi Everyone,

In this edition of The Weekly Kaitchup:

OLMoE: The First Really Open Source Mixture-of-Experts Model

GuideLLM: Evaluate Your Hardware for LLM Deployment

vLLM 0.6.0: 2.7x Higher Throughput and 5x Latency Reduction

My book “LLMs on a Budget” is now available for pre-order! Get all the details here:

33 copies have already been sold. Thanks a lot for your support! I’m planning to publish the first chapter around the 15th of October.

The Kaitchup now has more than 5,000 subscribers. If you are a free subscriber, consider upgrading to paid to access all the notebooks (100+) and more than 150 articles.

Note: If you are a monthly subscriber, consider switching to a yearly subscription. It is 38% cheaper.

Next week, I’ll publish my first exclusive article for The Kaitchup Pro subscribers. It will describe a new method that I’ve been working on for a few months to make LLMs smaller and faster while preserving their accuracy. New custom versions of Qwen2, Mistral NeMo Minitron, and Llama 3.1 will be released along with this article.

OLMoE: The First Really Open Source Mixture-of-Experts Model

Many LLM developers label their models as "open source" when releasing them under a permissive license. However, in many cases, "open source" merely refers to the availability of model weights and the code to run the model, without providing additional resources or transparency.

This is far from what “open source” means in, for instance, software development.

For an LLM to be open source, I believe the model and code to run it should be published, along with the training data, training scripts, and training recipe. Without this, we can’t reproduce the model.

Six months ago, the Allen Institute for AI released OLMo, the first LLMs with billions of parameters to be fully open source.

This week, they released OLMoE, the first open source 7B mixture-of-experts (MoE) model.

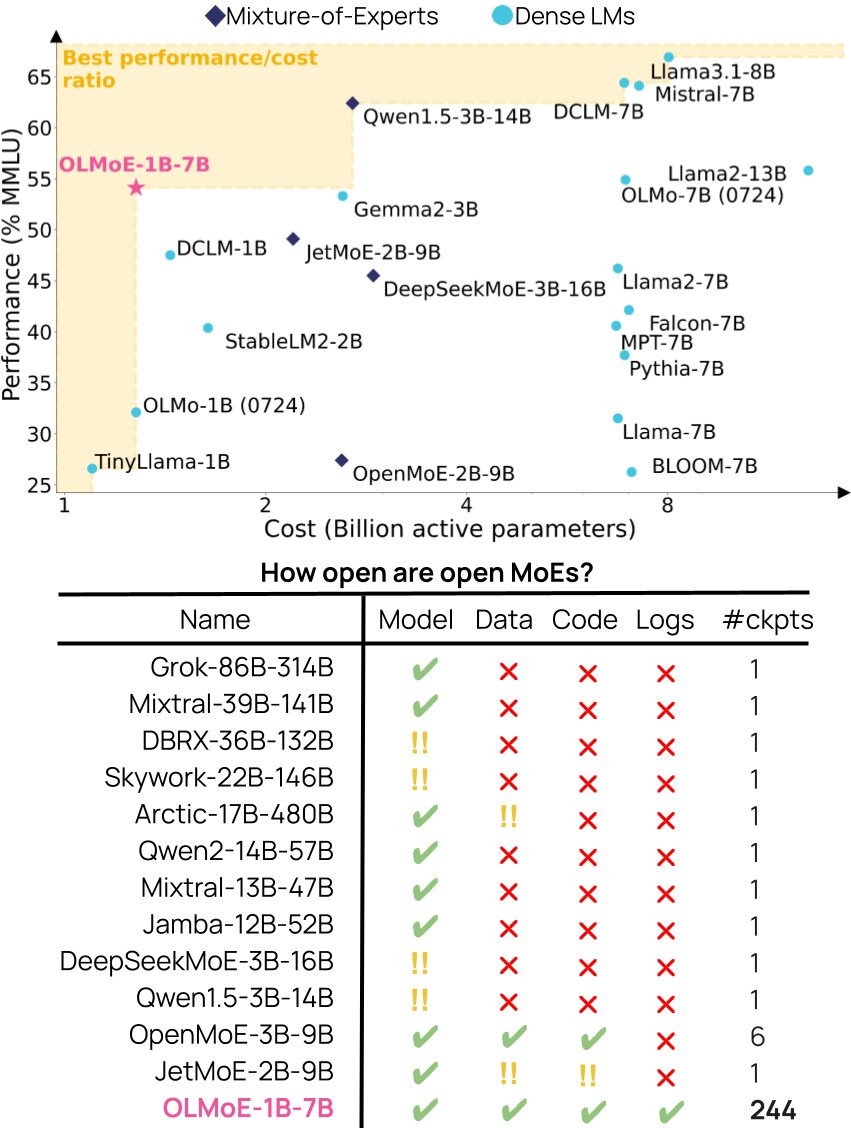

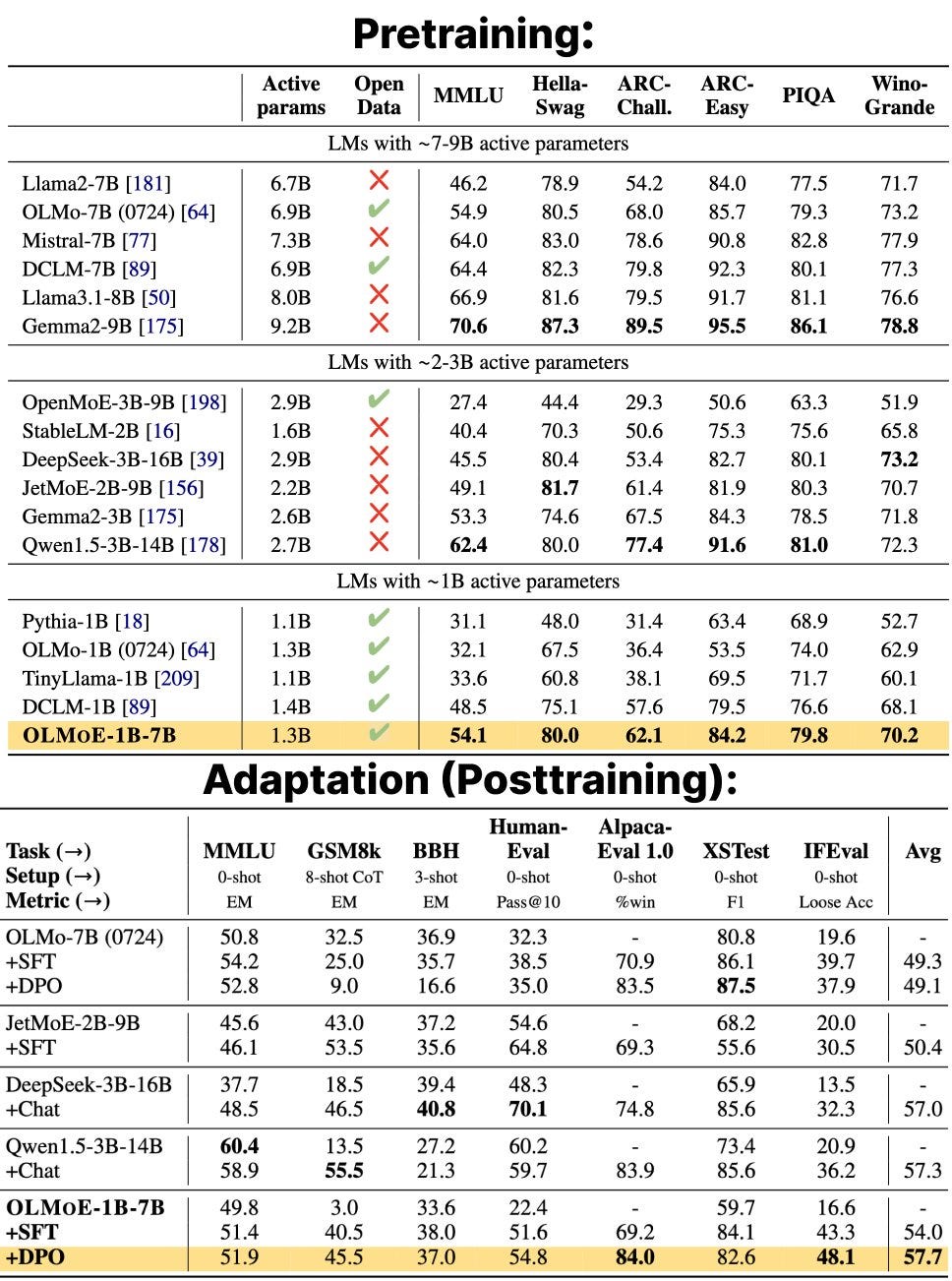

OLMoE has 64 expert subnetworks, of which only 8 are activated for each token at each layer during inference. Effectively, only 1B parameters are used at a time, which makes it a very fast model that performs as well as 7B models according to their evaluation.
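To make the "8 out of 64 experts" idea more concrete, here is a minimal sketch of top-k routing for a single token. It is not OLMoE's code: the random router logits and the renormalization of the selected weights are assumptions made for illustration, and only the numbers 64 and 8 come from the description above.

```python
import math
import random

NUM_EXPERTS = 64  # experts per MoE layer (from the description above)
TOP_K = 8         # experts activated per token (from the description above)


def route_token(router_logits, top_k=TOP_K):
    """Return (expert_index, weight) pairs for the top_k experts of one token."""
    # Softmax over the router logits of all experts (shifted for stability).
    shift = max(router_logits)
    exps = [math.exp(x - shift) for x in router_logits]
    total = sum(exps)
    probs = [e / total for e in exps]

    # Keep only the top_k most probable experts.
    ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    chosen = ranked[:top_k]

    # Assumption: renormalize the kept weights so they sum to 1.
    # Real implementations may or may not do this.
    norm = sum(probs[i] for i in chosen)
    return [(i, probs[i] / norm) for i in chosen]


# Hypothetical router output for one token: random logits, fixed seed.
rng = random.Random(0)
logits = [rng.gauss(0.0, 1.0) for _ in range(NUM_EXPERTS)]
selected = route_token(logits)

print("experts used for this token:", sorted(i for i, _ in selected))
```

Only the selected experts would run their feed-forward computation for this token, which is why the number of active parameters is much lower than the total.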

They released everything:

Pretraining Checkpoints, Code, Data and Logs.

SFT (Supervised Fine-Tuning) Checkpoints, Code, Data and Logs.

DPO/KTO (Direct Preference Optimization/Kahneman-Tversky Optimization) Checkpoints, Preference Data, DPO Code, KTO Code, and Logs.

If you are curious to know how MoE models work, I wrote about it when Mistral AI released their first version of Mixtral:

GuideLLM: Evaluate Your Hardware for LLM Deployment

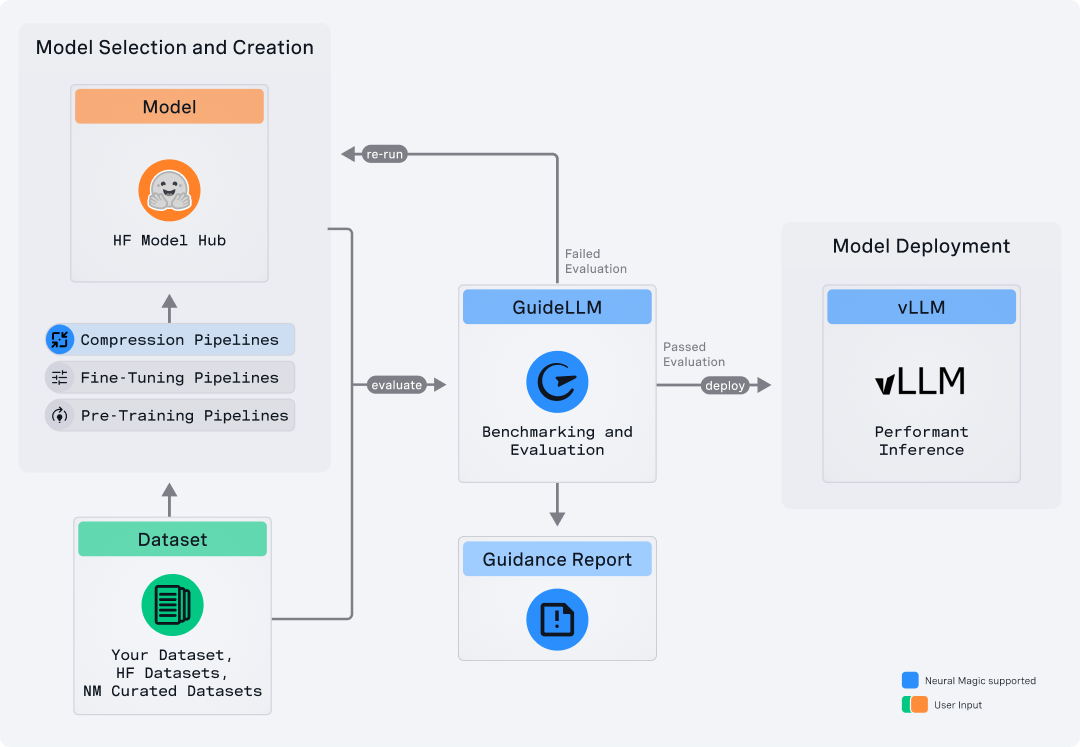

Neural Magic released GuideLLM:

GitHub: neuralmagic/guidellm (Apache 2.0 license)

It is a tool based on vLLM designed for evaluating and optimizing the deployment of LLMs. By simulating real-world inference workloads, it helps users assess the performance, resource requirements, and cost implications of deploying LLMs on different hardware configurations. The goal is to enable more efficient, scalable, and cost-effective LLM inference.

GuideLLM also helps in resource optimization, allowing users to identify the most suitable hardware configurations for running models efficiently.

Moreover, the tool provides cost estimation features that help users evaluate the financial impact of different deployment strategies.

To the best of my knowledge, this is the most complete tool of this kind. It’s open source and it seems easy to use. I’ll probably write an article next week to show how it works and to explain the reports it generates.

vLLM 0.6.0: 2.7x Higher Throughput and 5x Latency Reduction

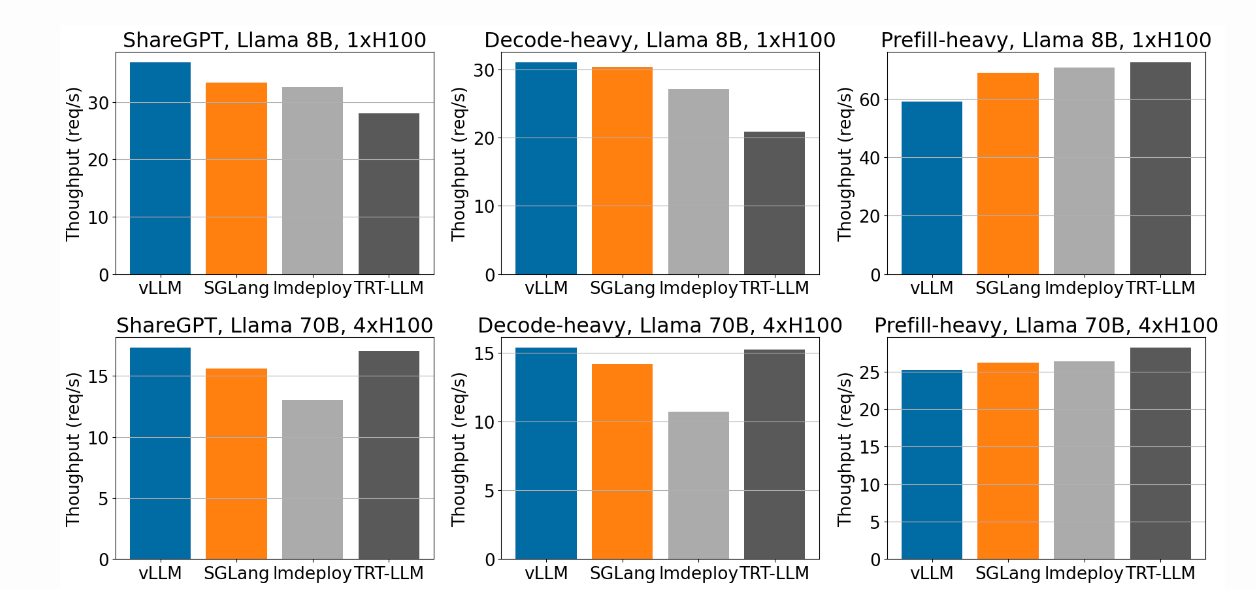

This is a very impressive update for vLLM, which is now faster than TensorRT-LLM for decoding on H100 GPUs:

It’s also 2.7x faster than the previous version:

Initially, vLLM was optimized for large models on GPUs with limited memory, like Llama 2 13B on NVIDIA A100-40G. However, as faster GPUs with larger memory (e.g., NVIDIA H100) become available, CPU-related bottlenecks have become more significant.

Several optimizations were introduced in vLLM v0.6.0 to reduce CPU overhead and improve GPU utilization:

Process Separation: The API server and inference engine were split into separate processes.

Batch Scheduling: Multi-step scheduling was introduced to reduce GPU idle time by preparing multiple steps in advance. This change boosted the inference throughput by 28% when running Llama 70B models on 4xH100.

Asynchronous Output Processing: Output processing was made asynchronous, allowing CPU work to overlap with GPU computation. This change improved GPU utilization and reduced time per output token by 8.7%.

Additional improvements were made, such as caching objects to reduce CPU overhead, using non-blocking operations for data transfer, and introducing fast code paths for simple workloads.
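To see why reducing CPU overhead matters more on fast GPUs, here is a toy timing model of one decoding step. It is only a back-of-the-envelope sketch, not vLLM's scheduler: all the millisecond values are hypothetical, and the model assumes that scheduling cost is amortized evenly over the steps prepared in advance and that output processing overlaps fully with GPU work.

```python
def baseline_ms(gpu_ms, sched_ms, output_ms):
    """The GPU idles while the CPU schedules and processes outputs."""
    return gpu_ms + sched_ms + output_ms


def multi_step_ms(gpu_ms, sched_ms, output_ms, num_steps):
    """Scheduling is done once for num_steps GPU steps (amortized cost)."""
    return gpu_ms + sched_ms / num_steps + output_ms


def async_output_ms(gpu_ms, sched_ms, output_ms):
    """Output processing overlaps with GPU computation."""
    return max(gpu_ms, output_ms) + sched_ms


def combined_ms(gpu_ms, sched_ms, output_ms, num_steps):
    """Both optimizations applied together."""
    return max(gpu_ms, output_ms) + sched_ms / num_steps


# Hypothetical per-step costs in milliseconds.
GPU, SCHED, OUTPUT, STEPS = 20.0, 4.0, 2.0, 8

print("baseline:    ", baseline_ms(GPU, SCHED, OUTPUT))
print("multi-step:  ", multi_step_ms(GPU, SCHED, OUTPUT, STEPS))
print("async output:", async_output_ms(GPU, SCHED, OUTPUT))
print("combined:    ", combined_ms(GPU, SCHED, OUTPUT, STEPS))
```

In this model, the faster the GPU step, the larger the share of each step spent on CPU work, so the relative gain from hiding that work grows.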

Note that most of these improvements seem to target GPUs with a large amount of memory. The vLLM team doesn’t provide any information on possible performance improvements for GPUs with less VRAM.

source: vLLM v0.6.0: 2.7x Throughput Improvement and 5x Latency Reduction

GPU Cost Tracker

This section keeps track, week after week, of the cost of GPUs. It only covers consumer GPUs, from mid-range, e.g., RTX 4060, to high-end, e.g., RTX 4090.

While consumer GPUs have much less memory than GPUs dedicated to AI, they are more cost-effective, by far, for inference with small batches and fine-tuning LLMs with up to ~35B parameters using PEFT methods.
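As a rough sanity check of the ~35B figure, here is a small calculation of the memory taken by the model weights alone. It assumes that the base model is quantized to 4-bit, as in QLoRA-style PEFT (an assumption, not stated above), and it ignores activations, adapter weights, optimizer states, and quantization overhead, so real usage is higher.

```python
def weight_memory_gb(num_params_billion: float, bits_per_param: float) -> float:
    """Memory used by the weights alone, in GB (1 GB = 1e9 bytes)."""
    num_bytes = num_params_billion * 1e9 * bits_per_param / 8
    return num_bytes / 1e9


GPU_MEMORY_GB = 24  # RTX 4090

for bits in (16, 8, 4):
    size = weight_memory_gb(35, bits)
    verdict = "fits" if size < GPU_MEMORY_GB else "does not fit"
    print(f"35B parameters at {bits}-bit: {size:.1f} GB ({verdict} in {GPU_MEMORY_GB} GB)")
```

Under these assumptions, only the 4-bit version leaves some room on a 24 GB GPU for everything else that fine-tuning requires.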

To get the prices of GPUs, I use Amazon.com. If the price of a GPU drops on Amazon, there is a high chance that it will also be lower at your favorite GPU provider. All the links in this section are Amazon affiliate links.

RTX 4090 (24 GB): GIGABYTE GV-N4090AERO OC-24GD GeForce RTX 4090 AERO ($1,751.00 (+$52.00), same card as last week)

RTX 4080 SUPER (16 GB): GIGABYTE GeForce RTX 4080 Super WINDFORCE V2 ($999.99, same card as last week)

RTX 4070 Ti SUPER (16 GB): ZOTAC GAMING GeForce RTX 4070 Ti SUPER Trinity Black Edition ($789.99 (-$10.00), same card as last week)

RTX 4060 Ti (16 GB): PNY GeForce RTX™ 4060 Ti 16GB VERTO™ ($439.99 (-$10.00), changed for a cheaper card)

Evergreen Kaitchup

In this section of The Weekly Kaitchup, I mention which of the Kaitchup’s AI notebook(s) and articles I have checked and updated, with a brief description of what I have done.

In June, llama.cpp received a major update breaking most applications based on it. It changed the names of binaries and the names of some scripts.

I have updated my notebook and article on “GGUF quantization”. The GGUF quantization notebook works again. Note that GGUF models are not as accurate as other quantized models but they have the advantage of being extremely easy to deploy as they are single files and optimized to run on a CPU.

Notebook: #49 GGUF Your LLM with llama.cpp and Run It on Your CPU

Next week, I’ll publish a follow-up article explaining how to quantize models with llama.cpp more accurately.

The Salt

The Salt is my other newsletter that takes a more scientific approach. In The Salt, I primarily feature short reviews of recent papers (for free), detailed analyses of noteworthy publications, and articles centered on LLM evaluation.

Published this week in The Salt:

Q-GaLore: Pre-Train 7B Parameter LLMs from Scratch on a 16 GB GPU

Pre-training large language models (LLMs) from scratch is extremely expensive. It requires professional GPUs with huge amounts of memory for many weeks. To make it possible for consumer hardware, we must first reduce memory consumption.

I also reviewed:

⭐The Mamba in the Llama: Distilling and Accelerating Hybrid Models

Efficient LLM Scheduling by Learning to Rank

Efficient Detection of Toxic Prompts in Large Language Models

That’s all for this week.

If you like reading The Kaitchup, consider sharing it with friends and coworkers (there is a 20% (or 30% for Pro subscribers) discount for group subscriptions):

Have a nice weekend!


vLLM 0.6.0 now supports tool_choice auto and a specific template to run Mistral function calls exactly the same way as La Plateforme. You only need to use these args:

--enable-auto-tool-choice

--tool-call-parser mistral

--chat-template example/tool_chat_template_mistral.jinja
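For illustration, here is a sketch of the kind of request body a client could send to a server started with these args, assuming vLLM's OpenAI-compatible chat completions endpoint. The model name and the get_weather function are hypothetical placeholders. The snippet only builds and prints the JSON and does not contact any server.

```python
import json


def build_tool_request(model: str, question: str) -> str:
    """Build a chat completion request body that lets the model pick a tool."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": question}],
        # OpenAI-style tool definition; get_weather is a hypothetical function.
        "tools": [
            {
                "type": "function",
                "function": {
                    "name": "get_weather",
                    "description": "Get the current weather for a city.",
                    "parameters": {
                        "type": "object",
                        "properties": {"city": {"type": "string"}},
                        "required": ["city"],
                    },
                },
            }
        ],
        # "auto" lets the model decide whether to call a tool.
        "tool_choice": "auto",
    }
    return json.dumps(payload, indent=2)


# Hypothetical model name; replace it with the model served by your server.
body = build_tool_request("my-mistral-model", "What is the weather in Paris?")
print(body)
```

The resulting JSON would be sent as a POST request to the server's /v1/chat/completions route.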

Thanks for sharing and looking forward to your review of GuideLLM!