Hi Everyone,

In this edition of The Weekly Kaitchup:

Sparse Llama: 70% Smaller, 3x Faster, and Full Accuracy

SUPRA: Turn a Transformer Model into an RNN Model

NVIDIA TensorRT Model Optimizer

The Kaitchup now has 3,521 subscribers. Thanks a lot for your support!

If you are a free subscriber, consider upgrading to a paid subscription to access all the notebooks (70+) and more than 100 articles.

I’m offering a 35% discount for the yearly subscription to The Salt, my other AI newsletter. Available until May 28th.

Sparse Llama: 70% Smaller, 3x Faster, and Full Accuracy

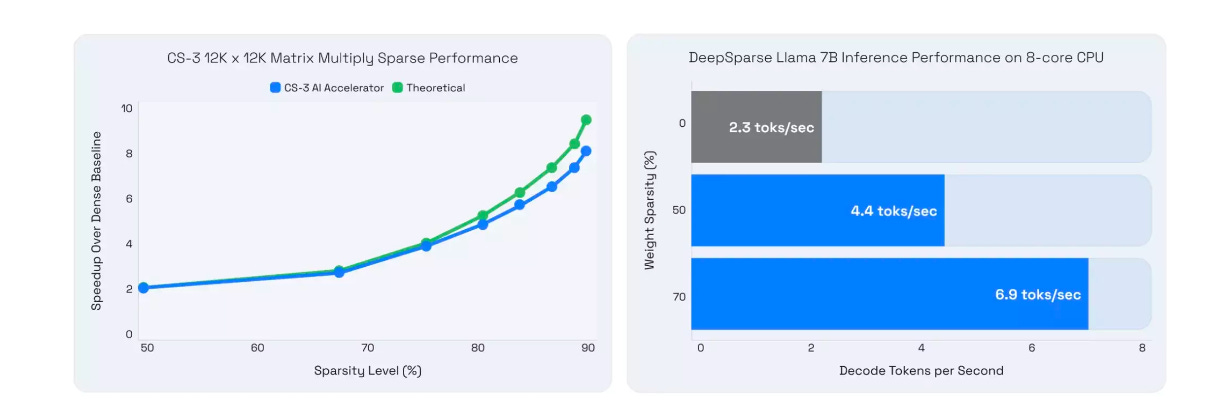

Cerebras and Neural Magic have combined pruning techniques and sparse pre-training to reduce parameters by up to 70% without compromising accuracy.

For instance, they managed to sparsify Llama 2 to 50-70% sparsity while maintaining full accuracy on challenging downstream tasks. Neural Magic’s DeepSparse engine also delivers up to 3x faster inference than the dense models.

Sparsity in deep learning aims to reduce computational and memory costs. While pruning has effectively reduced the size of computer vision models, it has not yielded similar results for LLMs. LLMs have a large number of parameters, and pruning can disrupt the delicate balance between them, leading to significant accuracy loss in tasks such as chat and coding. This complexity is why major LLMs rarely exploit sparsity.
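To make “70% sparsity” concrete, here is a minimal NumPy sketch of unstructured magnitude pruning: the 70% of weights with the smallest absolute value in a matrix are set to zero. Magnitude pruning is the simplest pruning criterion and is used here only as an assumption for illustration; it is not the method used by Cerebras and Neural Magic, and the random matrix stands in for a real layer.

```python
import numpy as np

def magnitude_prune(weights: np.ndarray, sparsity: float) -> tuple[np.ndarray, np.ndarray]:
    """Zero out the `sparsity` fraction of weights with the smallest magnitude.

    Returns the pruned weights and the binary mask (1 = kept, 0 = pruned).
    """
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    n_prune = int(round(sparsity * weights.size))
    # Sort all weights by absolute value and mark the n_prune smallest for removal
    order = np.argsort(np.abs(weights).ravel(), kind="stable")
    mask = np.ones(weights.size, dtype=weights.dtype)
    mask[order[:n_prune]] = 0
    mask = mask.reshape(weights.shape)
    return weights * mask, mask

# Hypothetical 64x64 layer with random weights
rng = np.random.default_rng(0)
w = rng.normal(size=(64, 64)).astype(np.float32)
pruned, mask = magnitude_prune(w, sparsity=0.7)
print(f"sparsity: {1 - mask.mean():.2f}")
```

In an LLM, this kind of one-shot pruning applied to every layer is exactly what tends to hurt accuracy, which is why additional training is needed afterward.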

While sparsifying a Llama model to 70% is impressive, maintaining the model’s accuracy through the process is very complex: the sparse model has to undergo a follow-up pre-training on a new dataset.
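During this follow-up training, the sparsity pattern has to be preserved; otherwise the pruned weights would become nonzero again. Here is a toy sketch of that constraint, assuming a single linear layer trained with plain gradient descent to match a dense “teacher” layer: the binary mask is applied to every update so that pruned weights stay exactly zero. The regression task, the learning rate, and the choice of mask from the initial weight magnitudes are all hypothetical; the real recipe continues pre-training a full LLM on a large dataset.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy layer y = x @ w; a dense "teacher" layer provides the targets (hypothetical setup)
x = rng.normal(size=(256, 32))
w_teacher = rng.normal(size=(32, 8))
y = x @ w_teacher

# Start from a 70%-sparse student: keep the 30% largest-magnitude initial weights
w_init = rng.normal(size=(32, 8))
threshold = np.quantile(np.abs(w_init), 0.7)
mask = (np.abs(w_init) > threshold).astype(x.dtype)
w = w_init * mask

def loss(w):
    # 0.5 * mean over samples of the squared error summed over outputs
    return 0.5 * np.mean(np.sum((x @ w - y) ** 2, axis=1))

initial_loss = loss(w)
lr = 0.05
for step in range(500):
    grad = x.T @ (x @ w - y) / len(x)  # gradient of loss(w) with respect to w
    w -= lr * grad * mask              # masked update: pruned weights never move

final_loss = loss(w)
print(f"loss: {initial_loss:.2f} -> {final_loss:.2f}")
print(f"fraction of zero weights: {(w == 0).mean():.2f}")
```

The loss goes down while the zeros stay in place, but a 30%-dense student cannot fully match a dense teacher on its own: recovering accuracy at scale is what makes the real process expensive.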

Consequently, sparsifying an LLM is costly. They maintain a model zoo of already sparsified models, but the conversion takes time, which is why more recent LLMs, such as Llama 3, are not yet available as sparse models.

For efficient inference with sparse models, they modified vLLM:

GitHub: neuralmagic/nm-vllm

They also published a technical report describing their approach:

Enabling High-Sparsity Foundational Llama Models with Efficient Pretraining and Deployment

SUPRA: Turn a Transformer Model into an RNN Model

Attention-free models such as Mamba and RWKV are much more efficient for inference. However, they also have a reputation for being extremely difficult to train. For instance, for The Salt, I explored and trained Jamba, a hybrid model using Mamba, and found that it learns extremely slowly during fine-tuning.

With Scalable UPtraining for Recurrent Attention (SUPRA), training attention-free models is now much simpler. SUPRA doesn’t pre-train a model from scratch but relies on a pre-trained transformer model to initialize the training.

SUPRA turns the model into an RNN and “up-trains” it on new tokens. The authors applied the technique to Mistral 7B, converting it into an RNN and then up-training it on 100B tokens. The resulting model significantly outperforms RWKV-5, an attention-free model trained on many more tokens.
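A transformer can be turned into an RNN because, once softmax is replaced by a kernel feature map φ, causal attention can be computed either over the whole sequence at once (convenient for training) or token by token with a fixed-size state (an RNN, convenient for inference). Below is a minimal NumPy sketch of this generic linear-attention equivalence. The feature map φ(x) = elu(x) + 1 comes from earlier work on linear transformers and is an assumption here; SUPRA’s actual feature map and normalization may differ.

```python
import numpy as np

def feature_map(x):
    # phi(x) = elu(x) + 1 keeps features positive (assumed choice, see above)
    return np.where(x > 0, x + 1.0, np.exp(np.minimum(x, 0.0)))

def linear_attention_parallel(q, k, v):
    """Causal linear attention over the whole sequence at once (training-style form)."""
    fq, fk = feature_map(q), feature_map(k)
    scores = np.tril(fq @ fk.T)  # (T, T): token t only attends to tokens <= t
    return (scores @ v) / scores.sum(axis=1, keepdims=True)

def linear_attention_recurrent(q, k, v):
    """Same outputs computed token by token with a fixed-size state, like an RNN."""
    state = np.zeros((k.shape[1], v.shape[1]))  # running sum of phi(k_s) v_s^T
    norm = np.zeros(k.shape[1])                 # running sum of phi(k_s)
    outputs = []
    for fq_t, fk_t, v_t in zip(feature_map(q), feature_map(k), v):
        state += np.outer(fk_t, v_t)
        norm += fk_t
        outputs.append((fq_t @ state) / (fq_t @ norm))
    return np.stack(outputs)

rng = np.random.default_rng(0)
T, d = 6, 4
q, k, v = (rng.normal(size=(T, d)) for _ in range(3))
print(np.allclose(linear_attention_parallel(q, k, v), linear_attention_recurrent(q, k, v)))  # True
```

The recurrent form only keeps a d_k × d_v state, so its memory does not grow with the sequence length, unlike the KV cache of a transformer.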

They released a Mistral RNN made with SUPRA on the Hugging Face Hub.

The code to turn a Transformer model into an RNN is available here:

GitHub: TRI-ML/linear_open_lm

The method is described in this paper:

Linearizing Large Language Models

NVIDIA TensorRT Model Optimizer

NVIDIA released a new library for quantizing models:

GitHub: NVIDIA TensorRT Model Optimizer

The NVIDIA TensorRT Model Optimizer is a library that integrates advanced optimization techniques, such as quantization and sparsity, to compress models. It supports PyTorch and ONNX models.

For quantization, Model Optimizer supports a variety of formats, such as FP8, INT8, and INT4, and algorithms such as SmoothQuant, AWQ, and Double Quantization. It supports both post-training quantization (PTQ) and quantization-aware training (QAT).
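As an illustration of one of these algorithms, here is a minimal NumPy sketch of the smoothing step behind SmoothQuant: per-channel factors s_j = max|X_j|^α / max|W_j|^(1−α) move quantization difficulty from the activations (which have outlier channels) to the weights, without changing the layer output before quantization. The toy data with one outlier channel, α = 0.5, and the simple per-tensor INT8 fake quantizer are assumptions; this is not Model Optimizer’s implementation or API.

```python
import numpy as np

def smoothquant_scales(x, w, alpha=0.5):
    """Per-input-channel factors s_j = max|X_j|^alpha / max|W_j|^(1 - alpha).

    x: activations (tokens, in_features); w: weights (in_features, out_features), y = x @ w.
    """
    act_max = np.abs(x).max(axis=0)  # max over tokens, per input channel
    w_max = np.abs(w).max(axis=1)    # max over output features, per input channel
    return act_max**alpha / w_max**(1 - alpha)

def fake_quant_int8(t):
    """Symmetric per-tensor absmax INT8 quantization, returned dequantized."""
    scale = np.abs(t).max() / 127.0
    return np.clip(np.round(t / scale), -127, 127) * scale

# Hypothetical toy layer with one outlier activation channel
rng = np.random.default_rng(0)
x = rng.normal(size=(128, 16))
x[:, 3] *= 50.0
w = rng.normal(size=(16, 8))
y_ref = x @ w

s = smoothquant_scales(x, w, alpha=0.5)
x_s = x / s           # divide each activation channel j by s_j
w_s = w * s[:, None]  # multiply the matching weight row j by s_j, so x_s @ w_s == x @ w

err_plain = np.abs(fake_quant_int8(x) @ fake_quant_int8(w) - y_ref).mean()
err_smooth = np.abs(fake_quant_int8(x_s) @ fake_quant_int8(w_s) - y_ref).mean()
print(f"mean abs error, plain W8A8: {err_plain:.3f} | smoothed W8A8: {err_smooth:.3f}")
```

Without smoothing, the outlier channel sets a large quantization step for the whole activation tensor, which erases most of the information in the other channels; after smoothing, the W8A8 product should be noticeably closer to the full-precision output.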

It also supports AWQ W4A8 quantization, i.e., the model’s weights are 4-bit and the activations (tensors created during inference) are 8-bit. Compared with 16-bit activations, this halves the memory consumed by activations during inference. W4A8 is still “experimental” but looks promising.
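The memory savings of W4A8 come down to simple arithmetic. Here is a small sketch using hypothetical sizes (a 7B-parameter model and a single hidden-state tensor of 4,096 tokens by 4,096 dimensions) and ignoring the extra storage needed for quantization scales:

```python
def tensor_bytes(num_elements: int, bits: int) -> int:
    """Storage for a tensor at a given bit width (ignores quantization scales/zero-points)."""
    return num_elements * bits // 8

# Hypothetical sizes for illustration
n_params = 7_000_000_000
batch, seq_len, hidden = 1, 4096, 4096
n_act = batch * seq_len * hidden

for name, w_bits, a_bits in [("W16A16", 16, 16), ("W4A16", 4, 16), ("W4A8", 4, 8)]:
    w_gb = tensor_bytes(n_params, w_bits) / 1e9
    a_mib = tensor_bytes(n_act, a_bits) / 2**20
    print(f"{name}: weights {w_gb:.1f} GB, one activation tensor {a_mib:.0f} MiB")

# Prints:
# W16A16: weights 14.0 GB, one activation tensor 32 MiB
# W4A16: weights 3.5 GB, one activation tensor 32 MiB
# W4A8: weights 3.5 GB, one activation tensor 16 MiB
```

Going from W4A16 to W4A8 leaves the weight memory unchanged and halves the activation memory.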

The library has a permissive license (commercial use allowed).

The Salt

The Salt is my other newsletter that takes a more scientific approach. In The Salt, I primarily feature short reviews of recent papers (for free), detailed analyses of noteworthy publications, and articles centered on LLM evaluation.

This week in The Salt, I briefly reviewed:

Lory: Fully Differentiable Mixture-of-Experts for Autoregressive Language Model Pre-training

You Only Cache Once: Decoder-Decoder Architectures for Language Models

Large Language Models are Inconsistent and Biased Evaluators

⭐Fishing for Magikarp: Automatically Detecting Under-trained Tokens in Large Language Models

That’s all for this week.

If you like reading The Kaitchup, consider sharing it with friends and coworkers:

Have a nice weekend!