Hi Everyone,

In this edition of The Weekly Kaitchup:

Gemma: Open LLMs by Google

COSMO 1B: A Tiny LLM by Hugging Face

LoRA+: LoRA but with Two Learning Rates

The Kaitchup now has 2,137 subscribers. Thanks a lot for your support!

If you are a free subscriber, consider upgrading to paid to access all the notebooks and articles. There is a 7-day trial that you can cancel anytime.

Gemma: Open LLMs by Google

Google released two open LLMs: Gemma 2B and 7B. They are available on the Hugging Face Hub as base and instruct LLMs:

Gemma collection (special Google license, OK for commercial use)

They have an architecture similar to Llama 2’s but an unusually large vocabulary of 256,000 tokens. That’s 8x more than Llama 2 (32,000 tokens) and about 100k more than Qwen1.5.
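A larger vocabulary also means a larger embedding matrix. Here is a back-of-the-envelope count of the embedding parameters. It assumes a hidden size of 3,072 for Gemma 7B (as listed in the Gemma technical report) and 4,096 for Llama 2 7B; the helper function is hypothetical and only for illustration.

```python
def embedding_params(vocab_size: int, hidden_size: int) -> int:
    """Hypothetical helper: parameters in a vocab_size x hidden_size embedding matrix."""
    return vocab_size * hidden_size

# Assumed hidden sizes: 3072 for Gemma 7B, 4096 for Llama 2 7B.
gemma_7b = embedding_params(256_000, 3072)
llama2_7b = embedding_params(32_000, 4096)

print(f"Vocabulary ratio: {256_000 / 32_000:.0f}x")                 # 8x
print(f"Gemma 7B embeddings:   {gemma_7b / 1e6:.0f}M parameters")   # 786M
print(f"Llama 2 7B embeddings: {llama2_7b / 1e6:.0f}M parameters")  # 131M
print(f"Embedding ratio: {gemma_7b / llama2_7b:.1f}x")              # 6.0x
```

Even with a smaller hidden size, Gemma 7B’s embedding matrix would be about 6x larger than Llama 2 7B’s under these assumptions.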

Another striking difference from other 7B LLMs is that Gemma was trained on much more data: 6 trillion tokens, 3x more than Llama 2. Since Llama 2 7B hadn’t reached saturation after training on 2T tokens, training for longer makes sense.

Google also published a technical report. As is usual for such reports, it contains few technical details but plenty of benchmark scores.

Gemma: Open Models Based on Gemini Research and Technology

Gemma 7B seems to outperform all other 7B models, including Mistral 7B and Qwen1.5 7B. However, while Gemma 2B also performs well in its category, Microsoft’s Phi-1.5 seems to remain better.

I’m writing an article for next week about the Gemma models and how to use them.

COSMO 1B: A Tiny LLM by Hugging Face

Hugging Face had many idle H100 GPUs for one night, so they decided to use them to train a 1.8-billion-parameter LLM.

Like the Phi models, COSMO 1B is trained entirely on synthetic data, generated by Mixtral-8x7B-Instruct. Hugging Face released the datasets they used to train the model.

As for the model itself, it is available here:

HuggingFaceTB/cosmo-1b (Apache 2.0 license)

The model card gives the training details and some information about the model’s performance.

It doesn’t look good for Cosmo, which seems to underperform models of similar or smaller size, such as TinyLlama. Note, however, that Cosmo was trained for only 15 hours on 180B tokens, about 16.7 times fewer than TinyLlama’s 3T tokens. Its training loss was still decreasing.
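The token ratio and the implied training speed can be checked with a few lines of arithmetic. This sketch uses the 180B tokens and 15 hours given above and assumes TinyLlama was trained on 3 trillion tokens.

```python
# From the text: Cosmo saw 180B tokens in 15 hours of training.
cosmo_tokens = 180e9
training_seconds = 15 * 3600
# Assumption: TinyLlama 1.1B was trained on 3 trillion tokens.
tinyllama_tokens = 3e12

ratio = tinyllama_tokens / cosmo_tokens       # how many more tokens TinyLlama saw
throughput = cosmo_tokens / training_seconds  # average tokens processed per second

print(f"TinyLlama saw {ratio:.1f}x more tokens")             # 16.7x
print(f"Cosmo throughput: {throughput / 1e6:.2f}M tokens/s")  # 3.33M tokens/s
```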

LoRA+: LoRA but with Two Learning Rates

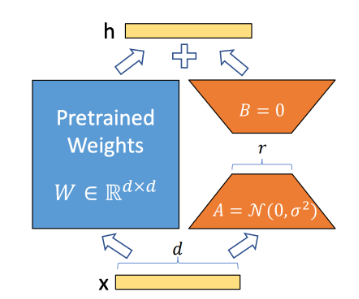

LoRA fine-tunes an adapter, i.e., a set of trainable parameters on top of a frozen base LLM. This adapter is composed of two matrices usually denoted A and B (orange blocks in the figure below):

All the parameters of A and B are trained using a single learning rate.
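Here is a minimal PyTorch sketch of a LoRA layer trained with a single learning rate. It assumes common LoRA conventions (rank r, scaling alpha / r, B initialized to zero); the class name `LoRALinear`, its defaults, and the learning rate value are hypothetical, not taken from a specific library.

```python
import torch
import torch.nn as nn


class LoRALinear(nn.Module):
    """Frozen linear layer plus a trainable low-rank update: W x + (alpha / r) * B A x."""

    def __init__(self, base: nn.Linear, r: int = 8, alpha: float = 16.0):
        super().__init__()
        self.base = base
        for p in self.base.parameters():
            p.requires_grad = False  # the base LLM weights stay frozen
        # A projects down to rank r, B projects back up. B starts at zero so the
        # adapter initially leaves the base layer's output unchanged.
        self.lora_A = nn.Parameter(torch.randn(r, base.in_features) * 0.01)
        self.lora_B = nn.Parameter(torch.zeros(base.out_features, r))
        self.scaling = alpha / r

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return self.base(x) + self.scaling * (x @ self.lora_A.T @ self.lora_B.T)


layer = LoRALinear(nn.Linear(64, 64), r=8)
trainable = [n for n, p in layer.named_parameters() if p.requires_grad]
print(trainable)  # ['lora_A', 'lora_B']

# Standard LoRA: one optimizer, one learning rate (assumed value) for both A and B.
optimizer = torch.optim.AdamW([layer.lora_A, layer.lora_B], lr=1e-4)
```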

In a new paper, researchers from UC Berkeley demonstrate that using a single learning rate is suboptimal and inefficient.

LoRA+: Efficient Low Rank Adaptation of Large Models

Using two different learning rates, x for A and y for B, improves the accuracy of the adapters on downstream tasks and also accelerates training through faster convergence.

The learning rates x and y are not independent: y = m*x, with m much greater than 1.

Setting two different learning rates for A and B is intuitive, but it introduces one more hyperparameter, m, and, according to the authors, finding an optimal m is not trivial.

Here is an example of a PyTorch implementation:

GitHub: nikhil-ghosh-berkeley/loraplus
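For intuition, here is a minimal sketch of the idea using PyTorch optimizer parameter groups. It is not the code from that repository: the toy layer, the helper `loraplus_param_groups`, and the values x = 1e-4 and m = 16 are all hypothetical. `lr` plays the role of x and `lr_ratio` the role of m, so B gets y = m * x.

```python
import torch
import torch.nn as nn


class ToyLoRALinear(nn.Module):
    """Hypothetical toy layer: frozen base weight plus LoRA matrices A and B."""

    def __init__(self, d_in: int, d_out: int, r: int = 8):
        super().__init__()
        self.base = nn.Linear(d_in, d_out)
        self.base.requires_grad_(False)  # frozen base weights
        self.lora_A = nn.Parameter(torch.randn(r, d_in) * 0.01)
        self.lora_B = nn.Parameter(torch.zeros(d_out, r))

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return self.base(x) + x @ self.lora_A.T @ self.lora_B.T


def loraplus_param_groups(model: nn.Module, lr: float, lr_ratio: float) -> list:
    """Two optimizer groups: A matrices use lr (x), B matrices use lr * lr_ratio (y = m * x)."""
    group_a, group_b = [], []
    for name, param in model.named_parameters():
        if not param.requires_grad:
            continue  # skip the frozen base weights
        if "lora_B" in name:
            group_b.append(param)
        else:
            group_a.append(param)
    return [
        {"params": group_a, "lr": lr},
        {"params": group_b, "lr": lr * lr_ratio},
    ]


model = ToyLoRALinear(64, 64)
# Assumed values: x = 1e-4 and m = 16; in practice m has to be tuned.
optimizer = torch.optim.AdamW(loraplus_param_groups(model, lr=1e-4, lr_ratio=16.0))

# One training step on random data, just to show the optimizer runs.
loss = model(torch.randn(4, 64)).pow(2).mean()
loss.backward()
optimizer.step()

print([group["lr"] for group in optimizer.param_groups])  # [0.0001, 0.0016]
```

Relying on parameter groups keeps the change small: the model is untouched, and only the optimizer sees two learning rates.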

The Salt

In case you missed it, I published one new article in The Salt this week:

That’s all for this week.

If you like reading The Kaitchup, consider sharing it with friends and coworkers:

Have a nice weekend!