Hi Everyone,

In this edition of The Weekly Kaitchup:

The Recipe to Pre-Train the Falcon LLMs

Microsoft Phi-2: Better and Larger than Phi-1.5

DeciLM-7B: The Fastest 7B Parameter LLM

The Kaitchup now has 1,277 subscribers. Thanks a lot for your support!

If you are a free subscriber, consider upgrading to a paid subscription to access all the notebooks and articles. There is a 7-day trial that you can cancel anytime.

The Recipe to Pre-Train the Falcon LLMs

The Technology Innovation Institute of Abu Dhabi published a paper detailing how they validated each decision made while pre-training the Falcon models:

The Falcon Series of Open Language Models

I’m currently reviewing this paper. It’s a gold mine. I find the sections about the training data and model architecture particularly insightful. I will publish an article explaining and summarizing this long paper, probably next week.

Meanwhile, you can find more information about the Falcon models and how to use them in my previous articles:

Microsoft Phi-2: Better than Phi-1.5 but Twice as Large

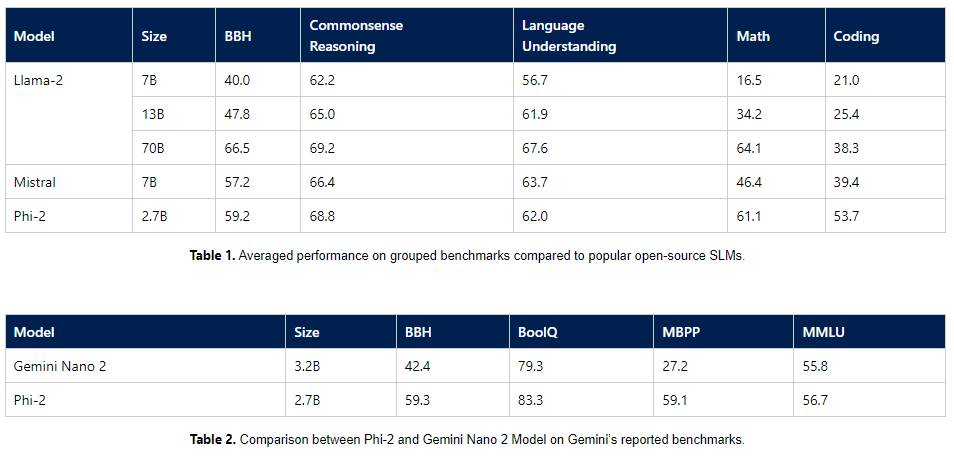

After Phi-1 and Phi-1.5, Microsoft continues to improve its small model with the release of Phi-2:

It significantly outperforms the previous versions but is also twice as large: 2.7B parameters, against 1.3B for Phi-1.5.
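To put "twice as large" in concrete terms, here is a back-of-the-envelope estimate of the memory needed just to store the weights, assuming 2 bytes per parameter (fp16/bf16). It ignores activations, the KV cache, and framework overhead, so treat the numbers as lower bounds.

```python
# Rough memory needed only to hold the model weights
# (no activations, no KV cache, no framework overhead).
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "bf16": 2, "int8": 1, "int4": 0.5}


def weight_memory_gb(n_params: float, dtype: str = "fp16") -> float:
    """Approximate weight memory in gigabytes (1 GB = 1e9 bytes)."""
    return n_params * BYTES_PER_PARAM[dtype] / 1e9


for name, n_params in [("phi-1.5", 1.3e9), ("phi-2", 2.7e9)]:
    print(f"{name}: {weight_memory_gb(n_params, 'fp16'):.1f} GB in fp16")
```

This prints about 2.6 GB for Phi-1.5 and 5.4 GB for Phi-2: still small enough for a consumer GPU, but no longer a "tiny" footprint.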

For training, Microsoft used the same data as for Phi-1.5, augmented with:

combination of NLP synthetic data created by AOAI GPT-3.5 and filtered web data from Falcon RefinedWeb and SlimPajama, which was assessed by AOAI GPT-4.

To the best of my knowledge, this is the first time that Microsoft has disclosed the source of Phi’s synthetic training data. Phi-1.5’s model card doesn’t mention the data source, and the technical report remains very vague on this point.

Phi-2 is neither aligned nor fine-tuned. It is a pre-trained base model released for research purposes only (non-commercial, non-revenue generating).

Interestingly, in the blog post announcing Phi-2, Microsoft presents it as a “small language model” (SLM) rather than an LLM. In my opinion, the term “SLM” is too subjective to be widely adopted by the AI community: many models with 2B parameters, or even fewer, are still mostly called LLMs.

DeciLM-7B: The Fastest 7B Parameter LLM

In The Weekly Kaitchup #6, I presented DeciLM 6B, a model with very fast inference thanks, among other features, to grouped-query attention (GQA). DeciLM 6B also performed as well as models of similar size, such as Falcon 7B.
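GQA speeds up inference largely by shrinking the key/value cache: groups of query heads share a single key/value head, so less memory has to be stored and read at each decoding step. The sketch below is generic arithmetic, not DeciLM's actual configuration; the 32 layers, 32 query heads, head dimension of 128, 4,096-token context, and 8 KV heads are illustrative assumptions.

```python
def kv_head_for_query_head(q_head: int, n_q_heads: int, n_kv_heads: int) -> int:
    """In GQA, each block of consecutive query heads shares one key/value head."""
    assert n_q_heads % n_kv_heads == 0, "query heads must split evenly into groups"
    group_size = n_q_heads // n_kv_heads
    return q_head // group_size


def kv_cache_bytes(n_layers: int, n_kv_heads: int, head_dim: int,
                   seq_len: int, batch_size: int = 1, bytes_per_elem: int = 2) -> int:
    """Bytes to cache keys AND values (hence the factor 2) across all layers."""
    return 2 * n_layers * n_kv_heads * head_dim * seq_len * batch_size * bytes_per_elem


# Illustrative setting (assumed): standard multi-head attention has one KV head
# per query head (32), while GQA here uses 8 shared KV heads.
mha = kv_cache_bytes(n_layers=32, n_kv_heads=32, head_dim=128, seq_len=4096)
gqa = kv_cache_bytes(n_layers=32, n_kv_heads=8, head_dim=128, seq_len=4096)
print(f"MHA: {mha / 2**30:.2f} GiB, GQA: {gqa / 2**30:.2f} GiB ({mha // gqa}x smaller)")

# Query heads 0-3 read KV head 0, heads 4-7 read KV head 1, and so on.
print([kv_head_for_query_head(h, n_q_heads=32, n_kv_heads=8) for h in range(8)])
```

With these assumed numbers, the cache drops from 2 GiB to 0.5 GiB per sequence, which is what lets a GQA model serve larger batches or longer contexts on the same GPU.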

This week, Deci released DeciLM 7B. The new model currently ranks first among 7B LLMs on the Open LLM Leaderboard. It significantly outperforms Mistral 7B and Llama 2 7B while being almost twice as fast.

According to Deci, when served with Infery-LLM, Deci’s inference SDK, DeciLM 7B is 4 times faster than Mistral 7B served with vLLM.

The model is available on the Hugging Face Hub under an Apache 2.0 license:

Deci also released an instruct version, fine-tuned with LoRA on SlimOrca (simple supervised fine-tuning, no preference optimization):
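For readers unfamiliar with LoRA, here is a minimal pure-Python sketch of the idea; it is not Deci's training code, and their LoRA hyperparameters aren't given here. The pre-trained weight W stays frozen and only a low-rank update B·A is trained, scaled by alpha / r. B starts at zero, so the adapted layer initially behaves exactly like the original one. The toy matrix, rank r = 2, and alpha = 4 are arbitrary illustrative values; in practice, libraries such as Hugging Face PEFT handle this for you.

```python
import random


def matvec(m, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


class LoRALinear:
    """Frozen weight W (d_out x d_in) plus a trainable low-rank update B @ A.

    Forward pass: y = W x + (alpha / r) * B (A x)
    """

    def __init__(self, W, r=2, alpha=4, seed=0):
        rng = random.Random(seed)
        d_out, d_in = len(W), len(W[0])
        self.W = W  # frozen pre-trained weight, never updated
        # A: r x d_in, small random init; B: d_out x r, zero init.
        self.A = [[rng.gauss(0.0, 0.01) for _ in range(d_in)] for _ in range(r)]
        self.B = [[0.0] * r for _ in range(d_out)]
        self.scaling = alpha / r

    def forward(self, x):
        base = matvec(self.W, x)
        update = matvec(self.B, matvec(self.A, x))
        return [b + self.scaling * u for b, u in zip(base, update)]


def lora_param_count(d_out: int, d_in: int, r: int) -> int:
    """Trainable parameters of the LoRA update for one d_out x d_in matrix."""
    return r * (d_in + d_out)


layer = LoRALinear([[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]], r=2, alpha=4)
print(layer.forward([1.0, 0.0, -1.0]))  # B is zero, so this equals W x
print(lora_param_count(4096, 4096, r=16), "trainable vs", 4096 * 4096, "frozen")
```

The savings only show up at realistic sizes: for a 4096 x 4096 projection with r = 16, LoRA trains 131,072 parameters instead of about 16.8 million.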

If you want to further improve it with preference optimization, you can follow my tutorial on identity preference optimization (IPO):
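IPO replaces DPO's logistic loss with a squared loss that pushes the log-likelihood-ratio margin between the chosen and rejected responses toward a fixed target, 1/(2τ), following the paper that introduced IPO (Azar et al., 2023). Here is a minimal per-pair sketch; the log-probabilities and τ = 0.1 are made-up values, and in practice TRL's DPO trainer exposes this loss through `loss_type="ipo"`.

```python
def ipo_loss(policy_chosen_logp: float, policy_rejected_logp: float,
             ref_chosen_logp: float, ref_rejected_logp: float,
             tau: float = 0.1) -> float:
    """IPO loss for a single preference pair.

    h    = [log pi(y_w|x) - log pi_ref(y_w|x)] - [log pi(y_l|x) - log pi_ref(y_l|x)]
    loss = (h - 1 / (2 * tau)) ** 2
    """
    h = (policy_chosen_logp - ref_chosen_logp) - (policy_rejected_logp - ref_rejected_logp)
    return (h - 1.0 / (2.0 * tau)) ** 2


# Policy identical to the reference: h = 0, far from the target margin 1/(2*0.1) = 5.
print(ipo_loss(-10.0, -12.0, -10.0, -12.0))
# Margin exactly at the target: the loss is zero.
print(ipo_loss(-8.0, -15.0, -10.0, -12.0))
# Margin overshooting the target (h = 10) is penalized as much as h = 0.
print(ipo_loss(-3.0, -15.0, -10.0, -12.0))
```

The last case is the point of IPO: unlike DPO, whose loss keeps decreasing as the margin grows, the squared loss penalizes overshooting, which acts as a regularizer against overfitting the preference data.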

That’s all for this week.

If you like reading The Kaitchup, consider sharing it with friends and coworkers:

Have a nice weekend!
