Run Llama 3.1 70B Instruct on Your GPU with ExLlamaV2 (2.2, 2.5, 3.0, and 4.0-bit)

Is ExLlamaV2 Still Good Enough?

Llama 3.1 70B Instruct is currently one of the best large language models (LLMs) for chat applications. However, running a 70B model on consumer GPUs for fast inference is challenging.

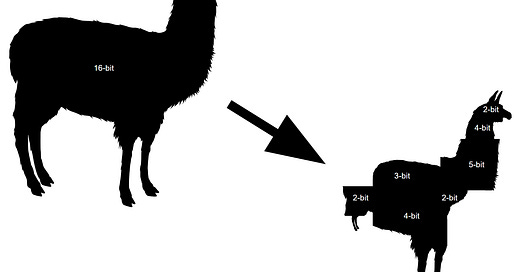

Llama 3.1 70B has 70.6 billion 16-bit parameters. Since one 16-bit parameter occupies 2 bytes of memory, Llama 3.1 70B requires 141.2 GB of GPU memory to be loaded.

With 4-bit quantization, one parameter occupies only 0.5 bytes, so the weights take about 35.3 GB. We could then run the model on 2x24 GB consumer GPUs, such as two RTX 4090s.

To run on a single 24 GB GPU, we would need to quantize the model to a precision lower than 2.5-bit. However, if naively performed, such a quantization of Llama 3.1 70B would make the model worse than Llama 3.1 8B, while still being a few GB larger.
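The memory figures above follow from simple arithmetic: number of parameters times bits per parameter, divided by 8 to get bytes. Here is a minimal sketch that reproduces them for the precisions discussed in this article. The function name `weight_memory_gb` is only illustrative, and the estimate covers the weights alone, assuming 1 GB = 10^9 bytes. The KV cache and activations need additional memory on top of this.

```python
# Parameter count of Llama 3.1 70B, as stated in the article.
N_PARAMS = 70.6e9


def weight_memory_gb(bits_per_weight: float, n_params: float = N_PARAMS) -> float:
    """Estimate the memory taken by the weights alone, in GB (1 GB = 1e9 bytes)."""
    bytes_total = n_params * bits_per_weight / 8  # 8 bits per byte
    return bytes_total / 1e9


# 16-bit baseline, followed by the precisions targeted in this article.
for bits in (16.0, 4.0, 3.0, 2.5, 2.2):
    print(f"{bits:>4.1f} bits per weight: {weight_memory_gb(bits):6.1f} GB")
```

At 2.5 bits per weight, the weights alone take roughly 22 GB, which leaves very little headroom on a 24 GB GPU for the KV cache and activations.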

We have seen several 2-bit quantization methods that work well for 70B models, but they have significant drawbacks: they are either too costly to run (AQLM) or require subsequent fine-tuning (HQQ+).

ExLlamaV2 can quantize a 70B model on a consumer GPU. It applies mixed-precision quantization by aggressively quantizing the less important parameters while preserving the most useful ones.
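Mixed precision is also why fractional targets such as 2.2 or 2.5 bits make sense: the target is an average over parameters stored at different bit widths. The toy sketch below only illustrates this averaging. It is not ExLlamaV2's actual allocation algorithm, and the function `average_bits` and the fractions used are hypothetical.

```python
def average_bits(mix: dict[float, float]) -> float:
    """Return the average bits per weight for a mixed-precision layout.

    `mix` maps a bit width to the fraction of parameters stored at that width.
    The fractions must sum to 1.
    """
    total = sum(mix.values())
    if abs(total - 1.0) > 1e-9:
        raise ValueError(f"fractions must sum to 1, got {total}")
    return sum(bits * fraction for bits, fraction in mix.items())


# Hypothetical layout: most parameters at 2 bits, a more important minority at 3 bits.
toy_mix = {2.0: 0.75, 3.0: 0.25}
print(f"average: {average_bits(toy_mix):.2f} bits per weight")
```

In this made-up layout, keeping a quarter of the parameters at 3 bits raises the average by only 0.25 bits over a uniform 2-bit quantization.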

Is ExLlamaV2 accurate enough to quantize Llama 3.1 70B Instruct to a low precision?

In this article, we will see how to quantize Llama 3.1 70B Instruct to 2.2, 2.5, 3.0, and 4.0 bits. It only takes a few hours and is feasible on a consumer GPU with 24 GB of VRAM. Then, we will compare the accuracy and inference throughput of the quantized models against GPTQ and AWQ models.

ExLlamaV2 quantization code and evaluation are implemented in this notebook: