Last Monday, I started a new project: fine-tuning a Qwen3 Base model using TULU 3’s open fine-tuning recipe. This builds on this article:

The main goal is to test whether a recipe that works well for Llama 3.1 models can also be applied to newer models like Qwen3.

On Tuesday, I evaluated the first checkpoints using the IFEval benchmark. Why IFEval? It's fast (just a few minutes with ~8B models) and dependable. If fine-tuning goes off track, IFEval will usually catch it.

The early results were encouraging. The best checkpoint scored 67.84 (prompt_loose), a significant jump from the base Qwen3 8B score of 52.68. It looked like the fine-tuning was working.

Then came Wednesday, and things went wrong.

Newer checkpoints dropped to 41.96, well below the earlier results. Oddly, the learning curve looked fine: no loss spikes, no signs of instability, just a smooth, steady decline in the loss.

So what’s happening here?

Time to investigate.

Going Down the Rabbit Hole

Rerunning the Previous Evaluations

When results start to look suspicious, one of the first steps is to rerun previous evaluations to verify whether the initial results were accurate or just misleading.

Sure enough, when I re-evaluated the earlier checkpoints, the scores came out much lower than before. I couldn’t reproduce Tuesday’s high scores.

Checking for Changes in the Evaluation Code

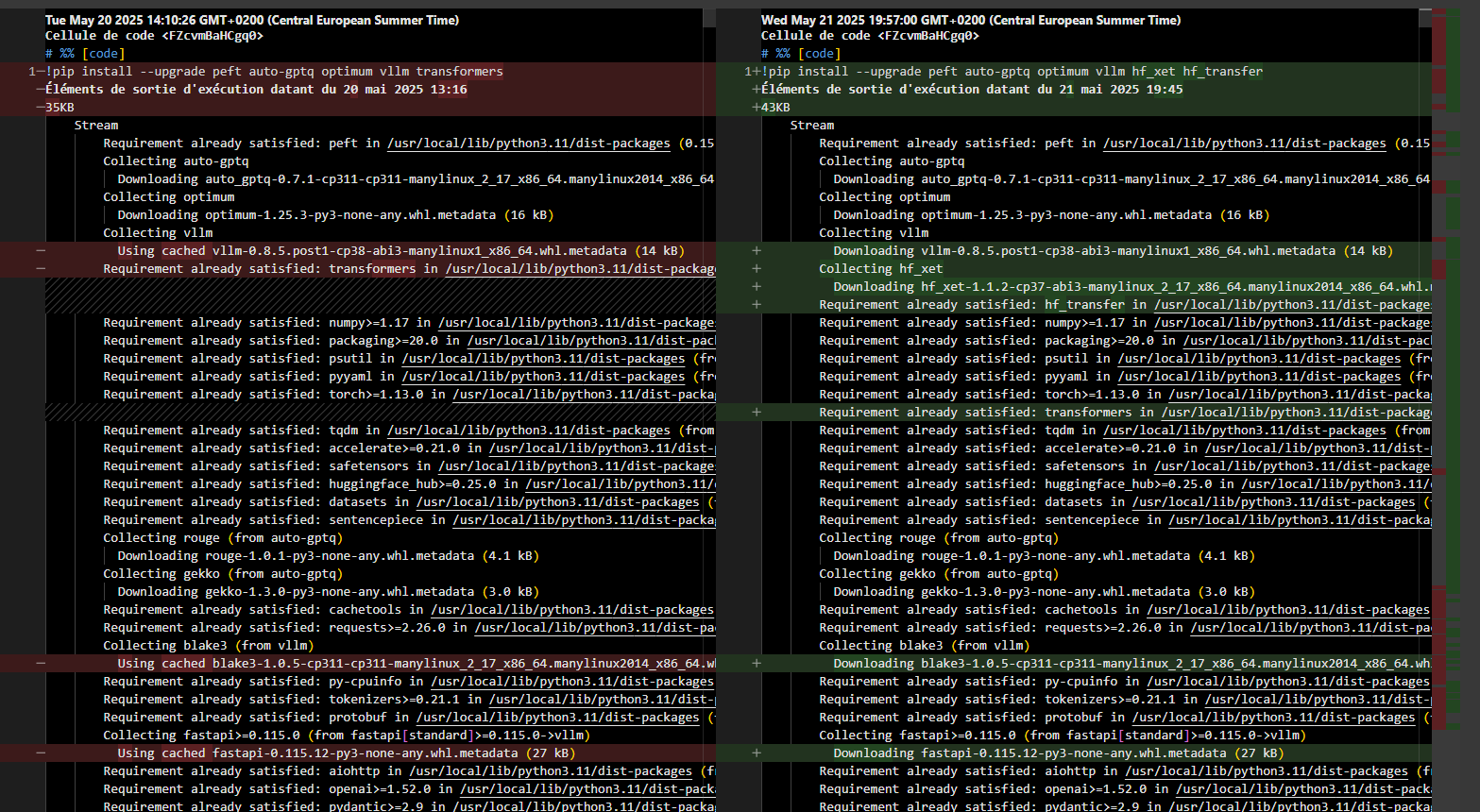

So, next, I reviewed my evaluation code to see if I had inadvertently changed something. I couldn’t find any issues manually. Thankfully, I had run the evaluations on Google Colab, which keeps a version history of notebooks.

Comparing Tuesday’s and Wednesday’s versions, I didn’t notice any meaningful code differences. To double-check, I sent both versions to ChatGPT and asked it to identify any discrepancies in the package versions, which were also saved in the notebook logs.

ChatGPT flagged only two version changes: llguidance and mistral-common. The update to llguidance was minor and unrelated to evaluation logic, and mistral-common wasn’t used at all. So those weren’t the culprits.
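This kind of version comparison can also be done locally. Below is a minimal sketch, assuming both notebook logs contain `pip freeze`-style lines (`name==version`). The function names and the package names in the usage example are hypothetical and only serve as an illustration.

```python
import re
from typing import Dict, Optional, Tuple


def parse_versions(log_text: str) -> Dict[str, str]:
    """Collect 'name==version' lines (pip freeze style) into a dict."""
    versions = {}
    for line in log_text.splitlines():
        match = re.match(r"^\s*([A-Za-z0-9_.\-]+)==(\S+)\s*$", line)
        if match:
            versions[match.group(1).lower()] = match.group(2)
    return versions


def diff_versions(
    old_log: str, new_log: str
) -> Dict[str, Tuple[Optional[str], Optional[str]]]:
    """Return {package: (old_version, new_version)} for every package that differs."""
    old, new = parse_versions(old_log), parse_versions(new_log)
    changes = {}
    for name in sorted(set(old) | set(new)):
        if old.get(name) != new.get(name):
            changes[name] = (old.get(name), new.get(name))
    return changes


# Hypothetical logs; package names and versions are made up for illustration.
tuesday_log = "example-pkg-a==1.0\nexample-pkg-b==2.0\n"
wednesday_log = "example-pkg-a==1.1\nexample-pkg-b==2.0\n"
print(diff_versions(tuesday_log, wednesday_log))
```

A package that appears in only one of the two logs shows up with `None` on the missing side.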

Something else was going on.

Checking the Evaluation Dataset

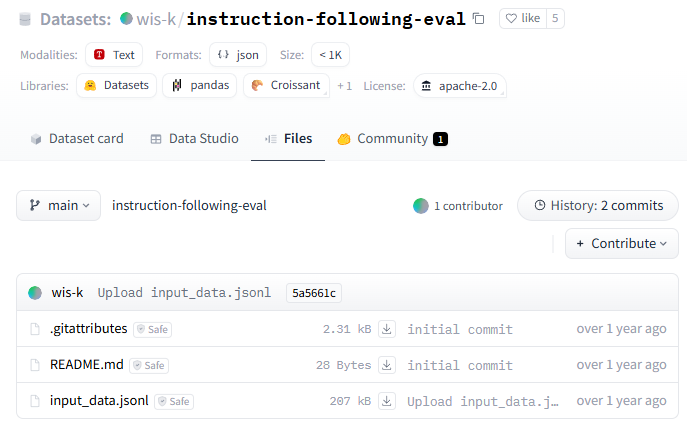

The evaluation framework I use, Evaluation Harness, downloads datasets directly from the Hugging Face Hub. It’s possible the dataset itself changed between evaluations.

Here’s the dataset in question:

That checked out as well. The dataset hasn’t seen any updates in over a year.

Checking the Model

Could the model itself have changed?

This was the last item on my checklist for a few reasons:

Qwen3 was released over three weeks ago, so major updates seemed unlikely.

Models are usually very stable since even minor changes can break thousands of notebooks, tutorials, code bases, etc.

There were no announcements, and I hadn’t seen anything on social media or received any notifications about a significant update.

It's also worth noting that any change to a model, such as adding a BOS token or modifying the chat template, can break comparability with earlier benchmarks. These kinds of adjustments can improve or degrade evaluation results in subtle but important ways. They must be avoided.

And yet...

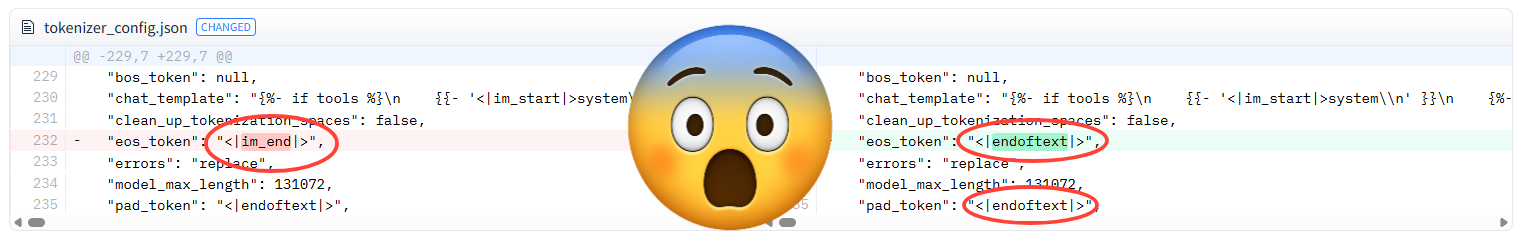

As you may have noticed in the image in the introduction, the Qwen team did push an update to the tokenizer:

This update changed the EOS token, which significantly affects how the base model behaves. I'm sure it has caught many off guard, especially those relying on the tokenizer and chat template of the Qwen3 Base model.

Note: The Qwen team also updated the chat template for the post-trained (non-Base) models. However, that change appears to be minimal, mainly adding a type check for some of the inputs. It's unlikely to break existing pipelines.

The Impact of Changing the EOS Token of Qwen3 Base

Let’s take a closer look at the impact of the tokenizer update in the Qwen3 Base model.

There was only one change: the eos_token <|im_end|> was replaced with <|endoftext|>. This change makes sense and aligns the tokenizer with more standard base model behavior. The <|im_end|> token is typically used in post-trained instruction models to mark the end of an assistant's reply, which is also where generation is expected to stop.

However, the chat template still uses <|im_end|> and never inserts <|endoftext|>. This creates a problem. If you’re fine-tuning the base model with the chat template and then use the new version of the tokenizer, <|im_end|> is no longer recognized as the end-of-sequence token. The inference framework won't know where to stop generating.
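The mismatch described above can be detected with a simple string check. This is a rough sketch, not a full Jinja analysis: it assumes the chat template and EOS token are available as plain strings (with Transformers they would typically come from `tokenizer.chat_template` and `tokenizer.eos_token`). The template below is a simplified ChatML-style stand-in, not the actual Qwen3 template, and `template_can_emit_eos` is a hypothetical helper name.

```python
def template_can_emit_eos(chat_template: str, eos_token: str) -> bool:
    """Heuristic: the template can emit EOS if it contains the token
    literally or references the `eos_token` template variable."""
    return eos_token in chat_template or "eos_token" in chat_template


# Simplified ChatML-style template (an assumption for illustration only).
toy_template = (
    "{% for m in messages %}"
    "<|im_start|>{{ m['role'] }}\n{{ m['content'] }}<|im_end|>\n"
    "{% endfor %}"
)

for eos in ("<|im_end|>", "<|endoftext|>"):
    status = "ok" if template_can_emit_eos(toy_template, eos) else "never emitted by template"
    print(f"eos_token={eos!r}: {status}")
```

With the old EOS token the check passes. With the new one it fails, which is the situation where a fine-tuned model never sees the token that inference treats as the stop signal.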

This could explain the poor IFEval results, especially since several prompts in that benchmark require a specific format or constrained response length.

To address this, when using the new tokenizer with the chat template, you need to manually add <|endoftext|> to your training examples. Otherwise, the model won't learn where to stop.
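One way to do this is to post-process each training example after the chat template has been applied. The sketch below assumes the rendered example is a plain string. `append_eos` is a hypothetical helper, and whether your training framework already appends an EOS token on its own is something to verify first.

```python
def append_eos(text: str, eos_token: str = "<|endoftext|>") -> str:
    """Append the EOS token to a rendered training example unless it is already there."""
    if text.endswith(eos_token):
        return text
    return text + eos_token


# A rendered example in simplified ChatML style (illustrative only).
rendered = (
    "<|im_start|>user\nHi<|im_end|>\n"
    "<|im_start|>assistant\nHello!<|im_end|>\n"
)
print(append_eos(rendered))
```

The check on the suffix keeps the function idempotent, so applying it twice does not duplicate the token.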

There’s an even more serious issue introduced by this update: the PAD token and the EOS token are now the same. I haven’t yet run a fine-tuning job with this updated tokenizer, but this setup can cause problems. If the EOS token is also used as the PAD token, it might be masked out of the loss during training, and the model may never actually learn to produce it. Whether this happens depends on how the implementation handles masking, but to be safe, I strongly recommend setting a PAD token that is different from the EOS token, regardless of the framework you're using.
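To make the masking risk concrete, here is a toy sketch in plain Python. It assumes a data collator that builds labels by ignoring every position whose token ID equals the PAD ID, which is one possible implementation, not necessarily what your framework does. The token IDs are made up. The value `-100` is the default index ignored by PyTorch's cross-entropy loss.

```python
IGNORE_INDEX = -100  # positions with this label do not contribute to the loss


def labels_masking_by_pad_id(input_ids, pad_token_id):
    """Naive masking: ignore every position whose token equals the PAD ID."""
    return [IGNORE_INDEX if tok == pad_token_id else tok for tok in input_ids]


def labels_masking_by_attention(input_ids, attention_mask):
    """Safer masking: ignore only positions flagged as padding in the mask."""
    return [tok if keep == 1 else IGNORE_INDEX for tok, keep in zip(input_ids, attention_mask)]


# Hypothetical IDs: 11, 12, 13 are text tokens, 2 is EOS, 0 is a dedicated PAD.
EOS_ID, PAD_ID = 2, 0

# Case 1: PAD == EOS. The sequence ends with a real EOS followed by two pads.
shared = [11, 12, 13, EOS_ID, EOS_ID, EOS_ID]
print(labels_masking_by_pad_id(shared, pad_token_id=EOS_ID))   # real EOS is masked too
print(labels_masking_by_attention(shared, [1, 1, 1, 1, 0, 0]))  # real EOS is kept

# Case 2: dedicated PAD token. Even the naive masking keeps the EOS label.
distinct = [11, 12, 13, EOS_ID, PAD_ID, PAD_ID]
print(labels_masking_by_pad_id(distinct, pad_token_id=PAD_ID))
```

In the first case the naive approach removes the only EOS label the model could learn from, while a dedicated PAD token avoids the ambiguity regardless of how masking is implemented.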

How Could I Have Prevented This?

In my LoRA fine-tuning of Qwen3 Base, I fully retrained the embeddings. Unfortunately, vLLM doesn’t support adapters with modified embeddings. That means I had to merge the adapter before evaluating the model with vLLM, which I used for faster IFEval evaluation.

Here’s the code I used to merge the adapter:

```python
from transformers import AutoModelForCausalLM, AutoTokenizer
from peft import PeftModel
import torch
import os

# Define model ID and adapter ID
model_id = "Qwen/Qwen3-8B-Base"
adapter_folder = "./adapter_folder"

# Load the base model
model = AutoModelForCausalLM.from_pretrained(model_id, torch_dtype=torch.bfloat16)
tokenizer = AutoTokenizer.from_pretrained(model_id)

# Load the adapter from the local folder
adapter_model = PeftModel.from_pretrained(model, adapter_folder+"/checkpoint-4000/")

# Merge the adapter with the base model
merged_model = adapter_model.merge_and_unload()
merged_model.save_pretrained("merged_model4")
tokenizer.save_pretrained("merged_model4")
```

What I should have done was use the tokenizer that was serialized alongside the adapter checkpoints. This is generally good practice, as it ensures you're using the exact same tokenizer that was used during fine-tuning.

Unfortunately, for this particular experiment, I wasn’t careful enough. I used the tokenizer from the base model directly, without considering that it could have been updated, and in this case, it had.
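A small guard can make this mistake harder to repeat. The sketch below is an assumption about how one might structure it, not code from my original pipeline: `pick_tokenizer_source` is a hypothetical helper that prefers the checkpoint folder when tokenizer files were saved there, and warns loudly before falling back to the Hub model ID.

```python
from pathlib import Path

# File names commonly written next to a checkpoint when the tokenizer is saved.
TOKENIZER_FILES = ("tokenizer.json", "tokenizer_config.json")


def pick_tokenizer_source(checkpoint_dir: str, base_model_id: str) -> str:
    """Return the checkpoint folder if it contains tokenizer files,
    otherwise warn and fall back to the base model ID."""
    ckpt = Path(checkpoint_dir)
    if any((ckpt / name).is_file() for name in TOKENIZER_FILES):
        return str(ckpt)
    print(
        f"WARNING: no tokenizer files in {ckpt}; falling back to {base_model_id}, "
        "which may have changed since fine-tuning."
    )
    return base_model_id


source = pick_tokenizer_source("./adapter_folder/checkpoint-4000", "Qwen/Qwen3-8B-Base")
print(source)
```

The returned value can then be passed to `AutoTokenizer.from_pretrained(...)` in place of the hard-coded model ID. If you do have to load from the Hub, passing a specific commit through the `revision` argument of `from_pretrained` is another way to keep the tokenizer from changing underneath you.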

That simple oversight cost me several hours of resetting environment settings, double-checking everything, downloading models, and rerunning evaluations.

But everything is back on track now. I’ve confirmed that the performance on IFEval is still improving with each checkpoint. I’ll write more about the final results once the process is complete.

Stay tuned!
