MobileLLM-R1: Tiny Models Can Also Reason!

The Weekly Kaitchup #110

Hi Everyone,

In this edition of The Weekly Kaitchup:

MobileLLM-R1: Tiny Models Can Also Reason!

A Magistral Small 1.2

GPTQ + EvoPress: Better “Dynamic” GGUF Models

MobileLLM-R1: Tiny Models Can Also Reason!

The MobileLLM line is Meta’s family of sub-billion-parameter language models optimized for on-device inference, introduced with the “MobileLLM: Optimizing Sub-billion Parameter Language Models for On-Device Use Cases” work (ICML 2024).

Architecturally, these models favor “deep-but-thin” designs to reduce memory bandwidth and latency while maintaining token throughput, and they ship with training code and recipes for mobile/edge constraints (e.g., iOS/Android latency profiling, quantization-friendly layouts).

MobileLLM-R1 is the new series with reasoning capabilities. The release comprises two categories: base checkpoints (intended for fine-tuning) and “final” supervised fine-tuned checkpoints targeted at mathematical, programming (Python, C++), and scientific problem solving.

Models provided in this family (base and SFT variants for each size):

• 140M: 15 layers, 9 attention heads, 3 KV heads, model dim 576, MLP 2048.

• 360M: 15 layers, 16 attention heads, 4 KV heads, model dim 1024, MLP 4096.

• 950M: 22 layers, 24 attention heads, 6 KV heads, model dim 1536, MLP 6144.
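To make these shapes more concrete, here is a small sketch that derives the per-head dimension, the grouped-query ratio, and a rough KV-cache footprint per token from the numbers listed above. It assumes a standard Llama-style layout where the head dimension is the model dim divided by the number of attention heads, and a 16-bit KV cache. Neither assumption comes from the model cards, so treat the byte counts as estimates.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Config:
    name: str
    layers: int
    heads: int
    kv_heads: int
    dim: int


# Values copied from the list above.
CONFIGS = [
    Config("140M", layers=15, heads=9, kv_heads=3, dim=576),
    Config("360M", layers=15, heads=16, kv_heads=4, dim=1024),
    Config("950M", layers=22, heads=24, kv_heads=6, dim=1536),
]


def head_dim(cfg: Config) -> int:
    # Assumption: head_dim = model dim / attention heads (Llama-style).
    return cfg.dim // cfg.heads


def kv_bytes_per_token(cfg: Config, bytes_per_value: int = 2) -> int:
    # Keys and values (factor 2) are cached for every layer and KV head.
    # Assumption: 2 bytes per value (fp16/bf16 cache).
    return 2 * cfg.layers * cfg.kv_heads * head_dim(cfg) * bytes_per_value


for cfg in CONFIGS:
    ratio = cfg.heads // cfg.kv_heads
    print(
        f"{cfg.name}: head_dim={head_dim(cfg)}, "
        f"{ratio} query heads per KV head, "
        f"~{kv_bytes_per_token(cfg) / 1024:.1f} KiB of KV cache per token"
    )
```

Under these assumptions, all three sizes use the same head dimension, and sharing KV heads across query heads keeps the cache small relative to the number of attention heads.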

The “final” SFT variants are trained to follow problem-solving prompts, while the base variants serve as starting points for further training. The 950M model’s card reports a pre-training budget of approximately 2T “high-quality” tokens and fewer than 5T total tokens, and lists benchmark evaluations (MATH, GSM8K, MMLU, LiveCodeBench) used to characterize performance.

These benchmark results make competing small models (e.g., Gemma 3 and SmolLM2) look weak on selected tasks. Note: After task-specific fine-tuning, I’ve found that Gemma 3 270M can perform well. In an upcoming article, I’ll show that it’s even possible to make it much smaller (no quantization) while preserving its accuracy.

Benchmark scores should be interpreted cautiously. Many recent releases are tuned to the exact benchmarks they report, which narrows the gap between evaluation and training and limits conclusions about out-of-distribution behavior. Meta also notes that MobileLLM-R1 models are not general-purpose chat models, so they are unlikely to replace instruction-tuned chat models for everyday conversational use on your smartphone.

Note also that access is gated by the FAIR Noncommercial Research License v1 and an acceptable-use policy; login and explicit acceptance are required to view or download files.

A Magistral Small 1.2

Mistral AI released a new version of its open reasoning model, Magistral Small 1.2.

Note: To clear up possible confusion, Magistral-Small-2509 and Magistral Small 1.2 are the same model.

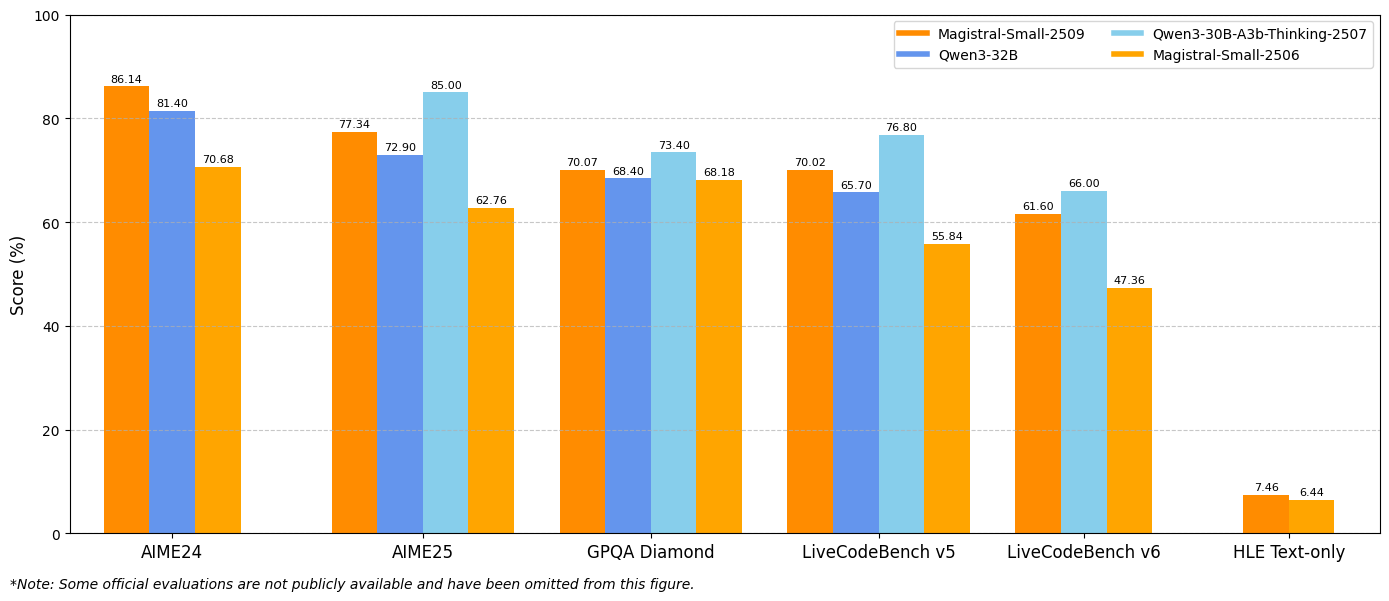

It looks impressive, but again, read these results with caution. The missing entries for Qwen3-30B-A3B suggest Mistral AI likely didn’t run those evaluations themselves and instead reused published numbers, probably computed under different setups, so the Qwen3 results are almost certainly not comparable. AIME24 and AIME25 results can also vary widely from one run to another, even with the exact same configuration.
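A rough way to see why AIME scores are noisy: each AIME year has only 30 problems, so a single question is worth more than 3 points. The sketch below treats one evaluation run as 30 independent pass/fail trials with the same success probability. That is a simplifying assumption (real problems differ in difficulty, and reported scores are often averaged over several samples), so the numbers are only indicative.

```python
import math


def accuracy_std_error(p: float, n_questions: int = 30) -> float:
    # Binomial standard error of the measured accuracy for one run.
    # Assumption: questions are independent and equally hard.
    return math.sqrt(p * (1.0 - p) / n_questions)


for p in (0.6, 0.7, 0.8):
    se = accuracy_std_error(p)
    print(f"true accuracy {p:.0%}: single-run std. error ~ {se * 100:.1f} points")
```

With a standard error of several points per run, differences of a few points between two models on AIME are hard to interpret without multiple runs.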

Multimodality: The model now has a vision encoder and can take multimodal inputs, extending its reasoning capabilities to vision.

Performance upgrade: Magistral Small 1.2 should give you significantly better performance than Magistral Small 1.1 as seen in the benchmark results.

Better tone and persona: You should experience better LaTeX and Markdown formatting, and shorter answers on easy general prompts.

Finite generation: The model is less likely to enter infinite generation loops.

Special think tokens: [THINK] and [/THINK] special tokens encapsulate the reasoning content in a thinking chunk. This makes it easier to parse the reasoning trace and prevents confusion when the '[THINK]' token is given as a string in the prompt.

Reasoning prompt: The reasoning prompt is given in the system prompt.
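For the special think tokens mentioned above, here is a minimal sketch of how the reasoning trace could be separated from the final answer. It assumes the output was decoded with special tokens kept, so that [THINK] and [/THINK] appear as literal text. The function name `split_reasoning` is hypothetical and not part of any Mistral library.

```python
import re

# Non-greedy match so only the first thinking chunk is captured.
THINK_RE = re.compile(r"\[THINK\](.*?)\[/THINK\]", re.DOTALL)


def split_reasoning(text: str) -> tuple[str, str]:
    """Return (reasoning, answer) from decoded model output."""
    match = THINK_RE.search(text)
    if match is None:
        # No thinking chunk found: everything is the answer.
        return "", text.strip()
    reasoning = match.group(1).strip()
    # The answer is whatever remains once the thinking chunk is removed.
    answer = (text[: match.start()] + text[match.end():]).strip()
    return reasoning, answer


sample = "[THINK]2 + 2 is 4.[/THINK]The answer is 4."
reasoning, answer = split_reasoning(sample)
print("reasoning:", reasoning)
print("answer:", answer)
```

String matching like this cannot tell a real special token from the same characters typed by a user, which is exactly the confusion the dedicated tokens are meant to prevent. A more robust parser would work on token IDs before decoding.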

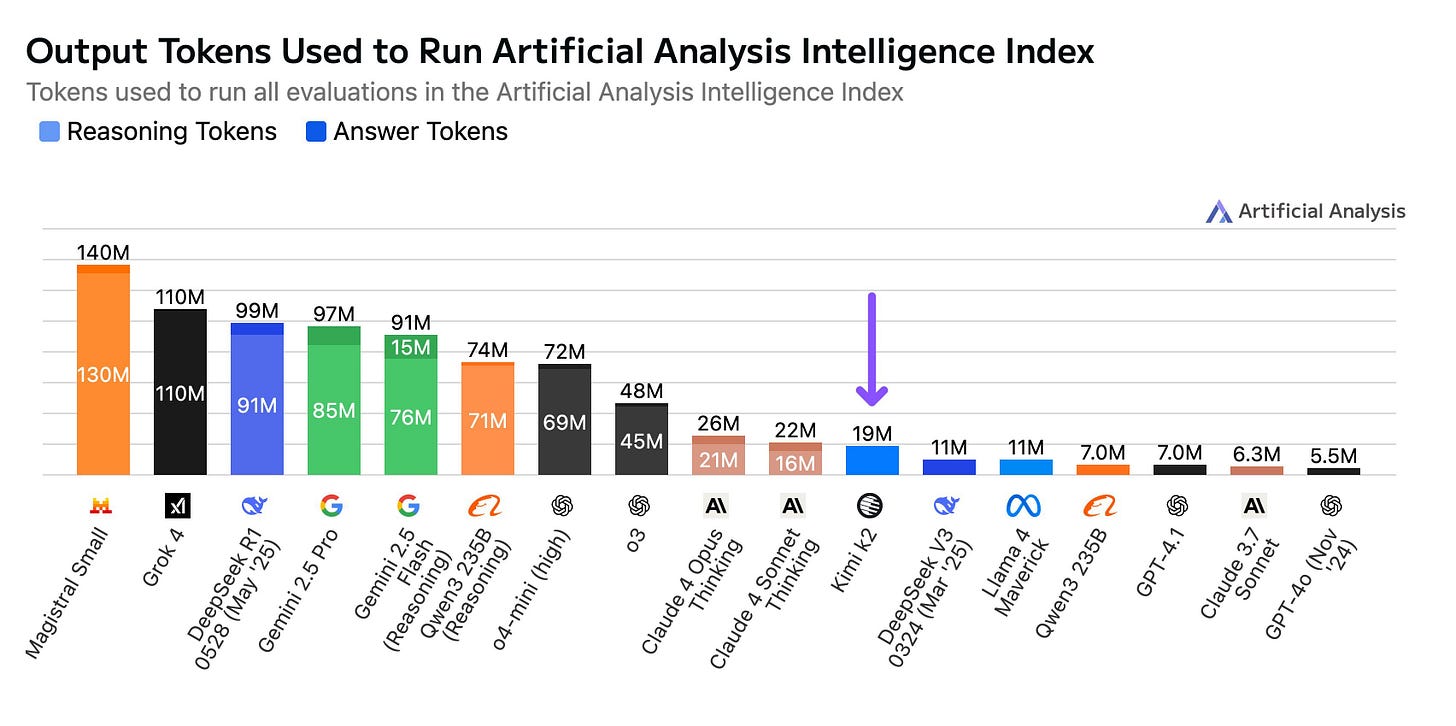

I haven’t rerun the same reasoning benchmarks on Magistral Small 1.2, so I can’t confirm whether it still produces an exceptionally large number of reasoning tokens. Artificial Analysis previously reported that the prior version generated the longest reasoning traces by a wide margin.

GPTQ + EvoPress: Better “Dynamic” GGUF Models

The GGUF Quantization Toolkit by the author of GPTQ is an open pipeline for building mixed-precision GGUF models, i.e., something closer to Unsloth’s Dynamic GGUF, but open-source. It combines GPTQ post-training quantization with GGUF K-Quants and uses an evolutionary search to assign per-layer bit widths. Yes, it’s complicated, but it works!

The aim is to keep accuracy close to full precision while reducing model size and latency, using scripts that run locally.

The workflow has three stages:

• Build uniform baselines (standard Q2_K…Q6_K or GPTQ with direct K-Quant export).

• Search for per-layer bit widths over a database of quantized layers under a target average.

• Stitch the chosen layers into one GGUF and validate with perplexity and zero-shot checks.

The quantization logic reconciles K-Quants (fixed groups, mostly asymmetric, double-quantized scales) with GPTQ (symmetric/asymmetric, variable groups, error-correcting updates). The pipeline computes K-Quant scales/offsets, then applies GPTQ to reduce residual error. Targets are chosen via a regex over transformer projections. Per-layer bit widths can be set in a JSON map with a default (e.g., Q4_K). Packing scripts then emit .gguf files while handling symmetry specifics (e.g., Q3_K, Q5_K) and preserving metadata.

For search, a mapper extracts layers from several quantized models and indexes them by tensor and bit width. EvoPress evolves configurations toward a requested average bits figure, scores with KL divergence, and increases calibration tokens across selection rounds. An optional importance-matrix calibration prioritizes sensitive weights at low bits. The stitcher assembles the final model, lets you pin embeddings/LM head/rope to higher precision, verifies shapes, and preserves GGUF headers for engines like llama.cpp.
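The search step can be pictured with a toy example. The sketch below is not the toolkit's code or the EvoPress algorithm itself: it is a simple hill-climbing evolutionary loop that moves one bit from one layer to another, so the average bit width never changes, and keeps a child only if it scores better. The score is a made-up proxy (per-layer sensitivity times an error term that shrinks with more bits) standing in for the KL divergence measured on calibration data. It also assumes all layers have the same size, so that swapping bits between layers preserves the storage budget.

```python
import random

BIT_CHOICES = (2, 3, 4, 5, 6)  # contiguous integer bit widths


def proxy_loss(bits, sensitivity):
    # Toy stand-in for KL divergence: error shrinks ~4x per extra bit.
    return sum(s * 4.0 ** (-b) for s, b in zip(sensitivity, bits))


def mutate(bits, rng):
    """Give one layer an extra bit and take one from another layer."""
    child = list(bits)
    up, down = rng.sample(range(len(child)), 2)
    if child[up] < BIT_CHOICES[-1] and child[down] > BIT_CHOICES[0]:
        child[up] += 1
        child[down] -= 1
    return child  # unchanged if the swap would leave the allowed range


def search(sensitivity, target_bits=4, generations=200, offspring=8, seed=0):
    rng = random.Random(seed)
    parent = [target_bits] * len(sensitivity)  # uniform starting point
    best = proxy_loss(parent, sensitivity)
    for _ in range(generations):
        children = [mutate(parent, rng) for _ in range(offspring)]
        candidate = min(children, key=lambda c: proxy_loss(c, sensitivity))
        loss = proxy_loss(candidate, sensitivity)
        if loss < best:  # keep the child only if it improves the score
            parent, best = candidate, loss
    return parent, best


# Hypothetical per-layer sensitivities (higher = more fragile layer).
sensitivity = [8.0, 1.0, 1.0, 0.5, 0.5, 4.0, 0.25, 0.25]
config, loss = search(sensitivity)
print("bits per layer:", config)
print("average bits:", sum(config) / len(config))
print("proxy loss:", loss)
```

The real pipeline differs in important ways described above: it scores candidates with KL divergence on actual model outputs and increases the number of calibration tokens across selection rounds.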

Evaluation

At matched bit budgets, the toolkit is competitive with popular dynamic baselines.

On Llama-3.1-8B near 4.9 bits, an EvoPress + I-Matrix config reaches C4 PPL 11.18 vs 11.23 for UD-Q4_K_XL with similar zero-shot scores; near 3.0 bits it improves C4 to 13.17 vs 13.75 for UD-Q2_K_XL while keeping downstream accuracy close.

On Llama-3.2-1B, GPTQ-4 beats standard Q4_K on perplexity and EvoPress variants hold accuracy at the same storage.
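To put the Llama-3.1-8B perplexity numbers above in perspective, this short calculation expresses each gap as a relative reduction against the corresponding dynamic baseline. It uses only the C4 perplexities quoted above; the helper name `relative_gap` is just for illustration.

```python
def relative_gap(ours: float, baseline: float) -> float:
    """Fractional perplexity reduction vs. the baseline (positive = lower PPL)."""
    return (baseline - ours) / baseline


# (EvoPress config, dynamic baseline) C4 perplexities quoted in the text.
results = {
    "Llama-3.1-8B, ~4.9 bits (vs UD-Q4_K_XL)": (11.18, 11.23),
    "Llama-3.1-8B, ~3.0 bits (vs UD-Q2_K_XL)": (13.17, 13.75),
}

for label, (ours, baseline) in results.items():
    print(f"{label}: {relative_gap(ours, baseline):.2%} lower C4 perplexity")
```

The gap is well under 1% near 4.9 bits and around 4% near 3.0 bits, which fits the reading that the toolkit is competitive at higher budgets and shows a clearer gain at aggressive compression.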

This is promising. I’ll definitely try it with much harder tasks and benchmarks (multilingual + long context).

The Salt

The Salt is my other newsletter that takes a more scientific approach. In The Salt, I primarily feature short reviews of recent papers (for free), detailed analyses of noteworthy publications, and articles centered on LLM evaluation.

This week in The Weekly Salt, I reviewed:

⭐mmBERT: A Modern Multilingual Encoder with Annealed Language Learning

GAPrune: Gradient-Alignment Pruning for Domain-Aware Embeddings

Single-stream Policy Optimization

That’s all for this week.

If you like reading The Kaitchup, consider sharing it with friends and coworkers (there is a 20% discount for group subscriptions).

Have a nice weekend!