Introducing Minivoc: Faster and Memory-Efficient LLMs Through Vocabulary Reduction [WIP]

From 128k to 32k tokens

More and more large language models (LLMs) are adopting a large vocabulary. For instance, recent LLMs such as Llama 3, Qwen2, and Gemma all use a vocabulary with more than 128k entries.

Expanding the vocabulary allows LLMs to better handle diverse use cases such as multilingual tasks, code generation, and function calling. A larger vocabulary also reduces tokenizer fertility, meaning fewer tokens are needed to encode a sentence. With fewer tokens to generate, inference is faster. Recent research (Tao et al., 2024) has also shown that increasing the vocabulary size together with the model size improves performance.
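To make fertility concrete, here is a minimal sketch using a toy longest-match tokenizer. The tokenizer, the two vocabularies, and the function names are all made up for illustration, and fertility is taken here as the average number of tokens per whitespace-separated word, which is one common definition. Real LLM tokenizers (BPE, for example) work differently, but the effect of vocabulary size on token count is similar in spirit.

```python
def greedy_tokenize(text, vocab):
    """Toy longest-match tokenizer (hypothetical, for illustration only).

    Each word is split into the longest pieces found in `vocab`,
    falling back to single characters when nothing matches.
    """
    tokens = []
    for word in text.split():
        i = 0
        while i < len(word):
            # Try the longest candidate first; a single character always matches.
            for j in range(len(word), i, -1):
                if word[i:j] in vocab or j == i + 1:
                    tokens.append(word[i:j])
                    i = j
                    break
    return tokens


def fertility(text, vocab):
    """Average number of tokens per whitespace-separated word."""
    return len(greedy_tokenize(text, vocab)) / len(text.split())


sentence = "the tokenizer encodes text"
small_vocab = {"the", "to", "en", "text"}
large_vocab = small_vocab | {"tokenizer", "encodes"}

print("small vocabulary:", fertility(sentence, small_vocab))
print("large vocabulary:", fertility(sentence, large_vocab))
```

With the larger vocabulary, every word maps to a single token, so the same sentence needs far fewer tokens.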

However, a larger vocabulary isn't without significant drawbacks. For example, if an LLM is intended for English-only chat applications, most of the non-English tokens, added to cover other languages, are rarely, if ever, used. Despite this, they still consume computational resources, as the model must predict their probability at every decoding step. Additionally, their embeddings occupy memory, and the output logits, which have one entry per vocabulary token, lead to significantly larger activations during inference and fine-tuning.
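As a back-of-the-envelope illustration of the activation cost, the sketch below computes the size of the logits tensor, which holds one value per vocabulary entry for every token position. The batch size, sequence length, float32 precision, and the 128,256-entry vocabulary (the size I assume for Llama 3) are example values, and `logits_bytes` is a hypothetical helper, not part of any library.

```python
def logits_bytes(batch_size: int, seq_len: int, vocab_size: int,
                 bytes_per_value: int = 4) -> int:
    """Size in bytes of a (batch, seq_len, vocab) logits tensor.

    bytes_per_value=4 assumes float32 logits (an assumption for this example).
    """
    return batch_size * seq_len * vocab_size * bytes_per_value


GIB = 1024 ** 3

# Assumed example workload: 4 sequences of 2,048 tokens each.
for vocab_size in (128_256, 32_000):
    size = logits_bytes(batch_size=4, seq_len=2048, vocab_size=vocab_size)
    print(f"vocab={vocab_size:>7,}: {size / GIB:.2f} GiB of logits")
```

During fine-tuning, the gradient with respect to the logits has the same shape, so the actual cost is typically higher than this single tensor.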

Reducing the vocabulary would shrink the model and enhance memory efficiency for both inference and fine-tuning. This would be even more significant when the model is quantized since most quantization methods avoid quantizing token embeddings and the language modeling head to preserve accuracy.
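To give a rough idea of the numbers, here is a small sketch of the arithmetic. It assumes a Llama 3.1 8B-like configuration (hidden size 4,096, vocabulary of 128,256 entries, untied input embeddings and language modeling head) and roughly 7 billion remaining parameters; the helper names and the 4-bit/16-bit split are illustrative assumptions, not measurements.

```python
def vocab_params(vocab_size: int, hidden_size: int, tied: bool = False) -> int:
    """Parameters in the token embeddings plus the language modeling head."""
    matrices = 1 if tied else 2  # tied models share one matrix for both
    return matrices * vocab_size * hidden_size


def model_bytes(vocab_size, hidden_size, other_params,
                other_bits=4, vocab_bits=16):
    """Bytes used by the vocabulary matrices and by the rest of the model.

    Assumes the rest of the model is quantized to `other_bits` while the
    embeddings and LM head stay at `vocab_bits` (an assumption for this sketch).
    """
    vocab_b = vocab_params(vocab_size, hidden_size) * vocab_bits / 8
    other_b = other_params * other_bits / 8
    return vocab_b, other_b


HIDDEN_SIZE = 4096            # assumed, Llama 3.1 8B-like
OTHER_PARAMS = 7_000_000_000  # rough assumption for all non-vocabulary weights

for vocab_size in (128_256, 32_000):
    vocab_b, other_b = model_bytes(vocab_size, HIDDEN_SIZE, OTHER_PARAMS)
    share = vocab_b / (vocab_b + other_b)
    print(f"vocab={vocab_size:>7,}: "
          f"{vocab_params(vocab_size, HIDDEN_SIZE) / 1e6:.0f}M vocab params, "
          f"{vocab_b / 1e9:.2f} GB unquantized, "
          f"{share:.0%} of the 4-bit model")
```

Under these assumptions, the unquantized vocabulary matrices account for a large share of a 4-bit model, which is why shrinking the vocabulary matters even more after quantization.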

Nonetheless, reducing the size of the vocabulary is not without challenges. It can severely damage the model’s performance in language generation tasks.

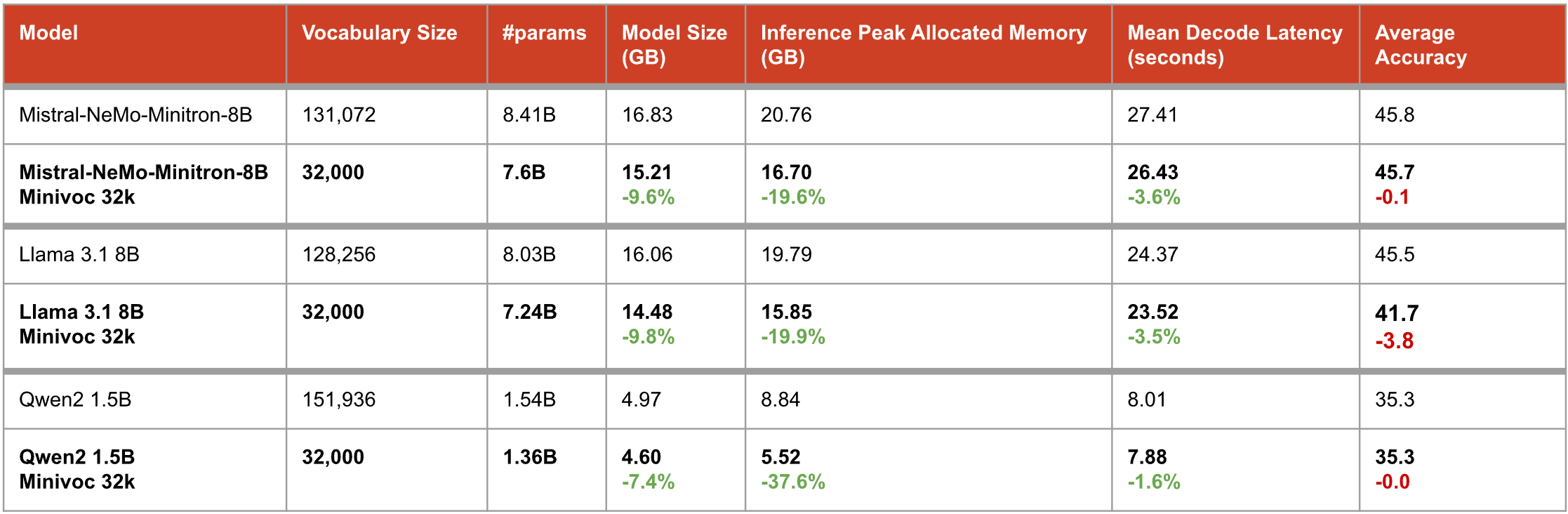

In this article, I introduce Minivoc, a cheap method for reducing the vocabulary of LLMs, for instance, from 128,000 tokens to 32,000 tokens. Minivoc makes models significantly smaller and slightly faster while maintaining their accuracy. I have applied Minivoc to three models: Mistral NeMo Minitron 8B, Llama 3.1 8B, and Qwen2-1.5B.

Note that this is:

- still a work in progress (better checkpoints are coming)
- *not* a scientific paper (the method is still extremely simple and naive, but I believe it already works well enough to be useful)

I prioritized keeping Minivoc extremely cost-effective. It is designed to be applicable to 8B models with one consumer GPU in less than 24 hours.

Preliminary results:

The Minivoc models are still undergoing training. As you can see in Table 1, the Minivoc version of Llama 3.1 still has to recover some accuracy. More accurate and even smaller Minivoc checkpoints with a 16k vocabulary are coming next month.

Preliminary checkpoints for the Minivoc models are available here:

- kaitchup/Mistral-NeMo-Minitron-8B-Base-Minivoc-32k-v0.1a (for The Kaitchup Pro subscribers only)
- kaitchup/Llama-3.1-8B-Minivoc-32k-v0.1a (for The Kaitchup Pro subscribers only)
- kaitchup/Qwen2-1.5B-Minivoc-32k-v0.1a (public, Apache 2.0 license)

Note that these models are "base" LLMs. I don’t have the budget yet to train instruct/chat versions.

The remainder of this article outlines the full process for creating Minivoc models, presents further results, and highlights my ongoing work to improve the method.

I am very grateful to The Kaitchup Pro subscribers, whose generous support has made this work possible.

By becoming a Pro subscriber, you can gain early access to my research and help fund further developments.

The methods and models discussed below will remain in limited access for at least a few weeks while I focus on optimizations, training additional baseline systems, and conducting ablation experiments to better understand the results. Once this phase is finished, I plan to make all the models and findings publicly available for free under an Apache 2.0 license. If this research yields enough contributions, I may even publish a paper.

This is still an ongoing project. Kaitchup Pro subscribers will receive notifications of major updates and the release of new exclusive Minivoc checkpoints as they become available.