How Good is NVFP4 for Reasoning and with Small Models?

And should you care about the calibration step?

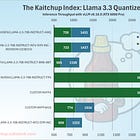

In a previous article, we saw that NVFP4 is about as accurate as other quantization techniques. Its main advantage is native hardware acceleration on Blackwell GPUs, which enables more than 2x faster inference.

In those experiments, I evaluated NVFP4 only on Llama 3.3 70B, and only with relatively short sequences (fewer than 2,500 tokens). NVIDIA’s own results indicate that NVFP4 also works well on very large models and on reasoning tasks, performing slightly below FP8 in these configurations. That’s notable because reasoning typically produces very long sequences, and with quantized activations, errors tend to accumulate with each newly generated token.
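To see where that per-token error comes from, here is a minimal pure-Python sketch of NVFP4 fake quantization (quantize, then immediately dequantize). It assumes the format as NVIDIA publicly describes it: FP4 E2M1 values grouped in blocks of 16, one FP8 E4M3 scale per block, and one FP32 scale per tensor. All function names are hypothetical, rounding ties in `round_to_e2m1` go to the smaller magnitude for simplicity, and real kernels do this in hardware, not in Python.

```python
import math
import random

# Magnitudes representable by FP4 E2M1 (1 sign, 2 exponent, 1 mantissa bit).
E2M1_VALUES = [0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0]
E2M1_MAX = 6.0
E4M3_MAX = 448.0   # largest finite FP8 E4M3 value
BLOCK_SIZE = 16    # NVFP4 shares one scale per 16 values


def round_to_e2m1(x: float) -> float:
    """Nearest signed E2M1 value; ties go to the smaller magnitude, |x| > 6 saturates."""
    mag = min(abs(x), E2M1_MAX)
    nearest = min(E2M1_VALUES, key=lambda v: abs(v - mag))
    return math.copysign(nearest, x)


def round_to_e4m3(x: float) -> float:
    """Round a non-negative scale to FP8 E4M3 (3 mantissa bits), saturating at 448."""
    if x <= 0.0:
        return 0.0
    exp = max(math.floor(math.log2(x)), -6)  # -6 is the smallest normal exponent
    step = 2.0 ** (exp - 3)                  # spacing between E4M3 values at this exponent
    return min(round(x / step) * step, E4M3_MAX)


def nvfp4_fake_quant(values, global_amax):
    """Quantize, then dequantize, a flat list of floats with two-level NVFP4 scaling."""
    if global_amax <= 0.0:
        return [0.0] * len(values)
    global_decode = global_amax / (E2M1_MAX * E4M3_MAX)  # per-tensor FP32 scale
    out = []
    for start in range(0, len(values), BLOCK_SIZE):
        block = values[start:start + BLOCK_SIZE]
        block_amax = max(abs(v) for v in block)
        # Per-block scale maps block_amax onto 6, then is stored in FP8 E4M3.
        scale = round_to_e4m3(block_amax / E2M1_MAX / global_decode) * global_decode
        if scale == 0.0:  # all-zero block (or scale underflow)
            out.extend(0.0 for _ in block)
        else:
            out.extend(round_to_e2m1(v / scale) * scale for v in block)
    return out


# Usage: quantize 64 Gaussian "activations" and measure the relative RMS error.
random.seed(0)
x = [random.gauss(0.0, 1.0) for _ in range(64)]
x_hat = nvfp4_fake_quant(x, global_amax=max(abs(v) for v in x))
rel_err = math.sqrt(sum((a - b) ** 2 for a, b in zip(x, x_hat)) / sum(a * a for a in x))
print(f"relative RMS error: {rel_err:.3f}")
```

Because each block's scale is set by its largest value, a single outlier coarsens the quantization grid for the other 15 values in its block, which is why activation outliers are a concern for 4-bit formats.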

But does NVFP4 remain accurate with much smaller models, especially on reasoning tasks?

Quantization often causes a noticeable drop in downstream accuracy for smaller models.

In this article, I set out to answer that question so that you know whether it’s safe to use NVFP4 with small models on Blackwell GPUs. I first evaluated NVFP4 on all Qwen3 models from 0.6B to 8B parameters. Then, I tested Qwen3-1.7B and Qwen3-4B on math-reasoning benchmarks such as MATH-500 and AIME. Finally, I varied the calibration datasets and sequence lengths to see whether calibration choices improve accuracy.
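Why would calibration matter for NVFP4 at all? Here is a minimal sketch of one mechanism. It assumes, as I understand NVIDIA’s TensorRT Model Optimizer recipe, that the per-tensor activation scale is fixed offline from the largest absolute value seen on the calibration data, while per-block scales are computed at inference time. It also assumes the FP8 block scale saturates at 448 and, for brevity, skips FP8 rounding. The helper names are hypothetical; this illustrates the mechanism and is not the calibration code used for these experiments.

```python
E2M1_MAX = 6.0    # largest FP4 E2M1 magnitude
E4M3_MAX = 448.0  # largest finite FP8 E4M3 magnitude


def calibrate_amax(batches):
    """Largest absolute activation observed over all calibration batches."""
    return max(abs(v) for batch in batches for v in batch)


def block_max_after_scaling(block_amax, calib_amax):
    """Largest magnitude a block can still represent once the per-tensor scale is fixed.

    Sketch only: the FP8 block scale is kept unrounded and assumed to saturate at 448.
    """
    global_decode = calib_amax / (E2M1_MAX * E4M3_MAX)  # static, set at calibration
    block_scale = min(block_amax / E2M1_MAX / global_decode, E4M3_MAX)
    return E2M1_MAX * block_scale * global_decode


calib_amax = calibrate_amax([[0.3, -2.0, 1.1], [4.0, -0.7]])
print(calib_amax)                                 # 4.0
print(block_max_after_scaling(3.0, calib_amax))   # ~3.0: fits, no clipping
print(block_max_after_scaling(10.0, calib_amax))  # ~4.0: clipped to calib_amax
```

Under these assumptions, the calibrated maximum acts as a clipping threshold for activations at inference time. Calibration data that never exposes the largest activations could set it too low and clip outliers, while an overly large value would push ordinary blocks’ scales toward the subnormal end of the FP8 range, where relative precision drops. Whether this matters in practice is an empirical question.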

Here is the code I used to calibrate NVFP4 with a specified minimum sequence length: