GGUF Quantization for Fast and Memory-Efficient Inference on Your CPU

How to quantize and run GGUF LLMs with llama.cpp

Quantization of large language models (LLMs) with GPTQ and AWQ yields smaller LLMs while preserving most of their accuracy in downstream tasks. These quantized LLMs can also be fast during inference when using a GPU, especially with optimized CUDA kernels and an efficient backend, e.g., ExLlamaV2 for GPTQ.

However, GPTQ and AWQ implementations are not optimized for CPU inference. Most implementations can’t even offload parts of GPTQ/AWQ quantized LLMs to the CPU RAM when the GPU doesn’t have enough VRAM. In other words, inference will be extremely slow if the model is still too large to be loaded in the GPU VRAM after quantization.

A popular alternative is to use llama.cpp to quantize LLMs with the GGUF format. With this approach, quantized LLMs can run very fast on the CPU.

In this article, we will see how to easily quantize LLMs and convert them to the GGUF format using llama.cpp. This method supports many LLM architectures, such as Llama 3, Qwen2, and Google’s Gemma 2. GGUF quantization runs on consumer hardware and doesn’t require a GPU, although a GPU can make it faster.

The following notebook implements GGUF quantization for recent LLMs and inference with llama.cpp:

Last update: September 4th, 2024; The code has been updated in the article (below) and the notebook to be compatible with the current version of llama.cpp.

GGUF Quantization in a Nutshell

GGUF is an advanced binary file format for efficient storage and inference with GGML, a tensor library for machine learning written in C. It’s also designed for rapid model loading.

Update (August 20th, 2024): The author of llama.cpp wrote a blog post explaining GGML and how it is coded. If you know a bit of C, I recommend this reading: Introduction to ggml

GGUF is designed so that all the essential information for model loading is encapsulated within a single file. The tokenizer and the metadata necessary to run the model are stored in the GGUF file. Most LLMs can be easily converted to GGUF: Llama, RWKV, Falcon, Mixtral, and many more are supported.

What is even more interesting is that GGUF also supports quantization to lower precisions: 1.5-bit, 2-bit, 3-bit, 4-bit, 5-bit, 6-bit, and 8-bit integer quantization are supported to get models that are both fast and memory-efficient on a CPU. This is often called “GGUF quantization” but technically GGUF is not a quantization method. It’s a file format.
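Because GGUF is a binary file format, its header can be inspected with a few lines of Python. The following is a minimal sketch, assuming the header layout used by GGUF version 2 and later (a 4-byte magic "GGUF", a little-endian uint32 version, then two uint64 counters for tensors and metadata key-value pairs). The function name read_gguf_header is hypothetical and not part of llama.cpp:

```python
import struct


def read_gguf_header(path):
    """Read the fixed-size part of a GGUF header (assumed v2+ layout)."""
    with open(path, "rb") as f:
        header = f.read(24)  # 4 (magic) + 4 (version) + 8 + 8 (counters)
    if len(header) < 24 or header[:4] != b"GGUF":
        raise ValueError(f"{path} does not look like a GGUF file")
    # "<" = little-endian without padding, I = uint32, Q = uint64
    version, tensor_count, kv_count = struct.unpack("<IQQ", header[4:])
    return {
        "version": version,
        "tensor_count": tensor_count,
        "metadata_kv_count": kv_count,
    }
```

The metadata key-value pairs that follow this header are where information such as the tokenizer and the quantization type is stored.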

The quantization code behind llama.cpp is complex (more than 10k lines of C code…) to support these numerous LLM architectures. It also has important hyperparameters that impact the model’s size and performance. They are explained here:

https://github.com/ggerganov/llama.cpp/pull/1684

It uses a block-wise quantization algorithm and there are two main types of quantization: 0 and 1.

In "type-0", weights w are obtained from quants q using w = d * q, where d is the block scale. In "type-1", weights are given by w = d * q + m, where m is the block minimum.
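To make the two formulas concrete, here is a simplified pure-Python sketch of block-wise quantization for a single block of weights. It is an illustration under stated assumptions, not llama.cpp's actual kernels: the rounding scheme, the symmetric range used for type 0, and all function names are my own choices.

```python
def quantize_type0(block, bits=4):
    """Type 0 (assumed symmetric): w is approximated by d * q."""
    qmax = 2 ** (bits - 1) - 1            # 7 for 4-bit
    amax = max(abs(w) for w in block)
    d = amax / qmax if amax > 0 else 1.0  # block scale
    q = [max(-qmax, min(qmax, round(w / d))) for w in block]
    return d, q


def dequantize_type0(d, q):
    return [d * qi for qi in q]


def quantize_type1(block, bits=4):
    """Type 1: w is approximated by d * q + m, with m the block minimum."""
    levels = 2 ** bits - 1                # 15 for 4-bit
    m = min(block)
    span = max(block) - m
    d = span / levels if span > 0 else 1.0
    q = [max(0, min(levels, round((w - m) / d))) for w in block]
    return d, m, q


def dequantize_type1(d, m, q):
    return [d * qi + m for qi in q]


# A toy block of 8 weights (real blocks are larger).
block = [0.12, -0.80, 0.33, 0.05, -0.41, 0.77, -0.06, 0.29]
d0, q0 = quantize_type0(block)
d1, m1, q1 = quantize_type1(block)
print("type 0:", q0, dequantize_type0(d0, q0))
print("type 1:", q1, dequantize_type1(d1, m1, q1))
```

Type 1 stores one extra value per block (the minimum m), which is why it is slightly larger but can follow asymmetric weight distributions more closely.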

Using 0 or 1 will slightly affect the size of the model and its accuracy. A model quantized with GGUF will usually have the quantization information in its name, e.g., Q4_0 means that the model is quantized to 4-bit (INT4) with type 0, while Q5_1 indicates that the model is quantized to 5-bit (INT5) with type 1. Many more types are supported but poorly documented (you might find the information you need only by reading the C code). Quantization methods with mixed precision are also available.

The main types, 0 and 1, will fit most scenarios in my opinion. In terms of accuracy and model size, they are very similar to GPTQ.

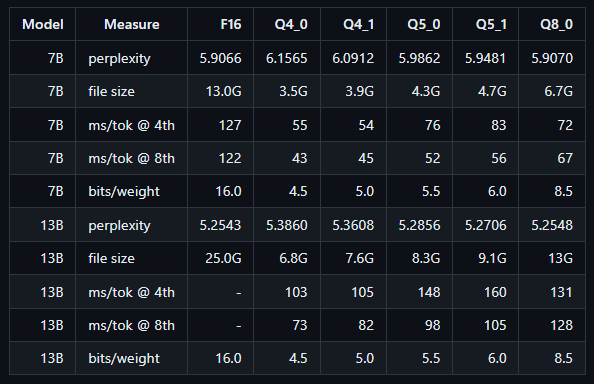

To give you an idea of the impact of using type 1 or 0 for quantization, llama.cpp published these results:

Note: These numbers might be slightly different with the current implementation of the quantization.

As we can see in this table, type 0 produces smaller models but achieves a higher (i.e., worse) perplexity than type 1. If you have enough memory, type 1 is better.
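The size difference between the two types can be estimated with simple arithmetic. The sketch below assumes blocks of 32 weights with a 16-bit scale and, for type 1, an additional 16-bit minimum, which is my understanding of the basic Q4_0 and Q4_1 layouts. It ignores file metadata and any tensors kept at a different precision, so treat the results as rough estimates. The function names are hypothetical:

```python
def bits_per_weight(bits, type1=False, block_size=32, scale_bits=16):
    """Effective bits per weight, including per-block overhead (assumed layout)."""
    # Type 0 stores one scale per block; type 1 stores a scale and a minimum.
    overhead = scale_bits * (2 if type1 else 1)
    return (bits * block_size + overhead) / block_size


def approx_size_gb(n_params, bpw):
    """Rough model size in gigabytes for n_params weights at bpw bits each."""
    return n_params * bpw / 8 / 1e9


for name, is_type1 in [("type 0", False), ("type 1", True)]:
    bpw = bits_per_weight(4, type1=is_type1)
    print(name, bpw, "bits/weight,", approx_size_gb(1.8e9, bpw), "GB")
```

Under these assumptions, 4-bit type 0 costs 4.5 bits per weight and 4-bit type 1 costs 5.0, so type 1 is about 11% larger.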

Fast GGUF Quantization with llama.cpp

The quantization method presented in this section is compatible with the following LLM architectures: GPTNeoX, Baichuan, MPT, Falcon, BLOOM, GPTBigCode, GPTRefact, Persimmon, StableLM, Qwen, Qwen2, Mixtral, GPT2, Phi, Plamo, CodeShell, InternLM2, Orion, BERT, NomicBERT, Nomic, Gemma, Llama, and many more.

First, we have to install llama.cpp:

!git clone https://github.com/ggerganov/llama.cpp

!cd llama.cpp && GGML_CUDA=1 make && pip install -r requirements.txt

Note: I set GGML_CUDA=1 to use the GPU for quantization. If you don’t have a GPU, remove it.

For demonstration, I will quantize Qwen1.5 1.8B to 4-bit using the type 0 described in the previous section. This quantization format is denoted q4_0. Other valid formats include q2_k, q3_k_m, q4_k_m, q5_0, q5_k_m, q6_k, and q8_0, where the number is the bit width.
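As a small illustration of this naming convention, the nominal bit width can be read directly from the format name. This helper is hypothetical (it is not part of llama.cpp), and the value it returns is only nominal: the effective number of bits per weight is typically somewhat higher because each block also stores scales.

```python
import re


def nominal_bits(fmt):
    """Extract the nominal bit width from a name such as 'q4_k_m' or 'Q8_0'."""
    match = re.match(r"q(\d+)_", fmt.lower())
    if match is None:
        raise ValueError(f"unrecognized quantization format: {fmt}")
    return int(match.group(1))


for fmt in ["q2_k", "q3_k_m", "q4_0", "q4_k_m", "q5_0", "q5_k_m", "q6_k", "q8_0"]:
    print(fmt, "->", nominal_bits(fmt), "bits")
```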

Let’s set the following variables:

import os

from huggingface_hub import snapshot_download

model_name = "Qwen/Qwen1.5-1.8B"

#methods = ['q2_k', 'q3_k_m', 'q4_0', 'q4_k_m', 'q5_0', 'q5_k_m', 'q6_k', 'q8_0'] #examples of quantization formats

methods = ['q4_0']

base_model = "./original_model/"

quantized_path = "./quantized_model/"

model_name: the model name on the Hugging Face Hub.

methods: a list of quantization methods that you want to run. One file will be created for each method in this list.

base_model: the local directory in which the model to quantize will be stored.

quantized_path: the local directory in which the quantized model will be stored.

First, we must download the model to quantize. We can do this with “snapshot_download” from the huggingface_hub library:

snapshot_download(repo_id=model_name, local_dir=base_model, local_dir_use_symlinks=False)

original_model = quantized_path+'/FP16.gguf'

Then, we have to convert this model to the GGUF format, in FP16, before quantization. We can do this with the script convert_hf_to_gguf.py provided by llama.cpp:

mkdir ./quantized_model/

python llama.cpp/convert_hf_to_gguf.py ./original_model/ --outtype f16 --outfile ./quantized_model/FP16.gguf

Finally, we can proceed with the quantization:

for m in methods:
    qtype = f"{quantized_path}/{m.upper()}.gguf"
    os.system("./llama.cpp/llama-quantize "+quantized_path+"/FP16.gguf "+qtype+" "+m)

It should only take a few minutes.
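Note that os.system does not stop the loop if a quantization fails. As a hedged alternative, the same loop can be sketched with subprocess.run and check=True, which raises an exception when llama-quantize exits with an error. The helper names below are hypothetical; the binary path and the argument order (input file, output file, format) are the ones used in this article:

```python
import os
import subprocess

LLAMA_QUANTIZE = "./llama.cpp/llama-quantize"  # binary path used in this article


def build_quantize_command(quantized_path, method, source_name="FP16.gguf"):
    """Build the llama-quantize argument list for one quantization format."""
    source = os.path.join(quantized_path, source_name)
    target = os.path.join(quantized_path, f"{method.upper()}.gguf")
    return [LLAMA_QUANTIZE, source, target, method]


def quantize_all(quantized_path, methods):
    """Run llama-quantize once per format and stop at the first failure."""
    for method in methods:
        subprocess.run(build_quantize_command(quantized_path, method), check=True)
```

Calling quantize_all(quantized_path, methods) with the variables defined earlier would then replace the loop above.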

To test the quantized model, you can run llama.cpp. It’s very fast and will only use the CPU:

./llama.cpp/llama-cli -m ./quantized_model/Q4_0.gguf -n 90 --repeat_penalty 1.0 --color -i -r "User:" -f llama.cpp/prompts/chat-with-bob.txt

Note: -r "User:" and -f llama.cpp/prompts/chat-with-bob.txt are not optimal for a Qwen base model. Modify them to match your model’s prompt template.

In my experiments, this quantized model reached a throughput of around 20 tokens/second. This is fast considering that Google Colab uses a very old CPU (Haswell architecture with only 2 cores available).

Conclusion

llama.cpp is one of the most widely used frameworks for quantizing LLMs. It’s much faster for quantization than other methods such as GPTQ and AWQ, and it produces a GGUF file containing the quantized model and everything it needs for inference (e.g., its tokenizer).

llama.cpp is also very well optimized for running models on the CPU. If you don’t have a GPU with enough memory to run your LLMs, using llama.cpp is a good alternative.
