Fine-Tuning Gemma 3 on Your Computer with LoRA and QLoRA (+model review)

The efficiency of global-local attention with QK-Norm and no more soft-capping

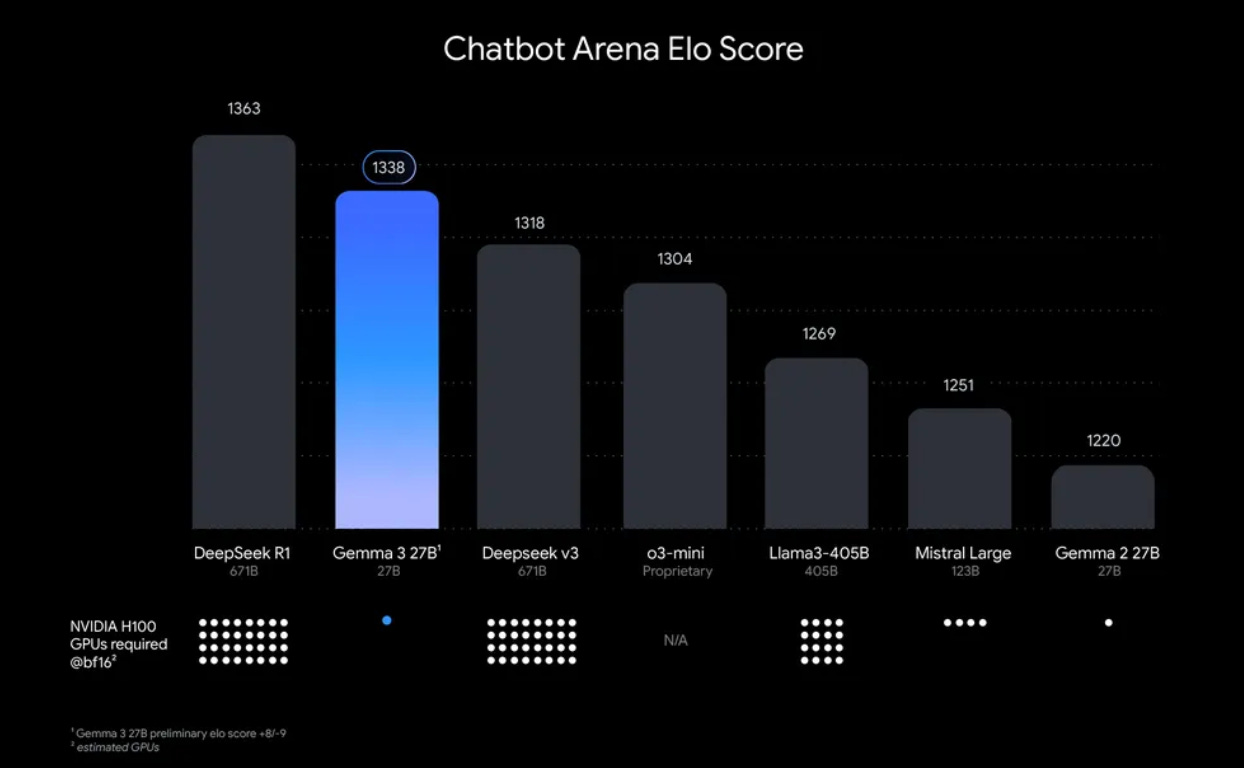

Google introduced Gemma 3, the next iteration of its Gemma model series. These models perform strongly against other open models of similar size, and the 27B variant is arguably the most capable open LLM under 100B parameters.

To achieve this, Google revised the architecture to improve both accuracy and efficiency. Gemma 3 is also natively multimodal: the 4B, 12B, and 27B variants accept both text and image inputs, while the 1B variant is text-only.

In this article, we take a close look at the key architectural changes in Gemma 3 and how they affect performance, efficiency, and usability, covering both their strengths and their limitations. Beyond the architecture, I provide a hands-on guide, with code, for fine-tuning Gemma 3 on a 24GB GPU, along with best practices for optimizing the process. We also analyze memory consumption and the key factors that influence training efficiency, paying particular attention to important fine-tuning settings: model parameters, quantization options, and tokenizer considerations.
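A quick back-of-the-envelope calculation helps explain why quantization matters on a 24GB GPU. The sketch below counts only the memory needed to store the model weights, assuming nominal parameter counts (27e9 for "27B", and so on) and the usual bytes per parameter for each precision. It deliberately ignores activations, the LoRA adapters and their optimizer states, quantization scale overhead, and framework overhead, so real usage will be higher. The helper name `weight_memory_gb` is hypothetical and used only for illustration.

```python
# Rough estimate of the memory needed just to hold model weights.
# Assumptions: nominal parameter counts (real counts differ slightly), and
# 1 GB = 1e9 bytes. Activations, LoRA adapter/optimizer states, quantization
# scales, and CUDA/framework overhead are NOT included.

BYTES_PER_PARAM = {
    "bf16": 2.0,  # 16-bit floating point, the usual full-precision loading
    "int8": 1.0,  # 8-bit quantization
    "4bit": 0.5,  # 4-bit quantization (as in QLoRA), ignoring per-block scales
}


def weight_memory_gb(num_params: float, dtype: str) -> float:
    """Return the memory (in GB) needed to store num_params weights in dtype."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


if __name__ == "__main__":
    gpu_gb = 24  # GPU memory budget targeted in this article
    for size_b in (1, 4, 12, 27):
        params = size_b * 1e9
        cells = []
        for dtype in ("bf16", "int8", "4bit"):
            gb = weight_memory_gb(params, dtype)
            # Weights that fill the whole GPU leave no room for training.
            status = "fits" if gb < gpu_gb else "does not fit"
            cells.append(f"{dtype}: {gb:5.1f} GB ({status})")
        print(f"{size_b:>2}B -> " + ", ".join(cells))
```

Under these assumptions, the 27B model needs about 54 GB for its weights in bf16 but roughly 13.5 GB in 4-bit, which suggests why 4-bit loading (QLoRA) is needed to fine-tune the larger variants on a single 24GB GPU, while LoRA on 16-bit weights is practical for the smaller ones.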

The notebook for fine-tuning Gemma 3 is available here: