Fine-tuning Base LLMs vs. Fine-tuning Their Instruct Version

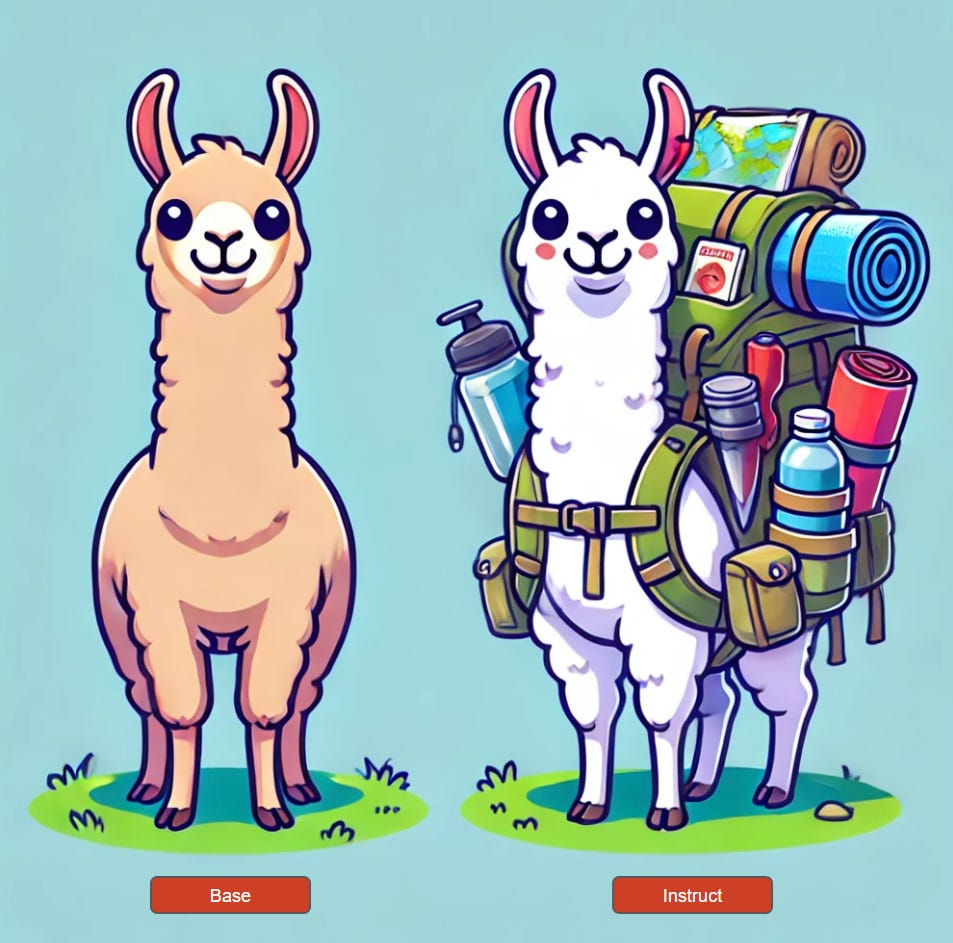

Should you fine-tune Llama 3 or Llama 3 Instruct? Gemma 2 or Gemma 2 it (the instruction-tuned version)?

Instruct large language models (LLMs) are specialized versions of base LLMs that have been fine-tuned on instruction datasets. These datasets consist of pairs of instructions or questions and corresponding answers, which are either written by humans or generated by AI. Instruct LLMs are commonly used in chat applications, with GPT-4 being a prominent example.
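To make the notion of an "instruction dataset" concrete, here is a minimal sketch of how instruction/answer pairs are commonly turned into chat-style conversations, i.e., lists of messages with a `role` and a `content`. This role/content layout is the one used by Hugging Face chat templates. The record keys `instruction` and `answer` and the example records themselves are hypothetical; real datasets use various column names.

```python
from typing import TypedDict


class Message(TypedDict):
    role: str
    content: str


# Hypothetical instruction dataset: each record pairs an instruction with its answer.
records = [
    {"instruction": "Translate 'bonjour' into English.", "answer": "Hello."},
    {"instruction": "What is the capital of Japan?", "answer": "Tokyo."},
]


def to_messages(record: dict[str, str]) -> list[Message]:
    """Convert one instruction/answer pair into a two-turn conversation."""
    return [
        {"role": "user", "content": record["instruction"]},
        {"role": "assistant", "content": record["answer"]},
    ]


conversations = [to_messages(r) for r in records]
print(conversations[1])
```

Each conversation is then rendered into a single training string using the model's chat template.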

Instruct LLMs go through one or more post-training stages, such as supervised fine-tuning (SFT), reinforcement learning from human feedback (RLHF), and/or Direct Preference Optimization (DPO). Through these stages, the models learn to respond more effectively to human prompts by following a specific format, or chat template, often delimited by special tokens added to their vocabulary.
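As an illustration of what such a chat template looks like, the sketch below renders a conversation into the Llama 3 Instruct prompt format by hand. The special tokens (`<|begin_of_text|>`, `<|start_header_id|>`, `<|end_header_id|>`, `<|eot_id|>`) follow the published Llama 3 Instruct format as I understand it. The function `render_llama3` is hypothetical; in practice, you would rely on the template shipped with the tokenizer through `tokenizer.apply_chat_template` in Hugging Face Transformers rather than rebuilding it manually.

```python
def render_llama3(messages: list[dict[str, str]], add_generation_prompt: bool = True) -> str:
    """Hypothetical hand-written renderer mimicking the Llama 3 Instruct chat template."""
    parts = ["<|begin_of_text|>"]
    for m in messages:
        # Each turn: a role header, a blank line, the content, then an end-of-turn token.
        parts.append(
            f"<|start_header_id|>{m['role']}<|end_header_id|>\n\n"
            f"{m['content'].strip()}<|eot_id|>"
        )
    if add_generation_prompt:
        # Open an assistant turn so the model generates the reply next.
        parts.append("<|start_header_id|>assistant<|end_header_id|>\n\n")
    return "".join(parts)


prompt = render_llama3([
    {"role": "system", "content": "You are a helpful assistant."},
    {"role": "user", "content": "What is the capital of Japan?"},
])
print(prompt)
```

An instruct model fine-tuned on prompts of this shape learns to emit its answer followed by `<|eot_id|>`, which is why it expects the same layout at inference time.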

As a result, instruct LLMs produce outputs formatted according to the templates they learned during fine-tuning, whereas base LLMs simply continue the input text by predicting the next token, without any learned conversational format.
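The difference shows up directly in how prompts are built. In this minimal sketch, a base model receives plain text that it is expected to continue, while Gemma 2 it receives a prompt wrapped in its own turn markers (`<start_of_turn>`, `<end_of_turn>`, with the assistant role named `model`). Both functions are hypothetical: the `Question:`/`Answer:` format is just one arbitrary choice for a base model, and the Gemma 2 format reflects my understanding of its published template (which, unlike Llama 3, does not accept a system role).

```python
def base_prompt(question: str) -> str:
    # Hypothetical plain-text format: the base model should continue after "Answer:".
    return f"Question: {question}\nAnswer:"


def render_gemma2_it(messages: list[dict[str, str]], add_generation_prompt: bool = True) -> str:
    """Hypothetical hand-written renderer mimicking the Gemma 2 it chat template."""
    role_map = {"user": "user", "assistant": "model"}  # Gemma calls the assistant "model"
    parts = ["<bos>"]
    for m in messages:
        if m["role"] not in role_map:
            raise ValueError(f"Unsupported role for Gemma 2 it: {m['role']}")
        parts.append(f"<start_of_turn>{role_map[m['role']]}\n{m['content'].strip()}<end_of_turn>\n")
    if add_generation_prompt:
        parts.append("<start_of_turn>model\n")
    return "".join(parts)


question = "What is the capital of Japan?"
print(base_prompt(question))
print(render_gemma2_it([{"role": "user", "content": question}]))
```

The two instruct templates use entirely different special tokens, so each instruct model only behaves as intended when prompted with the exact template it was trained on.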

When we want to fine-tune an LLM on our own data, these differences raise an important question: Should we fine-tune the base version or the instruct version?

In this article, we will first explore the process of creating instruct LLMs. Then, we will discuss the potential drawbacks of fine-tuning an instruct model and why fine-tuning base models is almost always preferable.

The following notebook implements fine-tuning and inference examples for both types of models: