Fast and Small Llama 3 with Activation-Aware Quantization (AWQ)

Better, faster, and simpler than GPTQ quantization

Note: This article and the notebook were originally written for Llama 2. Following the release of Llama 3, I revised the article and released a new notebook showing how to quantize Llama 3 using AWQ.

Most state-of-the-art large language models (LLMs) are too large to be loaded on a consumer GPU. LLMs with more than 12 billion fp16 parameters can’t be loaded on a high-end GPU with 24 GB of VRAM.
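The 12-billion-parameter threshold comes from simple arithmetic. An fp16 parameter takes 2 bytes, so the weights alone need 2 bytes times the parameter count. The helper below uses a hypothetical name and decimal gigabytes (1 GB = 1e9 bytes). It deliberately ignores activations, the KV cache, framework overhead, and the small overhead of quantization scales, so real memory requirements are somewhat higher.

```python
def weight_memory_gb(n_params: float, bits_per_param: int) -> float:
    """Memory needed to store the weights alone, in decimal gigabytes (1 GB = 1e9 bytes)."""
    return n_params * bits_per_param / 8 / 1e9


# 12B parameters in fp16 (16 bits each) already need 24 GB for the weights,
# leaving essentially no headroom on a 24 GB card for activations or the KV cache.
print(weight_memory_gb(12e9, 16))  # 24.0
# The same model with 4-bit weights needs about a quarter of that.
print(weight_memory_gb(12e9, 4))   # 6.0
```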

One way to reduce the size of an LLM is quantization. This is an active research area, and two algorithms became very popular in 2023: GPTQ and bitsandbytes nf4. Both are effective at reducing the size of LLMs while preserving most of their performance on downstream tasks.

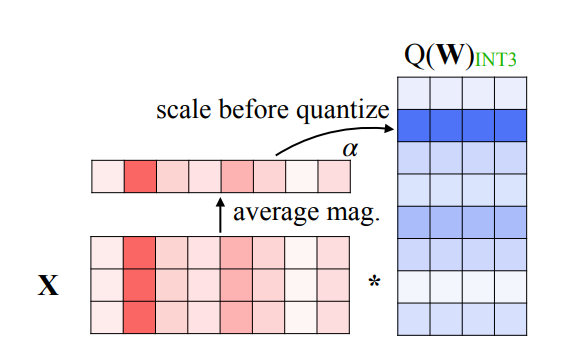
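To make "reducing the size" concrete, here is a minimal sketch of the simplest weight quantization scheme: round-to-nearest (RTN) with one scale and one zero point per group of weights. Storing 4-bit integer codes plus a scale and zero point per group, instead of 16-bit floats, is what shrinks the model. This is neither GPTQ nor nf4: GPTQ additionally compensates rounding errors using approximate second-order information, and nf4 uses a non-uniform 4-bit grid. The function names and the weight values are hypothetical.

```python
def quantize_group(weights, n_bits=4):
    """Asymmetric round-to-nearest (RTN) quantization of one group of weights.

    Returns integer codes in [0, 2**n_bits - 1], plus the scale and zero point
    needed to map them back to floating point.
    """
    q_max = 2 ** n_bits - 1
    w_min, w_max = min(weights), max(weights)
    scale = (w_max - w_min) / q_max or 1.0  # fall back to 1.0 for a constant group
    zero = round(-w_min / scale)
    codes = [min(max(round(w / scale) + zero, 0), q_max) for w in weights]
    return codes, scale, zero


def dequantize_group(codes, scale, zero):
    """Map integer codes back to approximate floating-point weights."""
    return [(c - zero) * scale for c in codes]


# Hypothetical group of 8 weights; practical schemes often use groups of 64 or 128.
weights = [0.12, -0.50, 0.33, 0.01, -0.27, 0.45, -0.08, 0.20]
codes, scale, zero = quantize_group(weights)
restored = dequantize_group(codes, scale, zero)
print(codes)
print(max(abs(w - r) for w, r in zip(weights, restored)))  # roughly bounded by scale / 2
```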

Yet, they also have well-identified flaws. For instance, they naively treat all parameters as equally important. Models quantized with bitsandbytes nf4 are also slow at inference.
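Why is treating all weights equally a problem? A rounding error on a weight gets multiplied by the activation flowing through it, so the same error costs far more on a channel with large activations. The toy dot product below uses hypothetical values and a hypothetical helper name; it shows a 100x difference in output error for the same weight error. This activation-dependent notion of weight importance is the observation AWQ builds on.

```python
def output_error(x, w, w_quantized):
    """Absolute error of the dot product x . w caused by replacing w with w_quantized."""
    exact = sum(xi * wi for xi, wi in zip(x, w))
    approx = sum(xi * qi for xi, qi in zip(x, w_quantized))
    return abs(exact - approx)


x = [10.0, 0.1]  # hypothetical input: channel 0 has a much larger activation
w = [0.30, 0.30]

# The same rounding error (+0.05) applied to one weight or the other:
err_on_salient = output_error(x, w, [0.35, 0.30])  # about 0.5
err_on_other = output_error(x, w, [0.30, 0.35])    # about 0.005
print(err_on_salient, err_on_other)
```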

Activation-aware Weight Quantization (AWQ) proposes solutions to these issues. AWQ protects the most important weights and exploits a reorder-free online dequantization to speed up inference.
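The weight-protection idea can be sketched as follows. Multiplying input channel j of a weight matrix by a factor s_j, and dividing the matching activation by s_j, leaves the full-precision output unchanged. However, scaling up channels with large activations reduces their relative rounding error once the weights are quantized. The AWQ paper chooses s = s_x^alpha, where s_x is the average activation magnitude per input channel, and grid-searches alpha in [0, 1] to minimize the output error on calibration data. The pure-Python sketch below follows that recipe under simplifying assumptions: symmetric 4-bit round-to-nearest with one scale per output row (rather than per group), a tiny hypothetical layer and calibration set, and hypothetical function names. It is not the AutoAWQ implementation.

```python
def fake_quantize_row(row, n_bits=4):
    """Symmetric round-to-nearest: quantize a row to n_bits integers, then dequantize."""
    q_max = 2 ** (n_bits - 1) - 1
    scale = max(abs(v) for v in row) / q_max or 1.0
    return [max(-q_max - 1, min(q_max, round(v / scale))) * scale for v in row]


def matvec(W, x):
    """Multiply matrix W (list of rows) by vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def scaled_quant_error(W, X, s, n_bits=4):
    """Mean squared error of Q(W * s) @ (x / s) versus W @ x over calibration inputs X."""
    Wq = [fake_quantize_row([w * sj for w, sj in zip(row, s)], n_bits) for row in W]
    total, count = 0.0, 0
    for x in X:
        ref = matvec(W, x)
        out = matvec(Wq, [xj / sj for xj, sj in zip(x, s)])
        total += sum((r - o) ** 2 for r, o in zip(ref, out))
        count += len(ref)
    return total / count


def search_scales(W, X, n_bits=4, grid=20):
    """Grid-search alpha in [0, 1] for per-channel scales s_j = mean|x_j| ** alpha."""
    n_in = len(W[0])
    act_mag = [max(sum(abs(x[j]) for x in X) / len(X), 1e-8) for j in range(n_in)]
    best_err, best_alpha, best_s = float("inf"), 0.0, [1.0] * n_in
    for i in range(grid + 1):
        alpha = i / grid
        s = [m ** alpha for m in act_mag]
        err = scaled_quant_error(W, X, s, n_bits)
        if err < best_err:
            best_err, best_alpha, best_s = err, alpha, s
    return best_err, best_alpha, best_s


# Hypothetical layer (2 outputs, 4 inputs) and calibration activations;
# input channel 0 carries much larger activations than the others.
W = [[0.9, -0.2, 0.05, 0.4],
     [-0.3, 0.8, -0.6, 0.1]]
X = [[8.0, 0.5, -0.3, 0.2],
     [-7.5, 0.4, 0.6, -0.1],
     [9.1, -0.6, 0.2, 0.3]]

rtn_err = scaled_quant_error(W, X, [1.0] * 4)  # s = 1 everywhere: plain RTN
awq_err, alpha, scales = search_scales(W, X)
print(f"RTN MSE: {rtn_err:.6f}  scaled MSE: {awq_err:.6f}  alpha: {alpha}")
```

Because alpha = 0 gives s_j = 1 for every channel, the search always includes plain RTN, so the selected scales can never do worse than RTN on the calibration data used for the search.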

In this article, I explain the main features of AWQ. We will see how to quantize LLMs (Llama 3) with AutoAWQ. I also benchmark the inference speed, VRAM consumption, and perplexity of AWQ models.
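Perplexity, one of the metrics benchmarked here, is the exponential of the average negative log-likelihood that the model assigns to the reference tokens; lower is better. Comparing it between the original and the quantized model is a common way to measure how much quantization degraded the model. The minimal sketch below takes per-token natural-log probabilities as a hypothetical input; obtaining them requires running the model, which is omitted.

```python
import math


def perplexity(token_log_probs):
    """Perplexity = exp(mean negative log-likelihood per token).

    token_log_probs: natural-log probabilities the model assigned to each
    reference token (hypothetical input; in practice computed from the logits).
    """
    nll = -sum(token_log_probs) / len(token_log_probs)
    return math.exp(nll)


# A model that always assigns probability 0.25 to the correct token
# is as uncertain as a uniform choice among 4 tokens: perplexity of about 4.
print(perplexity([math.log(0.25)] * 3))
```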

I implemented AWQ quantization for Llama 3 in this notebook:

The original version of this article was published along with this notebook showing how to quantize Llama 2:

Last update: April 24th, 2024